4.4 Topology selection

As described in Chapter 2, "Overview of WebSphere Application Server V5", WebSphere Application Server supports the multi-tier application model. The topology of the integrated system is the layout of its main components, such as Web servers, WebSphere Application Servers, and DB2 UDB servers, across one or more machines in one or more geographically distributed locations.

4.4.1 Selection criteria

A variety of factors come into play when choosing an appropriate topology. Selection typically involves reviewing your requirements in the following areas:

- Security
- Performance
- Throughput
- Scalability
- Availability
- Maintainability
- Session management

4.4.2 Performance and scalability

Performance involves minimizing the response time for a given transaction load. Although a number of factors relating to application design can affect performance, one or both of the following techniques are commonly used to improve the performance:

- Vertical scaling

  Involves creating additional application server processes on a single physical machine, providing for software/application server fail-over as well as load balancing across multiple JVMs (application server processes). Vertical scaling allows an administrator to profile an existing application server for bottlenecks in performance, and potentially use additional application servers, on the same machine, to get around these performance issues.

- Horizontal scaling

  Involves creating additional application server processes on multiple physical machines to take advantage of the additional processing power available on each machine. This provides hardware fail-over support and allows an administrator to spread the cost of an implementation across multiple physical machines.

4.4.3 Single machine topology

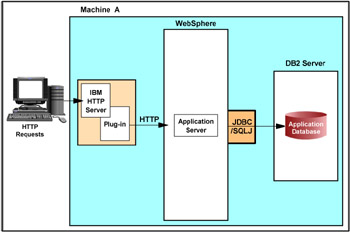

The starting scenario is the configuration where all components reside on the same machine, as shown in Figure 4-4. The Web server routes requests, as appropriate, to the WebSphere Application Server on the same machine for processing.

Figure 4-4: Single machine topology

Some reasons to use a single machine topology are:

- Maintainability: Easy to install and maintain.

  This configuration is most suitable as a startup configuration in order to evaluate and test the basic functionality of WebSphere and related components. The installation is automated by tools supplied with the WebSphere distribution. This configuration is also the easiest to administer.

- Performance, security, and availability are not critical goals.

  This may be the case for development, testing, and some intranet environments. We are limited to the resources of a single machine, which are shared by all components.

- Low cost.

Consider the following when you use a single machine topology:

- Performance: Components' interdependence and competition for resources

  All components compete for the shared resources (CPU, memory, network, and so on). Since components influence each other, bottlenecks or ill-behaved components can be difficult to identify.

- Security: No isolation

  There is no explicit layer of isolation between the components.

- Availability: Single point of failure

  The machine is a single point of failure: if it fails, all components become unavailable.

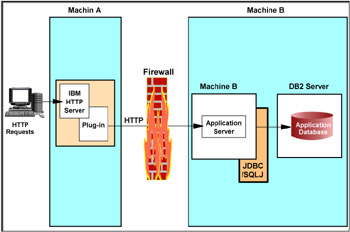

4.4.4 Separating the HTTP server

Compared with a configuration in which the application server and the HTTP server are collocated on a single physical server, separating the two can provide varying degrees of improvement in:

- Performance
- Process isolation
- Security

This configuration is illustrated in Figure 4-5.

Figure 4-5: Separating HTTP server

The WebSphere V5.0 HTTP plug-in allows the HTTP server to be physically separated from the application server. It uses an XML configuration file (plugin-cfg.xml) containing settings that describe how to handle and pass on requests to the WebSphere Application Server(s).
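To make the role of plugin-cfg.xml more concrete, the following Python sketch parses a simplified fragment written in the style of such a file and lists the application server endpoints to which requests would be forwarded. The fragment is illustrative only: the element and attribute names follow the general shape of a generated plug-in configuration, but the cluster name, host names, ports, and URI pattern are hypothetical, and a real generated file contains many more settings.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical fragment in the style of plugin-cfg.xml.
# Host names, ports, cluster name, and URI pattern are made up for illustration.
PLUGIN_CFG = """
<Config>
  <ServerCluster Name="SampleCluster">
    <Server Name="server1">
      <Transport Hostname="apphost1.example.com" Port="9080" Protocol="http"/>
    </Server>
    <Server Name="server2">
      <Transport Hostname="apphost2.example.com" Port="9080" Protocol="http"/>
    </Server>
  </ServerCluster>
  <UriGroup Name="SampleURIs">
    <Uri Name="/sample/*"/>
  </UriGroup>
  <Route ServerCluster="SampleCluster" UriGroup="SampleURIs"/>
</Config>
"""


def list_endpoints(xml_text):
    """Return (cluster, server, url) tuples for every transport in the file."""
    root = ET.fromstring(xml_text)
    endpoints = []
    for cluster in root.findall("ServerCluster"):
        for server in cluster.findall("Server"):
            for transport in server.findall("Transport"):
                url = "{}://{}:{}".format(
                    transport.get("Protocol"),
                    transport.get("Hostname"),
                    transport.get("Port"),
                )
                endpoints.append((cluster.get("Name"), server.get("Name"), url))
    return endpoints


if __name__ == "__main__":
    for cluster, server, url in list_endpoints(PLUGIN_CFG):
        print(cluster, server, url)
```

Because the HTTP server only needs this file and network access to the listed endpoints, it can run on a different machine from the application servers.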

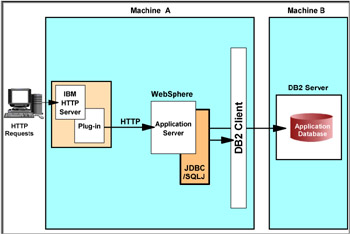

4.4.5 Separating the DB2 UDB server

WebSphere Application Server accesses DB2 UDB Server through DB2 JDBC drivers. As long as the configuration is correct, WebSphere will be able to communicate with a DB2 UDB server located anywhere accessible through TCP/IP. We discuss later on how to configure WebSphere to communicate with a remote DB2 UDB server.

In the simple single machine configuration described in 4.4.3, "Single machine topology", the application database and WebSphere Application Server reside on the same machine. However, installing the DB2 UDB server on a different machine, which creates a two-tier configuration (as illustrated in Figure 4-6), is good practice and has several advantages.

Figure 4-6: Separating the DB2 server

Some reasons to separate the database server are:

- Performance: Less competition for resources

  If both the DB2 UDB server and WebSphere Application Server are placed on the same machine, two programs compete for increasingly scarce resources (CPU and memory). In general, we can therefore expect significantly better performance by separating WebSphere Application Server from the DB2 UDB server.

- Performance: Differentiated tuning

  By separating the servers, we can independently tune the machines that host the database server and the application server to achieve optimal performance for each. The database server is typically sized and tuned for database performance, which may differ from the optimal configuration for the application server.

  On many UNIX servers, installing the database involves modification of the OS kernel. This database-specific tuning is often detrimental to the performance of application servers located on the same machine.

- Availability: Use of already established highly available database servers

  Many organizations have invested in high-availability solutions for their database servers, reducing the possibility of the server being a single point of failure in a system.

- Maintainability: Independent installation/re-configuration

  Components can be re-configured, or even replaced, without affecting the installation of the other component.

Consider the following when using a remote database server:

- Network access may limit performance

  Depending upon the network hardware and remoteness of the database server, the network response time for communication between WebSphere Application Server and the database server may limit the performance of WebSphere. When the two are collocated on the same machine, network response time is not an issue.

- Architectural complexity

  Hosting the database server on a separate machine introduces yet another box that must be administered, maintained, and backed up.

- Maintainability: Complexity of configuration

  Remote database access requires more complex configuration, setting up clients, and so on.

- Cost

  The cost of a separate machine for the database server may not be justified for the environment in which the WebSphere Application Server will be installed.
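The effect of network response time can be estimated with simple arithmetic. The following Python sketch assumes that the database calls made while serving one request are issued one after another and that each call costs its query time plus one network round trip. The number of calls, the query time, and the round-trip times are hypothetical values chosen only to illustrate the calculation.

```python
def request_db_time_ms(calls_per_request, query_ms, round_trip_ms):
    """Estimate the database time for one request, in milliseconds.

    Assumes sequential calls, each costing its query time plus one
    network round trip.
    """
    return calls_per_request * (query_ms + round_trip_ms)


# Hypothetical workload: 20 database calls per request, 2 ms per query.
CALLS = 20
QUERY_MS = 2.0

local = request_db_time_ms(CALLS, QUERY_MS, round_trip_ms=0.0)
same_lan = request_db_time_ms(CALLS, QUERY_MS, round_trip_ms=0.5)
remote_site = request_db_time_ms(CALLS, QUERY_MS, round_trip_ms=30.0)

print("collocated:  %.1f ms" % local)        # 40.0 ms
print("same LAN:    %.1f ms" % same_lan)     # 50.0 ms
print("remote site: %.1f ms" % remote_site)  # 640.0 ms
```

Under these assumptions, a database server on the same LAN adds little to the response time, while a database server at a distant site dominates it. The number of round trips per request therefore matters as much as the raw speed of the network.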

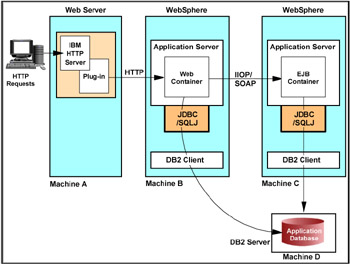

4.4.6 Separating the Web container and the EJB container

Depending on how your application is architected, it can be advantageous to physically separate the application server where your servlets run from your EJB application servers. An example topology for this configuration is illustrated in Figure 4-7.

Figure 4-7: Separated Web container and EJB container

Some reasons to separate the Web container and the EJB container are:

- Performance: Less competition for resources

  Performance gains can occur for applications consisting of business objects that are accessed mainly through EJBs. Separating Web containers from EJB containers prevents the servlet load from affecting EJB-based applications. Servlets that have little or no interaction with EJBs can also be deployed on a relatively smaller server.

- Security

  When running in an environment where two firewalls are employed, you can provide the same level of security for entity EJBs as is provided for application data.

4.4.7 Vertical scaling

Vertical scaling provides a straightforward mechanism for creating multiple instances of an application server, and hence multiple JVM processes. In the simplest case, one can create many application servers on a single machine, and this single machine also runs the HTTP server process. This configuration is illustrated in Figure 4-8.

Figure 4-8: Vertical scaling

Some reasons to use vertical scaling are:

- Performance: Better use of the CPU.

  An instance of an application server runs in a single Java Virtual Machine (JVM) process. However, the inherent concurrency limitations of a JVM process may prevent it from fully utilizing the processing power of a machine. Creating additional JVM processes provides multiple thread pools, each corresponding to the JVM associated with each application server process. This can enable the application server to make the best possible use of the processing power and increase throughput of the host machine.

- Availability: Fail-over support in a cluster.

  A vertical scaling topology also provides process isolation and fail-over support within an application server cluster. If one application server instance goes offline, the requests can be redirected to other instances on the machine to process.

- Throughput: WebSphere workload management.

  Vertical scaling topologies can make use of the WebSphere Application Server workload management facility. The HTTP server plug-in distributes requests to the Web containers and the ORB distributes requests to EJB containers.

- Maintainability: Easy-to-administer member application servers.

  With the concept of cells and clusters in WebSphere Application Server V5.0, it is easy to administer multiple application servers from a single point.

- Maintainability: Vertical scalability can easily be combined with other topologies.

  We can implement vertical scaling on more than one machine in the configuration. (IBM WebSphere Application Server Network Deployment V5.0 must be installed on each machine.) We can combine vertical scaling with the other topologies described in this chapter to boost performance and throughput. This assumes, of course, that sufficient CPU and memory are available on the machine.

- Cost: Does not require additional machines.

Consider the following when using vertical scaling:

- Availability: Machine still a single point of failure.

  Single machine vertical scaling topologies have the drawback of introducing the host machine as a single point of failure in the system. However, this can be avoided by using horizontal scaling on multiple machines.

- Performance: Scalability limited to a single machine.

  Scalability is limited to the resources available on a single machine.

4.4.8 Horizontal scaling with clusters

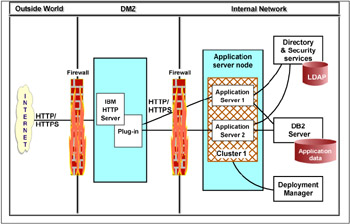

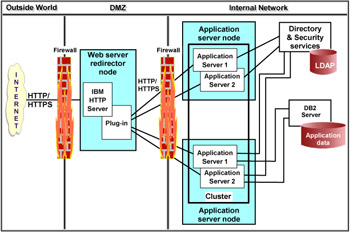

Horizontal scaling exists when the members of an application server cluster are located across multiple physical machines. This lets a single WebSphere application span several machines, yet still present a single logical image. This configuration is illustrated in Figure 4-9.

Figure 4-9: Horizontal scaling with clusters

The WebSphere HTTP server plug-in distributes requests to cluster member application servers on the application server nodes.
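The distribution of requests across cluster members, with fail-over when a member becomes unavailable, can be illustrated with a small Python sketch. It is a simplified model and not the plug-in's actual algorithm: the `ClusterRouter` class, the member names, and the plain round-robin policy are assumptions made for illustration.

```python
class ClusterRouter:
    """Round-robin router that skips cluster members marked as down."""

    def __init__(self, members):
        self.members = list(members)
        self.down = set()
        self._next = 0

    def mark_down(self, member):
        self.down.add(member)

    def mark_up(self, member):
        self.down.discard(member)

    def route(self):
        """Return the next available member, or raise if none is available."""
        for _ in range(len(self.members)):
            member = self.members[self._next]
            self._next = (self._next + 1) % len(self.members)
            if member not in self.down:
                return member
        raise RuntimeError("no cluster member available")


# Hypothetical cluster: two machines, each running one cluster member.
router = ClusterRouter(["nodeA/server1", "nodeB/server2"])
first_four = [router.route() for _ in range(4)]

# Simulate a hardware failure on nodeA: all traffic now goes to nodeB.
router.mark_down("nodeA/server1")
after_failure = [router.route() for _ in range(3)]

print(first_four)
print(after_failure)
```

With the members on different machines, losing one machine reduces capacity but does not stop the application, which is the hardware fail-over benefit of this topology.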

Advantages

Horizontal scaling using clusters has the following advantages:

- Availability

  Provides the increased throughput of vertical scaling topologies and adds hardware fail-over support. This topology allows handling of application server process failures and hardware failures without significant interruption to client service.

- Throughput

  Optimizes the distribution of client requests through mechanisms such as workload management or remote HTTP transport.

Disadvantages

Horizontal scaling using clusters has the following disadvantages:

- Maintainability: With the concept of cells and clusters in IBM WebSphere Application Server Network Deployment V5.0, it is easy to administer multiple application servers from a single point. However, there is more installation and maintenance associated with the additional machines.

- Cost: More machines.

4.4.9 Session persistence considerations

If the application maintains state between HTTP requests and we are using vertical or horizontal scaling, then we must consider using an appropriate strategy for session management.

Each application server runs in its own JVM process. To allow a fail-over from one application server to another without logging out users, we need to share the session data between multiple processes. There are two different ways of doing this in IBM WebSphere Application Server Network Deployment V5.

Memory-to-memory session replication

Provides replication of session data between the process memory of different application server JVMs. A Java Message Service (JMS) based publish/subscribe mechanism, called Data Replication Service (DRS), is used to provide assured session replication between the JVM processes. DRS is included with IBM WebSphere Application Server Network Deployment V5 and is automatically started when the JVM of a clustered (and properly configured) application server starts.

Database persistence

Session data is stored in a database shared by all application servers.

Memory-to-memory replication has the following advantages and disadvantages compared to database persistence:

- Memory-to-memory replication is faster by virtue of the high performance messaging implementation used in WebSphere V5.

- No separate database product is required. But normally you will use a database product anyway for your application, so this might not be an inhibitor.

- If you have a memory constraint, using database session persistence rather than memory-to-memory replication might be the better solution. There are also several options to optimize memory-to-memory replication.
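The difference between the two approaches can be sketched in a few lines of Python. This is a conceptual model only and does not use DRS or any WebSphere API: the class names, the session ID, and the session contents are hypothetical. In the first model every server keeps sessions in its own memory and pushes each update to its peers; in the second, every server reads and writes a single shared store that stands in for the session database.

```python
class ReplicatingSessionServer:
    """Keeps sessions in its own memory and copies every update to its peers."""

    def __init__(self, name):
        self.name = name
        self.sessions = {}
        self.peers = []

    def put(self, session_id, data):
        self.sessions[session_id] = dict(data)
        for peer in self.peers:
            peer.sessions[session_id] = dict(data)

    def get(self, session_id):
        return self.sessions.get(session_id)


class SharedStore:
    """Stands in for a session database shared by all application servers."""

    def __init__(self):
        self.rows = {}


class DatabaseSessionServer:
    """Holds no session data itself; reads and writes the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def put(self, session_id, data):
        self.store.rows[session_id] = dict(data)

    def get(self, session_id):
        return self.store.rows.get(session_id)


# Memory-to-memory: server2 already holds a copy when server1 fails.
m1 = ReplicatingSessionServer("server1")
m2 = ReplicatingSessionServer("server2")
m1.peers, m2.peers = [m2], [m1]
m1.put("abc123", {"user": "alice", "cart": 3})
failover_memory = m2.get("abc123")

# Database persistence: server2 reads the session from the shared store.
store = SharedStore()
d1 = DatabaseSessionServer("server1", store)
d2 = DatabaseSessionServer("server2", store)
d1.put("abc123", {"user": "alice", "cart": 3})
failover_database = d2.get("abc123")

# Replication costs one in-memory copy of the session per JVM.
copies_in_memory = len(m1.sessions) + len(m2.sessions)
```

In this model both approaches let the second server continue the user's session after a fail-over. The replicated variant avoids a database access on each read but holds a copy of the session in every JVM, which is why a memory constraint can favor database persistence.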

Persistent sessions should be enabled in some scenarios. Refer to Chapter 8, "DB2 UDB V8 and WAS V5 integrated performance", for more details.

4.4.10 Topology selection summary

These considerations for topology selection are not mutually exclusive. They can be combined in different ways. Table 4-3 provides a summary of the topology selection considerations.

| Topology | Security | Performance | Throughput | Maintainability | Availability | Session |
|---|---|---|---|---|---|---|
| Single machine | Little isolation between components. | Competition for machine resources. | Limited to machine resources. | Ease of installation and maintenance. | Machine is single point of failure. | |
| Remote HTTP server | Allows for firewall/DMZ. | Separation of loads. Performance usually better than local HTTP server. | Independent tuning. | Independent configuration and component replacement. More administrative overhead. Need copy of plugin-cfg.xml file. | Introduces single point of failure. | |
| Separate DB2 UDB server | Firewall can provide isolation. | Separation of loads. Performance usually better than local DB server. | Independent tuning. Must consider network bottleneck. | Use already established DBA procedures. Independent configuration. More administrative overhead. | Introduces single point of failure. Use already established HA servers. | |
| Separate Web/EJB container | More options for firewall. | Typically slower than single JVM. | Clustering can improve throughput. | More administrative overhead. | Introduces single point of failure. | |
| Vertical scaling | | Improved throughput on large SMP servers. | Limited to resources on a single machine. | Easiest to maintain. | Process isolation. Process redundancy. | May use session affinity. Memory-to-memory session replication. Persistent session database for session fail-over. |
| Horizontal scaling | | Distribution of load. | Distribution of connections. | More to install/maintain. Code migrations to multiple nodes. | Process and hardware redundancy. | May use session affinity. Memory-to-memory session replication. Persistent session database for session fail-over. |
| Add HTTP server | | Distribution of load. | Distribution of connections. | More to install/maintain. | Best in general. | Use load balancers. SSL session ID affinity when using SSL. |
| One domain | | | | Ease of maintenance. | | |
| Multiple domains | | Fewer lookups and less interprocess communication. | | Harder to maintain than single domain. | Process, hardware, and software redundancy. | |
