Touchpoint Process: Abuse Case Development

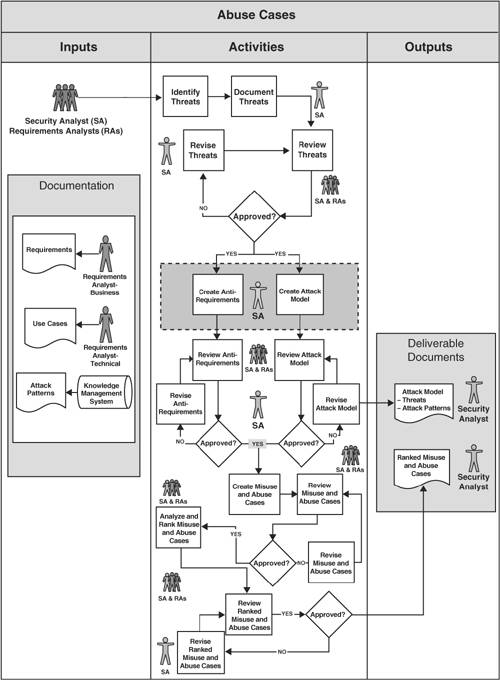

| Unfortunately, abuse cases are only rarely used in practice even though the idea seems natural enough. Perhaps a simple process model will help clarify how to build abuse cases and thereby fix the adoption problem. Figure 8-1 shows a simple process model.

Figure 8-1. A simple process diagram for building abuse cases.

Abuse cases are to be built by a team of requirements people and security analysts (called RAs and SAs in the picture). This team starts with a set of requirements, a set of standard use cases (or user stories), and a list of attack patterns.[3] This raw material is combined by the process I describe to create abuse cases.

The first step involves identifying and documenting threats. Note that I am using the term threat in the old-school sense. A threat is an actor or agent who carries out an attack. Vulnerabilities and risks are not threats.[4] Understanding who might attack you is really critical. Are you likely to come under attack from organized crime like the Russian mafia? Or are you more likely to be taken down by a university professor and the requisite set of overly smart graduate students all bent on telling the truth? Thinking like your enemy is an important exercise. Knowing who your enemy is likely to be is an obvious prerequisite.

Given an understanding of who might attack you, you're ready to get down to the business of creating abuse cases. In the gray box in the center of Figure 8-1, the two critical activities of abuse case development are shown: creating anti-requirements and creating an attack model.

Creating Anti-Requirements

When developing a software system or a set of software requirements, thinking explicitly about the things that you don't want your software to do is just as important as documenting the things that you do want. Naturally, the things that you don't want your system to do are very closely related to the requirements. I call them anti-requirements.

Anti-requirements are generated by security analysts, in conjunction with requirements analysts (business and technical), through a process of analyzing requirements and use cases with reference to the list of threats in order to identify and document attacks that will cause requirements to fail. The object is explicitly to undermine requirements.

Anti-requirements provide insight into how a malicious user, attacker, thrill seeker, competitor (in other words, a threat) can abuse your system. Just as security requirements result in functionality that is built into a system to establish accepted behavior, anti-requirements are established to determine what happens when this functionality goes away. When created early in the software development lifecycle and revisited throughout, these anti-requirements provide valuable input to developers and testers.

Because security requirements are usually about security functions and/or security features, anti-requirements are often tied up in the lack of or failure of a security function. For example, if your system has a security requirement calling for use of crypto to protect essential movie data written on disk during serialization, an anti-requirement related to this requirement involves determining what happens in the absence of that crypto.

Just to flesh things out, assume in this case that the threat in question is a group of academics. Academic security analysts are unusually well positioned to crack crypto relative to thrill-seeking script kiddies. Grad students have a toolset, lots of background knowledge, and way too much time on their hands. If the crypto system fails in this case (or better yet, is made to fail), giving the attacker access to serialized information on disk, what kind of impact will that have on the system's security? How can we test for this condition? Abuse cases based on anti-requirements lead to stories about what happens in the case of failure, especially security apparatus failure.
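One way to picture the pairing of requirements with threats is as a small data model. The following is a minimal sketch, not a prescribed method: the class names (`Requirement`, `Threat`, `AntiRequirement`), the helper `derive_anti_requirements`, and the sample identifiers are all hypothetical, and the output is only a list of candidate questions for the analysts to work through.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Requirement:
    ident: str      # hypothetical identifier, e.g. "SR-1"
    control: str    # the security function the requirement calls for
    protects: str   # the asset that the control protects


@dataclass(frozen=True)
class Threat:
    name: str        # the actor or agent who carries out an attack
    capability: str  # what makes this actor dangerous


@dataclass(frozen=True)
class AntiRequirement:
    requirement: Requirement
    threat: Threat

    def question(self) -> str:
        # Phrase the anti-requirement as the question the team must answer.
        return (
            f"If {self.threat.name} causes {self.requirement.control} to fail, "
            f"what happens to {self.requirement.protects}?"
        )


def derive_anti_requirements(requirements, threats):
    """Pair every requirement with every threat as a candidate for review."""
    return [AntiRequirement(r, t) for r, t in product(requirements, threats)]


# Illustrative data based on the crypto example in the text.
reqs = [Requirement("SR-1", "disk encryption", "serialized movie data")]
threats = [
    Threat("a group of academics", "cryptanalysis and plenty of time"),
    Threat("a script kiddie", "off-the-shelf tools"),
]

candidates = derive_anti_requirements(reqs, threats)
for candidate in candidates:
    print(candidate.question())
```

Enumerating every pair is deliberately naive. The judgment about which pairs matter, and what the resulting failure story looks like, still belongs to the requirements and security analysts.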

Creating an Attack Model

An attack model comes about by explicit consideration of known attacks or attack types. Given a set of requirements and a list of threats, the idea here is to cycle through a list of known attacks one at a time and to think about whether the "same" attack applies to your system. Note that this kind of process lies at the heart of Microsoft's STRIDE model [Howard and LeBlanc 2003]. Attack patterns are extremely useful for this activity. An incomplete list of attack patterns can be seen in the box Attack Patterns from Exploiting Software [Hoglund and McGraw 2004] on pages 218 through 221. To create an attack model, do the following:

Together, the resulting attack model and anti-requirements drive out abuse cases that describe how your system reacts to an attack and which attacks are likely to happen. Abuse cases and stories of possible attacks are very powerful drivers for both architectural risk analysis and security testing.

The simple process shown in Figure 8-1 results in a number of useful artifacts. The simple activities are designed to create a list of threats and their goals (which I might call a "proper threat model"), a list of relevant attack patterns, and a unified attack model. These are all side effects of the anti-requirements and attack model activities. More important, the process creates a set of ranked abuse cases: stories of what your system does under those attacks most likely to be experienced. As you can see, this is a process that requires extensive use of your black hat. The more experience and knowledge you have about actual software exploit and real computer security attacks, the more effective you will be at building abuse cases (see Chapter 9). |
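The idea of cycling through known attack patterns and ending up with ranked abuse cases can be sketched in a few lines. This is only an illustration under stated assumptions: the `AbuseCase` fields, the 1-to-5 `likelihood` scale, the `build_attack_model` helper, and the pattern names and stories are hypothetical placeholders, not taken from any published pattern list. The relevance decision is supplied by the analysts, here as a simple lookup table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AbuseCase:
    pattern: str     # the attack pattern this case is built around
    threat: str      # who is expected to carry out the attack
    story: str       # what the system does under this attack
    likelihood: int  # analyst-assigned, 1 (unlikely) to 5 (expected); an assumption


def build_attack_model(patterns, assess):
    """Cycle through patterns one at a time and rank the ones that apply.

    `assess` stands in for analyst judgment: it returns an AbuseCase when
    the pattern applies to the system, or None when it does not.
    """
    cases = []
    for pattern in patterns:
        case = assess(pattern)
        if case is not None:
            cases.append(case)
    # Most likely attacks first.
    return sorted(cases, key=lambda c: c.likelihood, reverse=True)


# Hypothetical analyst judgments, keyed by pattern name.
judgments = {
    "session hijacking": AbuseCase(
        "session hijacking",
        "thrill seeker",
        "A stolen session token lets the attacker act as a logged-in user.",
        2,
    ),
    "argument injection": AbuseCase(
        "argument injection",
        "organized crime",
        "Crafted arguments to the export tool expose other users' files.",
        4,
    ),
}

patterns = ["session hijacking", "buffer overflow", "argument injection"]
ranked = build_attack_model(patterns, judgments.get)

for case in ranked:
    print(f"{case.likelihood}: {case.pattern} ({case.threat})")
```

In this sketch "buffer overflow" drops out because no judgment was recorded for it, and the remaining cases are ordered by the analyst-assigned score. A real ranking would rest on the team's knowledge of the threats and of actual attacks rather than on a single number.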
