Client-Side DataPortal

| Tip | We discussed these technologies in Chapter 3, including the meanings of the namespaces. |

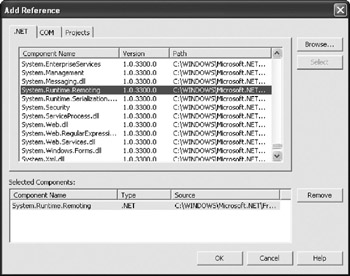

Notice that in this implementation, we're using the HTTP channel for remoting. This is because we'll be using IIS to host the server-side DataPortal classes. Even though we'll use the HTTP channel, however, we'll still implement code to make sure that we're using the more efficient binary formatter to encode the data.

| Tip | If you choose to implement a custom remoting host, you could opt instead to use the faster TCP channel. This would preclude the use of Windows' integrated security, but it would work fine with our custom, table-driven security implementation. Typically, such a host would be created as a Windows service. We won't be implementing such a custom host in this book, since the IIS approach is much easier to implement, configure, and manage. |

The client-side DataPortal will expose four methods, described in Table 5-2, that can be called by our business logic.

| Method | Description |
|---|---|
| Create() | Calls the server-side DataPortal, which invokes our DataPortal_Create() method |
| Fetch() | Calls the server-side DataPortal, which invokes our DataPortal_Fetch() method |
| Update() | Calls the server-side DataPortal, which invokes our DataPortal_Update() method |
| Delete() | Calls the server-side DataPortal, which invokes our DataPortal_Delete() method |

Behind the scenes in each of these methods, we need to implement the functionality to create the server-side DataPortal, to deal with the [Transactional()] attribute, and so forth.

Helper Methods

Before we get into the implementation of the four primary methods in Table 5-2, let's implement some helper methods that will deal with most of the details. We'll enclose these methods in a region:

```csharp
#region Helper methods

#endregion
```

IsTransactionalMethod

Within a business class, the business developer may choose to mark any of our four DataPortal_xyz() methods as transactional, using code like this:

```csharp
[Transactional()]
protected override void DataPortal_Update()
```

To detect this, we'll need to write a method to find out whether the [Transactional()] attribute has been applied to a specified method. This is very similar to the NotUndoableField() method that we implemented in UndoableBase in Chapter 4:

```csharp
static private bool IsTransactionalMethod(MethodInfo method)
{
  return Attribute.IsDefined(method, typeof(TransactionalAttribute));
}
```

Here, we're passed a MethodInfo object as a parameter, which provides access to the information describing a method. Using the Attribute.IsDefined() method, we can easily find out whether the attribute was applied to the method in question. If it was, we return true.

GetMethod

Before we can find out if one of the DataPortal_xyz() methods on the business object has the [Transactional()] attribute applied, we need to get the MethodInfo object that describes the method in question. This requires the use of reflection, so that we can query the business object's type for a method of a particular name:

```csharp
static private MethodInfo GetMethod(Type objectType, string method)
{
  // combine the flags with | so that instance methods are found
  // whether they are public or non-public
  return objectType.GetMethod(method,
    BindingFlags.FlattenHierarchy |
    BindingFlags.Instance |
    BindingFlags.Public |
    BindingFlags.NonPublic);
}
```

We provide qualifiers to the GetMethod() call to indicate that we don't care if the method is public or non-public. Either way, we'll get a MethodInfo object describing the method on the business object, which we can then use as a parameter to IsTransactionalMethod().
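To see the two helpers working together outside the framework, here is a minimal, self-contained sketch. SampleTransactionalAttribute, SampleOrder, and ReflectionHelperDemo are hypothetical names invented for this illustration; they stand in for the framework's TransactionalAttribute and a real business class, and aren't part of the framework itself.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for the framework's TransactionalAttribute.
[AttributeUsage(AttributeTargets.Method)]
public class SampleTransactionalAttribute : Attribute { }

// Hypothetical business class, used only for this illustration.
public class SampleOrder
{
  [SampleTransactional()]
  protected void DataPortal_Update() { }

  protected void DataPortal_Fetch(object criteria) { }
}

public static class ReflectionHelperDemo
{
  // Same lookup as GetMethod(): instance methods, public or non-public.
  public static MethodInfo GetMethod(Type objectType, string method)
  {
    return objectType.GetMethod(method,
      BindingFlags.FlattenHierarchy |
      BindingFlags.Instance |
      BindingFlags.Public |
      BindingFlags.NonPublic);
  }

  // Same test as IsTransactionalMethod(), against the sample attribute.
  public static bool IsTransactional(MethodInfo method)
  {
    return Attribute.IsDefined(method, typeof(SampleTransactionalAttribute));
  }
}
```

With these definitions, looking up "DataPortal_Update" on typeof(SampleOrder) and passing the result to IsTransactional() yields true, while the same check for "DataPortal_Fetch" yields false, even though both methods are protected.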

Creating the Server-Side DataPortal

Next, we need to write methods that create the simple and transactional server-side DataPortal objects locally or using remoting, as required.

Reading the Configuration File

To do this, we need to read the application's configuration file:

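```csharp
#region Server-side DataPortal

static private string PORTAL_SERVER
{
  get
  {
    // a missing entry comes back as null; return an empty string instead
    string val = ConfigurationSettings.AppSettings["PortalServer"];
    return (val == null) ? string.Empty : val;
  }
}

static private string SERVICED_PORTAL_SERVER
{
  get
  {
    // a missing entry comes back as null; return an empty string instead
    string val = ConfigurationSettings.AppSettings["ServicedPortalServer"];
    return (val == null) ? string.Empty : val;
  }
}

#endregion
```

As with our other configuration file methods, we convert any null values to string.Empty to simplify the code that uses the returned values.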

If we wanted the server-side DataPortal to run via remoting, we'd include lines in our application configuration file such as

<add key="PortalServer" value="http://appserver/DataPortal/DataPortal.rem" />
<add key="ServicedPortalServer" value="http://appserver/DataPortal/ServicedDataPortal.rem" />

The URLs will point to the DataPortal website that we'll create later in this chapter as a remoting host. If these lines aren't present in the application configuration file, the requests for the PortalServer and ServicedPortalServer entries will return empty string values. We can easily test these to see if local or remote configuration is desired.
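The local-versus-remote test can be sketched without a real configuration file. The following is an illustration only: PortalSettingsDemo and its two methods are hypothetical names, and a plain NameValueCollection stands in for the application settings, assuming that a missing key yields null from the collection's indexer in the same way.

```csharp
using System.Collections.Specialized;

public static class PortalSettingsDemo
{
  // Reads a setting, converting a missing (null) entry to an empty string.
  public static string ReadSetting(NameValueCollection settings, string key)
  {
    string value = settings[key];
    return (value == null) ? string.Empty : value;
  }

  // A non-empty URL means the corresponding server-side object is remote.
  public static bool IsRemote(NameValueCollection settings, string key)
  {
    return ReadSetting(settings, key).Length > 0;
  }
}
```

If only the PortalServer key is present in the collection, IsRemote() returns true for "PortalServer" and false for "ServicedPortalServer", so the two server-side objects can be configured independently.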

| Tip | If we do supply URLs for our server-side DataPortal objects, the .NET Remoting subsystem will automatically invoke the objects on the server we specify. This "server" could be a web farm, because we're using the HTTP protocol. The reality isn't quite as pretty, however, because remoting caches the IP connection to the server after it's established. This results in better performance, because subsequent calls via remoting can reuse that connection, but it means that subsequent remoting requests aren't load balanced, since they always go back to the same server. If that server can't be reached, remoting will automatically create a new connection, and if our servers are in a web farm, that could mean a different server. This means we do get good fault tolerance, but we don't really get ideal load balancing. |

Configuring Remoting

Now that we have methods to retrieve the URL values (if any) from the configuration file, we can configure remoting. The easiest way to do this is in a static constructor. The .NET runtime will automatically call this method before any other code in our client-side DataPortal class is executed, so we're guaranteed that remoting will be configured before anything else happens.

Since we're using the HTTP channel, which defaults to using the SOAP formatter, this is a bit tricky. We want to use the binary formatter instead, because it sends a lot less data across the network. We also need to register the Server.DataPortal and Server.ServicedDataPortal.DataPortal classes as being remote, if the application configuration file contains URLs for them.

Add the following code to the Helper Methods region:

```csharp
static DataPortal()
{
  // see if we need to configure remoting at all
  if(PORTAL_SERVER.Length > 0 || SERVICED_PORTAL_SERVER.Length > 0)
  {
    // create and register our custom HTTP channel
    // that uses the binary formatter
    Hashtable properties = new Hashtable();
    properties["name"] = "HttpBinary";

    BinaryClientFormatterSinkProvider formatter =
      new BinaryClientFormatterSinkProvider();

    HttpChannel channel = new HttpChannel(properties, formatter, null);

    ChannelServices.RegisterChannel(channel);

    // register the data portal types as being remote
    if(PORTAL_SERVER.Length > 0)
    {
      RemotingConfiguration.RegisterWellKnownClientType(
        typeof(Server.DataPortal), PORTAL_SERVER);
    }
    if(SERVICED_PORTAL_SERVER.Length > 0)
    {
      RemotingConfiguration.RegisterWellKnownClientType(
        typeof(Server.ServicedDataPortal.DataPortal),
        SERVICED_PORTAL_SERVER);
    }
  }
}
```

The first thing we do here is create an HTTP channel that uses the binary formatter, so we transfer less data across the network than if we used the default SOAP formatter. You've seen code like this before, back in Chapter 3. The outcome is that we create a custom HTTP channel object, and then register it with remoting. After that's done, remoting will use our custom channel for all HTTP remoting requests.

We then check to see if the application configuration file contains a URL for the nontransactional server-side DataPortal. If it does, we register the Server.DataPortal class as being remote, using that URL as the location. Then we do the same thing for ServicedDataPortal. If there's a URL entry in the configuration file, we configure the class to be run remotely.

By taking this approach, we can configure the transactional and nontransactional server-side DataPortal objects to run on different servers if needed, providing a lot of flexibility.

Creating the Server-Side DataPortal Objects

Now we're ready to create the server-side DataPortal objects themselves. Actually, we'll cache these objects, which improves performance, since the client needs to go through the creation process only once. Declare a couple of variables to hold the cached references:

```csharp
public class DataPortal
{
  static Server.DataPortal _portal;
  static Server.ServicedDataPortal.DataPortal _servicedPortal;
```

Now let's add a method to create the simple server-side DataPortal object. Add this to the Server-side DataPortal region:

```csharp
static private Server.DataPortal Portal
{
  get
  {
    if(_portal == null)
      _portal = new Server.DataPortal();
    return _portal;
  }
}
```

First, we see if we already have a server-side DataPortal object in the _portal variable. If not, we proceed to create the object.

As I've said before, we're going to configure the IIS remoting host such that the server-side DataPortal object is a SingleCall object. This means that a new Server.DataPortal object will be created for each method call across the network. Such an approach provides optimal isolation between method calls and is the simplest programming model we can use.

| Tip | This is also the approach that will likely give us optimal performance. Our other option is to use a Singleton configuration, which would mean that all calls from all clients would be handled by a single server-side DataPortal object. Since concurrent client requests would be running on different threads, we'd have to write extra code in our server-side DataPortal to support multithreading, which would mean the use of synchronization objects. Anytime we use synchronization objects, we tend to reduce performance as well as radically increase the complexity of our code for both development and debugging! |

We can create a similar method to return a reference to the transactional, serviced DataPortal :

```csharp
static private Server.ServicedDataPortal.DataPortal ServicedPortal
{
  get
  {
    if(_servicedPortal == null)
      _servicedPortal = new Server.ServicedDataPortal.DataPortal();
    return _servicedPortal;
  }
}
```

Again, we check whether a cached transactional DataPortal object already exists. If it doesn't, then we create an instance of the transactional DataPortal object:

```csharp
_servicedPortal = new Server.ServicedDataPortal.DataPortal();
```

At this point, we have easy access to both the transactional and nontransactional server-side DataPortal objects from within our code.

Handling Security

The last thing we need to do before implementing the four data access methods is to handle security. Remember that we're intending to support either Windows' integrated security or our custom table-driven security.

If we're using Windows' integrated security, we're assuming the environment to be set up so that the user's Windows identity is automatically passed to the server through IIS. This means that our server-side code will run within an impersonated Windows security context, and the server security will match the client security automatically, without any effort on our part.

If we're using our custom security, we need to do this impersonation ourselves by ensuring that the server has the same .NET security objects as the client. We'll implement the table-driven security objects later in this chapter when we create our own principal and identity objects.

Our custom principal object will be set as our thread's CurrentPrincipal on the client, making it the active security object for our client-side code. When we invoke the server-side DataPortal, we need to pass the CurrentPrincipal value to the server, so that it can make the server-side CurrentPrincipal match the one on the client.

As is our habit, we'll use an application configuration entry to indicate whether we're using Windows security; the default will be to use our custom table-driven security. To use Windows' integrated security, our application configuration file would have an entry like this:

<add key="Authentication" value="Windows" />

Though we'll default to CSLA table-based security, we could also explicitly indicate we're using that security model with an entry like this:

<add key="Authentication" value="CSLA" />

To read this entry, let's implement a helper property in the DataPortal class:

```csharp
#region Security

static private string AUTHENTICATION
{
  get
  {
    return ConfigurationSettings.AppSettings["Authentication"];
  }
}

#endregion
```

With this in place, we can implement another helper function to return the appropriate security object to pass to the server. If we're using Windows security, this will be null; if not, it will be the principal object from the current thread's CurrentPrincipal property. Add this to the same region:

```csharp
static private System.Security.Principal.IPrincipal GetPrincipal()
{
  if(AUTHENTICATION == "Windows")
    // Windows integrated security
    return null;
  else
    // we assume using the CSLA framework security
    return System.Threading.Thread.CurrentPrincipal;
}
```

We can use this GetPrincipal() method to retrieve the appropriate value to pass to the server-side DataPortal. If we're using CSLA .NET's table-based security, we'll be passing the user's identity objects to the server so that the server-side DataPortal and our business logic on the server can run within the same security context as we do on the client.
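The selection logic can be tried in isolation with a sketch like the following. PrincipalSelectionDemo and SelectPrincipal() are hypothetical names, and the authentication setting is passed in as a parameter rather than read from the configuration file; the standard GenericPrincipal class is assumed as a stand-in for the custom principal object we'll build later.

```csharp
using System.Security.Principal;
using System.Threading;

public static class PrincipalSelectionDemo
{
  // Mirrors GetPrincipal(): null for Windows integrated security,
  // otherwise the principal attached to the current thread.
  public static IPrincipal SelectPrincipal(string authentication)
  {
    if(authentication == "Windows")
      return null;
    else
      return Thread.CurrentPrincipal;
  }
}
```

If the thread's CurrentPrincipal has been set to a GenericPrincipal for a user named "rocky", then SelectPrincipal("CSLA") and SelectPrincipal(null) both return that principal, while SelectPrincipal("Windows") returns null. A missing Authentication entry therefore behaves like the table-driven default.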

If we're using Windows' integrated security, we need to make sure that the user's security credentials are properly passed from the client to the server by remoting. To do this, we need to add a bit of code to our static constructor method to properly configure the remoting channel:

```csharp
// create and register our custom HTTP channel
// that uses the binary formatter
Hashtable properties = new Hashtable();
properties["name"] = "HttpBinary";

if(AUTHENTICATION == "Windows")
{
  // make sure we pass the user's Windows credentials
  // to the server
  properties["useDefaultCredentials"] = true;
}

BinaryClientFormatterSinkProvider formatter =
  new BinaryClientFormatterSinkProvider();

HttpChannel channel = new HttpChannel(properties, formatter, null);
```

The useDefaultCredentials property tells the channel to automatically carry the user's Windows security credentials from the client to the server.

Data Access Methods

At last, we can implement the four primary methods in the client-side DataPortal. Since we've done all the hard work in our helper methods, implementing these will be pretty straightforward. All four have the same structure: determine whether the business object's method has the [Transactional()] attribute, and then invoke either the simple or the transactional server-side DataPortal object.

Create

Let's look at the Create() method first:

```csharp
#region Data Access methods

static public object Create(object criteria)
{
  if(IsTransactionalMethod(GetMethod(
    criteria.GetType().DeclaringType, "DataPortal_Create")))
    return ServicedPortal.Create(criteria, GetPrincipal());
  else
    return Portal.Create(criteria, GetPrincipal());
}

#endregion
```

The method accepts a Criteria object as a parameter. As we discussed in Chapter 2, all business classes will contain a nested Criteria class. To create, retrieve, or delete an object, we'll create an instance of this Criteria class, initialize it with criteria data, and pass it to the data portal mechanism. The server-side DataPortal code can then use the Criteria object to find out the class (data type) of the actual business object with the expression criteria.GetType().DeclaringType.

In the client-side DataPortal code, we're using the DeclaringType property to get a Type object for the business class and passing that Type object to the GetMethod() helper function that we created earlier. We're indicating that we want to get a MethodInfo object for the DataPortal_Create() method from the business class.

That MethodInfo object is then passed to IsTransactionalMethod(), which tells us whether the DataPortal_Create() method from our business class has the [Transactional()] attribute. All of this is consolidated into the following line of code:

```csharp
if(IsTransactionalMethod(GetMethod(
  criteria.GetType().DeclaringType, "DataPortal_Create")))
```

And based on this, we either invoke the transactional server-side DataPortal:

```csharp
return ServicedPortal.Create(criteria, GetPrincipal());
```

or we invoke the nontransactional server-side DataPortal:

```csharp
return Portal.Create(criteria, GetPrincipal());
```

In both cases, we're calling our helper functions to create (if necessary) either the serviced or the simple server-side DataPortal object. We then call the Create() method on the server-side object, passing it both the Criteria object and the result from our GetPrincipal() helper function.

The end result is that the Create() method of the appropriate (transactional or nontransactional) server-side DataPortal runs, either locally or remotely based on configuration settings, and can act on the Criteria object to create and load a business object with default values. The fully populated business object is returned from the server-side Create() method, and then we return it as a result of our function as well.
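The reliance on a nested Criteria class can be illustrated with a short sketch. SampleCustomer, its nested Criteria class, and DeclaringTypeDemo are hypothetical names used only here; the point is simply that DeclaringType on a nested type returns the enclosing class.

```csharp
using System;

// Hypothetical business class with the nested Criteria class
// that the data portal mechanism expects.
public class SampleCustomer
{
  [Serializable()]
  public class Criteria
  {
    public string Id;

    public Criteria(string id)
    {
      Id = id;
    }
  }
}

public static class DeclaringTypeDemo
{
  // Returns the enclosing business class for a nested criteria object,
  // or null if the criteria type is not nested in another class.
  public static Type BusinessTypeOf(object criteria)
  {
    return criteria.GetType().DeclaringType;
  }
}
```

Calling BusinessTypeOf(new SampleCustomer.Criteria("ABC")) returns typeof(SampleCustomer). Passing an object whose type is not nested, such as a string, returns null, so a criteria class declared outside its business class would not work with this lookup.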

Fetch

The Fetch() method is almost identical to Create(), since it also receives a Criteria object as a parameter. Add this to the same region:

```csharp
static public object Fetch(object criteria)
{
  if(IsTransactionalMethod(GetMethod(
    criteria.GetType().DeclaringType, "DataPortal_Fetch")))
    return ServicedPortal.Fetch(criteria, GetPrincipal());
  else
    return Portal.Fetch(criteria, GetPrincipal());
}
```

Once again, we go through the process of retrieving a MethodInfo object, this time for the DataPortal_Fetch() method of our business class. This is passed to IsTransactionalMethod() so that we know whether to invoke the transactional or the nontransactional server-side DataPortal.

In this case, we're calling the Fetch() method of the server-side DataPortal object, passing it the Criteria object and the result from GetPrincipal(). In response, the server-side DataPortal creates a business object and has it load its data based on the Criteria object. The resulting fully populated business object is returned from the server, and we return it as a result of our Fetch() method.

Update

The Update() method is similar again, but it doesn't get a Criteria object as a parameter. Instead, it gets passed the business object itself. This way, it can pass the business object to the server-side DataPortal, which can then call the object's DataPortal_Update() method, causing the object to save its data to the database. Here's the code:

```csharp
static public object Update(object obj)
{
  if(IsTransactionalMethod(GetMethod(obj.GetType(), "DataPortal_Update")))
    return ServicedPortal.Update(obj, GetPrincipal());
  else
    return Portal.Update(obj, GetPrincipal());
}
```

The basic structure of the implementation remains the same, but we're working with the business object rather than a Criteria object, so there are some minor changes. First, we get the MethodInfo object for the business object's DataPortal_Update() method, and check to see if it has the [Transactional()] attribute:

```csharp
if(IsTransactionalMethod(GetMethod(obj.GetType(), "DataPortal_Update")))
```

This is a bit easier than with a Criteria object: since we have the business object itself, we can just use its GetType() method to retrieve its Type object. We can then call the Update() method on either the transactional or the nontransactional server-side DataPortal, as appropriate. The business object and the result from our GetPrincipal() method are passed as parameters.

When the server-side DataPortal is done, it returns the updated business object back to us, and we then return it as a result. It's important to realize that if the server-side DataPortal is running in-process, the updated business object that we get as a result of calling Update() will be the same object we started with. If the server-side DataPortal is accessed via remoting, however, the result of the Update() call will be a new object that contains the updated data.

As a general practice, then, we should always write our code to assume that we get a new object as a result of calling Update() . If we do that, then our code will work regardless of whether we get a new object or the original, shielding us from any future configuration changes that cause the server-side DataPortal to switch from being in-process to being remote or vice versa.

| Note | Update() will be called by UI code via the business object's Save() method, so this implies that any UI code written to call Save() should always assume that it will get a new object as a result of doing so. |

By always returning the updated business object back to the client, we simplify the business developer's life quite a lot. It means that the business developer can make changes to the object's state during the update process without worrying about losing those changes. Essentially, we're ensuring that anywhere within the business class, the business developer can change the object's variables and those changes are consistently available, regardless of whether the changes occurred when the object was on the client or on the server.
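The "always use the returned object" rule can be sketched as follows. This is an illustration under stated assumptions: SampleProject and its Save(bool) method are hypothetical, and the simulateRemote flag merely mimics the difference between an in-process call (same instance returned) and a remoting call (a copy returned); it isn't how the framework's Save() is declared.

```csharp
// Hypothetical business object used only to illustrate the rule.
public class SampleProject
{
  public string Name;
  public bool IsNew = true;

  // Stand-in for Save(): when simulateRemote is true, the update is
  // applied to a copy, as happens when the object crosses the network.
  public SampleProject Save(bool simulateRemote)
  {
    SampleProject result =
      simulateRemote ? (SampleProject)MemberwiseClone() : this;
    result.IsNew = false;
    return result;
  }
}
```

UI code written as project = project.Save(...) sees IsNew as false in both modes. Code that ignores the return value and keeps using the original reference would still see IsNew as true in the simulated remote case, which is exactly the kind of bug that appears only after a configuration change.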

Delete

The final client-side DataPortal method is Delete(), which is virtually identical to Create() and Fetch(). It also receives a Criteria object as a parameter, which it uses to get a Type object for the business class, and so forth.

```csharp
static public void Delete(object criteria)
{
  if(IsTransactionalMethod(GetMethod(
    criteria.GetType().DeclaringType, "DataPortal_Delete")))
    ServicedPortal.Delete(criteria, GetPrincipal());
  else
    Portal.Delete(criteria, GetPrincipal());
}
```

The Delete() method exists to support the immediate deletion of an object, without having to retrieve the object first. Instead, it accepts a Criteria object that identifies which object's data should be deleted. Ultimately, the server-side DataPortal calls the business object's DataPortal_Delete() method to perform the delete operation.

Nothing is returned from this method, as it doesn't generate a business object. If the delete operation itself fails, it should throw an exception, which will be returned to the client as an indicator of failure.
