7.1 THE LIFECYCLE MODEL


The lifecycle is a generic model. This means that you may need to tailor it to your own specific environment by adding a further level of detail. You may also need to add some additional activities that are specific to the way in which your organization operates. The most likely area where this will be necessary is in the budget approval tasks. You may even be able to skip some of the specific tasks described later.

The model is described using a data flow diagram (DFD) style of notation, applied here to describe a process rather than to model a computer system's behavior. I have found this to be an extremely useful way of modeling processes. The notation is quite straightforward:

- Circles represent processes, tasks or activities. Essentially these show elements of work that need to be carried out by people.

- Directed lines show the inputs to or outputs from processes. These are objects, resources or deliverables consumed or produced by the processes.

- Parallel lines indicate stores of information. Conventionally these are files or database entities within a computer system but in our model they have a wider meaning. They can be electronic stores but they are also used to indicate paper files, any type of repository and even generic stores such as "literature."

- Rectangles retain the conventional DFD meaning of terminators. In this model they represent objects or entities with which the Software Metrics implementation process must interact.
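For readers who prefer code to pictures, the four notation elements can be mirrored in a few small Python types. This is only an illustrative sketch and not part of the lifecycle model itself: the class names (`Kind`, `Node`, `Flow`, `Diagram`) and the example process and flow label are assumptions made up for this illustration. Only the "literature" store comes from the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Kind(Enum):
    PROCESS = "circle"          # work carried out by people
    STORE = "parallel lines"    # any repository: electronic, paper, or generic
    TERMINATOR = "rectangle"    # external entity the process must interact with


@dataclass(frozen=True)
class Node:
    name: str
    kind: Kind


@dataclass(frozen=True)
class Flow:
    """A directed line: something produced by `source` and consumed by `target`."""
    source: Node
    target: Node
    label: str


@dataclass
class Diagram:
    flows: List[Flow] = field(default_factory=list)

    def connect(self, source: Node, target: Node, label: str) -> Flow:
        flow = Flow(source, target, label)
        self.flows.append(flow)
        return flow

    def inputs_of(self, node: Node) -> List[str]:
        return [f.label for f in self.flows if f.target == node]

    def outputs_of(self, node: Node) -> List[str]:
        return [f.label for f in self.flows if f.source == node]


# Hypothetical example: a process that draws on the generic "literature" store.
literature = Node("Literature", Kind.STORE)
review = Node("Review published work", Kind.PROCESS)  # assumed process name

diagram = Diagram()
diagram.connect(literature, review, "published experience")  # assumed flow label
```

Treating stores and terminators as nodes, and directed lines as labeled edges between them, keeps the sketch close to how the diagrams are read: each flow names what is consumed or produced.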

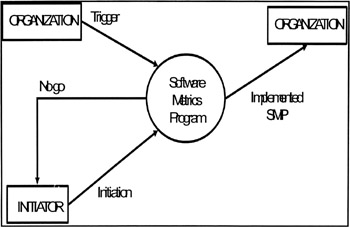

The model is layered, with greater detail being added at each layer. We start with the highest level of the model, the context diagram of Figure 7.1. Notice that I will use the term "Software Metrics Program" to describe the development and implementation of a measurement initiative.

Figure 7.1: Software Metrics Initiative Context Diagram

This describes the system boundary of our implementation program. It shows that the program is a process in its own right that takes, as input, some trigger from the organization and delivers, some time later, the results of the implementation back to the organization. I have deliberately shown the initiator of the Software Metrics program, which I will sometimes refer to as the SMP, separately because, as you will see in the next chapter, this individual or group of people is a major element in determining the scope of the program.

The context diagram is useful at the conceptual level because it recognizes that three things are external to the process of developing and implementing the Software Metrics program: the trigger (the situation or situations that give rise to a need for a measurement initiative), the initiator, and the eventual customer or recipient of the program. The key word is "customer" and this theme of the program serving the needs of customers will recur many times.

Something else we need to be aware of is that a decision not to proceed with the SMP is possible. We will try to avoid this situation—but forewarned is forearmed.

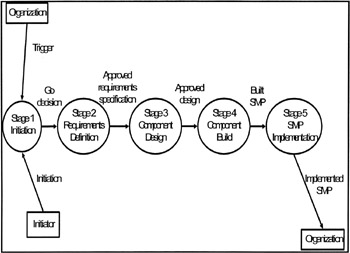

If we move down one level and explode the SMP process bubble, we see in Figure 7.2 that the lifecycle model looks familiar. It bears a close resemblance to the simplistic system design lifecycles common in the computing industry. This is quite deliberate.

Figure 7.2: Level 1 Lifecycle Model

Introducing the use of Software Metrics to an organization demands the same approach as any other project whose aim is to develop a system. Ignoring this fact, or missing out one or more of these high-level stages, probably accounts for more failed Software Metrics programs than any other single mistake.

Everything starts somewhere, so we recognize this with an Initiation Stage. Decisions taken during this stage set the scene for the rest of the program and can form the foundation of a successful program—or sound its death knell before it ever really gets going. It is a question of designing for success rather than designing to fail, and we probably all have experience of projects where the latter was the case.

Requirements gathering and specification, which I have referred to as Requirements Definition, is the next stage and is so often ignored. It is not enough to simply read the literature and then to blindly impose a program on the organization. It is very easy to define and develop a Software Metrics program that addresses productivity measurement only to find that the most senior customer for that program is concerned about user satisfaction and not with productivity. The Software Metrics Program needs to address the problems of the organization and we cannot do this unless we know what those problems are. Please remember that one person's view of those problems will never give you the total picture. There are always different viewpoints within an organization and these will have different problems as well as different requirements. I will talk more about this later, but let me stress the importance of this by saying that recognition of these different viewpoints is another important step towards a successful measurement initiative.

Design is also necessary. The Design Stage encompasses both the choice of specific metrics (notice that it is only now that this is considered) and the design of the infrastructure that will support the use of those metrics. Many programs fail because they concentrate on the metrics at the expense of this infrastructure, and one area that seems to let many such programs down is feedback. Many programs seem to assume that feedback will happen automatically once data has been collected, but it will not. This aspect of the Software Metrics program needs to be addressed in as much detail as the derivation and specification of the metrics themselves. Both aspects of the program are important. I have used the term Component Design to indicate that there are different streams within the program, perhaps one concentrating on cost estimation and another on management information.

The Component Build Stage is the easy part, at least relatively speaking. Provided the design is correct and is based on effective requirements capture, the build stage is equivalent to coding from a good software design; it is mechanistic. That is not to say that there will not be a considerable amount of work to do. You will not only have to do the equivalent of coding, in terms of writing operational procedures; you will have to build the full system from the ground up, from welding the case together to writing the user guides! A lot of work, so make it as easy as possible by getting the earlier stages right!

Implementation is where it gets difficult again. This is where you will be putting your theories into practice. You will meet many problems that you will not have thought about before; this is a fact of life. You will be working closely with other people who may not immediately see the benefits of your proposals. You will be changing the culture. Anyone who has been involved in the introduction of a new computer system will be only too aware of the vagaries and upsets that can occur during this stage but will also be aware of the satisfaction that comes with success.
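The point that missing out a high-level stage is a common cause of failure can be made concrete with a small check. The following is a minimal sketch under stated assumptions: the stage names follow the text, but the `Stage` enum, the `missing_stages` helper and the example plan are hypothetical and not part of the lifecycle model itself.

```python
from enum import IntEnum


class Stage(IntEnum):
    """The five Level 1 stages, numbered in lifecycle order."""
    INITIATION = 1
    REQUIREMENTS_DEFINITION = 2
    COMPONENT_DESIGN = 3
    COMPONENT_BUILD = 4
    IMPLEMENTATION = 5


def missing_stages(planned):
    """Return the lifecycle stages absent from `planned`, in lifecycle order."""
    planned_set = set(planned)
    return [stage for stage in Stage if stage not in planned_set]


# Hypothetical plan that jumps straight from initiation to choosing metrics.
plan = [
    Stage.INITIATION,
    Stage.COMPONENT_DESIGN,
    Stage.COMPONENT_BUILD,
    Stage.IMPLEMENTATION,
]

print([stage.name for stage in missing_stages(plan)])
# prints ['REQUIREMENTS_DEFINITION']
```

A check like this says nothing about how well a stage is carried out; it only flags a plan that leaves one out altogether.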

And if we succeed, then we do it all again while expanding our system across more of the organization and tackling the requirements we put off until the "second build." Do you really want all this grief?

If you do, and you become responsible for the introduction of Software Metrics to your organization, then be prepared for a roller coaster ride. Like a roller coaster, you will find things start off slowly as you climb the first slope. But once you pass the summit of that first slope you will be moving so fast through the ups and downs that you could often feel things are out of control. You may well experience real fear at some of the things that happen and the things that you see, but you will also see a lot more than those on the ground. The trick is to keep control of yourself and the program. When you finally get off the roller coaster you will have a real sense of achievement. Welcome aboard!
