Managing Bandwidth Usage

You only need one Windows Media server to stream content over an IP network. If streaming media usage were very low in the company (only one or two concurrent, low-bit-rate streams), a Fabrikam employee in Düsseldorf could stream content from one Toronto server. The only negative impact would occur on low-bandwidth WANs; most of the network and the server could easily handle the load. In fact, if this were the expected usage, the company could simply stream from its Web servers.

However, this will not be the case. A server and network can handle many users accessing static Web content such as Web pages and images because data is sent in bursts and large chunks. Streaming media content, on the other hand, requires that data be sent in smaller chunks, and over extended, continuous periods. For example, a user streaming a 100 Kbps stream for 30 minutes will require that much network bandwidth for that amount of time. If 1,000 users want to stream content, such as a live unicast broadcast, a 100 Mbps network will be completely consumed for 30 minutes—assuming there is no other traffic at all on the network, which is rarely the case.
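The arithmetic in the 1,000-user example can be checked with a short sketch. It assumes decimal units (1 Mbps = 1,000 Kbps, 1 GB = 10^9 bytes) and that every user receives a separate unicast stream. The function names are illustrative only.

```python
def aggregate_bandwidth_mbps(users: int, bitrate_kbps: float) -> float:
    """Bandwidth in Mbps when each user pulls a separate unicast stream."""
    return users * bitrate_kbps / 1000


def total_data_gb(users: int, bitrate_kbps: float, minutes: float) -> float:
    """Data moved across the network for the whole session, in gigabytes."""
    kilobits = users * bitrate_kbps * minutes * 60
    return kilobits / 8 / 1_000_000  # kilobits -> kilobytes -> gigabytes


print(aggregate_bandwidth_mbps(1000, 100))  # 100.0, a full 100 Mbps network
print(total_data_gb(1000, 100, 30))         # 22.5
```

The result shows why streaming differs from Web traffic: the 100 Mbps is not a brief burst but is held for the entire 30 minutes.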

Network bandwidth is by far the most important issue for streaming media. All streaming media tools, protocols, servers, encoders, and players are focused on delivering the best user experience while managing bandwidth usage. For example, an encoder reduces the bit rate of content and packages it to be sent over a network. A media server manages bandwidth to reduce impact on the network and to deliver a high-quality user experience. Protocols like UDP reduce bandwidth usage. You can stream from a Web server, but Windows Media Services works with Windows Media Player to provide much more sophisticated bandwidth management and a better end user experience.

Streaming media producers, developers, and IT professionals must balance quality with bit rate—getting the playback quality as high as possible while keeping the bit rate as low as possible.

It helps to view deploying a streaming media system as deploying a bandwidth management system. The job is not just getting pictures and sound from one place to another; it is also delivering digital media content reliably while balancing bandwidth usage and playback quality. To that end, companies continue searching for improvements in techniques and technologies for creating, delivering, and playing digital media. One of the most effective techniques available today involves decentralizing the hosting of content by placing multiple servers as close to users as possible. This technique is sometimes called “edge serving.”

Using Edge Servers to Manage Bandwidth

In a typical enterprise intranet, maximum concurrency for clients streaming on-demand content ranges from 8 to 15 percent of the user population. Therefore, if a network segment has 1,000 users, you can assume that about 80 to 150 users will be streaming unicast content concurrently. If the average bit rate of streaming media content is 100 Kbps, bandwidth usage will be 8 to 15 Mbps. On a 100-Mbps network, this is a reasonable amount of streaming activity, considering that 8 to 15 Mbps of bandwidth usage does not apply to the entire network, but is concentrated on the network segments nearest the servers. In other words, bandwidth usage decreases as the data paths fan out from the servers.
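The same rule of thumb can be written as a small estimator. This is a sketch that assumes the 8 to 15 percent concurrency range and a 100 Kbps average bit rate quoted above; integer division rounds the stream counts down for populations that are not multiples of 100.

```python
def concurrent_streams(population: int, low_pct: int = 8, high_pct: int = 15) -> tuple[int, int]:
    """Expected range of concurrent on-demand unicast streams."""
    return population * low_pct // 100, population * high_pct // 100


def segment_load_mbps(population: int, bitrate_kbps: float = 100,
                      low_pct: int = 8, high_pct: int = 15) -> tuple[float, float]:
    """Expected bandwidth range, in Mbps, on the segment nearest the servers."""
    low, high = concurrent_streams(population, low_pct, high_pct)
    return low * bitrate_kbps / 1000, high * bitrate_kbps / 1000


print(concurrent_streams(1000))  # (80, 150)
print(segment_load_mbps(1000))   # (8.0, 15.0)
```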

Using these figures as a rough guide, think about what would happen on the Fabrikam network with different server solutions. First, let’s look at a simple network in which users stream from one centralized server.

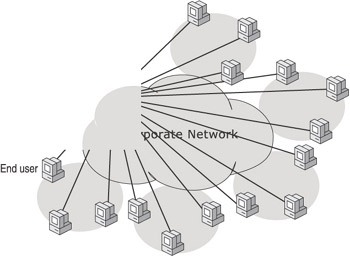

In Figure 20.1, you can see that the network segments nearest to the server can become very congested when all users connect to one server. Also, streaming media traffic can easily consume low- to mid-bandwidth WAN connections. A centralized server may work for static content or in situations where the amount of streaming traffic is low, but this topology becomes too congested when there are many concurrent connections.

Figure 20.1: Centralized network topologies cause congestion around the media server.

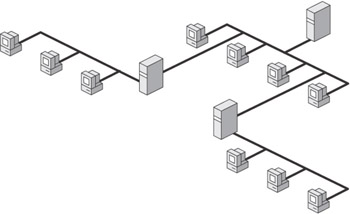

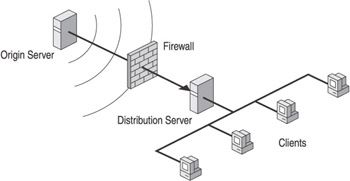

In Figure 20.2, the company has added edge servers to the remote sites and implemented a bandwidth-management system. In this managed-bandwidth scenario, instead of all clients streaming from one origin server farm, clients stream from the edge server that is closest to them. Bandwidth is managed on the segments nearest the origin servers and across the entire network, including all WANs.

Figure 20.2: Edge servers reduce congestion by moving content closer to users.

By adding remote servers and a bandwidth-management system, the company has decentralized server activity so bandwidth usage is spread out evenly across the network. With edge serving, network bandwidth no longer dictates the quality of the end user experience. By decentralizing the distribution of streams, you can offer a higher-quality user experience without having to install a higher-bandwidth infrastructure. To handle an increase in user demand, you can simply add more edge servers.

Streaming broadcast content can place more load on a network than on-demand content because a live event is likely to draw a higher number of concurrent connections. Fabrikam plans to use multicast for broadcasting live streams. Multicast solves the network load problem because many users can receive a multicast stream with little increase in bandwidth usage.

However, there will be areas of the network that cannot be configured for multicast because they are served by routers that cannot be multicast-enabled or are on token-ring segments. Also, remote users connecting over dial-up or Internet connections may not be able to receive multicast packets. To solve these problems, the remote servers can be configured to distribute or rebroadcast a unicast stream. This is called “stream splitting.” The server makes one connection to the origin server or another distribution server, and then splits or duplicates the stream to multiple clients that connect locally. By splitting the live stream, the remote server helps to minimize bandwidth usage on the network segments leading to the origin server.

Managing Content

Bandwidth management using edge servers means getting the content closer to the end user. Content management means knowing what content you have on your servers, and making sure that it is the right content. You can do that manually by making lists in a spreadsheet, and manually copying files to edge servers, or you can automate the process with a content management system.

One way to automate the management of on-demand content is to use a system that regularly replicates content from the origin server to all remote servers. The advantage of such a system is that users everywhere in the company will have quick access to any content, and bandwidth usage on the core infrastructure is minimized. Replication can be scheduled to occur at times when network usage is low, such as at night or during weekends. However, not all content is needed at all locations. For example, programs created specifically for a Toronto-based audience might not need to be available on a server in Rotterdam. Therefore, a system of selective replication could greatly decrease the time and bandwidth needed for the process, as well as the storage requirements on the remote servers.
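Selective replication can be expressed as a simple plan that maps each remote server to the files its audience needs. The sketch below assumes each file is tagged with the regions it targets (an empty tag set meaning company-wide); the server names, regions, and file names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MediaFile:
    name: str
    audience: frozenset  # regions the program targets; empty means company-wide


def replication_plan(files: list, servers: dict) -> dict:
    """Map each remote server (name -> region) to the files it should receive."""
    plan = {server: [] for server in servers}
    for f in files:
        for server, region in servers.items():
            if not f.audience or region in f.audience:
                plan[server].append(f.name)
    return plan


files = [
    MediaFile("ceo_update.wmv", frozenset()),                    # everyone
    MediaFile("na_benefits.wmv", frozenset({"North America"})),  # regional program
]
servers = {"ChicagoWMS1": "North America", "RotterdamWMS1": "Europe"}
print(replication_plan(files, servers))
# {'ChicagoWMS1': ['ceo_update.wmv', 'na_benefits.wmv'], 'RotterdamWMS1': ['ceo_update.wmv']}
```

Only the regional program is withheld from Rotterdam, which is where the savings in replication time, bandwidth, and remote storage come from.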

One way to implement selective replication is to manually decide which files should be replicated. However, this process can be time and resource intensive. Besides, how do you make those selection decisions? For example, there might be one employee in Rotterdam who must view the Toronto video. A better solution might be to automate the selection process with cache/proxy servers.

If remote servers have cache/proxy functionality, you do not need to replicate or push content to the servers. Cache/proxy servers automatically cache content that end users request. If a request is made for content that is available in the cache, the cache/proxy server streams the file locally. Otherwise, it streams the file from the origin server, sending the stream to the end user while caching a copy. A cache/proxy server also checks to make sure the local file is current (or “fresh”) before streaming it. Only files that are used locally need to be cached. And by caching only the content that is requested, bandwidth usage on the core network infrastructure is minimized.

One problem with automatic, on-demand caching is that an end user may not choose the best time to request content that must be streamed from the origin server. For example, an end user may decide to play a stream over a WAN connection when the network is at peak usage. Also, incomplete or partial caches may occur when an end user views only part of a long file or skips to different sections of a file. To remedy this situation, a cache/proxy server can cache the file in a stream that is independent of the stream being sent to the end user.

The best solution for managing content, therefore, is a combination of these concepts. With a complete content management solution, you can choose how to control when content is sent from the origin server. The following items summarize the on-demand content-management solutions and how they might work together:

Automated Replication

With automated replication, you configure a content management system to automatically prestuff files based on your estimation of client usage. Prestuffing is the process of moving content to a cache/proxy server’s cache before it is requested by an end user. You can select which files are replicated and at what time replication occurs. You can also select which servers will receive the files.

For example, you could schedule a Europe-specific video file for replication to all European servers at 2:00 AM GMT. Based on your estimation, the European data centers will then be prepared for the expected high demand of the content.
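The scheduling side of this example comes down to two questions: which servers receive the file, and when the next 2:00 AM GMT window opens. This sketch treats GMT as UTC and uses a hypothetical server inventory; it is not the configuration interface of any particular content management system.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical inventory: remote server name -> region
SERVERS = {
    "RotterdamWMS1": "Europe",
    "DusseldorfWMS1": "Europe",
    "ChicagoWMS1": "North America",
}


def targets(region: str) -> list[str]:
    """Servers that should be prestuffed with a region-specific file."""
    return sorted(name for name, r in SERVERS.items() if r == region)


def next_run(now: datetime, hour_utc: int = 2) -> datetime:
    """Next hour_utc:00 UTC strictly after `now` (which must be timezone-aware)."""
    now = now.astimezone(timezone.utc)
    run = now.replace(hour=hour_utc, minute=0, second=0, microsecond=0)
    if run <= now:
        run += timedelta(days=1)  # today's window has passed; use tomorrow's
    return run


print(next_run(datetime(2024, 3, 1, 14, 30, tzinfo=timezone.utc)), targets("Europe"))
# 2024-03-02 02:00:00+00:00 ['DusseldorfWMS1', 'RotterdamWMS1']
```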

Cache/proxy Functionality

If content is not available on a remote server, the server’s cache/proxy functionality handles the streaming and caching of the file from the origin server when an end user requests it. For example, an end user in New York might want to view the Europe-specific program, which has not been replicated to his local server.

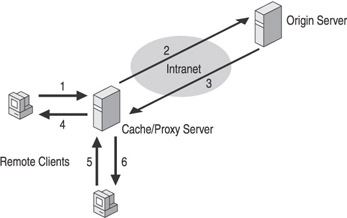

Figure 20.3 shows how the cache/proxy process works. The steps of the process occur in this order:

Figure 20.3: Steps of the cache/proxy process.

- Client makes a request for content on the origin server. The request is intercepted by the local cache/proxy server, which checks for the content in the local cache.
- If the content is not found in the local cache, the cache/proxy server transfers the request to the origin server.
- The origin server streams the content to the cache/proxy server.
- The cache/proxy server stores the content in the cache and streams the content to the client.
- A second client makes a request for the same content on the origin server. Again, the request is intercepted by the local cache/proxy server.
- The cache/proxy server checks for the content in the local cache. If content is available and fresh, the cache/proxy server streams the content locally.
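The cache/proxy steps can be modeled in a few lines. This is a minimal sketch under stated assumptions: "streaming" is reduced to returning bytes from a caller-supplied origin function, and freshness is a simple maximum-age rule chosen for illustration. The actual freshness checks used by Windows Media cache/proxy servers are not described in this section, and the class and parameter names are hypothetical.

```python
import time


class CacheProxy:
    def __init__(self, fetch_from_origin, max_age_seconds: float = 3600, clock=time.monotonic):
        self._fetch = fetch_from_origin  # callable(url) -> bytes, stands in for the origin stream
        self._max_age = max_age_seconds  # assumed freshness policy
        self._clock = clock
        self._cache = {}                 # url -> (content, time cached)

    def is_fresh(self, url: str) -> bool:
        entry = self._cache.get(url)
        return entry is not None and self._clock() - entry[1] < self._max_age

    def request(self, url: str):
        # First and last steps: intercept the request and check the local cache.
        if self.is_fresh(url):
            return self._cache[url][0], "served from cache"
        # Middle steps: forward to the origin, store a copy, and stream it to the client.
        content = self._fetch(url)
        self._cache[url] = (content, self._clock())
        return content, "fetched from origin"
```

The first request for `rtsp://Origin/File.wmv` goes to the origin; a second request within the freshness window is served locally without touching the WAN.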

Manual Delivery

In cases where automated replication and cache/proxy functionality are not the best ways to get content closer to the user, you can fall back on manually prestuffing files. For example, you may need to disable cache/proxy functionality on specific remote servers to restrict streaming over slow WANs. However, there may be times when end users in these areas need to view files that have not been automatically replicated. In cases like these, you can implement a system through which users can request content. For example, an end user in New York can request the Europe-specific program. A system administrator or an automated system can then copy the file to the New York server manually or add it to the files to be replicated automatically overnight.
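A user request system of this kind can be as simple as a queue that collects requests per server and hands them to the overnight replication job. The sketch below is hypothetical; it only shows de-duplication of repeated requests and draining the queue once per night.

```python
from collections import defaultdict


class ContentRequests:
    """Hypothetical queue of user requests for files not yet on a remote server."""

    def __init__(self):
        self._pending = defaultdict(set)  # server -> requested file names

    def request(self, server: str, filename: str) -> None:
        self._pending[server].add(filename)  # repeated requests collapse into one copy job

    def nightly_batch(self) -> dict:
        """Return tonight's additions to the replication list and clear the queue."""
        batch = {server: sorted(names) for server, names in self._pending.items()}
        self._pending.clear()
        return batch
```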

The following summarizes broadcast content management solutions:

Multicast Delivery

In the ideal enterprise network, all end users would receive live programming through multicast delivery. With multicast, one stream serves multiple users, so bandwidth usage is as low as possible. Bandwidth usage is further restricted to only those network paths on which clients request the stream. For example, 10 end users in Düsseldorf will require the same network bandwidth as 500 users. If there are no clients receiving the multicast stream in Düsseldorf, the stream will not be present on the network and, therefore, no bandwidth will be used. For more details on multicast delivery, see chapter 22.
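The Düsseldorf example can be restated as a per-segment load function. This sketch assumes the multicast routing infrastructure only forwards the group onto segments that have members (for example, as learned through IGMP); the function name is illustrative.

```python
def segment_load_kbps(receivers: int, bitrate_kbps: float, multicast: bool = True) -> float:
    """Bandwidth one live stream consumes on a single network segment."""
    if receivers == 0:
        return 0  # no members downstream, so the stream is not forwarded here
    if multicast:
        return bitrate_kbps  # one copy serves every receiver on the segment
    return receivers * bitrate_kbps  # unicast: one copy per receiver


print(segment_load_kbps(10, 100), segment_load_kbps(500, 100))  # 100 100
print(segment_load_kbps(500, 100, multicast=False))             # 50000
```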

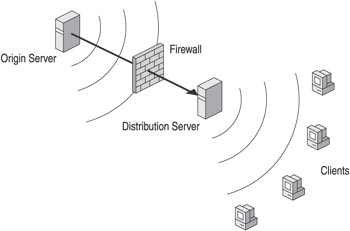

Unicast Distribution

The goal of the Fabrikam deployment is to use multicast delivery on as much of the network as possible. However, if network devices such as firewalls and switches block multicast packets, Fabrikam can use unicast distribution to stream through the devices, and then use multicast delivery on the other side. Figure 20.4 shows how this works.

Figure 20.4: Using unicast distribution for multicast delivery through a firewall.

Any broadcast publishing point that is configured for multicast delivery can also be enabled for unicast delivery. To set up unicast distribution, publishing points on one or more remote Windows Media servers are configured to source from the origin server or another distribution server using unicast. Then the remote publishing points rebroadcast the stream using multicast delivery. By leapfrogging areas of the network that do not pass multicast packets, you can avoid having to upgrade or redesign those areas.

You can also use multicast distribution, in which you source from a multicast stream and rebroadcast it as unicast streams.

Automatic Stream Splitting

In areas of the network that are not multicast-enabled, you can use stream splitting. Unicast distribution and stream splitting do roughly the same thing: one stream feeds multiple clients. The differences have to do with how the methods are implemented and how the stream is delivered to the clients. Figure 20.5 shows how stream splitting works:

Figure 20.5: Using stream splitting with a cache/proxy server.

Whereas multicast or unicast distribution is accomplished using a broadcast publishing point on a Windows Media server, stream splitting is accomplished with a cache/proxy server. The advantage is that cache/proxy servers handle stream splitting automatically, unlike the distribution method, in which publishing points must be created and configured manually. However, a disadvantage of stream splitting is that clients receive streams through unicast connections. Therefore, stream splitting should be used only on network segments that cannot pass multicast traffic but have the bandwidth to handle multiple unicast streams. For example, cache/proxy servers can be used to split live streams to remote users connected using Remote Access Service (RAS).
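Stream splitting can be pictured as a fan-out: one upstream connection to the origin, duplicated into one unicast copy per local client. The sketch below is a hypothetical model, not the behavior of a specific product; it assumes the upstream connection opens with the first local client and closes when the last one leaves.

```python
class StreamSplitter:
    """Hypothetical model of a cache/proxy server splitting one live stream."""

    def __init__(self):
        self.upstream_open = False
        self.upstream_connects = 0  # how many times the origin connection was opened
        self.clients = set()

    def connect(self, client_id: str) -> None:
        if not self.upstream_open:  # first local viewer: open one connection to the origin
            self.upstream_open = True
            self.upstream_connects += 1
        self.clients.add(client_id)

    def disconnect(self, client_id: str) -> None:
        self.clients.discard(client_id)
        if not self.clients:  # last viewer gone: release the origin connection
            self.upstream_open = False

    def deliver(self, packet: bytes) -> dict:
        """Duplicate one packet from the origin into a unicast copy per local client."""
        return {client: packet for client in self.clients}
```

However many local clients connect, the link toward the origin carries one stream; the cost moves to the local segment, which must carry one unicast copy per client.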

Scheduled Delivery

This method combines many of the advantages of on-demand and live delivery. With scheduled delivery, a Windows Media file that would typically be played on demand by an end user is instead broadcast on a publishing point. To view the file, end users tune in at scheduled times. Because multicast can be used for scheduled delivery, more users can view the content concurrently and receive a better user experience with less impact on network bandwidth.

Directing the Client to Content

An edge server solution has two components. The first component involves getting the content closer to the user. The second component involves getting the user to the content. You can do that by redirecting user requests for content on an origin server to a remote or cache/proxy server. After intercepting a request, the cache/proxy server handles the request by streaming content from its cache, streaming and caching content from the origin server, or by splitting a live stream from the origin server.

There are three ways to direct or redirect a client request to an edge server:

Directing the User Manually

If the edge server is not a proxy server, an end user simply enters the URL of the edge server in Windows Media Player. This would be the case if the remote server is simply another Windows Media server connected to the origin server. This might be a good solution in a small company with only a few remote locations. You could manually replicate all files, the file structure, and publishing points from the origin server to all remote servers. Then an end user could connect directly to the remote server. For example, if the URL of some content on the origin server was rtsp://Origin/File.wmv, a remote user would enter the URL rtsp://RemoteServer/File.wmv to access that content.
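Because the remote server mirrors the origin's file structure, the remote URL differs only in its host name. A minimal sketch of that rewrite using the Python standard library follows; the function name is illustrative.

```python
from urllib.parse import urlsplit, urlunsplit


def to_remote(url: str, remote_host: str) -> str:
    """Point an origin-server URL at a remote server with the same file layout."""
    return urlunsplit(urlsplit(url)._replace(netloc=remote_host))


print(to_remote("rtsp://Origin/File.wmv", "RemoteServer"))  # rtsp://RemoteServer/File.wmv
```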

To make the manual method easier for users, you can include the URL in a Windows Media metafile. For example, you could create a metafile Chicago.asx through which Chicago users could access the file from their remote server. The URL of the file could be something like rtsp://ChicagoWMS1/File.wmv.

You could also create a system that automates the generation of custom metafiles. For example, you could develop an ASP page that returns custom metafiles based on a user’s IP address.
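The same idea can be sketched outside ASP. The example below, in Python rather than ASP, maps a client's IP address to a site server and returns a minimal ASX metafile pointing at it. The subnet plan and the NewYorkWMS1 name are hypothetical; clients outside every known subnet fall back to the origin server.

```python
import ipaddress
from xml.sax.saxutils import quoteattr

# Hypothetical address plan: site subnet -> local Windows Media server
SITE_SUBNETS = {
    "10.1.0.0/16": "ChicagoWMS1",
    "10.2.0.0/16": "NewYorkWMS1",
}
ORIGIN = "Origin"


def server_for(client_ip: str) -> str:
    addr = ipaddress.ip_address(client_ip)
    for subnet, server in SITE_SUBNETS.items():
        if addr in ipaddress.ip_network(subnet):
            return server
    return ORIGIN  # unknown location: send the client to the origin


def metafile(client_ip: str, path: str = "/File.wmv") -> str:
    href = f"rtsp://{server_for(client_ip)}{path}"
    return (
        '<ASX VERSION="3.0">\n'
        "  <ENTRY>\n"
        f"    <REF HREF={quoteattr(href)} />\n"
        "  </ENTRY>\n"
        "</ASX>\n"
    )


print(metafile("10.1.4.20"))
```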

Configuring Proxy Settings

If the edge server is a proxy server, an administrator can configure proxy settings in Windows Media Player. This method is commonly referred to as the forward proxy method for redirection. Figure 20.6 shows the proxy settings in Windows Media Player.

Figure 20.6: Configuring Player proxy settings to access a specific proxy server.

After an end user has added a proxy server and port to a protocol, all requests made using that protocol are sent to the proxy server. For example, if a user requests rtsp://Origin/File.wmv, the Player will send the request to RemoteServer on port 554.
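The forward proxy decision can be modeled as a per-protocol lookup performed before connecting. This sketch assumes the settings shown in the example (RTSP proxied to RemoteServer on port 554) and default ports of 554 for RTSP and 80 for HTTP; it models the behavior described, not Windows Media Player's internal code.

```python
from urllib.parse import urlsplit

# Hypothetical per-protocol proxy settings, as entered in the Player
PROXY_SETTINGS = {"rtsp": ("RemoteServer", 554)}
DEFAULT_PORTS = {"rtsp": 554, "http": 80}


def next_hop(url: str) -> tuple:
    """Host and port the client actually connects to for `url`."""
    parts = urlsplit(url)
    if parts.scheme in PROXY_SETTINGS:
        return PROXY_SETTINGS[parts.scheme]  # every request for this protocol goes to the proxy
    return parts.hostname, parts.port or DEFAULT_PORTS[parts.scheme]


print(next_hop("rtsp://Origin/File.wmv"))  # ('RemoteServer', 554)
```

Note that `urlsplit(...).hostname` is lowercased, so an unproxied HTTP request to `Origin` reports the host as `origin`.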

The Enterprise Deployment Pack for Windows Media Player can be used to create a custom installation package for the Player. When creating the package, proxy settings can be specified so every installation of the Player is preconfigured with the correct settings.

Transparent Proxy

At Fabrikam, the cache/proxy servers support the transparent proxy of client requests. This means that all client requests for streaming content at a remote site are automatically sent through the proxy server, regardless of the URL entered by an end user or the proxy settings specified in Windows Media Player.

With the transparent proxy method, the Player and other applications do not require any configuration to use a cache/proxy server. This makes edge serving easier for end users and IT personnel. After transparent proxy has been set up, all redirection is handled using one method, which is controlled by the IT department. For example, an end user cannot mistakenly configure the wrong proxy server in his Player or request content from the wrong remote server.

The easiest way to enable transparent proxy is to assign IP address ranges to remote clients based on location, and then use a system that redirects client requests based on IP address. A layer 4 or layer 7 switch, routers that support the Web Cache Communication Protocol (WCCP), or policy-based routing can be used to redirect requests. Because these devices can classify traffic by more than its destination address (for example, by port number or application protocol), they can redirect connections within the network to cache/proxy devices transparently to the user and the Web application. For more information about the layers of the Open Systems Interconnection (OSI) model, see the OSI model sidebar in chapter 22.
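The redirection rule such a device applies can be sketched as a function of source address and destination port. Everything here is an assumption for illustration: the site subnets, the cache addresses, and matching only RTSP on port 554 (a real deployment would match every streaming protocol it proxies). The cache's own requests to the origin are exempted so they are not looped back to itself.

```python
import ipaddress

# Hypothetical site plan: client subnet -> address of that site's cache/proxy server
SITE_CACHES = {
    ipaddress.ip_network("10.1.0.0/16"): "10.1.0.10",
    ipaddress.ip_network("10.2.0.0/16"): "10.2.0.10",
}
STREAMING_PORTS = {554}  # RTSP only, for this sketch


def redirect_target(src_ip: str, dst_ip: str, dst_port: int) -> str:
    """Address a redirect rule would deliver this connection to."""
    if dst_port not in STREAMING_PORTS:
        return dst_ip  # non-streaming traffic is routed normally
    src = ipaddress.ip_address(src_ip)
    for net, cache in SITE_CACHES.items():
        if src in net:
            # the cache's own upstream requests must pass through unchanged
            return dst_ip if src_ip == cache else cache
    return dst_ip  # client outside any known site
```

Because the rule lives in the network, a client gets the same result whatever URL or Player proxy setting it uses, which is the point of the transparent method.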

In the Fabrikam scenario, the IT department will be deploying a decentralized system that delivers streaming media using transparent proxies, that manages bandwidth, and that enables them to manage content. The system also provides feedback that can help Fabrikam understand the health of the system, the quality of the user experience, and details of streaming media usage on the company intranet.
