Chapter 7: Temporal Segmentation of Video Data

Edoardo Ardizzone and Marco La Cascia

Dipartimento di Ingegneria Informatica

University of Palermo

Palermo, ITALY

{ardizzone, lacascia}@unipa.it

1. Introduction

Temporal segmentation of video data is the process of detecting and classifying the transitions between consecutive sequences of frames that are semantically homogeneous and characterized by spatiotemporal continuity. These sequences, generally called camera-shots, constitute the basic units of a video indexing system. From a functional point of view, temporal segmentation of video data can in fact be considered the first step of the more general process of content-based automatic video indexing. Although the principal application of temporal segmentation is the generation of content-based video databases, there are other important fields of application. For example, temporal segmentation is implicitly needed in video browsing, in the automatic summarization of sports video and in the automatic generation of movie trailers. Another field of application is the transcoding of MPEG-1 or MPEG-2 digital video to the new MPEG-4 standard. Because MPEG-4 is based on video objects, which are not coded in MPEG-1 or MPEG-2, a temporal segmentation step is generally needed to detect and track the objects across the video.

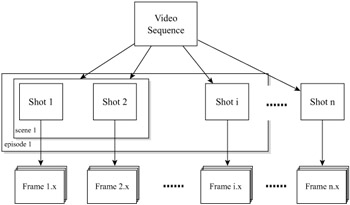

It is important to point out the conceptual difference between the way an automatic tool detects and classifies the information present in a sequence and the way a human observer analyzes the same sequence. A human observer usually performs a semantic segmentation that starts from the highest conceptual level and then moves to the particulars, whereas an automatic video analysis tool works in the dual manner: it starts from the lowest level, i.e., the video bitstream, and tries to reconstruct the semantic content. For this reason, operations that are very simple and intuitive for a human may require quite complex decision-making schemes. Consider, for example, the process of archiving an episode of a TV show. A human observer would probably start by annotating the title and number of the episode, then give a general description of the scenes, and finally describe each scene in detail. The automatic annotation tool, on the other hand, starts from the bitstream, tries to determine the structure of the video from the homogeneity of consecutive frames, and only then can extract semantic information. If we assume that a camera-shot is a sequence of semantically homogeneous and spatiotemporally continuous frames, then a scene can be considered a homogeneous sequence of shots and an episode a homogeneous sequence of scenes. In practice, the first step for the automatic annotation tool is to determine the structure of the video in terms of camera-shots, scenes and episodes, as depicted in Figure 7.1.

Figure 7.1: General structure of a video sequence.
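The bottom-up process described above, in which shots are found first and only then grouped into scenes and episodes, can be sketched with a few plain data structures. This is a minimal illustration under stated assumptions, not the authors' representation: the class names `Shot`, `Scene` and `Episode` and the helper `shots_from_cuts` are hypothetical, frames are identified by integer indices, and only abrupt cuts are considered, so every frame belongs to exactly one shot.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Shot:
    """A camera-shot: consecutive frames first_frame..last_frame (inclusive)."""
    first_frame: int
    last_frame: int

    def __len__(self) -> int:
        return self.last_frame - self.first_frame + 1


@dataclass
class Scene:
    """A scene: a homogeneous sequence of consecutive shots."""
    shots: List[Shot] = field(default_factory=list)

    def frame_span(self) -> Tuple[int, int]:
        return (self.shots[0].first_frame, self.shots[-1].last_frame)


@dataclass
class Episode:
    """An episode: a homogeneous sequence of scenes, plus a human-level title."""
    title: str
    scenes: List[Scene] = field(default_factory=list)

    def num_shots(self) -> int:
        return sum(len(scene.shots) for scene in self.scenes)


def shots_from_cuts(num_frames: int, cut_frames: List[int]) -> List[Shot]:
    """Split frames 0..num_frames-1 into shots (hypothetical helper).

    cut_frames lists the first frame of each new shot; they are assumed to be
    abrupt cuts, each in the range 1..num_frames-1.
    """
    starts = [0] + sorted(cut_frames)
    ends = [start - 1 for start in starts[1:]] + [num_frames - 1]
    return [Shot(s, e) for s, e in zip(starts, ends)]
```

For instance, `shots_from_cuts(300, [120, 200])` yields three shots covering frames 0 to 119, 120 to 199 and 200 to 299, which a later grouping step can then assign to scenes.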

In a video sequence, transitions between camera-shots can be of different types. Even though in many cases detecting a transition is sufficient, in some cases it is important at least to determine whether the transition is abrupt or gradual. Abrupt transitions, also called cuts, involve only two frames (one for each shot), while gradual transitions involve several frames belonging to the two shots, which are processed and mixed together using different spatial and intensity variation effects [4].
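The distinction between cuts and gradual transitions can be made concrete by describing a boundary through the frames it involves. In the hypothetical `Transition` class below (an illustrative assumption, not a structure defined in this chapter), a boundary is recorded by the last frame belonging only to the outgoing shot and the first frame belonging only to the incoming shot. A cut is the case in which these two frames are adjacent; any frames in between are the mixed frames of a gradual transition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    """Boundary between an outgoing shot A and an incoming shot B (hypothetical).

    last_frame_a:  last frame belonging only to shot A
    first_frame_b: first frame belonging only to shot B
    Frames strictly between the two are mixed frames of a gradual transition.
    """
    last_frame_a: int
    first_frame_b: int

    def __post_init__(self) -> None:
        if self.first_frame_b <= self.last_frame_a:
            raise ValueError("shot B must start after shot A ends")

    @property
    def num_mixed_frames(self) -> int:
        return self.first_frame_b - self.last_frame_a - 1

    @property
    def kind(self) -> str:
        # A cut involves only two adjacent frames, one from each shot.
        return "abrupt" if self.num_mixed_frames == 0 else "gradual"
```

With this representation, `Transition(119, 120)` is a cut, while `Transition(119, 145)` is a gradual transition spanning 25 mixed frames.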

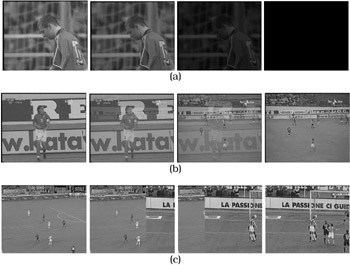

Gradual transitions can be further classified [28], [5]. The most common gradual transitions are fades, dissolves and wipes. A fade effect consisting of a transition from a shot to a solid background is called a fade-out, while the opposite effect is called a fade-in. Fade-ins and fade-outs are often used as the opening and closing effects of a video. A dissolve is a very common editing effect and consists of a gradual transition between two shots in which the first one slowly disappears while, at the same time and in the same place, the second one slowly appears. Finally, wipes are the family of gradual transitions in which the first shot is progressively covered by the second one, following a well-defined trajectory. While in a dissolve the superposition of the two shots is obtained by changing the intensity of the frames belonging to the shots, in a wipe the superposition is obtained by changing the spatial position of the frames belonging to the second shot. Figure 7.2 shows a few frames from a fade-out, a dissolve and a wipe effect.

Figure 7.2: Examples of editing effects. (a) fade-out, (b) dissolve, (c) wipe.
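The difference between intensity-based and spatial superposition can be illustrated by synthesizing the three effects of Figure 7.2 from frames stored as NumPy arrays. This is a sketch under simplifying assumptions, not a model taken from this chapter: each shot is represented by a single still frame, the dissolve uses a linear intensity ramp (real editing tools may use other curves), the fade-out is modeled as a dissolve into a solid background, and the wipe follows a straight left-to-right trajectory. The function names are hypothetical.

```python
import numpy as np


def dissolve(frame_a: np.ndarray, frame_b: np.ndarray, n: int) -> list:
    """Return n frames mixing A into B at the same pixel positions.

    Assumes a linear ramp: frame_k = (1 - alpha_k) * A + alpha_k * B,
    with alpha_k going from 0 (pure A) to 1 (pure B).
    """
    if n < 2:
        raise ValueError("a gradual transition needs at least 2 frames")
    a = frame_a.astype(float)
    b = frame_b.astype(float)
    return [(1.0 - alpha) * a + alpha * b for alpha in np.linspace(0.0, 1.0, n)]


def fade_out(frame: np.ndarray, n: int, background: float = 0.0) -> list:
    """Fade-out modeled as a dissolve from the shot into a solid background."""
    solid = np.full_like(frame, background, dtype=float)
    return dissolve(frame, solid, n)


def wipe(frame_a: np.ndarray, frame_b: np.ndarray, n: int) -> list:
    """Left-to-right wipe: B progressively covers A, with no intensity mixing."""
    if n < 2:
        raise ValueError("a gradual transition needs at least 2 frames")
    width = frame_a.shape[1]
    frames = []
    for k in range(n):
        # Columns [0, boundary) already show shot B; the rest still show shot A.
        boundary = round(k * width / (n - 1))
        out = frame_a.astype(float)  # astype returns a copy by default
        out[:, :boundary] = frame_b[:, :boundary]
        frames.append(out)
    return frames
```

A fade-in is simply a fade-out sequence played in reverse order. Every pixel of a wipe frame takes its value from exactly one of the two shots, whereas every pixel of an intermediate dissolve frame is a weighted mix of both.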

The organization of this chapter is as follows. In Sect. 2 we review the most relevant shot boundary detection techniques, starting with basic algorithms in the uncompressed and compressed domains and then discussing some more articulated methodologies and tools. In Sect. 3 we propose a new segmentation technique, grounded on a neural network architecture, which does not require explicit threshold values for the detection of either abrupt or gradual transitions. Finally, in Sect. 4 we present a test bed for the experimental evaluation of the quality of temporal segmentation techniques, using our proposed technique as an example.
