Developing with the Data Mapping Application Block

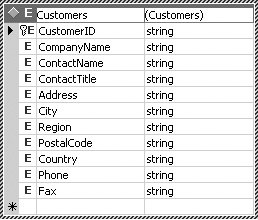

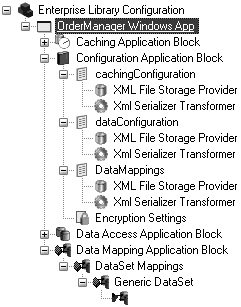

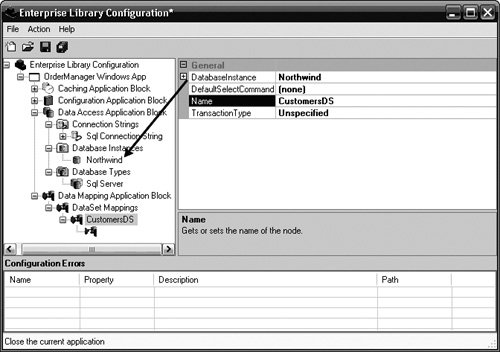

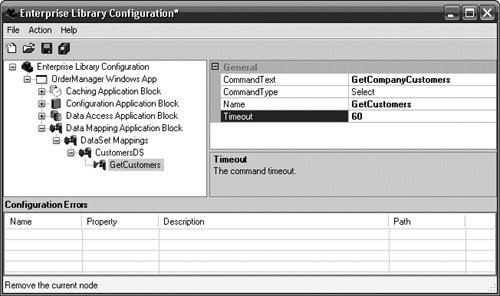

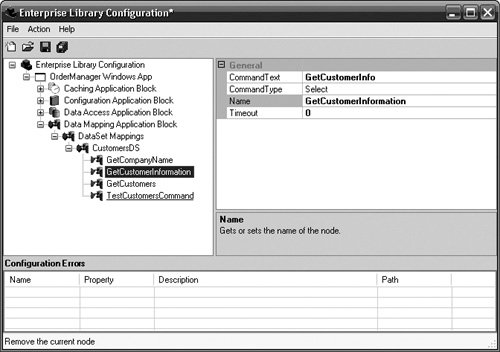

| The previous section covered the design of the Data Mapping Application Block. The primary design goal for the Data Mapping Application Block was to make it easy for developers to create data access logic components without having to worry about writing code to map stored procedure parameters to DataFields in DataTables. After the DataSets and stored procedures have been created for an application, the next step is to create a class that is derived from the DataMapper base class and configure the application block so the new concrete DataMapper can use it. This section details how to use the features described in the previous section to configure and develop an application to use the Data Mapping Application Block. Creating DataSets and Stored ProceduresBefore you can develop and test a data access logic component, you must create the typed DataSet or business entity that it will manage as well as the stored procedures to which it will be mapped. There are many tools you can use to create stored procedures and typed DataSets. One of them is Visual Studio. You can easily create a typed DataSet in Visual Studio by dragging stored procedures or tables from Server Explorer onto the design-time surface for its XML Schema Definition (XSD) tool. Figure A.5 shows the results of dragging a stored procedure named GetCustomers onto this surface and renaming the element to Customers. Figure A.5. Creating a Simple Strongly Typed DataSet in Visual Studio.NET The process for creating the stored procedures and DataSets and configuring them to map to one another could be automated by making it wizard driven. It is possible that a complement to the Data Mapping Application Block could be developed that steps a developer through the process of creating a stored procedure and a corresponding strongly typed DataSet. It could produce output for the stored procedure, a strongly typed DataSet, configuration data that maps them together, and starting code for the derived DataMapper. This would make the setup needed before configuring and developing against the application block a more automated process. Configuration and DevelopmentThe flexibility provided by the Data Mapping Application Block comes in its capacity to use configuration data to determine how to map stored procedure parameters to DataFields, determine the transaction IsolationLevel for database operations, decide how to cache and expire from cache the data returned from database operations, and set command properties like the command's Timeout. This not only drastically reduces the need to spend development cycles coding this logic in a data access logic component, but it also allows the data access logic to more easily adapt to changes in the environment. For example, if the names of stored procedure parameters change or entirely different stored procedures need to be called to perform specific database operations, no code needs to change. Only the configuration for a data access logic component in the Data Mapping Application Block would need to be modified. This section shows you how to configure the Data Mapping Application Block. Adding the Application BlockThe first step to configuring any data mapping between a stored procedure and a strongly typed DataSet is to add the Data Mapping Application Block to an application's configuration information. To do this, right-click on the application node and select New > Data Mapping Application Block. Once the block has been added, a subnode will be created underneath the application node and a new configuration section will be added to the settings for the Configuration Application Block to represent the metaconfiguration data for the Data Mapping Application Block. Figure A.6 shows what the new configuration hierarchy looks like once the Data Mapping Application Block has been added. Figure A.6. Configuration Hierarchy After Adding the Data Mapping Application Block Since the Data Mapping Application Block has a dependency on the Data Access Application Block and, if planning to cache data, a dependency on the Caching Application Block, those blocks are automatically added to the configuration hierarchy if they did not already exist. For more information on configuring the Data Access Application Block and the Caching Application Block, please see Chapters 3 and 4, respectively. DataSetMappingOnce the block has been added to an application's configuration, a new, blank DataSetMapping will already have been added to the configuration hierarchy for the Data Mapping Application Block. New DataSetMappings can be added by right-clicking the DataSet Mappings node for the Data Mapping Application Block and selecting New > DataSet Mapping. The name for a DataSetMapping must be changed to the same name as the DataSet to which it is intended to map stored procedure parameters. For example, if a data access logic component is going to manage the operations for the Customers DataSet shown in Figure A.5, then the name of the Data-SetMapping must be CustomersDS, because that is the name that I gave to the DataSet. The DataSetMappingProvider uses the name of the DataSet to match the DataSet to its mapping information. Additionally, the DatabaseInstance needs to be set to point to the database where the stored procedure that will be mapped to this DataSet exists. The DatabaseInstance is configured the way it normally would be with the Data Access Application Block. The Property pane for DataSet-Mapping provides a drop-down list box so that an existing DatabaseInstance can be selected. Figure A.7 shows how to use the configuration properties for a DataSetMapping to change to point it at a DatabaseInstance that is configured in the Data Access Application Block. Figure A.7. Renaming the DataSetMapping and Setting the DatabaseInstance SelectCommandMappingIn order to retrieve data from the data source that is represented by the DatabaseInstance property, a SelectCommandMapping must be added to the DataSetMapping. A SelectCommandMapping is a special type of CommandMapping that is preset for operations that do not require a mapping from DataFields to stored procedure parameters. For the most part, it is used for Retrieve operations; however, SelectCommandMappings can also be used to execute nonqueries because the parameters that get passed to this method do not require any mapping. A DataSetMapping can have multiple SelectCommandMappings. One of the SelectCommandMappings must be used to fill the DataSet, while the others can be used to return DataReaders, scalar values, and to execute nonqueries. To add a SelectCommandMapping to a DataSetMapping, right-click the DataSet Mappings node and select New > SelectCommand Mapping. A new SelectCommandMapping node will be added as a subnode under the DataSetMapping node. To configure which stored procedure this SelectCommandMapping represents, set the CommandText property to the name of the stored procedure. Additionally, the name for the SelectCommandMapping should be changed to something meaningful for this mapping. The CommandTimeout property can also be optionally set to any long value; the default value for the CommandTimeout is 30 seconds. In Figure A.8, a SelectCommandMapping has been added to the CustomersDS DataSetMapping. The name of the SelectCommandMapping has been changed to GetCustomers and maps to the stored procedure named GetCompanyCustomers. Also, the timeout for this command has been extended to 60 seconds. Figure A.8. Configuring a SelectCommandMapping After SelectCommandMappings have been created and configured, database operations can be executed that return results as strongly typed DataSets, DataReaders, scalar values, and values in output parameters (by executing nonqueries). One of the SelectCommandMappings should be selected as the DefaultSelectCommand. This is the SelectCommandMapping that represents the command that will be used to fill the DataSet. The DefaultSelectCommand is a property that can be configured for the DataSetMapping node. Figure A.7 shows the drop-down list box where an existing SelectCommandMapping can be selected as the DefaultSelectCommand. Creating the Data Access Logic ComponentA data access logic component needs to be created before any values can be returned in an application. To create a data access logic component, derive a class from the abstract DataMapper class and override the abstract DataSetType function so that it returns a new strongly typed DataSet. The CustomersMapper class shown in Listing A.2 represents a data access logic component that manages operations for the CustomersDS typed DataSet. The DataSetType method returns a new CustomersDS DataSet. New SelectCommandMappings can be added to the configuration for this DataSetMapping in the Enterprise Library Configuration Tool, and methods can be added to the CustomersMapper data access logic component that returns the proper results. For example, Figure A.9 illustrates how three more SelectCommandMappings have been added to the configuration information for the CustomersDS DataSet. Figure A.9. Adding More SelectCommandMappings Now, the CustomersMapper class can be extended to return DataReaders and scalar values and to execute nonquery commands. The CustomersMapper class has been extended with methods to do just that in Listing A.3. Listing A.3. Extended CustomersMapper Data Access Logic Component

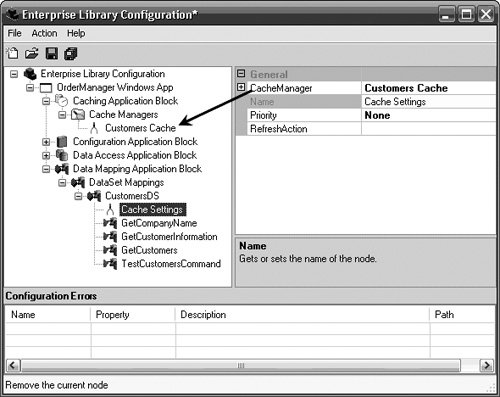

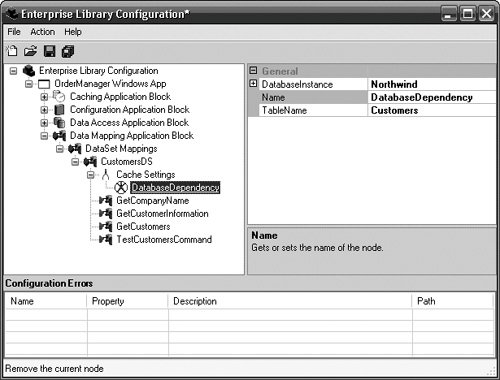

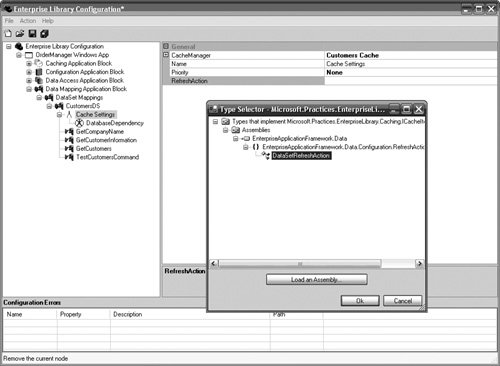

CachingOne of the best practices with respect to designing data access logic components in a distributed application is to consider caching data that is nontransactional, expensive to create or transform, and is static or semi-static. Thus, another design goal for the Data Mapping Application Block was to provide configurable caching capabilities in data access logic components by encapsulating many of the features of the Caching Application Block. Configuration data is used to determine whether or not to cache data for a DataSet. If a DataSet is configured so that it is cached, additional configuration information is used to set multiple CacheItemExpirations and a CacheItemRefreshAction to control the expiration behavior for the cached data. Caching is enabled for a data access logic component by right-clicking on a DataSetMapping node and selecting New > Cache Settings. The inclusion of this node indicates to the Data Mapping Application Block that it should cache the data for this data access logic component. The guidance around caching data in data access logic components is to have separate caches for each type of data. For example, if an application needs to cache orders data and customers data, it should use a different CacheManager for each of these. When the CacheSettings are added to the Data Mapping Application Block, a CacheManager that has already been configured in the Caching Application Block's settings must be selected (see Chapter 4 for more information on how to create a CacheManager). If no other settings are added for caching this data, then the data will exist in cache until it is scavenged. This determination is made by the configuration that has been set for the CacheManager in the Caching Application Block. Figure A.10 shows how the Customers data access logic component has been configured to cache its data in the Customers Cache CacheManager. Figure A.10. Enabling Caching and Setting the CacheManager Adding a CacheItemExpirationIt will often be necessary to expire data from the cache after a certain period of time has passed or a change has been made to some dependency, like a file or database table. For example, it may be desirable to expire the data from the cache if either the database table from which the cached data originated has been modified (i.e., a DatabaseDependencyExpiration) or if more than five minutes has passed since the last time this data was accessed (i.e., a SlidingTimeExpiration). Many expiration policies can be configured for a data access logic component; if any of them occurs, the item will be expired from cache. To create a CacheItemExpiration, right-click on the Cache Settings node, select New, and the type of expiration to add. Then configure the properties that are specific for that type of expiration policy. In Figure A.11, a database dependency expiration policy has been added to the cache settings for the Customers data access logic component and configured to remove cached data when a change occurs in the Customers table in the Northwind database. For more information about the properties that are available for each type of expiration policy, see Chapter 4. Figure A.11. Configuring a DatabaseDependencyExpirationNode Adding a RefreshActionIt is sometimes necessary to take some action when an item is removed from the cache. For example, sometimes it will be important to refetch the expired data and add it back to the cache, while other times it may simply be necessary to log the fact that an item was removed. The CacheItemRefreshAction serves this purpose. CacheItemRefreshActions can be powerful because they are completely decoupled from the data access logic component or the running application that references them. It is entirely possible to reconfigure a data access logic component to use a new CacheItemRefreshAction to perform a specific action (e.g., log information about why an item was expired from the cache) without modifying the application code in any way. To add and configure a CacheItemRefreshAction, select the ellipses button for the RefreshAction property for the Cache Settings node. The Enterprise Library Configuration Tool will display the Type Selector dialog, and the tool will automatically try to find all implementations of the ICacheItemRefreshAction interface that it knows about. Selecting one of these implementations will cause that CacheItemRefreshAction to be notified as items are expired from that particular CacheManager. Figure A.12 shows how to add the DataSetRefreshAction to the configuration data for the CustomersDS cache settings information. Figure A.12. Adding a RefreshAction DataTableMappingsSo far I haven't shown how to map stored procedure parameters to DataFields in the DataTables of a DataSet. That is because SelectCommands simply pass through the arguments that they are given. The Data Access Application Block handles mapping the values for these arguments to the parameters that it discovers for the stored procedure. It does not, however, do any mapping for Insert, Update, and Delete operations. When using DataSets to contain the data that needs to be propagated to the database, data mapping entails setting the values that get passed into stored procedures to the values for DataFields in the DataTables of a DataSet. To begin mapping these fields, a DataTableMapping needs to be added for a DataSetMapping. To add a DataTableMapping, right-click on the DataSetMapping node and select New > DataTable Mapping. The name for the DataTableMapping must be the same as the name of the DataTable to which it is being mapped. Figure A.13 shows the results of adding a Data-TableMapping for the Customers DataTable in the CustomersDS DataSet. Figure A.13. Adding a DataTableMapping CommandMappingsA DataTableMapping contains a collection of CommandMappings. After the DataTableMapping has been created, at least one CommandMapping should be added to insert, update, and/or delete data from the data source. Since it doesn't really make much sense to have a DataTableMapping without a CommandMapping, a CommandMapping is automatically added as a subnode to the DataTableMapping when the DataTableMapping node is created. Its CommandType is set to Select by default; however, this can be changed in the Property pane for the CommandMapping node. Before changing it however, it is important to understand what SelectCommandMappings mean in the context of a DataTableMapping. SelectCommand: Lazy LoadingSelectCommandMappings for a Data-Table work differently than SelectCommandMappings do for the entire DataSet. One of the benefits of loading a DataSet into memory is that all tables can get loaded into memory at the same time. For example, given a hierarchical DataSet where there is a parent Orders table and a child OrderDetails table, not only can the parent Orders table be loaded with data, but the child OrderDetails table can as well. However, loading all the data for a DataSet with a complex hierarchy or a tremendous amount of data can incur considerable performance costs. This is especially detrimental if some DataTables never need to be populated with data or all of the data that is loaded is not needed. A best practice concerning the use of data access logic components is to only retrieve the data that is needed and only when it is needed. [4] This is referred to as lazy loading and it can significantly improve the performance of an application and enhance its scalability if there is a massive amount of data or many objects to load. The FillTable function in the DataMapper class can be used to perform lazy loading. Using the DataMapper's Get-Object function, a data access logic component can ensure that only the data that is required is retrieved during the initial load. The FillTable function can then be used to fill the DataTables in the DataSet as needed.

When the DataSet is originally filled, the Data Mapping Application Block will not fill DataTables that have a SelectCommand associated with them. This serves as an indicator to the block that this table should be explicitly loaded. Using the SelectCommand for a table and the FillTable function, a developer can load this data into memory only when needed. Caching will still apply if it has been set for the DataSet. The Data Mapping Application Block will keep a separate copy of the DataSet for every unique instance. For example, rather than retrieving all of the OrderDetails records for every Order record in an hierarchal orders DataSet, only the Orders table can be initially populated and the OrderDetails records can be retrieved as needed. When the OrderDetails records are needed for a specific order, those records can be retrieved, cached, and returned by the Data Mapping Application Block. Listing A.4 demonstrates how to accomplish this by using the FillTable method to lazy load the data for the OrderDetails table for a specific Order record. Listing A.4. Lazy Loading the OrderDetails Data

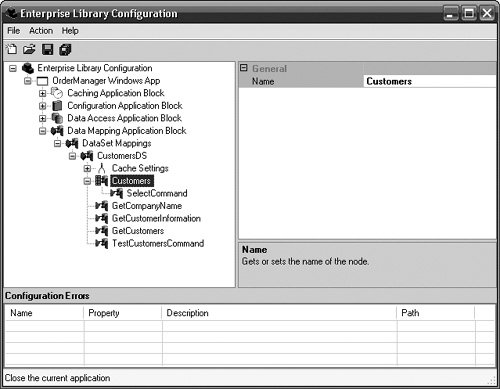

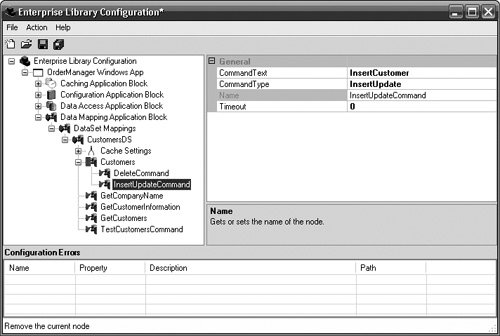

Insert, Update, and Delete CommandsConfiguring a DataTableMapping with its update operations is where the data mapping process actually begins. The difference between retrieval operations and update operations is that update operations contain zero or more CommandParameterMappings and retrieval operations do not. The first step in configuring an update operation is to change the CommandType for a CommandMapping to Insert, Update, Delete, or InsertUpdate. The InsertUpdate value is meant to be used for both insert and update operations. Often one stored procedure will exist that handles both insert and update operations by checking to see if a record exists (update) or not (insert). Rather than creating two separate CommandMappings with the same collection of CommandParameterMappings, the InsertUpdate CommandType allows one CommandMapping to be used for both operations. To create a new CommandMapping, right-click on the DataTableMapping node and select New > Command Mapping. Then change the CommandType to either Select, Insert, Update, Delete, or InsertUpdate. Figure A.14 shows how the configuration for the CustomersMapper data access logic component has been extended with a DeleteCommand and InsertUpdateCommand for its Customers DataTable. Figure A.14. Creating a New CommandMapping for a DataTable CommandParameterMappingsThe details for the data mapping between a stored procedure's parameters and a DataSet's DataFields occur at the lowest level of the configuration hierarchy. CommandParameterMappings allow the DataFields in the Data-Tables of a DataSet to map to the parameters for a stored procedure. A CommandParameterMapping simply holds the state for the name of the parameter and the source column (i.e., name of the DataField) in the DataSet. If the source column is left blank, the block assumes that there is no DataField mapping and that the value for the parameter must be explicitly set at runtime. This is to allow for non-DataSet (or explicit) parameters. There may be situations where it may not make sense to include all stored procedure parameters as DataFields. For example, stored procedures sometimes require a UserId or UserName parameter to determine if a user is authorized to access or update a certain set of data; however, from an object-oriented perspective, it may not make sense to have a user ID element in the typed DataSet. Identification/authentication fields like SessionId, UserId, or even Current Date and Time often need to be explicitly set; they are elements of data that need to be passed to the data source for data entitlement and auditing reasons. However, they are more global in nature than the data that typically exists in any specific DataSet. In the Data Mapping Application Block, fields that must be explicitly set are referred to as non-DataSet (or explicit) parameters. To create a new CommandParameterMapping, right-click on the CommandMapping node and select New > CommandParameterMapping. Set the Name property to the name of the stored procedure parameter and SourceColumn to the name of the DataField to which it should map. If the parameter is meant to be explicitly set at runtime, then the SourceColumn property must be left blank. It is also important that the CommandParameterMappings are listed in the order in which they appear in the stored procedure, because the Data Access Application Block's GetStoredProcCommandWrapperWithSourceColumns method expects the CommandParameters to be in the order that they exist in the stored procedure. If the CommandParameterMappings are added in the wrong order, the order can be changed by right-clicking on a CommandParameterMapping node and selecting either Move Up or Move Down. Figure A.15 shows how the configuration for the Customers DataTable has been completed by adding the CommandParameterMappings to the two CommandMappings. The delete command accepts one parameter, and the value for this parameter is retrieved from the CustomerID field in the Customers DataTable. The insert and update commands accept the following parameters: CustomerID, CompanyName, ContactName, ContactTitle, Address, City, Region, PostalCode, Country, Phone, and Fax. Figure A.15. Adding CommandParameterMappings for a Command Once the insert, update, and delete commands have been set for a data access logic component, the base DataMapper's PutObject method can be called in a data access logic component to propagate changes back to the data source. For example, the CustomersMapper data access logic component may have a RemoveCustomer method that accepts a customer ID. Since the mapping of stored procedure parameters to DataFields is handled by the Data Mapping Application Block, the RemoveCustomer method should just remove the appropriate record from the Customers DataTable and call the base DataMapper's PutObject method. Listing A.5 shows how this is accomplished. Listing A.5. Calling PutObject to Propagate Changes to the Data Source

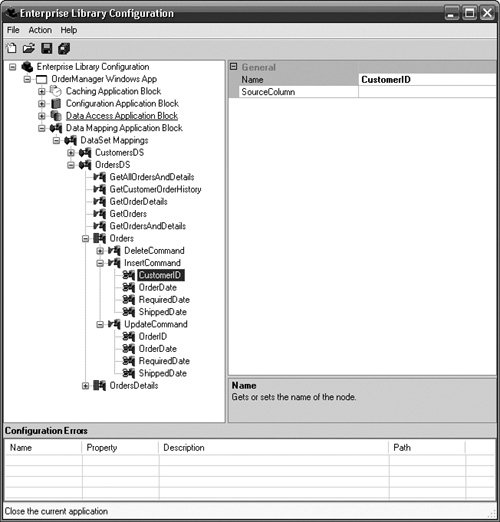

The base DataMapper class contains a ListDictionary that is expected to contain a list of any explicit parameters and their values. Helper functions exist to facilitate getting and setting the explicit parameters and their values. Any items in this list can be used during an update operation. Suppose an enterprise application needed to manage information about orders and order details in addition to customers. Additionally, it may have requirements such that order and order detail information needs to be retrieved per customer. However, for this application's design, the customer ID is not a field in the OrdersDS typed DataSet that contains the order and order detail information. The database operations for this data access logic component can be configured similarly to the way the CustomersMapper was. One difference, however, is that since the stored procedures expect a customer ID and that field does not exist in the OrdersDS DataSet, it will need to be explicitly set at runtime. Figure A.16 shows the configuration for the OrdersMapper data access logic component and highlights the configuration node for CustomerID, where the SourceColumn is left blank to indicate that it should be explicitly set at runtime. Figure A.16. Setting Explicit Parameters for CommandParameterMappings When an order needs to be added, the customer ID can be marked as an explicit parameter by using the helper methods that are part of the base DataMapper class. Listing A.6 shows how this can be accomplished. Listing A.6. Setting Explicit Parameters Before Calling PutObject

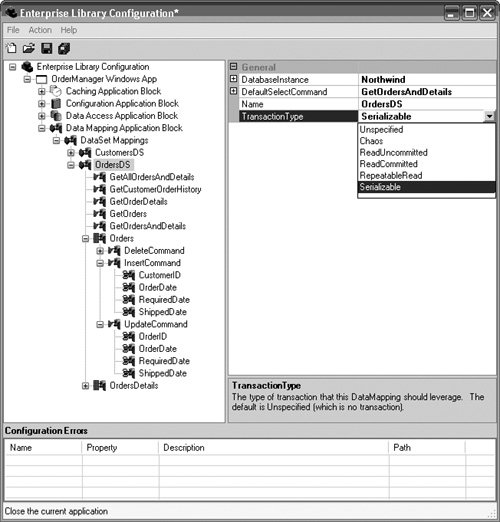

TransactionsA data access logic component can be configured so that the database operations that it performs all occur in the scope of a transaction. For example, the transaction IsolationLevel for the OrdersMapper data access logic component can be configured so that as changes are made to order and order detail records, either all changes will be committed to the data source or none will. Setting the transaction IsolationLevel for a data access logic component can be done by modifying the setting for the transactionType property for the DataSetMapping. Figure A.17 shows how the IsolationLevel is set to Serializable for the OrdersMapper data access logic component. Figure A.17. Setting the Transaction IsolationLevel for a Data Access Logic Component Sometimes, transactions may need to be set at a more granular level. It may be desirable, for example, to have some database operations occur in the scope of a transaction and others that do not. To allow for this, the base DataMapper class contains a protected property named transactionType. Setting the TRansactionType property to a transaction IsolationLevel before a call is made to PutObject will cause the database operations to be performed in the scope of a transaction. Listing A.7 shows how to add order and order detail information in the scope of a serializable transaction. Listing A.7. Programmatically Setting the Transaction Level

|

EAN: 2147483647

Pages: 103