DirectX Audio = DirectSound + DirectMusic

Before we start, let's look at the DirectX Audio building blocks. To do that, it helps to have a little history. Microsoft created DirectSound back in 1995. DirectSound allowed programmers to access and manipulate audio hardware quickly and efficiently. It provided a low-level mechanism for directly managing the hardware audio channels, hence the "Direct" in its name.

Microsoft introduced DirectMusic several years later. It provided two programming models: a low-level, "direct" model, in which you talk directly to a MIDI device, and a higher-level, content-driven model for loading and playing complete pieces of music. Microsoft named the lower-level model the Core Layer and the content-driven model the Performance Layer. The Performance Layer provides relatively sophisticated mechanisms for loading and playing back music, and it also manages the Core Layer.

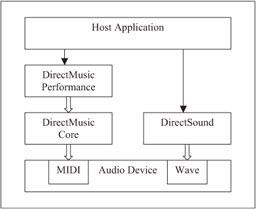

In DirectX 7.0, the host application uses DirectMusic and DirectSound separately (see Figure 8-1). MIDI and style-based music are loaded via the DirectMusic Performance Layer and then fed to the DirectMusic Core, which manages MIDI hardware as well as its own software synthesizer. Sound effects in the form of wave files are loaded into memory, prepared directly by the host application, and fed to DirectSound, which manages the wave hardware as well as software emulation. It became clear after the release of DirectMusic that the content-driven model appealed to many application developers and audio designers since the techniques used by DirectMusic to deliver rich, dynamic music were equally appropriate for sound effects. Microsoft decided to integrate the two technologies so that DirectMusic's Performance Layer would take on both music and sound effect delivery.

Figure 8-1: In DirectX 7.0, DirectMusic and DirectSound are separate components.

Microsoft added sound effect functionality to the Performance Layer, including the ability to play waves in Segments and to optionally use clock time instead of music time. The Core Layer takes over wave mixing via the synthesizer, which is really just a sophisticated mix engine that happens to be more efficient than the low-level DirectSound mechanisms. This way, the Core Layer premixes sound effects and feeds them into one DirectSound 3D Buffer, dramatically increasing efficiency. Real-time audio effects processing further enhances the combined DirectMusic/DirectSound pipeline.

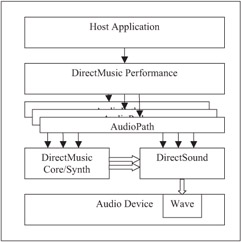

Perhaps the most significant new feature is the introduction of the AudioPath concept. An AudioPath manages a virtual audio channel, or "path," from start to end. The path starts with the generation and processing of MIDI and wave control data, continues through the synthesizer/mix engine in the Core Layer and on through the audio effects processing channels, and ends at the final mix, including 3D spatialization.

With DirectX 8.0 and later, the Performance Layer controls one or more AudioPaths, each of which manages the flow of MIDI and wave data into the DirectMusic software synthesizer, on through multiple audio channels into DirectSound, where they are processed through effects, and through their final mix stage into the audio device (see Figure 8-2).

Figure 8-2: In DirectX 8.0, DirectSound and DirectMusic merge, managed by AudioPaths.

This integration of DirectSound and DirectMusic, delivering sounds and music through the Performance Layer via AudioPaths, is what we call DirectX Audio.

| Note | When Microsoft delivered DirectX Audio in DirectX 8.0, there was one unfortunate limitation: The latency through the DirectMusic Core Layer and DirectSound was roughly 85ms, unacceptably high for triggered sound effects. Therefore, although a host application could use the AudioPath mechanism to create rich content-driven sounds and music and channel them through sophisticated real-time processing, it had to bypass the whole chain and write directly to DirectSound for low-latency sound effects (in particular, "twitch" triggered sounds like gun blasts). Fortunately, Microsoft improved latency in the release of DirectX 9.0. With this release, games and other applications can finally use the same technology for all sounds and music. |
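
To put the 85ms figure in perspective, here is a rough back-of-the-envelope calculation. The 60 frames-per-second game and the 44.1 kHz sample rate are illustrative assumptions, not figures taken from the note above:

$$
\begin{aligned}
\text{delay in video frames} &\approx 0.085\ \text{s} \times 60\ \text{frames/s} = 5.1\ \text{frames} \\
\text{delay in audio samples} &\approx 0.085\ \text{s} \times 44{,}100\ \text{samples/s} = 3{,}748.5\ \text{samples}
\end{aligned}
$$

Under those assumptions, a gun blast triggered by a button press would be heard about five frames after the player sees the muzzle flash, which helps explain why "twitch" sounds had to bypass the chain.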

Sounds like a lot of stuff to learn to use, eh? There is no denying that all this power comes with plenty of new concepts and programming interfaces to grok. The good news is you only need to know what you need to know. Therefore, it is quite easy to make sounds (in fact, a lot easier than doing the same with the original DirectMusic or DirectSound APIs) and then, if you want to learn to do more, just dig into the following chapters!

In that spirit, we shall write a simple application that makes a sound using DirectX Audio. First, let's acquaint ourselves with the basic DirectX Audio building blocks.
