17.8 Managing Quorum

As you add members to your cluster using the clu_add_member(8) command or remove members from your cluster using the clu_delete_member(8) command, votes are automatically adjusted to maintain quorum. Generally, this will suffice; however, there may be times when you will need to alter your cluster's expected votes or a member's votes (for example, if you need to remove several members from your cluster for preventative maintenance). In the event that you do need to modify votes administratively, you should use the clu_quorum(8) command.

17.8.1 Viewing Quorum Status

The easiest way to know whether you have quorum is to see whether you can log in to the system (or, if you are already logged in, whether the system responds when you type a command). Barring the obvious, you may be curious to see your cluster's current voting and quorum status. To see it, run the clu_quorum command without any options.

# clu_quorum
Cluster Quorum Data for: babylon5 as of Thu Jan 10 03:37:04 EST 2002

Cluster Common Quorum Data
Quorum disk: dsk4h
File: /etc/sysconfigtab.cluster
Attribute         File Value
expected votes    3

Member 1 Quorum Data
Host name: molari.tcrhb.com Status: UP
File: /cluster/members/member1/boot_partition/etc/sysconfigtab
Attribute         Running Value    File Value
current votes     3                N/A
quorum votes      2                N/A
expected votes    3                3
node votes        1                1
qdisk votes       1                1
qdisk major       19               19
qdisk minor       112              112

Member 2 Quorum Data
Host name: sheridan.tcrhb.com Status: UP
File: /cluster/members/member2/boot_partition/etc/sysconfigtab
Attribute         Running Value    File Value
current votes     3                N/A
quorum votes      2                N/A
expected votes    3                3
node votes        1                1
qdisk votes       1                1
qdisk major       19               19
qdisk minor       112              112

From the output of the clu_quorum command, you can see that our cluster has a quorum disk configured. Additionally, you can see the values that the cluster is currently using ("Running Value") as well as the values that members will use the next time they are booted ("File Value").

The clu_quorum command gets the "File Value" information from the member's sysconfigtab file, as the "File:" line in the output indicates, but where does the "Running Value" come from? This information is stored in the Connection Manager (cnx) kernel subsystem.

17.8.1.1 The cnx Subsystem

The cnx subsystem contains the attributes that the system is currently using.

# sysconfig -q cnx
cnx:
name = Connection Manager
version = 1
cluster_name = babylon5
cluster_founder_csid = 65537
node_cnt = 2
has_quorum = 1
qdisk_trusted = 1
member_cnt = 2
current_votes = 3
expected_votes = 3
quorum_votes = 2
qdisk_major = 19
qdisk_minor = 112
qdisk_votes = 1
mem_seq = 2
add_seq = 2
rem_seq = 0
node_id = 2
node_name = sheridan
node_csid = 65537
node_votes = 1
node_expected_votes = 3
msg_level = 1

As you can see from the output, all the attributes (and more) from the clu_quorum command's "Running Value" are represented.
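If you want to check these values from a script, the attribute list is straightforward to parse. The following is a minimal Python sketch, not part of TruCluster: the function name parse_sysconfig is hypothetical, and the sample text is a subset of the cnx attributes copied from the output above. It compares current_votes with quorum_votes, a check that should agree with the has_quorum flag.

```python
def parse_sysconfig(text):
    """Parse 'name = value' lines from 'sysconfig -q' style output into a dict."""
    attrs = {}
    for line in text.splitlines():
        if "=" not in line:
            continue  # skip the 'cnx:' header line and any blank lines
        name, _, value = line.partition("=")
        value = value.strip()
        # Store numeric attributes as integers, everything else as strings.
        attrs[name.strip()] = int(value) if value.isdigit() else value
    return attrs


# Sample input (assumption: a subset of the attributes shown in the text).
SAMPLE = """\
cnx:
cluster_name = babylon5
has_quorum = 1
current_votes = 3
expected_votes = 3
quorum_votes = 2
qdisk_votes = 1
node_votes = 1
"""

cnx = parse_sysconfig(SAMPLE)
in_quorum = cnx["current_votes"] >= cnx["quorum_votes"]
print(cnx["cluster_name"], "has quorum:", in_quorum)
```

On a live member you would feed the function the real output of "sysconfig -q cnx" instead of the embedded sample.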

For more information on the cnx subsystem, see the sys_attrs_cnx(5) reference page.

17.8.1.2 The sysconfigtab File and the clubase Subsystem

The cluster base (clubase) subsystem contains the attributes that define the cluster as mentioned in section 17.2.6. These attributes are loaded from the system's sysconfigtab file when the system is booted. Although the attribute values may be modified in a member's sysconfigtab file, they will not be reflected in the clubase subsystem until the system is rebooted. This is not a big deal, though, because these attributes are only used to configure or join the cluster. The running values for the system are stored in the cnx subsystem as we discussed in the previous section.

Here is an example to show how the sysconfigtab file can differ from what the clubase subsystem can see. We have booted a one-member cluster. The system's sysconfigtab file shows that no quorum disk is configured and that the cluster_expected_votes value is 1.

# sysconfigdb -l clubase
clubase:
cluster_expected_votes = 1
cluster_quorum_conf_active = 0
cluster_qdisk_major = 0
cluster_qdisk_minor = 0
cluster_qdisk_votes = 0
cluster_name = babylon5
cluster_node_name = molari
cluster_node_inter_name = molari-ics0
cluster_node_votes = 1
cluster_interconnect = mct
cluster_seqdisk_major = 19
cluster_seqdisk_minor = 96

The clubase subsystem on the system confirms these values.

# sysconfig -q clubase cluster_expected_votes \
> cluster_qdisk_major cluster_qdisk_minor cluster_qdisk_votes
clubase:
cluster_expected_votes = 1
cluster_qdisk_major = 0
cluster_qdisk_minor = 0
cluster_qdisk_votes = 0

We will now add a second member to the cluster as well as a quorum disk. To add a second member we will use the clu_add_member(8) command as we discussed in Chapter 11. We will discuss how to add a quorum disk in section 17.8.3.1. To save a tree, we will not show the output here but instead illustrate how these actions affect the cluster.

Looking at the clubase subsystem on our member after the quorum disk and member have been added, you can see that nothing has changed.

# sysconfig -q clubase cluster_expected_votes \
> cluster_qdisk_major cluster_qdisk_minor cluster_qdisk_votes
clubase:
cluster_expected_votes = 1
cluster_qdisk_major = 0
cluster_qdisk_minor = 0
cluster_qdisk_votes = 0

The sysconfigtab file, however, does reflect the recent changes.

# sysconfigdb -l clubase | grep -E "expected|_qdisk"
cluster_expected_votes = 3
cluster_qdisk_major = 19
cluster_qdisk_minor = 112
cluster_qdisk_votes = 1

| Caution | Do not modify a member's sysconfigtab file to adjust votes. Use the clu_quorum command. The clu_quorum command contains logic to ensure that you do not attempt to set a value that would cause the cluster to lose quorum. Furthermore, it makes sure that each member's cluster_expected_votes value is the same. Another important point is that the cluster_expected_votes attribute is also stored in a cluster-common file, /etc/sysconfigtab.cluster. The clu_quorum command also ensures that this file is properly updated. |

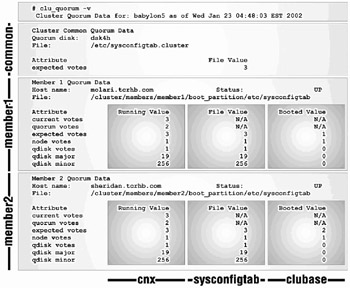

You can also use the clu_quorum command with the "-v" option to see the clubase subsystem values. They are under the "Booted Value" field. See Figure 17-12.

Figure 17-12: "clu_quorum -v" explained

When molari was booted, cluster_expected_votes was 1 and no quorum disk was configured. After the clu_add_member command, you can see that sheridan's cluster_expected_votes value was 2. Finally, we added the quorum disk after sheridan was booted. We know this because both the "Running Value" and "File Value" indicate that a quorum disk is configured but neither member's "Booted Value" shows a quorum disk.

| Note | The clu_quorum command logs changes to the clu_quorum.log file located in the /cluster/admin directory. |

17.8.2 Modifying Votes

It may be necessary on occasion to change a member's status from a voting member (i.e., a member with 1 vote), to a non-voting member (i.e., a member with zero votes).

Why would you want to change a member to non-voting member? Anytime a system will be removed from the cluster for a temporary, but extended, period of time, it is a good idea to adjust the number of votes required to form and maintain the cluster. You could use the clu_delete_member(8) command to remove the member, but this would be overkill. By making the member a non-voting member, the cluster's expected votes will be lowered by one as well.

| IMPORTANT | If turning a member into a non-voting member gives you a cluster with an odd number of voting members, you should also adjust the quorum disk vote to zero (or temporarily remove the quorum disk from the cluster). See section 17.8.3 for more information on managing the quorum disk. |

17.8.2.1 How to Adjust a Member's Votes

You can adjust the number of votes a member can contribute by using the clu_quorum command with the "-m" option.

clu_quorum -m member_name #votes

The only two acceptable values for the #votes parameter as of this writing are 0 or 1.

# clu_quorum -m molari 0
Collecting quorum data for Member(s): 1 2
Member votes successfully adjusted.

To verify that the votes were changed, you can issue the following command:

# clu_get_info -m 1 -full | grep -E "Hostname|votes"
Hostname = molari.tcrhb.com
Member votes = 0

The "-m 1" option to the clu_get_info(8) command gets member1's information. Notice the slight difference in the "-m" option between the two commands: clu_quorum uses the member's name, whereas clu_get_info uses the memberid. Due to this inconsistency, it may be easier to stick with the clu_quorum command sans options.

# clu_quorum | grep -E "^Host|^node|Running"
Host name: molari.tcrhb.com Status: UP
Attribute         Running Value    File Value
node votes        0                0
Host name: sheridan.tcrhb.com Status: UP
Attribute         Running Value    File Value
node votes        1                1

In order to adjust a member's votes, the cluster must have access to the member's boot_partition. If the member is down, however, the boot_partition will be unmounted. If the member is down, but at least one of the cluster members has physical access to the member's boot disk, you can add the "-f" option to the clu_quorum command to "force" the operation. The "-f" option mounts the member's boot_partition, modifies the sysconfigtab file, and unmounts the member's boot_partition. This is a good reason why member boot disks should be accessible by multiple cluster members. If the member is down, the clu_quorum command will notify you.

# clu_quorum -m molari 1
Collecting quorum data for Member(s): 1 2
*** Error ***
One or more cluster members are DOWN. You may use the -f option to force the quorum operation.

By adding the "-f" option, the clu_quorum command completes successfully.

# clu_quorum -f -m molari 1
Collecting quorum data for Member(s): 1 2
Member votes successfully adjusted.

You can also use the "-f" option to see a down system's sysconfigtab values.

# clu_quorum -f | grep -E "^Host|^node|Value" | tail -6
Host name: molari.tcrhb.com Status: DOWN
Attribute         File Value
node votes        0
Host name: sheridan.tcrhb.com Status: UP
Attribute         Running Value    File Value
node votes        1                1

17.8.2.2 How to Adjust the Cluster's Expected Votes

It is the cluster's expected votes (CEV) value that is used to determine the cluster's quorum votes (the number of votes that are required to form a cluster and keep the cluster running).

The CEV value will not be lowered once the cluster is formed if a member joins or leaves the cluster. Remember that the CEV value is equal to the maximum of the following:

- The previous CEV value. This is the CEV value that was calculated the last time a state transition occurred in the current cluster incarnation.
- The largest cluster_expected_votes value of all members. This value should be the same on every member.
- The sum of all cluster_node_votes, plus the cluster_qdisk_votes (if the quorum disk is configured and assigned a vote).
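The rule above can be sketched in a few lines of Python. This is only an illustration of the "maximum of three values" rule as described here, not the Connection Manager's actual code; the function and parameter names are hypothetical.

```python
def cluster_expected_votes(previous_cev, member_expected_votes, node_votes, qdisk_votes=0):
    """Return the CEV as the maximum of the three quantities listed above."""
    configured = max(member_expected_votes)   # largest cluster_expected_votes of all members
    vote_sum = sum(node_votes) + qdisk_votes  # all node votes plus the quorum disk vote
    return max(previous_cev, configured, vote_sum)


# Two one-vote members plus a one-vote quorum disk, as in the babylon5 examples.
print(cluster_expected_votes(3, [3, 3], [1, 1], qdisk_votes=1))

# One member leaves: the vote sum drops to 2, but the previous CEV
# (and the configured value) keep the result at 3.
print(cluster_expected_votes(3, [3], [1], qdisk_votes=1))
```

Because the previous CEV is always one of the inputs to max(), the result can never drop on its own, which is why the value has to be lowered administratively.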

So what would happen if enough cluster members were to fail such that one more failure would cause the cluster to lose quorum?

There are only two methods to lower a CEV value. You can delete a member using the clu_delete_member command or use the clu_quorum command with the "-e" option.

For example, say a lightning storm hits a building containing a cluster causing 50% of the cluster members to crash. Due to the severity of the storm, the power transformer that provides power to these members stops functioning, causing the members to be down for an indeterminate period of time.

The cluster contains eight voting members and is configured with a one-vote quorum disk. Given this configuration, the CEV value would be 9, which means that the number of votes required for quorum would be 5. Since 50% of the cluster members crashed, the cluster's current votes value is 5 (4 voting members and the quorum disk).

If there were one more failure, the cluster would lose quorum. Since the members that are down will not have power for a while, we will want to adjust the CEV value to increase the cluster's availability by lowering the number of members required to form the cluster.

You can adjust the cluster_expected_votes attribute (and the cluster's current expected votes value) by using the clu_quorum command with the "-e" option.

clu_quorum -e #votes

The #votes parameter cannot be set to any value that will cause the cluster to lose quorum.

# clu_quorum -e 12
*** Error ***
The requested expected vote adjustment would result in an expected votes value of '12'. This value would cause all members of the current cluster configuration to lose quorum.

The #votes parameter cannot be set to any value lower than the current votes value minus one. This would increase the potential to partition the cluster, which is not good.

In our example, the current votes value is 5, so anything lower than 4 is illegal.

# clu_quorum -e 3
*** Error ***
The requested expected vote adjustment would result in an illegal expected votes value of '3'. This value is too low.

The #votes parameter cannot be set to a value of zero.

# clu_quorum -e 0
*** Error ***
Expected Votes cannot be set to 0 (Zero).

The #votes parameter can generally be set to the current votes value plus or minus one, provided that quorum would not be lost. We will set expected votes to the current votes value.

# clu_quorum -e 5
Collecting quorum data for Member(s): 1 2 3 4 5 6 7 8
*** Error ***
One or more cluster members are DOWN. You may use the -f option to force the quorum operation.

Since some members are down, we will need to use the "-f" option.

# clu_quorum -f -e 5
Collecting quorum data for Member(s): 1 2 3 4 5 6 7 8
Expected vote successfully adjusted.
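The three restrictions on the "-e" value can be modeled in a short Python sketch. This is an illustration of the rules as described in this section, not the logic inside clu_quorum. It assumes the usual TruCluster formula for quorum votes, round_down((expected votes + 2) / 2), which matches the figures used throughout this section (3 gives 2, 4 gives 3, 9 gives 5). The function names and return strings are hypothetical.

```python
def quorum_votes(expected_votes):
    # Assumed formula: round_down((expected_votes + 2) / 2)
    return (expected_votes + 2) // 2


def check_expected_votes(requested, current_votes):
    """Classify a requested expected-votes value using the rules in the text."""
    if requested == 0:
        return "zero"            # expected votes cannot be set to zero
    if requested < current_votes - 1:
        return "too low"         # lower than current votes minus one
    if quorum_votes(requested) > current_votes:
        return "loses quorum"    # current votes could not satisfy the new quorum
    return "ok"


# The storm example: eight members and a quorum disk, four members down.
current = 5
for requested in (12, 3, 0, 5):
    print(requested, check_expected_votes(requested, current))
```

Under the assumed formula, setting expected votes to 5 lowers quorum votes from 5 to 3, so the surviving members could then lose two more votes before quorum is lost.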

As previously noted, the clu_quorum command modifies the cluster_expected_votes value in each member's sysconfigtab file as well as the /etc/sysconfigtab.cluster file (a cluster-common file).

17.8.3 Managing the Quorum Disk

We've mentioned previously that adding a quorum disk is a way to increase the availability of your cluster by adding a vote to the cluster's current votes value. We also mentioned that the quorum disk should be used only in a cluster that has an even number of members.

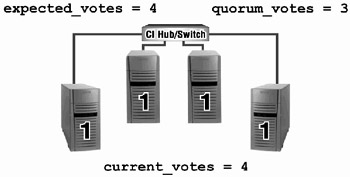

How does this increase the cluster's availability? Let's see. Figure 17-13 shows a four-member cluster that is configured without a quorum disk.

Figure 17-13: Four-Member Cluster without a Quorum Disk

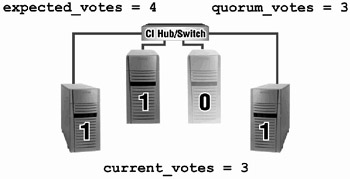

If we were to lose a member, would we lose quorum? Since a picture is worth a thousand words, let's see what Figure 17-14 has to say.

Figure 17-14: Four-Member Cluster without a Quorum Disk – One Member Down

So far, so good. One member is down, but since the current votes is equal to the quorum votes, the cluster will continue. Now, let's take another member down.

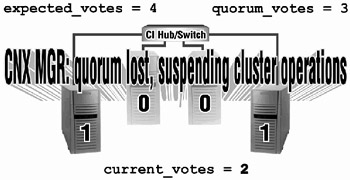

We have lost quorum, and therefore the cluster is suspended (See Figure 17-15).

Figure 17-15: Four-Member Cluster without a Quorum Disk – Two Members Down

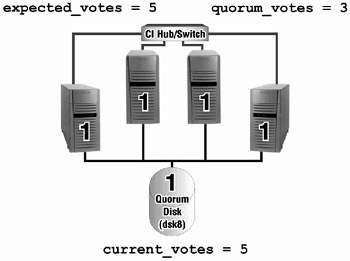

Okay, so let's try this again, this time with a quorum disk. We'll start with Figure 17-16.

Figure 17-16: Four-Member Cluster with a Quorum Disk

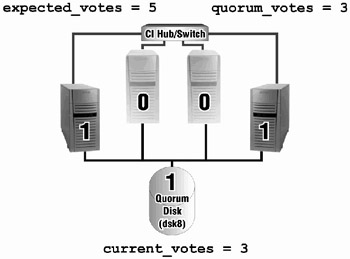

What's the difference? Well, both the cluster's expected votes and the current votes have increased, but the quorum votes remain the same. You can probably see where this is going. Let's fast-forward to the same scenario that we had before: two members down. Will we lose quorum this time? We submit Figure 17-17 for your perusal.

Figure 17-17: Four-Member Cluster with a Quorum Disk – Two Members Down

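We do not lose quorum this time: with two members down, the two remaining members and the quorum disk still contribute three votes, which equals the quorum votes. A cluster with an even number of members has higher availability with a quorum disk than without one.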
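The arithmetic behind the figures can be reproduced with a small Python sketch. It assumes one vote per member, a quorum disk that stays available, and the usual TruCluster formula for quorum votes, round_down((expected votes + 2) / 2), which is consistent with the numbers in this chapter. The function names are hypothetical.

```python
def quorum_votes(expected_votes):
    # Assumed formula: round_down((expected_votes + 2) / 2)
    return (expected_votes + 2) // 2


def tolerated_member_failures(voting_members, qdisk_votes=0):
    """Number of one-vote members that can fail before quorum is lost."""
    expected = voting_members + qdisk_votes
    return expected - quorum_votes(expected)


# Columns: members, failures tolerated without a quorum disk, with a one-vote quorum disk.
for members in range(2, 6):
    print(members,
          tolerated_member_failures(members),
          tolerated_member_failures(members, qdisk_votes=1))
```

For two and four members the quorum disk adds one tolerated failure; for three and five members it adds none, which is why the quorum disk vote is recommended only for clusters with an even number of voting members.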

Besides the quorum disk's adding another vote to a cluster with an even number of members, it also acts as a tiebreaker to resolve a cluster partition (see section 17.4) in the event that there is a cluster interconnect communication failure.

It is also interesting to note that due to the type of information contained on the cnx partition of the quorum disk (see section 17.8.5.1), and the way the CNX identifies and manages the quorum disk, various cluster interconnect communication failures can be diagnosed because cluster members can "sense" something wrong from the markings etched into the mysterious qdisk idol by our cluster's nemesis, the split-brained, anti-cluster from the depths of… scratch that – for some reason the author thought that he was writing a new sci-fi-adventure-mystery novel for a brief moment. Medication has been administered. We now return you to "Managing the Quorum Disk", already in progress…

…various cluster interconnect communication failures can be diagnosed because cluster members can "sense" something wrong by the data written on the quorum disk by some other cluster.

Before the cluster can add the quorum disk's vote to the total current votes toward quorum, the quorum disk must be trusted.

A quorum disk is trusted if all the following conditions are true:

- The member attempting to claim the disk for the cluster has direct physical access to the disk.
  Note: this is an important reason why the quorum disk should be a disk on a bus that is accessible directly by every member in the cluster.
- The member can read from and write to the disk.
- The member can either claim ownership of the quorum disk, or is a member of a cluster that has already claimed the quorum disk.

You can determine whether or not the quorum disk is trusted from any given member by examining the cnx:qdisk_trusted attribute on that member.

[sheridan] # sysconfig -q cnx qdisk_trusted
cnx:
qdisk_trusted = 1

As the previous output demonstrates, sheridan trusts the quorum disk.

Any member can claim the quorum disk for the cluster, but only one member will claim it at a time. If a CNX event occurs that causes a cluster state transition to happen, the quorum disk will be reclaimed, which could result in a different member claiming the disk for the cluster.

Approximately every five seconds, every cluster member's qdisk thread will read from and write to the quorum disk to make sure the quorum disk is still accessible and functioning as a quorum disk for this cluster.

Despite the fact that the CNX uses a very small portion of the quorum disk (exactly 1MB located on the "h" partition), this disk should not contain any data on it that you wish to keep. No I/O barriers are placed on the quorum disk; therefore any node can write to the disk. Consequently, it is possible that the data on the disk could be corrupted.

For more information on I/O barriers, see section 15.1.3.

17.8.3.1 How to Add the Quorum Disk

You can add a quorum disk to the cluster using the clu_quorum command with the "-d add" option.

clu_quorum -d add disk_name #votes

The arguments are the disk's device special file name and the number of votes. You can only input 0 or 1 as the #votes value.

# clu_quorum -d add dsk4 1
Collecting quorum data for Member(s): 1 2
Initializing cnx partition on quorum disk : dsk4h
Quorum disk successfully created.

Once the quorum disk is added to the cluster, the CNX quorum disk thread is started.

# cnxthreads | grep qdisk
[12] "qdisk tick delay" - qdisk t

| Note | The disk does not need to have a disk label on it, since the clu_quorum command will label the disk. The clu_quorum command will let you know there is no label but will continue without a problem. *** Info *** Disk available but has no label: dsk4 |

17.8.3.1.1 Adding a Quorum Disk with Zero Votes

You may be wondering why you would want to add a quorum disk without a vote. One reason is to reserve the disk for use as a quorum disk before actually using it. If you currently have a cluster with an odd number of members, adding a quorum disk with a vote would not be a good idea, so to avoid creating a situation where the quorum disk could hurt instead of help, you can add it with a 0 vote value.

How can it hurt? Consider a three-member cluster. The cluster's expected votes value is 3, which would make quorum votes equal to 2. How many members can fail and still maintain quorum? Only one.

If you add a quorum disk with 1 vote to the three-member cluster, expected votes is increased to 4, which will increase quorum votes to 3. How many members can fail now? Only one. So, how have we hurt ourselves? By adding another potential point of failure.

17.8.3.1.2 Adding a Quorum Disk without Equal Connectivity

If you try to add a quorum disk using a disk that does not have equal connectivity to all members, the clu_quorum command will warn you that this action can lead to madness (or at least quorum loss).

# clu_quorum -d add dsk4 1
Collecting quorum data for Member(s): 1 2
All members do not have direct access to the specified quorum disk: dsk4
Members without direct access to the quorum disk may be more likely to lose quorum than those members with direct access to the quorum disk.
Do you want to continue with this operation? [yes]:

Despite the fact that the TruCluster Server Cluster Administration Guide merely states that the quorum disk should be located on a shared bus, we believe that placing the quorum disk anywhere but on a shared bus that is connected to all members is not a good idea at all!

17.8.3.1.3 Adding a Quorum Disk with Existing Data

As of this writing, adding a quorum disk (with existing data) to a cluster is not supported. As stated earlier, no I/O barriers are placed on the quorum disk, and therefore the data on the disk could be overwritten or corrupted. As a safety mechanism, the clu_quorum command will return an error if you attempt to add a disk that has an existing in-use partition.

If the proposed quorum disk contains an AdvFS file system on a partition, the clu_quorum command will return the following error (even if the fileset is not mounted):

# clu_quorum -d add dsk6 1
*** Error ***
Disk 'dsk6' has a least one partition that is in use.
The partition in use is open as an Advfs Domain.
*** Error ***
Cannot configure quorum disk.

If the proposed quorum disk contains an LSM partition and LSM is configured, the clu_quorum command will return the following error:

# clu_quorum -d add dsk6 1
*** Error ***
Disk 'dsk6' has a least one partition that is in use.
The partition in use is open as an LSM volume.
*** Error ***
Cannot configure quorum disk.

| Warning | If LSM is not in use on the cluster, the clu_quorum command will add the disk as the quorum disk. It will replace the existing disk label with a new one, and no error will be given. |

If the proposed quorum disk contains a UFS file system on a partition, and the file system is mounted, the clu_quorum command will return the following error:

# clu_quorum -d add dsk6 1
*** Error ***
Disk 'dsk6' has a least one partition that is in use.
The partition in use is open as a UFS Filesystem.
*** Error ***
Cannot configure quorum disk.

| Warning | If the UFS file system is not mounted, the clu_quorum command will add the disk as the quorum disk. It will replace the existing disk label with a new one, and no error will be given. |

17.8.3.2 How to Remove the Quorum Disk

If you have a quorum disk but wish to remove it, the clu_quorum command with the "-d remove" option should do the trick.

# clu_quorum -d remove
Collecting quorum data for Member(s): 1 2
Quorum disk successfully removed.

What reason could you have for removing the quorum disk? If you add or remove a member from the cluster resulting in an odd number of members, you should remove the quorum disk or at least adjust its votes to a value of zero.

Once the quorum disk is removed from the cluster, the CNX quorum disk thread is stopped. We can verify this by running the cnxthreads program that we wrote and searching its output for "qdisk" with grep. No output should be displayed.

# cnxthreads | grep qdisk

17.8.3.3 How to Adjust the Quorum Disk Votes

If you have a quorum disk and need to shut down a member of the cluster for an extended period of time but do not want to remove the quorum disk, you can use the clu_quorum command with the "-d adjust" option.

clu_quorum -d adjust #votes

Set the #votes value to zero.

# clu_quorum -d adjust 0
Collecting quorum data for Member(s): 1 2
Quorum disk votes successfully adjusted.

Once the member is returned to the cluster, you can restore the quorum disk vote by repeating the command and changing the #votes value from 0 to 1.

# clu_quorum -d adjust 1
Collecting quorum data for Member(s): 1 2
Quorum disk votes successfully adjusted.

17.8.3.4 How to Replace a Failed Quorum Disk

Replacement of a failed quorum disk is covered in Chapter 22, section 22.6.

17.8.4 Using the clu_quorum Command When a Member Is Unavailable

Since the clu_quorum command modifies every member's sysconfigtab file, which is located in /.local../boot_partition/etc, it stands to reason that every member's boot_partition needs to be mounted so that the clubase subsystem attributes can be modified. This is just one important reason why member boot disks should be on a bus that is directly accessible by all members.

For example, if you use the clu_quorum command to modify votes or add/remove the quorum disk, you will receive an error if a member is down.

# clu_quorum -d add dsk4 1
Collecting quorum data for Member(s): 1
*** Warning ***
Cannot access member 1's member specific /etc/sysconfigtab file.
2
*** Error ***
One or more cluster members are DOWN. You may use the -f option to force the quorum operation.

If the disk is on a shared bus, then you can use the "-f" option.

# clu_quorum -f -d add dsk4 1
Collecting quorum data for Member(s): 1 2
Initializing cnx partition on quorum disk : dsk4h
Adding the quorum disk could cause a temporary loss of quorum until the disk becomes trusted.
Do you want to continue with this operation? [yes]:
Quorum disk successfully created.

If the down node's boot disk is not on a bus that a cluster member can mount, then the previous command will fail even with the "-f" switch.

17.8.5 Managing the cnx Partition

The cnx partition can be managed with the Cluster Boot Disk Manager (clu_bdmgr(8)) command although this should rarely be needed.

The cnx partition is updated automatically when a volume is added to the cluster_root domain, when the cluster_root domain is brought under LSM control, and when the quorum disk is added or removed. In fact, the only time that the system administrator needs to take direct action to manage a cnx partition is when a member boot disk fails.

The clu_bdmgr command with the "-c" option can be used to prepare a boot disk. The command will create an AdvFS file system on the "a" partition. It will also create a cnx partition on the "h" partition but it will not configure it.

# clu_bdmgr -c dsk6 2
Error: Disk 'dsk6' has at least one partition that is in use.
The partition in use is open as an Advfs Domain.
Domain root2_domain already exists for member 2.
If you continue this domain will be removed and a new domain, of the same name, will be created.
Do you want to continue creating this boot disk? [yes]:
rmfdmn: domain root2_domain removed.
Creating AdvFS domains:
Creating AdvFS domain 'root2_domain#root' on partition '/dev/disk/dsk6a'.

As you can see from the previous output, the file system is made. The cnx partition is also created but not configured, as the disklabel(8) and clu_bdmgr commands that follow show. The clu_bdmgr command with the "-d" option is used to retrieve the configuration information from a cnx partition.

# disklabel -r dsk6 | grep cnx
h: 2048 17771476 cnx # 5289*- 5289*

# clu_bdmgr -d dsk6
Bad magic number for ADVFS
*** Error ***
Cannot produce cnx configuration file for device /dev/disk/dsk6h
Failure to process request

If a member boot disk fails, the cnx partition must be restored. The good news is that the cnx partition configuration is saved to a file on the boot_partition every time the member boots. The clu_max startup script, which is executed at runlevel 3, calls the clu_bdmgr command with the "-b" option to save the cnx partition configuration information to the /.local../boot_partition/etc/clu_bdmgr.conf file.

To restore the cnx partition from the configuration file, use the clu_bdmgr command with the "-h" option.

# clu_bdmgr -h dsk6 /.local../boot_partition/etc/clu_bdmgr.conf

Since the cnx partition on every member's boot disk is identical (as of this writing), we used the clu_bdmgr.conf file from the member that we're logged into to restore the cnx partition instead of using the member's restored boot_partition.

Once the cnx partition is restored, the "clu_bdmgr -d" command will return the configuration information.

# clu_bdmgr -d dsk6
# clu_bdmgr configuration file
# DO NOT EDIT THIS FILE
::TYP:m:CFS:/dev/vol/cluster_rootvol:LSM:30,/dev/disk/dsk5h|simp,/dev/disk/dsk6g|simp,/dev/disk/dsk5g|simp::

The output from "clu_bdmgr -d" shows three of the four field identifiers that are defined in the clupt.h file in /sys/include/sys.

# grep -E "[A-Z] field id" /sys/include/sys/clupt.h
#define CLUP_TYP_NAME "TYP:" /* TYP field identifier+separator */
#define CLUP_LSM_NAME "LSM:" /* LSM field identifier+separator */
#define CLUP_CFS_NAME "CFS:" /* CFS field identifier+separator */
#define CLUP_CNX_NAME "CNX:" /* CNX field identifier+separator */

The "TYP" field indicates that this is a member boot disk as indicated by the "m" (a "q" represents a quorum disk). The "CFS" field indicates the volumes in the cluster_root domain. The last field is the "LSM" field, which lists the sequence number (30), the devices, and their LSM disk type (/dev/disk/dsk5h|simp, /dev/disk/dsk6g|simp, and /dev/disk/dsk5g|simp).
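To make the field layout concrete, here is a minimal Python sketch that splits the configuration line shown above into its fields. It is not a TruCluster tool: the function names are hypothetical, and the parsing rules (fields introduced by an identifier plus colon, LSM entries separated by commas, device and disk type separated by "|") are inferred from this single sample line and may not hold for every configuration.

```python
import re

# Field identifiers taken from the clupt.h excerpt above.
FIELD_IDS = ("TYP", "CFS", "LSM", "CNX")


def parse_cnx_config(line):
    """Split one configuration line into a {field id: raw value} dict."""
    body = line.strip().strip(":")
    # The capturing group keeps each identifier in the split result:
    # ['', 'TYP', 'm:', 'CFS', '/dev/vol/...:', 'LSM', '30,...']
    parts = re.split(r"(%s):" % "|".join(FIELD_IDS), body)
    return {name: value.strip(":") for name, value in zip(parts[1::2], parts[2::2])}


def parse_lsm(value):
    """Return (sequence number, [(device, disk type), ...]) from the LSM field."""
    seq, *devices = value.split(",")
    return int(seq), [tuple(dev.split("|")) for dev in devices]


LINE = ("::TYP:m:CFS:/dev/vol/cluster_rootvol:LSM:30,/dev/disk/dsk5h|simp,"
        "/dev/disk/dsk6g|simp,/dev/disk/dsk5g|simp::")

fields = parse_cnx_config(LINE)
seq, devices = parse_lsm(fields["LSM"])
print(fields["TYP"], fields["CFS"], seq)
for device, disk_type in devices:
    print(device, disk_type)
```

The file itself is marked DO NOT EDIT, so a parser like this should only ever read the output of "clu_bdmgr -d" and never write it back.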

| Note | The clu_quorum command stores the quorum disk's cnx partition configuration information in the clu_quorum_qdisk file in the /cluster/admin directory. |

We will cover how to restore a member's boot disk in much more detail in Chapter 22. For additional information on the clu_bdmgr command, see the clu_bdmgr(8) reference page.

17.8.5.1 What's on the cnx Partition?

Before digging into the depths of the cnx partition, it should be noted that not much documentation exists on the subject. This is by design because most of the information is not generally useful for managing your cluster on a daily basis, although it has been brought to our attention that in rare instances it can be useful in troubleshooting some cluster problems related to quorum. Finally, you should be aware that the information presented here is only valid as of this writing since the cluster engineers could choose to modify the layout of the cnx partition in a future TruCluster Server release.

So, why bother presenting the information at all? Well, many, many system administrators, students, and support personnel have requested more information about the cnx partition.

According to the clupt.h header file located in the /sys/include/sys directory, the cnx partition is currently split into three sections:

Both the quorum and member divisions are divided into five segments:

Quorum Division (0 - 255K)

The quorum division is used only on a quorum disk while the member division is used only on a member disk. The quorum segment is not used on the member division. The PIT section does not appear to be used in either division as of this writing.

We wrote a little program (cnxread) to check out the information contained on the cnx partition to see what information is actually stored on the disk. We will use it to illustrate the content of the cnx partition on both the quorum and member disks.

17.8.5.1.1 The Quorum Segment

The quorum segment contains two sections:

- Cluster and Owner Information Section
- Activity Section

The Cluster and Owner Information Section contains information regarding the cluster and member currently claiming ownership of the quorum disk. This information includes:

- Cluster Information
  - The cluster's name
  - The cluster incarnation number
  - The cluster founder's CSID
  - The number of members in the cluster
  - The number of votes the quorum disk has
  - The current number of votes the cluster has
  - The number of votes required for the cluster to reach quorum
  - A flag indicating whether or not the cluster has quorum
- Owner Information
  - The owner's CSID
  - The owner's incarnation
  - The owner's name
  - The owner's member ID
  - The time that the node claimed ownership of the disk
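The vote counts and the quorum flag in this section are related by simple arithmetic. The following is a minimal sketch, assuming the formula given in the TruCluster Server documentation (quorum votes = round_down((expected votes + 2) / 2)). The function names are ours and exist only for illustration; they are not part of any cluster interface.

```python
def quorum_votes(expected_votes: int) -> int:
    """Votes needed for quorum, assuming round_down((expected + 2) / 2)."""
    return (expected_votes + 2) // 2


def has_quorum(current_votes: int, expected_votes: int) -> bool:
    """True when the current votes meet or exceed the quorum votes."""
    return current_votes >= quorum_votes(expected_votes)


# Our cluster: two one-vote members plus a one-vote quorum disk.
print(quorum_votes(3))    # votes required with 3 expected votes
print(has_quorum(3, 3))   # all three votes present
print(has_quorum(1, 3))   # a lone member without the quorum disk
```

With three expected votes the sketch yields two quorum votes, which agrees with the values that clu_quorum reports for our cluster.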

The Activity Section contains information regarding the last time the quorum disk was accessed and by which member. The information contained in the Activity Section includes:

- The writer's CSID
- The writer's incarnation
- The writer's name
- The writer's member ID
- The activity count
- The time the last write was performed

The quorum disk thread on each member attempts to access the quorum disk approximately every five seconds.

Let's see what's on our quorum disk.

    # cnxread dsk4

    The CNX Partition info for /dev/rdisk/dsk4h.
    This is the quorum disk.

    Quorum Section Information:
      babylon5 (CSID: 0x10001, incarn: 0x6478c)

      # Members - Current Votes - Quorum Votes - Qdisk Votes
      ---------   -------------   ------------   -----------
          2             3               2              1

      Qdisk claimed on 20020110 at 01:20:19 by member:
        sheridan (CSID: 0x10002, incarn: 0x7bd63, memberid: 2)
      Qdisk written on 20020110 at 04:41:04 by member:
        molari (CSID: 0x20001, incarn: 0x92f6a, memberid: 1)

      Activity count = 3883

Notice that sheridan claimed the quorum disk but molari was the last member to write to the disk. If we rerun the program, there is an equal chance that either member may be the last writer as all members write to the quorum disk. For example, here is the output from another run of the program where sheridan is the writer.

    # cnxread dsk4

    The CNX Partition info for /dev/rdisk/dsk4h.
    This is the quorum disk.

    Quorum Section Information:
      babylon5 (CSID: 0x10001, incarn: 0x6478c)

      # Members - Current Votes - Quorum Votes - Qdisk Votes
      ---------   -------------   ------------   -----------
          2             3               2              1

      Qdisk claimed on 20020110 at 01:20:19 by member:
        sheridan(CSID: 0x10002, incarn: 0x7bd63, memberid: 2)
      Qdisk written on 20020110 at 04:41:09 by member:
        sheridan(CSID: 0x10002, incarn: 0x7bd63, memberid: 2)

      Activity count = 3884
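To compare two runs without reading the output by eye, the claim and write records can be pulled out with a short script. This is a sketch only: it assumes the output format shown in the two runs above (the line breaks in the embedded samples are ours), and the function name parse_quorum_section is hypothetical.

```python
import re
from datetime import datetime

# Excerpts from the two cnxread runs shown above.
RUN_1 = """\
Qdisk claimed on 20020110 at 01:20:19 by member:
  sheridan (CSID: 0x10002, incarn: 0x7bd63, memberid: 2)
Qdisk written on 20020110 at 04:41:04 by member:
  molari (CSID: 0x20001, incarn: 0x92f6a, memberid: 1)
Activity count = 3883
"""
RUN_2 = """\
Qdisk claimed on 20020110 at 01:20:19 by member:
  sheridan(CSID: 0x10002, incarn: 0x7bd63, memberid: 2)
Qdisk written on 20020110 at 04:41:09 by member:
  sheridan(CSID: 0x10002, incarn: 0x7bd63, memberid: 2)
Activity count = 3884
"""

EVENT = re.compile(
    r"Qdisk (claimed|written) on (\d{8}) at (\d{2}:\d{2}:\d{2}) by member:\s*"
    r"(\w+)\s*\(CSID: (0x[0-9a-f]+), incarn: (0x[0-9a-f]+), memberid: (\d+)\)"
)
COUNT = re.compile(r"Activity count = (\d+)")


def parse_quorum_section(text):
    """Return the claim record, the last write record, and the activity count."""
    info = {}
    for kind, day, clock, name, csid, incarn, member_id in EVENT.findall(text):
        info[kind] = {
            "when": datetime.strptime(f"{day} {clock}", "%Y%m%d %H:%M:%S"),
            "name": name,
            "csid": int(csid, 16),
            "incarn": int(incarn, 16),
            "memberid": int(member_id),
        }
    info["activity"] = int(COUNT.search(text).group(1))
    return info


first = parse_quorum_section(RUN_1)
second = parse_quorum_section(RUN_2)
elapsed = (second["written"]["when"] - first["written"]["when"]).total_seconds()

print("owner:", first["claimed"]["name"])
print("writers:", first["written"]["name"], "->", second["written"]["name"])
print("seconds between writes:", elapsed)
print("activity count change:", second["activity"] - first["activity"])
```

For the two runs above, the last write moved from molari to sheridan, the writes are five seconds apart, and the activity count advanced by one, which fits the roughly five-second access interval of the quorum disk thread.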

We will now use cnxread to dump the quorum section of the cnx partition on molari's member boot disk. By default, the cnxread program does not show the quorum section, since a member disk's quorum section is not used, but the "-q" option instructs the program to output it.

    # cnxread -q dsk2

    The CNX Partition info for /dev/rdisk/dsk2h.
    This is a member boot disk.

    Quorum Section Information:
      nada(CSID: 0, incarn: 0)

      # Members - Current Votes - Quorum Votes - Qdisk Votes
      ---------   -------------   ------------   -----------
          0             0               0              0

      Qdisk claimed on 19691232 at 19:00:00 by member:
        nobody(CSID: 0, incarn: 0, memberid: 0)
      Qdisk written on 19691232 at 19:00:00 by member:
        nobody(CSID: 0, incarn: 0, memberid: 0)

      Activity count = 0
    ...

The quorum section contains nothing of interest on a member disk.

17.8.5.1.2 Partition in Time (PIT) Segment

As of this writing, the PIT segment has been reserved for future use.

17.8.5.1.3 AdvFS cluster_root Devices Segment

This segment is defined in the clupt.h file as containing the following information:

-

A magic number

-

A data sequence number

-

The number of devices in the cluster_root domain

-

An array of device IDs

Using the cnxread program to look at a member's boot disk cnx partition, we can see that the cluster_root domain has only one device.

    # cnxread dsk2

    The CNX Partition info for /dev/rdisk/dsk2h.
    This is a member boot disk.

    Cluster Root Filesystem (AdvFS) Segment Information
      Magic #: 0x42534, Sequence #: 88

                maj,min
                --- ---
      Devices:
         [ 1]:   19, 52

We can quickly determine which device has the major/minor numbers "19, 52" by using the following command:

    # ls -l /dev/disk/* | grep "19, 52"
    brw-------   1 root     system    19, 52 Nov 27 03:59 /dev/disk/dsk1b
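The same lookup can be scripted instead of matching on ls output. The following is a minimal sketch in Python, assuming an interpreter is available on the member; the function name find_device_nodes is ours. It reads the device numbers directly from each special file.

```python
import os
import stat


def find_device_nodes(directory, major, minor):
    """Return the special files in directory that have the given device numbers."""
    matches = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        try:
            st = os.stat(path)
        except OSError:
            continue  # dangling link or unreadable entry
        # Only block and character special files carry device numbers.
        if not (stat.S_ISBLK(st.st_mode) or stat.S_ISCHR(st.st_mode)):
            continue
        if (os.major(st.st_rdev), os.minor(st.st_rdev)) == (major, minor):
            matches.append(path)
    return matches


if __name__ == "__main__":
    # On our cluster we would expect this to list /dev/disk/dsk1b.
    if os.path.isdir("/dev/disk"):
        print(find_device_nodes("/dev/disk", 19, 52))
```

Comparing the numbers rather than the text avoids depending on how ls pads the major and minor columns.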

Let's temporarily add a device to the cluster_root domain.

    # addvol /dev/disk/dsk6g cluster_root

    # cnxread dsk2

    The CNX Partition info for /dev/rdisk/dsk2h.
    This is a member boot disk.

    Cluster Root Filesystem (AdvFS) Segment Information
      Magic #: 0x42534, Sequence #: 90

                maj,min
                --- ---
      Devices:
         [ 1]:   19,142
         [ 2]:   19, 52

The second device is also shown.
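The change between the two dumps can also be computed rather than read off. This sketch assumes the cnxread output format shown above (the line breaks in the embedded samples are ours), and the function name parse_root_devices is hypothetical.

```python
import re

# AdvFS segment excerpts from before and after the addvol command.
BEFORE = """\
Magic #: 0x42534, Sequence #: 88
maj,min
Devices:
[ 1]: 19, 52
"""
AFTER = """\
Magic #: 0x42534, Sequence #: 90
maj,min
Devices:
[ 1]: 19,142
[ 2]: 19, 52
"""

SEQUENCE = re.compile(r"Sequence #: (\d+)")
DEVICE = re.compile(r"\[\s*(\d+)\]:\s*(\d+),\s*(\d+)")


def parse_root_devices(text):
    """Return the sequence number and the set of (major, minor) pairs."""
    sequence = int(SEQUENCE.search(text).group(1))
    devices = {(int(maj), int(mnr)) for _, maj, mnr in DEVICE.findall(text)}
    return sequence, devices


seq_before, dev_before = parse_root_devices(BEFORE)
seq_after, dev_after = parse_root_devices(AFTER)

print("sequence:", seq_before, "->", seq_after)
print("added:", sorted(dev_after - dev_before))
```

For the dumps above, the only new entry is major 19, minor 142, and the sequence number moved from 88 to 90.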

17.8.5.1.4 CLSM cluster_root Devices Segment

The CLSM segment is similar to the AdvFS segment in that it contains device information. This segment contains:

-

A magic number

-

A sequence number

-

The LSM (or Veritas) version number

-

Number of devices

-

An array of device information (device name, type, ID)

Here is the cnxread output for a member boot disk's cnx partition in a cluster that uses an LSM volume for the cluster_root device. For more information on using LSM in a cluster, see Chapter 14.

    # cnxread -l dsk2

    The CNX Partition info for /dev/rdisk/dsk2h.
    This is a member boot disk.

    Cluster Root Filesystem (AdvFS) Segment Information
      Magic #: 0x42534, Sequence #: 116

                maj,min
                --- ---
      Devices:
         [ 1]:   40,  5

    CLSM Segment Information
      Magic #: 0x46185, Sequence #: 22, Version 3.1

                maj,min
                --- ---
      Devices:
         [ 1]:   19,128   dsk5h (simp)
         [ 2]:   19,142   dsk6g (simp)
         [ 3]:   19,126   dsk5g (simp)

In our cluster, dsk5g and dsk6g are the two plexes in a mirrored volume that are used for cluster_root. The first device listed (dsk5h) is the private region disk in the rootdg. We are not exactly sure why it shows up because it is not in the cluster_root volume, but it does appear that any device in the rootdg will show up in the output.

    # volprint -g rootdg
    TY NAME               ASSOC              KSTATE  LENGTH  PLOFFS STATE  TUTIL0 PUTIL0
    dg rootdg             rootdg             -       -       -      -      -      -

    dm clu_mroot          dsk5g              -       8686058 -      -      -      -
    dm clu_root           dsk6g              -       8686058 -      -      -      -
    dm dsk5h              dsk5h              -       8686058 -      -      -      -

    v  cluster_rootvol    cluroot            ENABLED 1048576 -      ACTIVE -      -
    pl cluster_rootvol-01 cluster_rootvol    ENABLED 1048576 -      ACTIVE -      -
    sd clu_mroot-01       cluster_rootvol-01 ENABLED 1048576 0      -      -      -
    pl cluster_rootvol-02 cluster_rootvol    ENABLED 1048576 -      ACTIVE -      -
    sd clu_root-01        cluster_rootvol-02 ENABLED 1048576 0      -      -      -
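The observation that dsk5h belongs to rootdg but not to the cluster_root volume can be checked mechanically from the volprint output. The sketch below is ours and rests on one assumption that we label in the code: subdisk names follow the "disk media name plus -NN" pattern seen in the listing above. The function name classify_disks is hypothetical.

```python
# The volprint records shown above, with whitespace collapsed.
VOLPRINT = """\
TY NAME ASSOC KSTATE LENGTH PLOFFS STATE TUTIL0 PUTIL0
dg rootdg rootdg - - - - - -
dm clu_mroot dsk5g - 8686058 - - - -
dm clu_root dsk6g - 8686058 - - - -
dm dsk5h dsk5h - 8686058 - - - -
v cluster_rootvol cluroot ENABLED 1048576 - ACTIVE - -
pl cluster_rootvol-01 cluster_rootvol ENABLED 1048576 - ACTIVE - -
sd clu_mroot-01 cluster_rootvol-01 ENABLED 1048576 0 - - -
pl cluster_rootvol-02 cluster_rootvol ENABLED 1048576 - ACTIVE - -
sd clu_root-01 cluster_rootvol-02 ENABLED 1048576 0 - - -
"""


def classify_disks(text, volume):
    """Split disk group devices into those backing the volume and the rest."""
    disks = {}      # disk media name -> device name
    plexes = set()  # plexes that belong to the volume
    subdisks = []   # (subdisk name, plex name)
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        rec_type, name, assoc = fields[:3]
        if rec_type == "dm":
            disks[name] = assoc
        elif rec_type == "pl" and assoc == volume:
            plexes.add(name)
        elif rec_type == "sd":
            subdisks.append((name, assoc))
    used = set()
    for sd_name, plex in subdisks:
        # Assumption: a subdisk is named "<disk media name>-NN".
        media = sd_name.rsplit("-", 1)[0]
        if plex in plexes and media in disks:
            used.add(disks[media])
    return used, set(disks.values()) - used


used, unused = classify_disks(VOLPRINT, "cluster_rootvol")
print("backing cluster_rootvol:", sorted(used))
print("in rootdg only:", sorted(unused))
```

For our rootdg, dsk5g and dsk6g back the volume, while dsk5h is a member of the disk group only, which is consistent with every rootdg device appearing in the CLSM segment.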
