17.2 The CNX Architecture

The CNX Architecture is composed of several components:

- The CNX kernel threads.
- The Cluster System Block (CSB).
- The Quorum Disk (which includes a cnx partition).
- A cnx partition on each member's boot disk.
- The clubase and cnx subsystems.
- The sysconfigtab and sysconfigtab.cluster files where the clubase attribute values are stored.

We will begin this section with an overview of how CNX communication takes place in a cluster.

17.2.1 CNX Communication

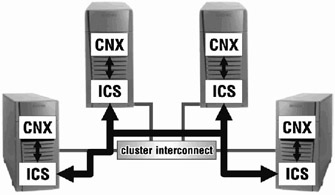

Every system configured to be a cluster member has a CNX that communicates (or attempts to communicate) with the CNX on every other system connected to the cluster interconnect. The CNX uses the Internode Communication Subsystem (ICS)[2] as its communications interface to the CNX on other members, as shown in Figure 17-1.

Figure 17-1: CNX - ICS Communication

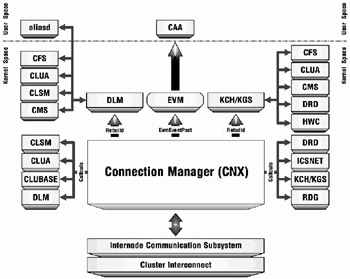

In addition to communicating with the CNX on other systems, the CNX is also responsible for communicating with other cluster subsystems. The CNX uses three primary methods:

- Posting events using the Event Manager (EVM).
- Dispatching callouts to those cluster subsystems that registered routines with the CNX.
- Rebuilding the Distributed Lock Manager (DLM)[2] and Kernel Group Services (KGS)[3].

Figure 17-2 illustrates the communication flow from the CNX to the various cluster subsystems.

Figure 17-2: CNX Cluster Subsystem Communication

The CNX notifies the Cluster Application Availability (CAA) daemon via EVM events. The CAA daemon (caad(8)) subscribes to four specific CNX events:

- sys.unix.clu.cnx.member.join
- sys.unix.clu.cnx.member.leave
- sys.unix.clu.cnx.quorum.gain
- sys.unix.clu.cnx.quorum.loss
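To illustrate what a subscriber does with these four events, the following Korn shell sketch maps each event name to the state change it reports. The function name cnx_event_meaning is hypothetical, caad is not implemented this way, and on a live system the event names would be delivered by EVM rather than listed in a loop as they are here.

```sh
# Hypothetical sketch: translate the four CNX EVM event names that caad
# subscribes to into a short description of the state change.
# Any other event name is reported as ignored and returns non-zero.
cnx_event_meaning() {
    case "$1" in
        sys.unix.clu.cnx.member.join)  echo "member joined" ;;
        sys.unix.clu.cnx.member.leave) echo "member left" ;;
        sys.unix.clu.cnx.quorum.gain)  echo "quorum gained" ;;
        sys.unix.clu.cnx.quorum.loss)  echo "quorum lost" ;;
        *)                             echo "ignored"; return 1 ;;
    esac
}

# Usage: process event names as they arrive (here, a fixed list).
for ev in sys.unix.clu.cnx.member.join sys.unix.clu.cnx.quorum.loss; do
    echo "$ev: $(cnx_event_meaning "$ev")"
done
```

Because all four names share the sys.unix.clu.cnx prefix, a subscriber can select exactly the CNX events it needs by name.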

The CNX is also event driven and transaction oriented. In fact, the purpose of the main CNX thread is to handle CNX-related events that will often result in a three-phase transaction to complete the necessary action cluster-wide.

A CNX transaction is needed to form the cluster; to allow a member to join the cluster; to reconfigure the cluster; and to adjust the cluster's expected votes, a member's vote value, or the quorum disk's vote value.

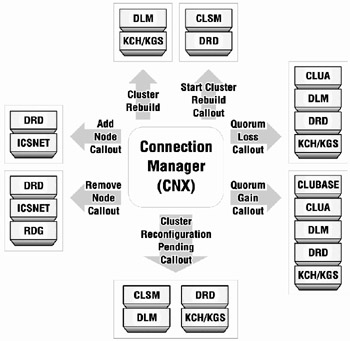

Certain events also cause the CNX to dispatch callouts to the cluster subsystems that registered routines with the CNX when those subsystems were configured. Although we will not detail every event the CNX handles, Table 17-1 lists the events that cause the CNX to dispatch callouts to registered subsystems.

Table 17-1: CNX Callout Events

| Callout Event | Description | Subsystems |
|---|---|---|
| Add Node | This callout is triggered when the CNX handles a "Node Up" event. The "Node Up" event is set when a node becomes a member. | drd, icsnet |
| Remove Node | This callout is triggered when the "Grim Reaper" thread removes a member from the cluster. | drd, icsnet, rdg |
| Quorum Gain | This callout is triggered when enough votes are added to the cluster to gain quorum. | clua, clubase, dlm, drd, kch |
| Quorum Loss | This callout is triggered when enough votes are removed from the cluster to lose quorum. | clua, dlm, drd, kch |
| Cluster Reconfiguration Pending | This callout is triggered when a member is removed from the cluster or when the cluster detects a partition or a communication error. | clsm, dlm, drd, kch |
| Start Cluster Rebuild | This callout is triggered when a change in cluster membership has occurred. | clsm, drd |
| Quorum Disk Loss | This callout is triggered when the quorum disk becomes unavailable due to an error. | none as of this writing |
| Quorum Disk Regain | This callout is triggered once the cluster can again read from the quorum disk following a quorum disk loss. | none as of this writing |
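Conceptually, the callout mechanism is a registry keyed by event: a subsystem registers a routine for the events it cares about, and the CNX later invokes every routine registered for the event it is handling. The Korn shell sketch below models that registry with the registrations from Table 17-1. The function names register_callout and dispatch_callouts are hypothetical; the real callouts are kernel routines, not shell functions, and dispatching here simply prints the subsystem names.

```sh
# Hypothetical model of CNX callout registration and dispatch.
# Each registration is stored as one "event|subsystem" line.
CALLOUTS=""

register_callout() {    # $1 = callout event, $2 = subsystem name
    CALLOUTS="${CALLOUTS}$1|$2
"
}

dispatch_callouts() {   # $1 = callout event; prints each subsystem "called"
    printf '%s' "$CALLOUTS" | awk -F'|' -v ev="$1" '$1 == ev { print $2 }'
}

# Registrations taken from Table 17-1.
for s in drd icsnet;               do register_callout "Add Node" "$s"; done
for s in drd icsnet rdg;           do register_callout "Remove Node" "$s"; done
for s in clua clubase dlm drd kch; do register_callout "Quorum Gain" "$s"; done
for s in clua dlm drd kch;         do register_callout "Quorum Loss" "$s"; done
for s in clsm dlm drd kch;         do register_callout "Cluster Reconfiguration Pending" "$s"; done
for s in clsm drd;                 do register_callout "Start Cluster Rebuild" "$s"; done

# Which subsystems would be called back when quorum is lost?
dispatch_callouts "Quorum Loss"
```

The Quorum Disk Loss and Quorum Disk Regain events have no registrations, so dispatching them calls nothing, matching the "none as of this writing" entries in the table.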

When a member is added to, or removed from, the cluster, the CNX must inform the DLM and KGS. This is known as a cluster rebuild.

Both the DLM and KGS are used to synchronize resources in a cluster, so it is important that these components are made aware of any change in cluster membership.

Figure 17-3 summarizes the CNX interaction with the other cluster subsystems during a cluster rebuild as well as the callout events.

Figure 17-3: CNX Rebuild and Callouts

We have intentionally not gone into much detail here because most of the inner workings of the CNX are not visible to the administrator. We did, however, want to show how important the CNX is to keeping the cluster functioning. Because the CNX decides which systems can or cannot become cluster members, it must also communicate with the various cluster subsystems so that resources and data stay synchronized and corruption is prevented.

As an example, consider the Device Request Dispatcher (DRD). The DRD makes all storage devices anywhere in a cluster available to all members of the cluster. When a "Cluster Reconfiguration Pending" callout event occurs, the CNX is about to remove one or more members from the cluster. In order to prevent those systems that will no longer be part of the cluster from performing any further I/O to cluster storage devices, the DRD must erect I/O barriers. It is the responsibility of the CNX to inform the DRD when to put I/O barriers in place. For more information on DRD and I/O barriers, see Chapter 15.

17.2.2 CNX Threads

As stated earlier, the CNX is multi-threaded; the exact number of threads can vary depending on the cluster's state and whether or not a quorum disk is configured. For example, here is the output of a script we wrote (cnxthreads) that displays the CNX threads on one of the members of our cluster.

```
# ./cnxthreads -p
                             WCHAN
                            -------
[ 1] "wait for rbld"      - e616e8
[ 2] "wait for rbld"      - e61728
[ 3] "csb event thread"   - csb eve
[ 4] "wait for csb"       - e60fe0
[ 5] "wait for csb"       - e60ff8
[ 6] "wait for csb"       - e61010
[ 7] "wait for csb"       - e61028
[ 8] "wait for csb"       - e61040
[ 9] "wait for csb"       - e61058
[10] "wait for csb"       - e61070
[11] "cnx grim reaper"    - cnx gri
[12] "qdisk tick delay"   - qdisk t

USER   %CPU PRI SCNT WCHAN   USER    SYSTEM  COMMAND       PID
root    0.0  38    0 *       0:00.00 1:28.27 [kernel idle] 1048576
        0.0  38    0 e616e8  0:00.00 0:00.00
        0.0  38    0 e61728  0:00.00 0:00.00
        0.0  38    0 csb eve 0:00.00 0:00.00
        0.0  38    0 e60fe0  0:00.00 0:00.00
        0.0  38    0 e60ff8  0:00.00 0:00.00
        0.0  38    0 e61010  0:00.00 0:00.00
        0.0  38    0 e61028  0:00.00 0:00.00
        0.0  38    0 e61040  0:00.00 0:00.00
        0.0  38    0 e61058  0:00.00 0:00.00
        0.0  38    0 e61070  0:00.00 0:00.00
        0.0  38    0 cnx gri 0:00.00 0:00.00
        0.0  38    0 qdisk t 0:00.00 0:01.66
```

On our member we have twelve threads. For each thread, our script shows the actual "wait message" along with the wait channel (WCHAN) field that the ps(8) command would display. The "-p" option runs a "ps -emo THREAD,command,pid" command and displays the CNX threads. Note also that these threads are part of the [kernel idle] task.

Table 17-2 shows the threads that make up the CNX and their various tasks.

Table 17-2: Connection Manager Threads

| Thread | # of Threads | Wait Message | Comments |
|---|---|---|---|
| CNX Transaction Thread | 1 | csb event thread | This is the main CNX thread. It sleeps until a CNX event needs to be handled. When a CNX event is queued, this thread wakes up, handles the event, and then goes back to sleep until more events need attention. |
| Pinger Thread | 1 | cnx pinger, cnx pinger thread | This thread broadcasts the node's information on the ICS boot channel so that other nodes will know about this node. If a cluster already exists, communication channels are established with this node. Once the node becomes a cluster member, the pinger thread kills itself. |
| Grim Reaper Thread | 1 | cnx grim reaper | The grim reaper sleeps until a member needs to be removed from the cluster. This thread performs the cleanup tasks needed to remove a member from the cluster. |
| Qdisk Thread | 1 | qdisk tick delay | If a quorum disk is configured, this thread reads from and writes to the disk at a set interval (currently every 5 seconds). |
| Rebuild Manager Thread | 2 | 1st rbld, wait for rbld | These threads are used to rebuild the DLM and KCH/KGS when a CNX event occurs that requires a cluster rebuild. They sleep until a rebuild is requested. |
| Remote Communications Thread | 7 | wait for csb, remote csb event thread | These threads wait for remote nodes to send them a CSB. Once the CSB is sent, the thread is awakened and sets up an ICS communication channel to the remote node. These threads also handle certain CNX events. |
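Because each CNX thread sleeps with a distinctive wait message, the messages alone identify which CNX threads are present. Summing the maximum counts in Table 17-2 gives 1 + 1 + 1 + 1 + 2 + 7 = 13 threads; our member showed twelve, which is consistent with the pinger thread having exited once the node became a member. The Korn shell sketch below tallies wait messages against the thread types in the table. The function name tally_cnx_threads is hypothetical, and its input is simply the twelve wait messages from our cnxthreads output, one per line.

```sh
# Hypothetical sketch: map CNX wait messages (one per line on stdin) to the
# thread types of Table 17-2 and print a count for each type seen.
tally_cnx_threads() {
    awk '
        /^csb event thread$/                         { t = "CNX Transaction" }
        /^cnx pinger/                                { t = "Pinger" }
        /^cnx grim reaper$/                          { t = "Grim Reaper" }
        /^qdisk tick delay$/                         { t = "Qdisk" }
        /^(1st rbld|wait for rbld)$/                 { t = "Rebuild Manager" }
        /^(wait for csb|remote csb event thread)$/   { t = "Remote Communications" }
        t != "" { n[t]++; total++; t = "" }
        END {
            for (k in n) printf "%s: %d\n", k, n[k]
            printf "total: %d\n", total
        }'
}

# Usage: the wait messages reported on our member.
tally_cnx_threads <<'EOF'
wait for rbld
wait for rbld
csb event thread
wait for csb
wait for csb
wait for csb
wait for csb
wait for csb
wait for csb
wait for csb
cnx grim reaper
qdisk tick delay
EOF
```

The per-type lines come out in no particular order, because awk does not guarantee the iteration order of an array.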

17.2.3 The Cluster System Block (CSB)

As you might have noticed from the names of some of the threads in the previous section, the CNX seems to be interested in something known as the CSB. The CSB (or cluster system block) is a data structure that contains information about a cluster member. Every member has a CSB for itself and a CSB for every other node from which it has received an announcement and with which it has been able to establish a communication channel. So the CNX is not driven by the CSB as such; rather, it handles events stored in the CSB.

A member uses its list of CSBs to keep track of the state of the nodes as well as other information including the node's name, member ID, votes, expected votes, quorum disk configuration, incarnation, cluster system identification number (csid), connectivity topology, etc. This information is vital to determining whether or not a node can be a cluster member.

17.2.3.1 The Incarnation Number

The incarnation number (or incarn) is a pseudo-random number that uniquely identifies the current instance of the cluster and of each member. The incarn can be retrieved using the clu_get_info(8) command with the "-full" option.

```
# clu_get_info -full | grep incarn
Cluster incarnation = 0x923f8
```

17.2.3.2 The Cluster System Identification Number (csid)

Every member has a csid that identifies it to the cluster. The csid is composed of an index into a csid vector (which essentially indicates the order in which the member joined the cluster) and the number of times the member has joined the cluster since the cluster was formed. For example, a csid of 0x30001 indicates that the first member to join the cluster has (re)joined it three times since the cluster was formed.

You can see the csid by using the clu_get_info command with the "-full" or "-raw" options. It is also the node_csid attribute in the cnx subsystem.

Here's an example from a two-member cluster where the memberid and csid vector index match.

```
# clu_get_info -raw | awk -F: '/^M/ { print "memberid: "$2", csid: "$12 }'
memberid: 1, csid: 0x30001
memberid: 2, csid: 0x10002
```

Here's an example from an eight-member cluster where the memberid and csid vector index do not match.

```
# clu_get_info -raw | awk -F: '/^M/ { print "memberid: "$2", csid: "$12 }'
memberid: 1, csid: 0x10003
memberid: 2, csid: 0x20008
memberid: 3, csid: 0x10002
memberid: 4, csid: 0x10006
memberid: 5, csid: 0x10007
memberid: 6, csid: 0x20005
memberid: 7, csid: 0x10001
memberid: 8, csid: 0x20004
```
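The two parts of a csid can be separated with shell arithmetic. The sketch below assumes, based only on the values shown above, that the csid vector index occupies the low-order 16 bits and the join count the bits above them; the function name decode_csid is hypothetical. Decoding the eight-member output shows why memberid and index need not match: member 7 (csid 0x10001) was the first to join, while member 1 (csid 0x10003) was the third.

```sh
# Hypothetical helper: split a csid into its csid vector index (assumed to
# be the low 16 bits) and the number of times the member has joined the
# cluster since it was formed (assumed to be the remaining high bits).
decode_csid() {    # $1 = csid in hex, e.g. 0x30001
    csid=$(( $1 ))
    printf 'index %d, joins %d\n' $(( csid & 0xFFFF )) $(( csid >> 16 ))
}

decode_csid 0x30001     # index 1, joins 3
decode_csid 0x20008     # index 8, joins 2
```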

17.2.4 The Quorum Disk

The quorum disk can be thought of as a virtual cluster member in that it can add an additional vote to a cluster with an even number of members to increase the cluster's availability. In order for a disk to be used as a quorum disk, the following conditions must be met:

- The disk must be unused because the quorum disk is dedicated for use by the CNX.
- It is highly recommended that the disk be on a shared bus that is connected to all voting cluster members.
- There can be only one quorum disk in a cluster.

The quorum disk can be used to contribute one vote or zero votes to a cluster.
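A worked example shows why the extra vote matters in a two-member cluster such as ours. The sketch below assumes the quorum formula documented for TruCluster Server, quorum votes = round_down((cluster_expected_votes + 2) / 2), which this section does not itself state; the function names quorum_votes and has_quorum are hypothetical. Without a quorum disk, expected votes are 2 and quorum is 2, so losing either member costs the cluster quorum. With a one-vote quorum disk, expected votes are 3 but quorum is still 2, so the surviving member plus the quorum disk keep the cluster running.

```sh
# Hypothetical helpers illustrating the vote arithmetic.
# Assumption: quorum votes = round_down((expected_votes + 2) / 2);
# shell integer division already rounds down for positive values.
quorum_votes() {    # $1 = cluster_expected_votes
    echo $(( ($1 + 2) / 2 ))
}

has_quorum() {      # $1 = current votes, $2 = cluster_expected_votes
    [ "$1" -ge "$(quorum_votes "$2")" ]
}

# Two members, one vote each, no quorum disk: expected votes = 2.
# After one member fails, only 1 vote remains.
if has_quorum 1 2; then echo "no qdisk: quorum kept"; else echo "no qdisk: quorum lost"; fi

# Same members plus a one-vote quorum disk: expected votes = 1 + 1 + 1 = 3.
# After one member fails, 1 member vote + 1 qdisk vote remain.
if has_quorum 2 3; then echo "qdisk: quorum kept"; else echo "qdisk: quorum lost"; fi
```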

We will cover the quorum disk in greater detail in section 17.8.3.

17.2.5 The cnx Partition

The quorum disk contains a cnx partition, 1 MB (2048 sectors) in size, located on the "h" partition. In fact, this is the only partition that can be used on the entire disk! Because so little of the disk is actually used, we recommend using the smallest disk possible.

Every member's boot disk also has a cnx partition that is located on the "h" partition.

Although both types of disks contain cnx partitions, they use different portions of the partition (as we will learn in section 17.8.5.1).

The following series of Korn shell commands displays the cnx partition information from the disk label of our cluster's disks.

```
# for i in dsk2 dsk3 dsk4
> do
> echo ; echo "[${i}] \c"
> disklabel -r ${i} | \
> awk '/label:/ { printf ("%11s - ",$2) } \
> / h:/ { printf ("partition: h, type: %s, size: %s sectors\n",$4,$2) }'
> done

[dsk2] clu_member1 - partition: h, type: cnx, size: 2048 sectors

[dsk3] clu_member2 - partition: h, type: cnx, size: 2048 sectors

[dsk4]      Quorum - partition: h, type: cnx, size: 2048 sectors
```

In section 17.8.5 we will discuss how to manage the cnx partition.
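The disk label check performed by the loop above can also be packaged as a reusable test. The sketch below reads disklabel -r output on standard input and succeeds only if the "h" partition is of type cnx and 2048 sectors long, which at the 512-byte sector size assumed here is 2048 × 512 = 1,048,576 bytes, or 1 MB. It relies on the same field layout the awk program above uses (size in field 2, type in field 4). The function name is_cnx_partition is hypothetical, and the sample partition line is illustrative rather than taken from our disks.

```sh
# Hypothetical check: succeed if the "h" partition in disklabel -r output
# (read from stdin) is a cnx partition of exactly 2048 sectors.
# Assumes 512-byte sectors, so 2048 sectors = 1048576 bytes = 1 MB.
is_cnx_partition() {
    awk '$1 == "h:" { found = 1; ok = ($4 == "cnx" && $2 == 2048) }
         END { exit !(found && ok) }'
}

# Illustrative input only; on a member you would run, for example:
#   disklabel -r dsk2 | is_cnx_partition
echo "  h:     2048  8384512     cnx" | is_cnx_partition && echo "cnx partition OK"
echo "cnx partition size: $(( 2048 * 512 )) bytes"
```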

17.2.6 The clubase Subsystem

The configuration information that is required to form (or join) a cluster is stored in the Cluster Base (clubase) subsystem in the member-specific sysconfigtab file located in the /cluster/members/{memb}/boot_partition/etc directory. You can see the attributes and values for the clubase subsystem contained in a member's sysconfigtab file by using the sysconfigdb(8) command with the "-l" option.

```
# sysconfigdb -l clubase
clubase:
        cluster_qdisk_major = 19
        cluster_qdisk_minor = 272
        cluster_qdisk_votes = 1
        cluster_expected_votes = 3
        cluster_node_votes = 1
        cluster_name = babylon5
        cluster_node_name = molari
        cluster_node_inter_name = molari-ics0
        cluster_interconnect = mct
        cluster_seqdisk_major = 19
        cluster_seqdisk_minor = 80
```

The cluster_expected_votes attribute is also stored in the cluster-wide sysconfigtab.cluster file located in the /etc directory. The subsystem attributes are described in Table 17-3.

Table 17-3: clubase Subsystem Attributes

| Attribute | Description |
|---|---|
| cluster_expected_votes | This attribute contains the maximum number of votes that the cluster expects to have if every voting member is up. Note that this value also includes the vote for the quorum disk if it is configured and is assigned a vote. The cluster_expected_votes attribute value should be the same on all cluster members. |
| cluster_interconnect | This attribute contains the cluster interconnect type. As of this writing, this value can: |
| cluster_name | The cluster_name attribute contains the name of the cluster. All members must have the same cluster_name. The attribute is also used as the name of the Default Cluster Alias. For more information on cluster aliasing, see Chapter 16. |
| cluster_node_inter_name | This attribute contains the node name for the virtual cluster interconnect (ics0). |
| cluster_node_name | This attribute contains the node name of the cluster member. |
| cluster_node_votes | This attribute contains the number of votes this member will contribute toward quorum. As of this writing, this value can be 0 or 1. |
| cluster_qdisk_major, cluster_qdisk_minor | These are the major and minor numbers of the quorum disk's "h" partition. This partition is the cnx partition and is discussed in section 17.4. |
| cluster_qdisk_votes | This attribute contains the number of votes that the quorum disk will contribute toward quorum. As of this writing, this value can be 0 or 1. |
| cluster_seqdisk_major, cluster_seqdisk_minor | These are the major and minor numbers of the member boot disk's "h" partition. This partition is the cnx partition and is discussed in section 17.4. |

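The cluster-wide value can be displayed by passing the sysconfigtab.cluster file to sysconfigdb with the "-t" option:

```
# sysconfigdb -t /etc/sysconfigtab.cluster -l clubase
clubase:
        cluster_expected_votes = 3
```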

The sysconfigtab.cluster file holds subsystem attribute values that should be identical in every member's sysconfigtab file, and it keeps them in sync at boot time. When a member reaches run level 3, the clu_min script is called to propagate the entries in the sysconfigtab.cluster file to the member's sysconfigtab file.
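Because cluster_expected_votes should match in every member's sysconfigtab file and in sysconfigtab.cluster, drift is easy to spot by comparing the two sysconfigdb listings shown earlier in this section. The following sketch parses sysconfigdb -l style output (one "attribute = value" line per attribute) and compares the member and cluster-wide values. The function name get_attr is hypothetical, and the listings are embedded as abridged text so the example runs anywhere; on a member you would pipe in the output of the two sysconfigdb commands instead.

```sh
# Hypothetical helper: print the value of attribute $1 from sysconfigdb -l
# output on stdin, where each attribute line reads "name = value".
get_attr() {
    awk -v name="$1" '$1 == name && $2 == "=" { print $3 }'
}

# Text captured from the two sysconfigdb listings in this section
# (abridged to the lines this check needs).
member_cfg='clubase:
        cluster_expected_votes = 3
        cluster_node_votes = 1
        cluster_name = babylon5'
cluster_cfg='clubase:
        cluster_expected_votes = 3'

m=$(printf '%s\n' "$member_cfg" | get_attr cluster_expected_votes)
c=$(printf '%s\n' "$cluster_cfg" | get_attr cluster_expected_votes)

if [ "$m" = "$c" ]; then
    echo "cluster_expected_votes in sync ($m)"
else
    echo "mismatch: member=$m sysconfigtab.cluster=$c"
fi
```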

For more information on the clubase subsystem, see the sys_attrs_clubase(4) reference page.

[2]See Chapter 18 for a discussion on ICS, DLM, and KCH/KGS.

[3]Also known as the Kernel Cat Herder (KCH); see Chapter 18 for more details.
