XML Tree Models

Chapter 12 is devoted to a detailed exposition of the DOM, the most commonly used tree model for XML. Once again, in this section, you step back from the details to look at the role of the DOM as part of the wider picture of Java interfaces to XML. It also discusses some of the alternative tree models that have appeared in the Java world, specifically JDOM, DOM4J, and XOM.

It's easy to see why tree models are popular. It's much easier to manipulate the content of an XML document at the level of a tree of nodes than it is to deal with events in the order they are reported by a parser. This method puts the application back in control. Rather than dealing with the data in the order it appears in the document (which is the case when you use either a push or a pull parser interface), a tree representation of the document in memory gives you direct access: you can navigate around the structure to locate the information that your application needs. It's rather like the difference between processing a sequential file (who does that nowadays?) and using a database.
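To make the contrast concrete, here is a minimal sketch of the event-driven style, using the SAX classes that ship with the JDK. The class name EventOrderDemo, the method readRates(), and the small currency document are illustrative assumptions, not part of any library. Notice how the handler has to remember state between callbacks because it only sees the data in document order.

```java
import java.io.StringReader;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.helpers.DefaultHandler;

// Hypothetical demo class: collects currency codes and rates from SAX events.
class EventOrderDemo {

    static Map<String, String> readRates(String xml) {
        final Map<String, String> rates = new LinkedHashMap<>();
        DefaultHandler handler = new DefaultHandler() {
            private String currentCode;                       // remembered from startElement
            private final StringBuilder text = new StringBuilder();

            @Override
            public void startElement(String uri, String local, String qName, Attributes atts) {
                if (qName.equals("currency")) {
                    currentCode = atts.getValue("code");
                    text.setLength(0);
                }
            }

            @Override
            public void characters(char[] ch, int start, int length) {
                if (currentCode != null) {
                    text.append(ch, start, length);           // text may arrive in several chunks
                }
            }

            @Override
            public void endElement(String uri, String local, String qName) {
                if (qName.equals("currency")) {
                    rates.put(currentCode, text.toString().trim());
                    currentCode = null;
                }
            }
        };
        try {
            SAXParserFactory.newInstance().newSAXParser()
                    .parse(new InputSource(new StringReader(xml)), handler);
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse the sample document", e);
        }
        return rates;
    }

    public static void main(String[] args) {
        String xml = "<currencies><currency code=\"USD\">1.00</currency>"
                + "<currency code=\"EUR\">0.79</currency></currencies>";
        System.out.println(readRates(xml));
    }
}
```

With a tree model, the same task needs no state variables, because the application can ask for the attribute and the text of each element whenever it likes.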

One of the two problems with tree models is performance. Tree models tend to take up a lot of memory (ten times the original source document size is not untypical), and they also take time to build because of the number of objects that need to be allocated. The other disadvantage is that navigation around the structure can be extremely tedious. Most tree models allow you to mix low-level procedural navigation (getFirstChild(), getNextSibling() and so on) with the use of XPath expressions for a more declarative approach, but using XPath brings its own performance penalty because it usually involves parsing and optimizing XPath expressions on the fly.
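The two navigation styles can be compared side by side. The following is a sketch only, using the DOM and XPath classes in the JDK; the class name NavigationDemo, its methods, and the sample document are assumptions made for illustration. The XPath version is shorter, but the expression is parsed each time the method is called, which is the cost described above.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.xml.sax.InputSource;

// Hypothetical demo class comparing procedural and declarative navigation.
class NavigationDemo {

    static final String SAMPLE = "<currencies><currency code=\"USD\">1.00</currency>"
            + "<currency code=\"EUR\">0.79</currency></currencies>";

    static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse the sample document", e);
        }
    }

    // Procedural navigation: step through the children of the root one sibling at a time.
    static String rateByWalking(Document doc, String code) {
        for (Node n = doc.getDocumentElement().getFirstChild(); n != null; n = n.getNextSibling()) {
            if (n.getNodeType() == Node.ELEMENT_NODE
                    && code.equals(((Element) n).getAttribute("code"))) {
                return n.getTextContent();
            }
        }
        return null;
    }

    // Declarative navigation: the expression is parsed on every call.
    // Assumes the code contains no quote characters.
    static String rateByXPath(Document doc, String code) {
        try {
            XPath xpath = XPathFactory.newInstance().newXPath();
            return xpath.evaluate("/currencies/currency[@code='" + code + "']", doc);
        } catch (XPathExpressionException e) {
            throw new IllegalStateException("Invalid XPath expression", e);
        }
    }

    public static void main(String[] args) {
        Document doc = parse(SAMPLE);
        System.out.println(rateByWalking(doc, "EUR"));
        System.out.println(rateByXPath(doc, "EUR"));
    }
}
```

If the same expression is evaluated many times, compiling it once with XPath.compile() and reusing the resulting XPathExpression avoids most of the repeated parsing.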

The DOM interface, although it remains the most popular tree model used in the Java world, has problems of its own. Most of these stem from its age: It was designed originally to handle HTML rather than XML, and it was first adapted to handle XML before namespaces were invented. The result is that namespaces still feel very much like a bolt-on extra. Another significant problem is that DOM was devised (by W3C) as a programming-language-independent interface, with Java being just one of the language bindings. This means that many of its idioms are very un-Java-like. This includes its exception handling, the way that collections (lists and maps) are handled, the use of shorts to represent enumerated constants, and many other details. This problem is partly cosmetic, but there is a deeper impact because it diminishes the extent to which DOM code fits well architecturally into the Java world view.

Because DOM was developed by a standards committee, it has grown rather large. In fact, the W3C working group no longer exists, having been replaced by an interest group. The specifications are no longer being developed, and some of the work that was done by the working group is now in a somewhat undefined state of limbo. But the material that was published as formal Recommendations is quite large enough. Not all of this has yet made it into Java, which confuses the issue further. Some parts that have made it into Java, notably the Event model, go well beyond what one expects to find in a tree representation of XML documents and are far more concerned with user-interface programming in the browser (or elsewhere). This reflects the historical origins of the DOM as the underpinning object model for Dynamic HTML.

The DOM, remember, is a set of interfaces, not an implementation. Several implementations of these interfaces exist; in fact, there can be any number. You can write your own implementation if you want, and this is not just a fanciful notion. By writing a DOM interface to some underlying Java data source, you can make the data appear to the world as XML, and thus make it directly accessible to any XML process that accepts DOM input, for example XSLT transformations and XQuery queries.
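A full DOM implementation over your own data is too long to show here, so the following sketch takes a simpler route to the same end: it copies a Java Map into a DOM tree built with the JDK's default implementation and hands that tree to a JAXP identity transformation. The class name MapAsDomDemo and the element names are assumptions chosen for illustration.

```java
import java.io.StringWriter;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

// Hypothetical demo class: presents Java data to an XML process as a DOM tree.
class MapAsDomDemo {

    // Copies the map into a new DOM document (a copy, not a live view of the data).
    static Document toDom(Map<String, String> rates) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
            Element root = doc.createElement("currencies");
            doc.appendChild(root);
            for (Map.Entry<String, String> entry : rates.entrySet()) {
                Element currency = doc.createElement("currency");
                currency.setAttribute("code", entry.getKey());
                currency.setTextContent(entry.getValue());
                root.appendChild(currency);
            }
            return doc;
        } catch (Exception e) {
            throw new IllegalStateException("Could not build the DOM tree", e);
        }
    }

    // Any process that accepts a DOMSource can now read the data; here an identity transform.
    static String serialize(Document doc) {
        try {
            Transformer identity = TransformerFactory.newInstance().newTransformer();
            identity.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
            StringWriter out = new StringWriter();
            identity.transform(new DOMSource(doc), new StreamResult(out));
            return out.toString();
        } catch (Exception e) {
            throw new IllegalStateException("Could not serialize the DOM tree", e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> rates = new LinkedHashMap<>();
        rates.put("USD", "1.00");
        rates.put("EUR", "0.79");
        System.out.println(serialize(toDom(rates)));
    }
}
```

A genuine wrapper implementation would avoid the copy by implementing the org.w3c.dom interfaces directly over the data, at the price of a great deal more code.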

The fact that DOM is a set of interfaces with multiple implementations, combined with the fact that it is a large and complex specification, leads inevitably to the result that different implementations are not always as compatible as one would like. It can be difficult to write application code to DOM interfaces that actually works correctly with all implementations. It's very easy to make assumptions that turn out to be faulty: I once wrote the test

if (x instanceof Element)...

in a DOM application. It failed when it ran on the Oracle DOM implementation because in that implementation some objects that are not element nodes nevertheless implement the DOM Element interface. This is perfectly legal according to the specification, but not what one might expect.
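A more portable way to ask the same question is to test the node kind that the node itself reports. The sketch below assumes nothing beyond the standard org.w3c.dom interfaces; the class name NodeKindDemo and the sample document are illustrative. It also shows two of the idioms mentioned earlier: NodeList is not a java.util collection, and node kinds are short constants.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical demo class: identifies element nodes without relying on instanceof.
class NodeKindDemo {

    static Node parseRoot(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml))).getDocumentElement();
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse the sample document", e);
        }
    }

    static int countElementChildren(Node parent) {
        int count = 0;
        NodeList children = parent.getChildNodes();      // indexed access only, no iterator
        for (int i = 0; i < children.getLength(); i++) {
            // getNodeType() returns a short constant rather than a Java enum
            if (children.item(i).getNodeType() == Node.ELEMENT_NODE) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        Node root = parseRoot("<a><b/>text<!-- note --><d/></a>");
        System.out.println(countElementChildren(root));
    }
}
```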

A further criticism of the DOM is that it doesn't reflect modern thinking as to the true information model underlying XML. It retains information that most applications don't care about, such as CDATA sections and entity references. It throws away some information that applications do care about, such as DTD-defined attribute types, and it models namespaces in a way that makes them very difficult to process.
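The CDATA point is easy to demonstrate with the JDK's own parser. In this sketch (the class name CdataDemo is an assumption), an element whose content is a CDATA section followed by ordinary text is parsed twice: once with default settings, where the CDATA section is kept as a separate node, and once with coalescing switched on, where the parser merges it into the neighbouring text.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

// Hypothetical demo class: shows how CDATA sections surface in a DOM tree.
class CdataDemo {

    // Returns the number of child nodes of the root element.
    static int childCount(String xml, boolean coalescing) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setCoalescing(coalescing);   // true merges CDATA sections into adjacent text
            Document doc = factory.newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            return doc.getDocumentElement().getChildNodes().getLength();
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse the sample document", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<a><![CDATA[x]]>y</a>";
        System.out.println("default:    " + childCount(xml, false));
        System.out.println("coalescing: " + childCount(xml, true));
    }
}
```

An application that only wants the character content has to either switch coalescing on or be prepared to handle both kinds of node.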

For some users, the final straw is that DOM isn't even stable. Unlike its policy with every other interface in the JDK class library, Sun's policy for DOM allowed incompatible changes to be made to the interfaces in J2SE 5.0, with the result that it can be quite difficult to write applications that work on multiple versions of the JDK. This affects providers of DOM interfaces more than users, but since the interface is only as good as the products that implement it, the effect is a general decline in support for DOM as a preferred interface.

Alternatives to DOM

A number of alternatives to DOM have been proposed to address the shortcomings outlined in the previous section. You look at three of them: JDOM, DOM4J, and XOM. Each of these has an open source implementation and an active user community. None of them is actually part of the JDK, which creates the serious disadvantage that the interfaces are not recognized by other APIs in the Java family such as JAXP and XQJ (discussed later in this chapter). However, as you see in this chapter, this has not meant that they are islands of technology with no interoperability.

JDOM

JDOM, produced by a team led by Jason Hunter, was the first attempt to create an alternative tree model.

JDOM's main design aim was to be significantly easier to use than DOM for the Java programmer. The most striking difference in its design is that it uses concrete classes rather than interfaces. All the complexities and abstractions of factory classes and methods are avoided, which is a great simplification. The decision has another significant effect, which has both advantages and disadvantages: There is only one JDOM. If you write an application that works on a JDOM tree, it will never work on anything else. You can't make a different tree look like a JDOM tree by implementing the JDOM interfaces; the best you can do is to bulk copy your data into a JDOM tree. This might seem like a significant disadvantage, but most people won't need to do this. The big advantage, however, is that because there is only one JDOM, it has no compatibility problems with different implementations.

Another striking feature of the JDOM model is that no abstract class represents a node. Each of the various kinds of node has its own concrete class, such as Element, Comment, and ProcessingInstruction, but there is no generic class that all nodes belong to. This means that you end up using the class Object a lot. A generic interface called Parent exists for nodes that can have children (element and document nodes), and there is also a generic class for nodes that can be children (text nodes, comments, elements, and so on), but nothing for the top level. When programming using JDOM, I have found this is a bewildering omission.

This small example gives a little feel of the navigation interfaces in JDOM. Suppose you have an XML document like the one that follows, and you have this data in a JDOM tree that you want to copy to a Java HashMap:

    <currencies>
      <currency code="USD">1.00</currency>
      <currency code="EUR">0.79</currency>
      <currency code="GBP">0.53</currency>
      <currency code="JPY">118.29</currency>
      <currency code="CAD">1.13</currency>
    </currencies>

Here's the Java code to do it:

    // Copy each currency code and its rate from the JDOM tree into a map.
    HashMap map = new HashMap();
    Document doc = new SAXBuilder().build(new File("input.xml"));
    for (Iterator d = doc.getDescendants(); d.hasNext();) {
        Object node = d.next();
        if (node instanceof Element && ((Element) node).getName().equals("currency")) {
            Element currency = (Element) node;
            map.put(currency.getAttributeValue("code"), currency.getValue());
        }
    }

All the tree models struggle with the problem of how to represent namespaces. JDOM, true to its philosophy, concentrates on making the easy cases easy. Elements and attributes have a three-part name (prefix, local name, and namespace URI). Most of the time, that's all you need to know. Occasionally, in some documents, you may need to know about namespaces that have been declared even if they aren't used in any element or attribute names (they might be used in a QName-valued attribute such as xsi:type).

To cover such cases, an element also has a property getAdditionalNamespaces(), which returns namespaces declared on the element, excluding its own namespace. If you want to find all the namespaces that are in scope for an element, you have to read this property on the element itself, on its parent, and so on, all the way up the tree.

An effect of this design decision is that when you make modifications to a tree, such as moving an element or renaming it, the namespaces usually take care of themselves, except in the case where your document contains QName-valued attributes. In that case, you must take great care to ensure that the namespace declarations remain in scope.

JDOM comes with a raft of "adapters" that allow data to be imported and exported from/to a variety of other formats including, of course, DOM and SAX. To create a tree by parsing XML, you simply write

    Document doc = new SAXBuilder().build(new File("input.xml"));

Serialization is equally easy: you can serialize the whole document by writing

new XMLOutputter().output(doc, System.out);

If you want to customize the way that serialization is done, you can supply the XMLOutputter with a Format object on which you set preferences. For example, you can set the option setExpandEmptyElements() to control whether empty element tags are used.

JDOM also provides interfaces to allow XPath or XSLT access to the document. This exploits the JAXP interfaces to allow different XSLT and XPath processors to be selected. Of course, you can only choose one that understands the JDOM model. (In fact, Saxon has support for JDOM and doesn't use this mechanism: You can wrap a JDOM document in a wrapper that allows Saxon to recognize it directly as a Source object.)

Overall, the keynote of JDOM is simplicity. Simplicity is its strength, but also its weakness. It makes easy things easy, but it can also make difficult things rather hard or impossible.

For more information about JDOM and to download the software, visit jdom.org/.

DOM4J

DOM4J started as an attempt to build a better JDOM. It is sometimes said that it is a fork of JDOM, but I don't think this is strictly accurate in terms of the actual code. Rather, it is an attempt to redo JDOM the way some people think it should have been done.

Unlike JDOM, DOM4J uses Java interfaces very extensively. All nodes implement the Node interface, and this has sub-interfaces for Element, Attribute, Document, CharacterData, and so on. These interfaces, in turn, have abstract implementation classes, with names such as AbstractElement, and AbstractDocument. AbstractElement has two concrete subclasses: DefaultElement, which is the default DOM4J class representing an element node, and BaseElement, which is a helper class designed to allow you to construct your own implementation. (Why would you want your own implementation? Essentially, you use it to add your own data to a node or use methods that allow you to navigate more easily around the tree.)

So you can see immediately that in contrast to JDOM, this is heavy engineering. The designers have taken the theory of object-oriented programming and applied it rigorously. The result is that you can do far more with DOM4J than you can with JDOM, but you must be a rather serious developer to want all this capability.

Here's the same code that you used earlier for JDOM reworked for DOM4J.

    // Copy each currency code and its rate from the DOM4J tree into a map.
    HashMap map = new HashMap();
    Document doc = ...;
    Element root = doc.getRootElement();
    for (Iterator d = root.elementIterator("currency"); d.hasNext();) {
        Element node = (Element) d.next();
        map.put(node.attributeValue("code"), node.getStringValue());
    }

It's not a great deal different. DOM4J methods are a bit more strongly typed than their JDOM equivalents, but any benefit you get from this is eliminated by the fact that both interfaces were designed before Java generics hit the scene in J2SE 5.0. That means the objects returned by iterators have to be cast to the expected type. The DOM4J code is a bit shorter because you have a wider range of methods to choose from, but the downside of that is that you have a longer spec to wade through before you find them!

In addition to the simple navigational methods that allow you to get from a node to its children, parent, or siblings, DOM4J offers some other alternatives. For example you can define a Visitor object which visits nodes in the tree, having its visit() method called to process each node as it is traversed: This feels strangely similar to writing a SAX ContentHandler, except that the visit() method is free to navigate from the node being visited to its neighbors.

An interesting feature of DOM4J is that it's possible to choose implementation classes for the DOM4J nodes that implement DOM interfaces as well as DOM4J interfaces. In principle, this means you can have your cake and eat it, too. However, I'm not sure how useful this really is in practice. Potentially, it creates a lot of extra complexity.

Like JDOM, the DOM4J package includes facilities allowing you to build a DOM4J tree from various sources, including lexical XML, SAX, StAX, and DOM, and to export to various destinations, again including lexical XML, SAX, StAX, and DOM. The serialization facilities work in a very similar way to JDOM. DOM4J also has a built-in XPath 1.0 engine, and you can use it with JAXP XSLT engines.

So if you can sum up JDOM by saying it is about simplicity, you can say that DOM4J is about solid support for advanced object-oriented development approaches and design patterns.

DOM4J is found at dom4j.org/.

XOM

The most recent attempt to create the perfect tree model for XML in the Java world is XOM. The focus of the XOM project is 100% fidelity and conformance to the XML specifications and standards. In particular, it attempts to do a much better job than previous models of handling namespaces. XOM is developed by Elliotte Rusty Harold, and although it's open source, he maintains strong control to ensure that the design principles are adhered to.

It's worth asking why namespaces are such a problem. In lexical XML, a namespace declaration on a particular element binds a prefix to a namespace URI. This prefix can be used anywhere within the content of this element as a shorthand for the namespace URI. When you come to apply this to a tree model, this creates a number of problems:

- Are the prefixes significant, or can a processor substitute one prefix for another at whim? After much debate, the consensus now is that you have to try to preserve the original prefixes for two reasons: First, because users have become familiar with them, and second, they can be used in places (such as XPath expressions within attribute content) where the system has no chance of recognizing them and, therefore, changing them.

- If prefixes are significant, what happens when an element becomes detached from the tree where the prefix is defined? Tree models support in-situ updates, and they have all struggled with defining mechanisms that allow update of namespace declarations without the risk of introducing inconsistencies.
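The first of these problems can be seen in a few lines of code. The sketch below uses the JDK's DOM rather than XOM, and the class name NamespaceDemo, the invoice vocabulary, and the example.com namespace are all assumptions for illustration. The prefix in the element name is understood by the parser, but the prefix inside the xsi:type attribute value is just characters in a string, which the application has to resolve against the declarations in scope.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.xml.sax.InputSource;

// Hypothetical demo class: prefixes in names versus prefixes hidden in attribute values.
class NamespaceDemo {

    static final String XSI = "http://www.w3.org/2001/XMLSchema-instance";
    static final String SAMPLE = "<inv:invoice xmlns:inv=\"http://example.com/invoice\""
            + " xmlns:xsi=\"" + XSI + "\" xsi:type=\"inv:special\"/>";

    static Element parseRoot(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);      // off by default for historical reasons
            return factory.newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml))).getDocumentElement();
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse the sample document", e);
        }
    }

    // Resolves the prefix of a QName-valued string against the declarations in scope.
    static String namespaceOfQNameValue(Element context, String qnameValue) {
        int colon = qnameValue.indexOf(':');
        String prefix = colon < 0 ? null : qnameValue.substring(0, colon);
        return context.lookupNamespaceURI(prefix);   // null prefix means the default namespace
    }

    public static void main(String[] args) {
        Element root = parseRoot(SAMPLE);
        System.out.println(root.getPrefix() + " / " + root.getLocalName()
                + " / " + root.getNamespaceURI());
        String type = root.getAttributeNS(XSI, "type");
        System.out.println(type + " resolves to " + namespaceOfQNameValue(root, type));
    }
}
```

If a processor were free to rename the inv prefix on the element, the attribute value would silently stop resolving, which is why the original prefixes have to be preserved.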

As well as concentrating on strict XML correctness, XOM also has some interesting internal engineering, designed to reduce the problems that occur when you use tree models to represent very large documents. XOM allows you to build the tree incrementally, to discard the parts of the document you don't need while the tree is being built, to process nodes before tree construction has finished, and to discard parts of the tree you have finished with. This makes it a very strong contender for dealing with documents whose size is 100MB or larger.

Like JDOM, XOM uses classes rather than interfaces. Harold justifies this choice vigorously and eloquently: He claims that interfaces are more difficult for programmers to use, and more important, interfaces do not enforce integrity. You can write a class that ensures that the String passed to a particular method will always be a valid XML name, but you cannot write an interface that imposes this constraint. In addition, an interface can't impose a rule that one method must be called before another method is called. When you work with interfaces, you recognize the possibility of multiple implementations, and you have no control over the quality or interoperability of the various implementations. This is all good reasoning, but it does mean that, just as there is only one JDOM, there is only one XOM. You can't write a XOM wrapper around your data so that XOM applications can access it as if it were a "real" XOM tree.

XOM does allow and even positively encourages the base classes (such as Element, Attribute, and so on) to be subclassed. The design has been done very carefully to ensure that subclasses can't violate the integrity of the system. Many methods are defined as final to ensure this. Users sometimes find this frustrating, but it's done with good reason. The capability to subclass means that you can add your own application-dependent information to the nodes, and you can add your own navigation methods. So you might have a method getDollarValue() on an Invoice element that returns the total amount invoiced without exposing to the caller the complex navigation needed to find this data.

At the level of navigational methods, XOM is not so very different from JDOM and DOM4J. Here's that familiar fragment of code rewritten for XOM, to prove it:

    // Copy each currency code and its rate from the XOM tree into a map.
    HashMap map = new HashMap();
    Document doc = ...;
    Element root = doc.getRootElement();
    Elements currencies = root.getChildElements("currency");
    for (int i = 0; i < currencies.size(); i++) {
        Element node = currencies.get(i);
        map.put(node.getAttributeValue("code"), node.getValue());
    }

One interesting observation is that this is the first version of this code that doesn't involve any Java casting. In the absence of Java generics, XOM has defined its own collection class Elements (rather like DOM's NodeList) to achieve type safety.

It won't come as a surprise to learn that XOM also comes with converters to and from DOM and SAX; you can build a document by creating a Builder using any SAX parser (represented by an XMLReader object). It also has interfaces to XSLT and XPath processors; and, of course, XOM also has its own serializer.

Closely related to XOM, but a quite separate package from a different developer (Wolfgang Hoschek) is a toolkit called NUX. This integrates XOM tightly with the Saxon XPath and XQuery engine and with StAX parsers such as Woodstox. It offers binary XML serialization options and a number of features geared towards processing large XML documents at high speed in streaming mode. For users with large documents and a need for high performance, the combination of XOM, Saxon, and NUX is almost certainly the most effective solution available unless the user wants to pay large amounts of money.

So the keywords for XOM are strict conformance to XML standards and high performance engineering, especially for large documents.

You can find out more about XOM at xom.nu/, and about NUX at http://www.dsd.lbl.gov/nux/. Saxon (which has both open source and commercial versions) is at saxonica.com/.

This concludes our tour of the Java tree models. You don't have any reference information to help you actually program against these models, but there's enough here to help you decide which is right for you and to find the detail you need online.

In the next section of the chapter, you move up a level in the tier of Java XML interfaces, to discuss data binding.

Java/XML Data Binding

So far, the interfaces you've been looking at don't try to hide the fact that the data you are working with is XML. Documents consist of elements, attributes, text nodes, and the like. When you manipulate them at the level of a sequential parser API like SAX or StAX, or at the level of a document object model like DOM, JDOM, DOM4J, or XOM, you must understand what elements, attributes, and text nodes are, and how they relate to each other.

The idea of data binding is to move up a level. To find out the zip code of an address, you no longer need to call element("address").getAttributeValue("zipcode"); instead you call address.getZipCode().

In other words, data binding maps (or binds) the elements and attributes of the XML document representation to objects and methods in your Java program whose structure and representation reflect the semantics of the data you are modeling, rather than reflecting the XML data model.

It's probably true to say that data binding works better for data-oriented XML than for document-oriented XML. In fact, some data binding products have difficulty coping with some of the constructs frequently found in document-oriented XML, such as comments and mixed content. These products aren't actually aiming to achieve fidelity to the XML representation. A number of products and interfaces implement this general idea. The accepted standard, however, is the JAXB interface, also referred to on occasion as JSR (Java Specification Request) 31. JAXB brings together the best ideas from a number of previous technologies, but it hasn't completely displaced them: You may find yourself working with the open-source Castor product, for example, which has similar concepts but is not JAXB-compliant. Other products you might come across include JBind, Quick, and Zeus. Some more specialized products use similar concepts: The C24 Integration Objects package, for example, focuses on conversions between different XML-based and non-XML formats for financial messages. It achieves the interoperability by means of a data binding framework.

JAXB is technically a specification rather than a product, but for many users the term is synonymous with the reference implementation, which comes as part of the Java Web Services Developers Pack (JWSDP). The JAXB 2.0 specification was finalized during 2006, but because this is only a broad overview, you don't need to be too concerned with the differences between it and the earlier version.

Data binding generally starts with an XML Schema description of the XML data. Among the considerable advantages to using an XML Schema, rather than (for example) a DTD, the most obvious one is that XML Schema defines a rich set of data types for the content of elements and attributes (dates, numbers, strings, Booleans, URIs, durations, and so on). Less obviously, XML Schema has also gone to great lengths to ensure that when you validate a document against a schema you don't get just a yes/no answer and perhaps some error messages. You get a copy of the document (the so-called PSVI, or post-schema-validation-infoset) in which every element and attribute has been annotated with type information derived from the schema. In fact, some of the restrictions in XML Schema (that are so painful when you use it for validation) are there explicitly to support data binding. The notorious UPA (Unique Particle Attribution) rule says that a model is ambiguous (and, therefore, invalid) if more than one way of matching the same data exists. Data binding requires conversion of an XML element to a Java object, and the schema determines which class of object you end up with. It's not enough, for example, to say that a book can consist of a sequence of chapters followed by a sequence of appendices; you must be able to decide without any ambiguity (and also without backtracking) whether the element you're now looking at is a chapter or an appendix. For Relax NG, it would be good enough to say, "If it could be either, that's fine; the document is valid." But for XML Schema, that's not good enough because the XML Schema is about more than validation.

I can explain it with an example. Here's an XML structure containing a name and address:

    <customer>
      <name>
        <first>Jean</first>
        <last>Dupont</last>
      </name>
      <address>
        <line>18bis rue d'Anjou</line>
        <line/>
        <postal-code>75008</postal-code>
        <city>Paris</city>
        <country>France</country>
      </address>
    </customer>

Mapped to Java, you might want to see:

    interface Customer {
        PersonalName getName();
        Address getAddress();
    }

    interface PersonalName {
        String getFirstName();
        String getLastName();
    }

    interface Address {
        List<String> getAddressLines();
        String getPostalCode();
        String getCity();
        String getCountry();
    }

It can get more complicated than this, of course. For example, one might want ID/IDREF links in the XML to be represented as methods that traverse the relationships between Java objects.
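To show what a binding layer spares you from writing, here is a hand-coded sketch that populates an address object from the customer document using plain DOM calls. It is not what JAXB or any other tool generates; the class BindingSketch, its nested Address class, and the method unmarshalAddress() are assumptions made for illustration, and only part of the structure is mapped to keep the example short.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical hand-written binding for the address part of the customer document.
class BindingSketch {

    static final String SAMPLE = "<customer><name><first>Jean</first><last>Dupont</last></name>"
            + "<address><line>18bis rue d'Anjou</line><line/>"
            + "<postal-code>75008</postal-code><city>Paris</city>"
            + "<country>France</country></address></customer>";

    // The application-facing object: no trace of elements or attributes in its API.
    static final class Address {
        private final List<String> addressLines = new ArrayList<>();
        private String postalCode;
        private String city;

        List<String> getAddressLines() { return addressLines; }
        String getPostalCode() { return postalCode; }
        String getCity() { return city; }
    }

    // Text of the first descendant element with the given name, or null if there is none.
    private static String text(Element parent, String name) {
        NodeList found = parent.getElementsByTagName(name);
        return found.getLength() == 0 ? null : found.item(0).getTextContent();
    }

    // Builds an Address from the first address element below the document root.
    static Address unmarshalAddress(String xml) {
        Element root;
        try {
            root = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml))).getDocumentElement();
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse the customer document", e);
        }
        Element addressElement = (Element) root.getElementsByTagName("address").item(0);
        Address address = new Address();
        NodeList lines = addressElement.getElementsByTagName("line");
        for (int i = 0; i < lines.getLength(); i++) {
            address.addressLines.add(lines.item(i).getTextContent());
        }
        address.postalCode = text(addressElement, "postal-code");
        address.city = text(addressElement, "city");
        return address;
    }

    public static void main(String[] args) {
        Address address = unmarshalAddress(SAMPLE);
        System.out.println(address.getPostalCode() + " " + address.getCity());
    }
}
```

Every line of unmarshalAddress() is mechanical and could be derived from a schema, which is exactly the work a data binding tool automates.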

Rather than starting with an XML document instance and converting it to a set of Java objects, a data binding tool starts with an XML schema and converts it to a set of Java classes. These classes can then be compiled into executable code. Typically, the executable code contains methods that construct the Java objects from the XML document instance (a process called unmarshalling) or that create the XML document instance from the Java objects (called marshalling).

The process of creating class definitions from a schema can be fully automated. You then end up with a default representation of each construct in the schema. However, you can often get a much more effective representation of the schema if you take the trouble to supply extra configuration information to influence the way the class definitions are generated. JAXB provides two ways of doing this: You can add the information as annotations to the source schema itself, or you can provide it externally. Using external configuration information is more appropriate if you don't control the schema (for example, if you are defining a Java binding for an industry schema such as FpML).

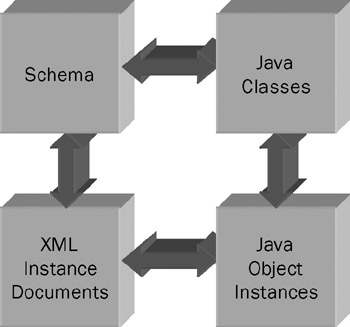

Although it's usual to start with an XML Schema, JAXB also allows you to work in the opposite direction. Instead of generating a set of Java classes from a schema, you can generate a schema from a set of Java classes. The model is pleasingly symmetric, as you can see from Figure 16-5.

Figure 16-5

In JAXB, a Java class is generally derived from an element definition in the schema. Most of the customization options are concerned with how the Java class name relates to the name of the XML element declaration. This is used to get a name that fits in with Java naming conventions, and also to resolve conflicts when the same name is used for more than one purpose.

Java package names are derived by default from the XML namespace URI. For example, the namespace URI http://www.example.com/ipo converts to the Java package name com.example.ipo. This works well if you stick to the recommended naming conventions, but very badly if you don't!
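The idea behind the derivation can be sketched in a few lines. This is a deliberately simplified illustration, not the algorithm from the JAXB specification, which handles many more cases (URNs, file extensions, reserved words, illegal characters). The class name PackageNameSketch and the method packageFor() are assumptions.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical, simplified version of the namespace-to-package mapping.
class PackageNameSketch {

    static String packageFor(String namespaceUri) {
        URI uri = URI.create(namespaceUri);
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("This sketch only handles URIs with a host name");
        }
        // "www.example.com" becomes [www, example, com]
        List<String> parts = new ArrayList<>(Arrays.asList(uri.getHost().toLowerCase().split("\\.")));
        if (parts.size() > 1 && parts.get(0).equals("www")) {
            parts.remove(0);                          // drop the leading "www"
        }
        Collections.reverse(parts);                   // [com, example]
        for (String segment : uri.getPath().split("/")) {
            if (!segment.isEmpty()) {
                parts.add(segment.toLowerCase());     // append each path step
            }
        }
        return String.join(".", parts);
    }

    public static void main(String[] args) {
        System.out.println(packageFor("http://www.example.com/ipo"));
    }
}
```

A namespace such as urn:x-acme:orders has no host name to reverse, which hints at why unconventional namespace URIs produce awkward package names.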

Within the package, a number of interfaces represent the complex types defined in the schema, and a number of interfaces represent the element declarations. A class, ObjectFactory, allows instances of the elements to be created. Further classes are generated to represent enumeration data types in the schema by using the Java type-safe enumeration pattern.

Within an element, attributes and simple-valued child elements are represented as properties (with getter and setter methods). Complex-valued child elements map to further Java classes (this includes any element that is allowed to have attributes, as well as elements that can have child elements).

The following table shows a default mapping of the built-in types defined in XML Schema to data types in Java. Some of these are straightforward; others require more discussion.

| XML Schema Type | Java Data Type |
|---|---|
| xs:string | java.lang.String |
| xs:integer | java.math.BigInteger |
| xs:int | int |
| xs:long | long |
| xs:short | short |
| xs:decimal | java.math.BigDecimal |
| xs:float | float |
| xs:double | double |
| xs:boolean | boolean |
| xs:byte | byte |
| xs:QName | javax.xml.namespace.QName |
| xs:dateTime | javax.xml.datatype.XMLGregorianCalendar |
| xs:base64Binary | byte[] |
| xs:hexBinary | byte[] |
| xs:unsignedInt | long |
| xs:unsignedShort | int |
| xs:unsignedByte | short |
| xs:time | javax.xml.datatype.XMLGregorianCalendar |
| xs:date | javax.xml.datatype.XMLGregorianCalendar |
| xs:gYear, xs:gYearMonth, xs:gMonth, xs:gMonthDay, xs:gDay | javax.xml.datatype.XMLGregorianCalendar |
| xs:anySimpleType | java.lang.Object (for elements), java.lang.String (for attributes) |
| xs:duration | javax.xml.datatype.Duration |
| xs:NOTATION | javax.xml.namespace.QName |
| xs:anyURI | java.lang.String |

Notice how, in some cases, no suitable class is available in the core Java class library, so new classes had to be invented. This is particularly true for the date and time types because although a lot of commonality exists between the XML Schema dates and times and classes such as java.util.Date, many differences exist in the details of how things such as timezones are handled.
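The following sketch shows why a dedicated class is needed. XMLGregorianCalendar, created through the JDK's DatatypeFactory, can represent a value with an explicit timezone, a value with no timezone at all, and a date with no time component, distinctions that java.util.Date cannot express. The class name SchemaDateDemo and its parse() helper are illustrative assumptions.

```java
import javax.xml.datatype.DatatypeConfigurationException;
import javax.xml.datatype.DatatypeConstants;
import javax.xml.datatype.DatatypeFactory;
import javax.xml.datatype.XMLGregorianCalendar;

// Hypothetical demo class: XML Schema date and time values as Java objects.
class SchemaDateDemo {

    // Parses any of the XML Schema date/time lexical forms.
    static XMLGregorianCalendar parse(String lexical) {
        try {
            return DatatypeFactory.newInstance().newXMLGregorianCalendar(lexical);
        } catch (DatatypeConfigurationException e) {
            throw new IllegalStateException("No DatatypeFactory implementation available", e);
        }
    }

    public static void main(String[] args) {
        XMLGregorianCalendar stamp = parse("2006-05-01T10:30:00+02:00");
        // The timezone is kept as an offset in minutes, not converted to a local zone.
        System.out.println(stamp.getXMLSchemaType() + " offset " + stamp.getTimezone());

        XMLGregorianCalendar day = parse("2006-05-01");
        // A plain date has no hour and no timezone; both report FIELD_UNDEFINED.
        System.out.println(day.getXMLSchemaType());
        System.out.println(day.getHour() == DatatypeConstants.FIELD_UNDEFINED);
        System.out.println(day.getTimezone() == DatatypeConstants.FIELD_UNDEFINED);
    }
}
```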

One might have expected xs:anyURI to correspond to java.net.URI. It doesn't because the detailed rules for the two are different: xs:anyURI accepts URIs whether or not they have been %HH encoded, whereas java.net.URI corresponds more strictly to the RFC definitions.
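The strictness of java.net.URI is easy to observe. In this small sketch (the class name UriStrictnessDemo is an assumption), a value containing an unescaped space, which is acceptable as an xs:anyURI, is rejected by the URI constructor, while the escaped form is accepted.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical demo class: java.net.URI rejects values that xs:anyURI would accept.
class UriStrictnessDemo {

    // True if java.net.URI is willing to parse the value.
    static boolean acceptedByJavaUri(String value) {
        try {
            new URI(value);
            return true;
        } catch (URISyntaxException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(acceptedByJavaUri("http://example.com/my file.xml"));
        System.out.println(acceptedByJavaUri("http://example.com/my%20file.xml"));
    }
}
```

Mapping xs:anyURI to java.lang.String means that a document with the unescaped form can still be unmarshalled without error.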

For derived types, the rules are quite complex. For example, a user-defined type in the schema derived from xs:integer whose value range is within the range of a Java int is mapped to a Java int.

List-valued elements and attributes (that is, a value containing a space-separated sequence of simple items) are represented by a Java List. Union types in the schema are represented using a flag property in the Java object to indicate which branch of the union is chosen.
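As a rough illustration of the list case, the sketch below converts the lexical form of a list of integers into a Java List. It shows the general idea only and is not code taken from any binding tool; the class name ListValueDemo and the method parseIntList() are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class: a whitespace-separated list value as a Java List.
class ListValueDemo {

    static List<Integer> parseIntList(String lexical) {
        List<Integer> items = new ArrayList<>();
        String trimmed = lexical.trim();
        if (trimmed.isEmpty()) {
            return items;                             // an empty value is an empty list
        }
        for (String token : trimmed.split("\\s+")) {  // items are separated by whitespace
            items.add(Integer.valueOf(token));
        }
        return items;
    }

    public static void main(String[] args) {
        System.out.println(parseIntList(" 10 20\n30 "));
    }
}
```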

When you do the mapping in the opposite direction, from Java to XML Schema, you aren't restricted to the types in the right-hand column of the preceding table. For example, if your data uses the java.util.Date type, this is mapped to an xs:dateTime in the schema.
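If you want to see what such a conversion involves, one way to do it by hand with JDK classes is sketched below. JAXB's own conversion may differ in detail (for example, in the timezone it chooses), and the class name DateToSchemaDemo and the decision to use UTC are assumptions made for this example.

```java
import java.util.Date;
import java.util.GregorianCalendar;
import java.util.TimeZone;
import javax.xml.datatype.DatatypeConfigurationException;
import javax.xml.datatype.DatatypeFactory;
import javax.xml.datatype.XMLGregorianCalendar;

// Hypothetical demo class: turning a java.util.Date into an xs:dateTime value.
class DateToSchemaDemo {

    static XMLGregorianCalendar toDateTime(Date date) {
        // A Date is just an instant; a calendar supplies the timezone (UTC assumed here).
        GregorianCalendar calendar = new GregorianCalendar(TimeZone.getTimeZone("UTC"));
        calendar.setTime(date);
        try {
            return DatatypeFactory.newInstance().newXMLGregorianCalendar(calendar);
        } catch (DatatypeConfigurationException e) {
            throw new IllegalStateException("No DatatypeFactory implementation available", e);
        }
    }

    public static void main(String[] args) {
        // toXMLFormat() yields the lexical form that would appear in the document.
        System.out.println(toDateTime(new Date()).toXMLFormat());
    }
}
```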

After you have established the Java class definitions, customized as far as you consider necessary, you can unmarshall an XML document to populate the classes with instances. Suppose that you started with the sample purchase order schema published in the W3C primer (http://www.w3.org/TR/xmlschema-0/), and that the relevant classes are generated into package accounts.ipo.

Start by creating a JAXBContext object, naming this package, and then adding an Unmarshaller:

    JAXBContext jaxb = JAXBContext.newInstance("accounts.ipo");
    Unmarshaller u = jaxb.createUnmarshaller();

Now you can unmarshall a purchase order from its XML representation:

    PurchaseOrder ipo = (PurchaseOrder) u.unmarshal(new FileInputStream("order.xml"));

And now you can manipulate this as you would any other Java object:

    // Print the quantity and price of every item in the purchase order.
    Items items = ipo.getItems();
    for (Iterator iter = items.getItemList().iterator(); iter.hasNext();) {
        ItemType item = (ItemType) iter.next();
        System.out.println(item.getQuantity());
        System.out.println(item.getPrice());
    }

Marshalling (converting a Java object to the XML representation) works in a very similar way using the method

Marshaller.marshal(Object jaxbElement, ...);

Marshalling involves converting values to a lexical representation, and this can be customized with the help of a DatatypeConverter object supplied by the caller.

I hope this overview of JAXB gives you the flavor of the technology and helps you to decide whether it's appropriate for your needs. If you're handling data rather than documents, and if you have a lot of business logic processing the data in Java form, then JAXB or other data binding technologies can certainly save you a lot of effort. This suggests that they are ideal in a world where applications are Java-centric, with XML being used around the edges to interface the applications to the outside world. It's probably less appropriate in an application architecture where XML is central. In that case, you are probably writing the business logic in XSLT or XQuery, and that brings us to the subject of the next and final section of this chapter.

Controlling XSLT, XQuery, and XPath Processing from Java

XSLT, XQuery, and XPath are well-covered in other chapters of this book, and none of that information is repeated here. Rather, the aim here is to show how these languages work together with Java.

In many cases, Java is used simply as a controlling framework to collect the appropriate parameters, fire off an XSLT transformation, and dispose of the results. For example, you may have a Java servlet whose only real role in life is to deal with an incoming HTTP request by running the appropriate transformation. Today, that is probably an XSLT transformation. In the future, XQuery may play an increasing role, especially if you use an XML database.

The key here is to decide which language the business logic is to be written in. You can write it in Java, and then use XSLT simply to format the results. My own inclination, however, is to use XSLT and XQuery as fourth-generation application development languages, containing the bulk of the real business logic. This relegates Java to the role of integration glue, used to bind all the different technology components together.

It is possible to mix applications written in different languages, however. The best approach to doing this in the XML world is the pipeline pattern: Write a series of components, each of which transforms one XML document into another; and then string them together, one after another, in a pipeline. The different components don't have to use the same technology: Some can be XSLT, some XQuery, and some Java.

The components in a pipeline must be managed using some kind of control structure. You can write this yourself in Java; or you can use a high-level pipeline language. The pipeline might well start with an XForms processor that accepts user input from a browser window and feeds it into the pipeline for processing. Different stages in the pipeline might handle input validation (perhaps using a schema processor), database access, and output formatting. No standards exist for high-level pipeline languages yet, but the W3C has started work in this area, and in the meantime, a number of products are available (take a look at Orbeon for an example: http://www.orbeon.com). You can also implement the design pattern using frameworks such as Cocoon.

Why are pipelines so effective? First, they provide a very effective mechanism for component reuse. By splitting your work into a series of independent stages, the code for each stage becomes highly modular and can be deployed in other pipelines. The second reason is that pipelines can be dynamically reconfigured to meet different processing scenarios. For example, if management asks for a report about particular activity, it's easy to add a step at the appropriate place in the pipeline that monitors the relevant data flow and "siphons off" a summarized data feed without changing the existing application except to reconfigure the pipeline.
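As a rough illustration of the pipeline pattern, here is a minimal sketch in which Java acts purely as the control structure and each stage is an XSLT stylesheet. The class name SimplePipeline and the two tiny stylesheets are hypothetical, invented only for this example; a real pipeline would more likely load its stages from files and pass SAX events between them rather than building an intermediate DOM at every step.

```java
import java.io.StringReader;
import java.io.StringWriter;
import java.util.ArrayList;
import java.util.List;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Source;
import javax.xml.transform.Templates;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMResult;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;

class SimplePipeline {
    private final TransformerFactory factory = TransformerFactory.newInstance();
    private final List<Templates> stages = new ArrayList<>();

    /** Compiles an XSLT stylesheet held in a string and appends it as the next stage. */
    SimplePipeline addStage(String xslt) throws TransformerException {
        stages.add(factory.newTemplates(new StreamSource(new StringReader(xslt))));
        return this;
    }

    /** Feeds the input through every stage in order and serializes the final document. */
    String run(String inputXml) throws TransformerException {
        Source current = new StreamSource(new StringReader(inputXml));
        for (Templates stage : stages) {
            DOMResult intermediate = new DOMResult();
            stage.newTransformer().transform(current, intermediate);
            // The output of this stage becomes the input of the next one.
            current = new DOMSource(intermediate.getNode());
        }
        Transformer serializer = factory.newTransformer(); // identity transformation
        serializer.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
        StringWriter out = new StringWriter();
        serializer.transform(current, new StreamResult(out));
        return out.toString();
    }

    // Two hypothetical stages: rename <order> to <po>, then wrap the value in <checked>.
    static final String RENAME = stylesheet("/order", "po");
    static final String CHECK = stylesheet("/po", "checked");

    private static String stylesheet(String match, String outputElement) {
        return "<xsl:stylesheet version='1.0' xmlns:xsl='http://www.w3.org/1999/XSL/Transform'>"
             + "<xsl:template match='" + match + "'>"
             + "<" + outputElement + "><xsl:value-of select='.'/></" + outputElement + ">"
             + "</xsl:template></xsl:stylesheet>";
    }

    public static void main(String[] args) throws TransformerException {
        String result = new SimplePipeline().addStage(RENAME).addStage(CHECK).run("<order>42</order>");
        System.out.println(result);
    }
}
```

Adding a monitoring step of the kind described above then amounts to one more addStage() call at the appropriate point, with no change to the existing stages.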

The reason for discussing pipelines here (yet again) is that this kind of thinking has influenced the design of the APIs you are about to look at, in particular the JAXP API for controlling XSLT transformations.

XPath APIs fit less well into the structure of this section. If you're using XPath from Java on its own, you're probably using it in conjunction with one of the object models discussed in the second section of this chapter (DOM, JDOM, DOM4J or XOM). That's likely to be a Java-centric architecture, using XPath essentially as a way of navigating around the tree-structured XML representation without all the tedious navigation code that's needed otherwise. For that reason, this section does not cover XPath in any detail. Instead, it focuses on the JAXP interface for XSLT, and the XQJ interface for XQuery.

These APIs all have very different origins. The JAXP transformation API was created (originally under the name TrAX) primarily by the developers of the first four XSLT 1.0 engines to appear on the Java scene around 1999–2000: James Clark's original xt processor, Xalan, Saxon, and Oracle. All these had fairly similar APIs, and TrAX was developed very informally to bring them together. It only became a reality, however, when Sun decided to adopt it and formalize the specification as part of the JDK, developing the XML parser part of the JAXP API at the same time. This history shows why one of the important features of JAXP is that it enables users to select from multiple implementations (that is to choose an XML parser and an XSLT processor) without having compile-time dependencies on any implementation in their code.

XQJ, the XQuery API for Java, was a much later development than JAXP, just as XQuery standardization itself lags behind XSLT by about seven years (and counting). By this stage, the Java Community Process was much more formalized (and politicized), so development was slower and less open. Like JAXP, however, development was driven by vendors. The key participants in the XQJ process were database vendors, and as a result, the specification has a strong JDBC-like feel to it. It supports the notion that there is a client and a server who communicate through a Connection. Some XQJ implementations might not actually work that way internally, but that's the programming style.

Let's now look at these two families of interfaces in turn.

JAXP: The Java API for XML Processing

In its original form, JAXP contained two packages: the javax.xml.parsers package to control XML parsing, and javax.xml.transform to control XSLT transformation and serialization. More recently, other packages have been added, notably javax.xml.validation to control schema validation (not only XML Schema, but other schema languages such as Relax NG), and javax.xml.xpath for standalone XPath processing. In addition, the javax.xml.datatype package contains classes defining Java-to-XML data type mappings (you saw these briefly in the previous section on JAXB data binding), and the javax.xml.namespace package contains a couple of simple utility classes for handling namespaces and QNames.

Be a bit careful about which versions of these interfaces you are using. Java JDK 1.3 was the first version to include XML processing in the JDK, which was achieved by including JAXP 1.1. (Previously, JAXP had been a component that you had to install separately.) JDK 1.4 moved forwards and incorporated JAXP 1.2, whereas JDK 1.5 (or J2SE 5.0 as the Sun marketing people would like us to call it) rolled on to JAXP 1.3. If you want the schema validation and XPath support, or support for DOM Level 3, you need JAXP 1.3. This comes as standard with JDK 1.5, as already mentioned, but you can also download it to run on JDK 1.4. Unfortunately, it's a messy download and installation because it only comes as part of the very large Java Web Services Developer Pack product. To make matters worse, if you develop an application that uses JAXP 1.3, you're not allowed to distribute JAXP 1.3 with the application. Your end users have to download it and install it individually. Perhaps Sun is just trying to encourage you to move forward to J2SE 5.0.

The same story occurs again with JAXP 1.4, which contains the StAX Streaming API and pull parser discussed at the beginning of this chapter. JAXP 1.4 is bundled with J2SE 6.0. You can get a freestanding download for J2SE 5.0, but it changes every week, so I'm not sure I'd recommend it for serious work.

Also note that when you download or install JAXP, you get both the interface and a reference implementation (usually the Apache implementation). This generates some confusion about whether JAXP is merely an API or a specific product. In fact, it's the API (that's what the "A" stands for), and the product serves as a reference implementation because an API with nothing behind it is very little use.

You'll look at these packages individually in due course. But first, look at the factory mechanism which is shared (more-or-less) by all these packages, for choosing an implementation.

The JAXP Factory Mechanism

The assumption behind the JAXP factory mechanism is that you, as the application developer, want to choose the particular processor to use (whether it's an XML parser, an XSLT processor, or a schema validator). But you also want the capability to write portable code that works with any implementation, which means your code must have no compile-time dependencies on a particular product's implementation classes: It should be possible to switch from one implementation to another simply by changing a configuration file.

The same mechanism is used for all these processors, but the XSLT engine is the example here. JAXP comes bundled with the Apache Xalan engine, but many people use it with other processors, notably Saxon because that supports the more advanced XSLT 2.0 language specification.

You start off by instantiating a Factory class:

TransformerFactory factory = TransformerFactory.newInstance();

This looks simple, but it's actually quite complex. TransformerFactory is an abstract class, delivered as part of the JAXP package. It's actually a factory in more than one sense. The real TransformerFactory class is a factory for its subclasses: its main role is to instantiate one of its subclasses, and there is one in every JAXP-compliant XSLT processor. Its subclasses, as you see later, act as factories for the other classes in the particular implementation. The Saxon implementation, for example, is class net.sf.saxon.TransformerFactoryImpl.

So if you organize things so that Saxon is loaded, then you find that the result of this call is actually an instance of net.sf.saxon.TransformerFactoryImpl. It's often a good idea to put a message into your code at this point to print out the name of the actual class that was loaded, because many problems can occur if you think you're running one processor and you're actually running another. Another tip is to set the Java system property jaxp.debug to the value true (you can do this with the switch -Djaxp.debug=true on the command line). This gives you a wealth of diagnostic information that shows which classes are being loaded and why.
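The tip about printing the name of the class that was actually loaded takes only a couple of lines. The following is a minimal sketch; the class name WhichTransformerFactory is invented for the example, and the name it prints depends entirely on your JDK, classpath, and system properties.

```java
import javax.xml.transform.TransformerFactory;

class WhichTransformerFactory {
    /** Returns the class name of the implementation that JAXP actually selected. */
    static String loadedImplementation() {
        TransformerFactory factory = TransformerFactory.newInstance();
        return factory.getClass().getName();
    }

    public static void main(String[] args) {
        System.out.println("TransformerFactory implementation: " + loadedImplementation());
    }
}
```

Running it once with and once without -Djavax.xml.transform.TransformerFactory=... on the command line is a quick way to confirm that your configuration is having the effect you expect.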

How does the master TransformerFactory decide which implementation-specific TransformerFactory to load? This is where things can get tricky. The procedure works like this:

- Look to see if there is a Java system property named javax.xml.transform.TransformerFactory. If there is, its value should be the name of a subclass of TransformerFactory. For example, if the value of the system property is net.sf.saxon.TransformerFactoryImpl, Saxon should be loaded.

- Look for the properties file lib/jaxp.properties in the directory where the JRE (Java runtime) resides. This can contain a value for the property javax.xml.transform.TransformerFactory, which again should be the required implementation class.

- Search the classpath for a JAR file with appropriate magic in its JAR file manifest. You don't need to worry here what the magic is: The idea is simply that if Saxon is on your classpath, Saxon gets loaded.

- If all else fails, the default TransformerFactory is loaded. In practice, at least for the Sun JDK, this means Xalan.

A couple of problems can arise in practice, usually when you have multiple XSLT processors around. If you've got more than one XSLT processor on the classpath, searching the classpath isn't very reliable. It can also be difficult to pick up your chosen version of Xalan, rather than the version that shipped with the JDK (which isn't always the most reliable).

As far as choosing the right processor is concerned, my advice is to set the system property. Searching the classpath is simply too unpredictable, especially in a complex Web services environment, where several applications may actually want to make different choices. In fact, if the environment gets really tough, it can be worth ignoring the factory mechanism entirely and just instantiating the chosen factory directly:

TransformerFactory factory = new net.sf.saxon.TransformerFactoryImpl();

But if you don't want that compile time dependency, setting the system property within your code seems to work just as well:

System.setProperty("javax.xml.transform.TransformerFactory", "net.sf.saxon.TransformerFactoryImpl");
TransformerFactory factory = TransformerFactory.newInstance();

Another problem with the JAR file search is that it can be very expensive. With a lot of JAR files on the classpath, it sometimes takes longer to find an XML transformation engine than to do the transformation. Of course, you can and should reduce the impact of this by reusing the factory. You should never have to instantiate it more than once during the whole time the application remains running.

You've seen the mechanism with regard to the TransformerFactory for XSLT processors, and the same approach applies (with minor changes) to factories for SAX and DOM parsers, schema validators, XPath engines, and StAX parsers.

One difference is that some of these factories are parameterized. For the schema validator, you can ask for a SchemaFactory that handles a particular schema language (for example XML Schema or Relax NG), and for XPath you can request an XPath engine that works with a particular document object model (DOM, JDOM, and so on). These choices are expressed through URIs passed as string-valued parameters to the newInstance() method on the factory class. Although they appear to add flexibility, a lot more can also go wrong. One of the things that can go wrong is that some processors have been issued with manifests that the JDK doesn't recognize, because of errors in the documentation. Again, the answer is to keep things as simple as you can by setting system properties explicitly.
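To make the parameterized factories concrete, here is a short sketch that asks for a SchemaFactory for XML Schema and an XPath engine for the DOM object model, using the URI constants that JAXP defines for the purpose. The class and method names (ParameterizedFactories, firstTitle) and the sample document shape are assumptions made for the example.

```java
import java.io.StringReader;
import javax.xml.XMLConstants;
import javax.xml.validation.SchemaFactory;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import javax.xml.xpath.XPathFactoryConfigurationException;
import org.xml.sax.InputSource;

class ParameterizedFactories {
    /** Requests a factory for the W3C XML Schema language, identified by its namespace URI. */
    static SchemaFactory xmlSchemaFactory() {
        return SchemaFactory.newInstance(XMLConstants.W3C_XML_SCHEMA_NS_URI);
    }

    /** Requests an XPath engine that navigates the DOM object model. */
    static XPath domXPath() throws XPathFactoryConfigurationException {
        return XPathFactory.newInstance(XPathConstants.DOM_OBJECT_MODEL).newXPath();
    }

    /** Evaluates a fixed path against a document supplied as a string (hypothetical document shape). */
    static String firstTitle(String xml)
            throws XPathFactoryConfigurationException, XPathExpressionException {
        return domXPath().evaluate("/books/book[1]/title", new InputSource(new StringReader(xml)));
    }

    public static void main(String[] args) throws Exception {
        System.out.println(xmlSchemaFactory().getClass().getName());
        System.out.println(firstTitle("<books><book><title>XSLT</title></book></books>"));
    }
}
```

If either newInstance() call fails in your environment, that is usually a sign that no implementation on the classpath has registered itself for the requested URI.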

The JAXP Parser API

The SAX and DOM specifications already existed before JAXP came along, so the only contribution made by the JAXP APIs is to provide a factory mechanism for choosing your SAX or DOM implementation. Don't feel obliged to use it unless you really need the flexibility it offers to deploy your application with different parsers. Even then, you might find better approaches: For example SAX2 introduced its own factory mechanism shortly after JAXP first came out, and many people suggest using the SAX2 mechanism instead (the XMLReaderFactory class in package org.xml.sax.helpers).

For SAX, the factory class is called javax.xml.parsers.SAXParserFactory. Its newInstance() method returns an implementation-specific SAXParserFactory; you can then call the method newSAXParser() on that to return a SAXParser object, which is in fact just a thin wrapper around the org.xml.sax.XMLReader object that does the real parsing.

The DOM mechanism is similar. The factory class is named javax.xml.parsers.DocumentBuilderFactory; after you have an implementation-specific DocumentBuilderFactory, you can call newDocumentBuilder() to get a DocumentBuilder object, which has a parse() method that takes lexical XML as input and produces a DOM tree as output.
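The two factory chains just described look like this in practice. This is a sketch only: the class name JaxpParsers and its two helper methods are invented for the example, and the input is read from a string so that the code is self-contained.

```java
import java.io.IOException;
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.parsers.SAXParser;
import javax.xml.parsers.SAXParserFactory;
import org.w3c.dom.Document;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;
import org.xml.sax.helpers.DefaultHandler;

class JaxpParsers {
    /** SAX route: SAXParserFactory -> SAXParser -> events delivered to a handler. */
    static int countElementsWithSax(String xml)
            throws ParserConfigurationException, SAXException, IOException {
        SAXParserFactory factory = SAXParserFactory.newInstance();
        factory.setNamespaceAware(true);
        SAXParser parser = factory.newSAXParser();
        final int[] count = {0};
        parser.parse(new InputSource(new StringReader(xml)), new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes atts) {
                count[0]++;
            }
        });
        return count[0];
    }

    /** DOM route: DocumentBuilderFactory -> DocumentBuilder -> Document tree in memory. */
    static String rootNameWithDom(String xml)
            throws ParserConfigurationException, SAXException, IOException {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true);
        DocumentBuilder builder = factory.newDocumentBuilder();
        Document doc = builder.parse(new InputSource(new StringReader(xml)));
        return doc.getDocumentElement().getLocalName();
    }

    public static void main(String[] args) throws Exception {
        String xml = "<a><b/><c/></a>";
        System.out.println(countElementsWithSax(xml) + " elements, root is " + rootNameWithDom(xml));
    }
}
```

Note that both factories default to being namespace-unaware, so calling setNamespaceAware(true) is almost always the first thing you want to do.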

After you've instantiated your parser you're in well-charted waters that are discussed elsewhere in this book.

If you're adventurous, you can write your own parser. Your creation doesn't have to do the hard work of parsing XML; it can delegate that task to a real parser written by experts. It can just pretend to be a parser by implementing the relevant interfaces and making itself accessible to the factory classes. You can exploit this idea in a number of ways. Your parser, when it is loaded, can initialize configuration settings on the real parser underneath, so your parser is, in effect, just a preconfigured version of the standard system parser. Also, your parser can filter the communication between the application and the real parser underneath. For example, you could change all the attribute names to uppercase or you could remove all the whitespace between elements. This is just another way of exploiting the pipeline idea, another way of configuring the components in a pipeline so that each component does just one job. SAX provides a special class XMLFilterImpl to help you write a pseudo-parser in this way.
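Here is a minimal sketch of the filtering idea, using XMLFilterImpl to change attribute names to uppercase on their way from the real parser to the application. The class name UppercaseAttributeFilter and the helper attributeNamesSeen() are invented for the example, and the sketch assumes unprefixed attributes (it would also uppercase a namespace prefix, which is rarely what you want).

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;
import org.xml.sax.XMLReader;
import org.xml.sax.helpers.AttributesImpl;
import org.xml.sax.helpers.DefaultHandler;
import org.xml.sax.helpers.XMLFilterImpl;

class UppercaseAttributeFilter extends XMLFilterImpl {
    UppercaseAttributeFilter(XMLReader realParser) {
        super(realParser); // the real parser does the hard work underneath
    }

    @Override
    public void startElement(String uri, String localName, String qName, Attributes atts)
            throws SAXException {
        // Copy the attributes, rename each one, and pass the event downstream.
        AttributesImpl renamed = new AttributesImpl(atts);
        for (int i = 0; i < renamed.getLength(); i++) {
            renamed.setLocalName(i, renamed.getLocalName(i).toUpperCase(Locale.ROOT));
            renamed.setQName(i, renamed.getQName(i).toUpperCase(Locale.ROOT));
        }
        super.startElement(uri, localName, qName, renamed);
    }

    /** Parses the document through the filter and reports the attribute names the application sees. */
    static List<String> attributeNamesSeen(String xml)
            throws ParserConfigurationException, SAXException, IOException {
        SAXParserFactory factory = SAXParserFactory.newInstance();
        factory.setNamespaceAware(true);
        XMLReader realParser = factory.newSAXParser().getXMLReader();
        final List<String> names = new ArrayList<>();
        UppercaseAttributeFilter filter = new UppercaseAttributeFilter(realParser);
        filter.setContentHandler(new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes atts) {
                for (int i = 0; i < atts.getLength(); i++) {
                    names.add(atts.getQName(i));
                }
            }
        });
        filter.parse(new InputSource(new StringReader(xml)));
        return names;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(attributeNamesSeen("<item id='1' colour='red'/>"));
    }
}
```

Because the filter is itself an XMLReader, the application code that consumes it does not need to know whether it is talking to a real parser or to a chain of filters.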

The JAXP Transformation API

The interface for XSLT transformation differs from the parser interface in that it isn't just a layer of factory methods; it actually controls the whole process.

It is therefore quite a complex API, but (as is often the way) 90% of the time you need only a small subset.

As discussed earlier, you start by calling TransformerFactory.newInstance() to get yourself an implementation-specific TransformerFactory.

You can then use this to compile a stylesheet, which you do using the rather poorly named newTemplates() method. This takes as its argument a Source object, which defines where the stylesheet comes from. (A stylesheet is simply an XML document, but it can be represented in many different ways.) Source is a rather unusual interface, in that it's really just a marker for the collection of different kinds of sources that the particular XSLT engine happens to understand. Most accept the three kinds of Source defined in JAXP, namely a StreamSource (lexical XML in a file), a SAXSource (a stream of SAX events, perhaps but not necessarily from an XML parser), and a DOMSource (a DOM tree in memory). Some processors may accept other kinds of Source; for example, Saxon accepts source objects that wrap a JDOM, DOM4J, or XOM document node.

The next stage is to create a Transformer, which is the object that actually runs the transformation. You do this using the newTransformer() method on the Templates object. The Templates object (essentially, the compiled stylesheet in memory) can be used to run the same transformation many times, typically on different source documents or with different parameters, perhaps in different threads. Creating the compiled stylesheet is expensive, and it's a good idea to keep it around in memory if you're going to use it more than once. By contrast, the Transformer is cheap to create, and its main role is just to collect the parameters for an individual run of the stylesheet; so my usual advice is to use it once only. Certainly, you must never try to use it to run more than one transformation at the same time.
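The division of labor between Templates and Transformer suggests a simple coding pattern: compile once, keep the Templates object, and create a fresh Transformer for every run. The sketch below illustrates this; the class name CompiledStylesheet, the greeting parameter, and the demonstration stylesheet are all invented for the example.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.transform.Templates;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerConfigurationException;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;

class CompiledStylesheet {
    // A hypothetical stylesheet that writes "<greeting>, <name>" as plain text.
    static final String DEMO_XSLT =
          "<xsl:stylesheet version='1.0' xmlns:xsl='http://www.w3.org/1999/XSL/Transform'>"
        + "<xsl:output method='text'/>"
        + "<xsl:param name='greeting'/>"
        + "<xsl:template match='/'>"
        + "<xsl:value-of select='$greeting'/>, <xsl:value-of select='/name'/>"
        + "</xsl:template></xsl:stylesheet>";

    // Expensive to build, so it is created once and shared between runs.
    private final Templates templates;

    CompiledStylesheet(String xslt) throws TransformerConfigurationException {
        TransformerFactory factory = TransformerFactory.newInstance();
        templates = factory.newTemplates(new StreamSource(new StringReader(xslt)));
    }

    /** Runs one transformation; the Transformer is created here and never reused. */
    String run(String sourceXml, String greeting) throws TransformerException {
        Transformer transformer = templates.newTransformer();
        transformer.setParameter("greeting", greeting);
        StringWriter out = new StringWriter();
        transformer.transform(new StreamSource(new StringReader(sourceXml)), new StreamResult(out));
        return out.toString();
    }

    public static void main(String[] args) throws TransformerException {
        CompiledStylesheet stylesheet = new CompiledStylesheet(DEMO_XSLT);
        System.out.println(stylesheet.run("<name>Ann</name>", "Hello"));
        System.out.println(stylesheet.run("<name>Bob</name>", "Goodbye"));
    }
}
```

Because each call to run() has its own Transformer, several threads can share one CompiledStylesheet without their parameters interfering with each other.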

Here's a simple example that shows how to put this together. It transforms source.xml using stylesheet style.xsl to create an output file result.html, setting the stylesheet parameter debug to the value no.

TransformerFactory factory = TransformerFactory.newInstance();
Source styleSrc = new StreamSource(new File("style.xsl"));
Templates stylesheet = factory.newTemplates(styleSrc);
Transformer trans = stylesheet.newTransformer();
trans.setParameter("debug", "no");
Source docSrc = new StreamSource(new File("source.xml"));
Result output = new StreamResult(new File("result.html"));
trans.transform(docSrc, output);

Important: One little subtlety in this example is worth mentioning. The class StreamSource has various constructors, including one that takes an InputStream. Instead of giving it a new File as the input, you can give it a new FileInputStream. But there's a big difference: With a File as input, the XSLT processor knows the base URI of the source document or stylesheet. With a FileInputStream as input, all the XSLT processor sees is a stream of bytes, and it has no idea where they came from. This means that it can't resolve relative URIs, for example those appearing in xsl:include and xsl:import. You do have the option to supply a base URI separately using the setSystemId() method on the Source object, but it's easy to forget to do it.
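The base URI point is easy to demonstrate. In this sketch (the class name StreamSourceBaseUri and its helper method are invented for the example), a StreamSource built from a bare stream has no system ID until you supply one yourself:

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.InputStream;
import javax.xml.transform.stream.StreamSource;

class StreamSourceBaseUri {
    /**
     * Wraps a stream in a StreamSource and records where the bytes came from,
     * so that relative URIs (for example in xsl:include) can be resolved.
     */
    static StreamSource withBaseUri(InputStream in, File origin) {
        StreamSource source = new StreamSource(in);
        source.setSystemId(origin); // stores the file's location as a URI string
        return source;
    }

    public static void main(String[] args) {
        InputStream bytes = new ByteArrayInputStream(new byte[0]);
        System.out.println("Without base URI: " + new StreamSource(bytes).getSystemId());
        System.out.println("With base URI:    "
            + withBaseUri(bytes, new File("style.xsl")).getSystemId());
    }
}
```

StreamSource also has a two-argument constructor taking the stream and the system ID together, which makes the base URI harder to forget.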

You can call various methods to configure the Transformer, for example to supply values for the <xsl:param> parameters defined in the stylesheet, but its main method is the transform() method which actually runs the transformation. This takes two arguments: a Source object, which this time represents the source document to be transformed, and a Result object, which describes the output of the transformation. Like Source, this is an interface rather than a concrete class. An implementation can decide how many different kinds of Result it will support. Most implementations support the three concrete Result classes defined in JAXP itself, which mirror the Source classes: StreamResult for writing (serialized) lexical XML, SAXResult for sending a stream of events to a SAX ContentHandler, and DOMResult for writing a DOM tree.

That's the essence of the transformation API. It's worth being familiar with a few variations, if only to learn which methods you don't need for a particular application:

- As well as running an XSLT transformation you can run a so-called identity transformation, which simply copies the input to the output unchanged. To do this, you call the factory's newTransformer() method without supplying a source stylesheet. Although an identity transformation doesn't change the document at the XML level, it can be very useful because it can convert the document from any kind of Source to any kind of Result, for example from a SAXSource to a DOMResult or from a DOMSource to a StreamResult. If your XSLT processor supports additional kinds of source and result, this becomes even more powerful.

- Rather than running the transformation using the transform() method, you can run it as a filter in a SAX pipeline. In fact, you can do this in two separate ways. One way is to create a SAXTransformerFactory and call its newTransformerHandler() method. The resulting TransformerHandler is, in fact, a SAX ContentHandler that can be used to receive (and, of course, transform) the events passed by a SAX parser to its ContentHandler directly. The other way, also starting with a SAXTransformerFactory, is to call the newXMLFilter() method to get an XMLFilter, which as you saw earlier is a pretend XML parser. This pretend parser can be used to feed its output document in the form of SAX events to an application that is written in the form of a SAX ContentHandler to process SAX output.

  The SAXTransformerFactory also allows the stylesheet to be processed in a pipeline in a similar way, but this feature is rarely needed.

- The JAXP interface allows you to supply two important plug-in objects: an ErrorListener, which receives notification of errors and decides how to report them, and a URIResolver, which decides how URIs used in the stylesheet should be interpreted (a common use is to get a cached copy of documents from local filestore, identified by a catalog file). Be aware that the ErrorListener and URIResolver supplied to the TransformerFactory itself are used at compile time, whereas those supplied to the Transformer are used at runtime.
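The identity transformation mentioned in the first of these variations is probably the one you will use most often, because it is the standard JAXP way of serializing a DOM or building one. Here is a minimal sketch; the class name IdentityTransform and its two helper methods are invented for the example.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMResult;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;
import org.w3c.dom.Node;

class IdentityTransform {
    /** StreamSource -> DOMResult: builds a DOM tree from lexical XML. */
    static Node toDom(String xml) throws TransformerException {
        // newTransformer() with no stylesheet gives an identity transformer.
        Transformer identity = TransformerFactory.newInstance().newTransformer();
        DOMResult result = new DOMResult();
        identity.transform(new StreamSource(new StringReader(xml)), result);
        return result.getNode();
    }

    /** DOMSource -> StreamResult: serializes a DOM tree back to lexical XML. */
    static String toText(Node node) throws TransformerException {
        Transformer identity = TransformerFactory.newInstance().newTransformer();
        identity.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
        StringWriter out = new StringWriter();
        identity.transform(new DOMSource(node), new StreamResult(out));
        return out.toString();
    }

    public static void main(String[] args) throws TransformerException {
        System.out.println(toText(toDom("<a><b>text</b></a>")));
    }
}
```

In production code you would hold on to a single TransformerFactory rather than creating one per call, for the reasons given earlier in the discussion of the factory mechanism.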

The JAXP Validation API

New in JAXP 1.3, the validation API allows you to control validation of a document against a schema. This is a schema with a small s. The API is designed to be independent of the particular schema technology you use. The idea is that it works equally well with DTDs, XML Schema, or Relax NG, and perhaps even other technologies such as Schematron.

Validation is often done immediately after parsing, and, of course, it can be done inline with parsing, that is, as the next step in the processing pipeline. But it can make sense to do validation at other stages in the pipeline as well. For example it is useful to validate that the output of a transformation is correct XHTML. The validation API is, therefore, quite separate from the parsing API (and some validators, Saxon being an example, are distributed independently of any XML parser).

The API itself is modelled very closely on the transformation API. The factory class is called SchemaFactory, and its newInstance() method takes as a parameter a constant identifying the schema language you want to use. With the resulting implementation-specific SchemaFactory object, you can call a newSchema() method to parse a source schema to create a compiled schema, in much the same way as newTemplates() gives you a compiled stylesheet. The resulting Schema object has a method newValidator() that, as you would expect, returns a Validator object. This is the analog of the Transformer in the transformation API, and sure enough, it has a validate() method with two arguments: a Source to identify the document being validated, and a Result to indicate the destination of the post-validation output document. (You can omit this argument if you only want a success/failure result.)
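The chain of objects just described (SchemaFactory, Schema, Validator) can be sketched as follows for the case where both the input and the post-validation output are DOM trees. The class name DomValidation and the one-element schema are invented for the example, and the schema is held in a string only to keep the code self-contained.

```java
import java.io.IOException;
import java.io.StringReader;
import javax.xml.XMLConstants;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.transform.dom.DOMResult;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamSource;
import javax.xml.validation.Schema;
import javax.xml.validation.SchemaFactory;
import javax.xml.validation.Validator;
import org.w3c.dom.Document;
import org.w3c.dom.Node;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;

class DomValidation {
    // A hypothetical schema declaring a single element of type xs:positiveInteger.
    static final String SCHEMA =
          "<xs:schema xmlns:xs='http://www.w3.org/2001/XMLSchema'>"
        + "<xs:element name='quantity' type='xs:positiveInteger'/>"
        + "</xs:schema>";

    /** Validates the instance and returns the post-validation DOM; throws SAXException if invalid. */
    static Node validate(String instanceXml)
            throws SAXException, IOException, ParserConfigurationException {
        // Compile the schema, much as newTemplates() compiles a stylesheet.
        SchemaFactory schemaFactory = SchemaFactory.newInstance(XMLConstants.W3C_XML_SCHEMA_NS_URI);
        Schema schema = schemaFactory.newSchema(new StreamSource(new StringReader(SCHEMA)));

        // Schema validation needs a namespace-aware DOM.
        DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();
        dbf.setNamespaceAware(true);
        DocumentBuilder builder = dbf.newDocumentBuilder();
        Document input = builder.parse(new InputSource(new StringReader(instanceXml)));

        // The Validator plays the role that the Transformer plays in the transformation API.
        Validator validator = schema.newValidator();
        DOMResult output = new DOMResult(builder.newDocument());
        validator.validate(new DOMSource(input), output);
        return output.getNode();
    }

    public static void main(String[] args) throws Exception {
        validate("<quantity>3</quantity>");
        System.out.println("Validation successful");
    }
}
```

With no ErrorHandler registered on the Validator, the first validation error is thrown as a SAXException, so reaching the line after validate() means the document was valid.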

If you want to do validation in a SAX pipeline, the Schema object offers another method newValidatorHandler(). This returns a ValidatorHandler that, like a TransformerHandler, accepts SAX events from an XML parser and validates the document that they represent.

Here's an example that validates a document against an XML Schema. In this simple form the instance is read from a file and no Result is supplied, so the only outcome is success or failure. If you instead supply the document as a DOMSource and provide a DOMResult, the validator creates a new DOM representing the post-validation document. As well as having attribute values normalized and defaults expanded, that DOM differs from the original by having type annotations, which can be interrogated using methods available in DOM Level 3 such as Element.getSchemaTypeInfo().

SchemaFactory schemaFactory = SchemaFactory.newInstance("http://www.w3.org/2001/XMLSchema");
// Create a grammar object.
Schema schemaGrammar = schemaFactory.newSchema(new File("schema.xsd"));
Validator schemaValidator = schemaGrammar.newValidator();
// Validate the XML instance against the grammar; an invalid document causes an exception.
schemaValidator.validate(new StreamSource("instance.xml"));
System.err.println("Validation successful");

After the discussion of the main components of the JAXP API, move on now to XQJ, the Java API for XQuery, or rather, as the order of the initials implies, the XQuery API for Java. (If you think that's an arbitrary distinction, you need to study the byzantine politics involved in defining the name.)

XQJ: The XQuery API for Java

Unlike the other APIs discussed in this chapter, XQJ is not a completely stable specification at the time of writing. You can get early access copies of the specifications, but there is no reference implementation you can download and play with. Nevertheless, it's implemented in a couple of products already (for example, Saxon and the DataDirect XQuery engine), and it seems very likely that many more will follow. A great many XQuery implementations are trying to catch a share of what promises to be a big market. They won't all be successful, so using a standard API is a good way to hedge your bets when it comes to choosing a product.

Because the detail might well change, in this chapter, you get an overview of the main concepts.

XQJ does reuse a few of the JAXP classes, but it's obvious to the most casual observer that it comes from a different stable. In fact, it owes far more to the design of data-handling APIs like JDBC.

The first object you encounter is an XQDataSource. Each implementation has to provide its own XQDataSource object. Unlike JAXP, there's no factory that enables you to choose the right one; that's left to you (or to a framework such as J2EE) to sort out. You can think of an XQDataSource as an object that represents a database.

From this object you call getConnection() to get a connection to the database. The resulting XQConnection object has methods like getLoginTimeout(), so it's clear that the designers are thinking very much in client-server terms with the connection representing a communication channel between the client and the server. Nevertheless, it's sufficiently abstract that it's quite possible for an implementation to run the server in the same thread as the client (which is what Saxon does).

After you've got a connection, you can compile a query. This is done using the prepareExpression() method on the Connection object. This architecture means that a compiled query is closely tied not only to a specific database but to a specific database connection. The result of the method is an XQPreparedExpression object. Rather surprisingly, this fulfills the roles of both the Templates and the Transformer objects in the JAXP transformation API. You can set query parameters on the XQPreparedExpression object, which means that it's not safe to use it in multiple threads at the same time. (This feels like a design mistake that might well be corrected before the final spec is frozen.)

You can then evaluate the query by calling the executeQuery() method on the XQPreparedExpression. This returns an XQResultSequence, which is a representation of the query results that has characteristics of both a List and an Iterator. In fact, two varieties of XQResultSequence exist, one of which allows only forward scrolling through the results, whereas the other allows navigation in any direction.

The items in the query result are represented using an object model that's fairly close to the abstract XDM data model defined in the XQuery specification itself. The items in the sequence are XQItem objects. These can be either nodes or atomic values. If they are nodes, you can access them using the getNode() method which returns a DOM representation of the node; if they are atomic values you can access the value using a method such as getBoolean() or getInt() according to the actual type of the value. (The mapping between XML Schema data types and Java types seems to be slightly different from the one that JAXB uses.)

Some interesting methods allow the results of a query to be presented using other APIs discussed in this chapter. For example, getItemAsStream() returns a StAX stream representation of a node in the query results, whereas writeItemToSAX() allows it to be represented as a stream of SAX events.

For more details of the XQJ interface, you can download the early access specifications from http://www.jcp.org/en/jsr/detail?id=225. But this is not yet a mature specification, so you must carefully investigate how the interface is implemented in different products.
