How PostgreSQL Organizes Data

| Before you can really dig into the details of performance tuning, you need to understand some of the basic architecture of PostgreSQL. You already know that in PostgreSQL, data is stored in tables and tables are grouped into databases. At the highest level of organization, databases are grouped into clusters ”a cluster of databases is serviced by a postmaster . Let's see how this data hierarchy is stored on disk. You can see all databases in a cluster using the following query: perf=# SELECT datname, oid FROM pg_database; datname oid -----------+------- perf 16556 template1 1 template0 16555 From this list, you can see that I have three databases in this cluster. You can find the storage for these databases by looking in the $PGDATA directory: $ cd $PGDATA $ ls base pg_clog pg_ident.conf pg_xlog postmaster.opts global pg_hba.conf PG_VERSION postgresql.conf postmaster.pid The $PGDATA directory has a subdirectory named base . The base subdirectory is where your databases reside: $ cd ./base $ ls -l total 12 drwx------ 2 postgres pgadmin 4096 Jan 01 20:53 1 drwx------ 2 postgres pgadmin 4096 Jan 01 20:53 16555 drwx------ 3 postgres pgadmin 4096 Jan 01 22:38 16556 Notice that there are three subdirectories underneath $PGDATA/base . The name of each subdirectory corresponds to the oid of one entry in the pg_database table: the subdirectory named 1 contains the template1 database, the subdirectory named 16555 contains the template0 database, and the subdirectory named 16556 contains the perf database. Let's look a little deeper: $ cd ./1 $ ls 1247 16392 16408 16421 16429 16441 16449 16460 16472 1249 16394 16410 16422 16432 16442 16452 16462 16474 1255 16396 16412 16423 16435 16443 16453 16463 16475 1259 16398 16414 16424 16436 16444 16454 16465 16477 16384 16400 16416 16425 16437 16445 16455 16466 pg_internal.init 16386 16402 16418 16426 16438 16446 16456 16468 PG_VERSION 16388 16404 16419 16427 16439 16447 16457 16469 16390 16406 16420 16428 16440 16448 16458 16471 Again, you see a lot of files with numeric filenames. You can guess that these numbers also correspond to oid s, but which oid s? You know that you can store tables inside a database, so you can expect to find a match between these filenames and table oid s. Let's go back into psql and look for the match: $ psql -q -d template1 template1=# SELECT relname, oid FROM pg_class; template1=# SELECT oid, relname FROM pg_class ORDER BY oid; oid relname -------+--------------------------------- 1247 pg_type 1249 pg_attribute 1255 pg_proc 1259 pg_class 1260 pg_shadow 1261 pg_group 1262 pg_database 16384 pg_attrdef 16386 pg_relcheck ... ... The correspondence between filenames and table oid s is now obvious. Each table is stored in its own disk file and, in most cases, the name of the file is the oid of the table's entry in the pg_class table [1] .

There are a two more columns in pg_class that might help explain PostgreSQL's storage structure: perf=# SELECT relname, oid, relpages, reltuples FROM pg_class perf-# ORDER BY oid relname oid reltuples relpages --------------+------+-----------+---------- pg_type 1247 143 2 pg_attribute 1249 795 11 pg_proc 1255 1263 31 pg_class 1259 101 2 pg_shadow 1260 1 1 pg_group 1261 0 0 ... ... ... ... The reltuples column tells you how many tuples are in each table. The relpages column shows how many pages are required to store the current contents of the table. How do these numbers correspond to the actual on-disk structures? If you look at the table files for a few tables, you'll see that there is a relationship between the size of the file and the number of relpages columns: $ ls -l 1247 1249 -rw------- 1 postgres pgadmin 16384 Jan 01 20:53 1247 -rw------- 1 postgres pgadmin 90112 Jan 01 20:53 1249 The file named 1247 ( pg_type ) is 16384 bytes long and consumes two pages. The file named 1249 ( pg_attribute ) is 90122 bytes long and consumes 11 pages. A little math will show that 16384/2 = 8192 and 90122/11 = 8192: each page is 8192 (8K) bytes long. In PostgreSQL, all disk I/O is performed on a page-by-page basis [2] . When you select a single row from a table, PostgreSQL will read at least one page ”it may read many pages if the row is large. When you update a single row, PostgreSQL will write the new version of the row at the end of the table and will mark the original version of the row as invalid.

The size of a page is fixed at 8,192 bytes. You can increase or decrease the page size if you build your own copy of PostgreSQL from source, but all pages within a database will be the same size. The size of a row is not fixed ”different tables will yield different row sizes. In fact, the rows within a single table may differ in size if the table contains variable length columns. Given that the page size is fixed and the row size is variable, it's difficult to predict exactly how many rows will fit within any given page.

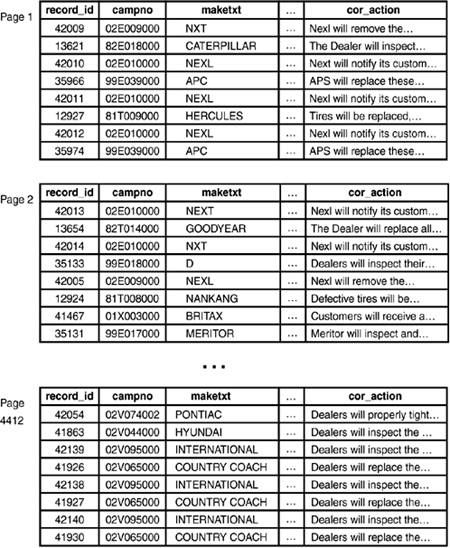

Notice that there is only one index and it covers the record_id column. The recalls table in the perf database contains 39,241 rows in 4,412 pages: perf=# SELECT relname, reltuples, relpages, oid FROM pg_class perf-# WHERE relname = 'recalls'; relname reltuples relpages oid ---------+-----------+----------+------- recalls 39241 4412 96409 Given that a page is 8,192 bytes long, you would expect that the file holding this table ( $PGDATA/base/16556/96409 ) would be 36,143,104 bytes long: $ ls -l $PGDATA/base/16556/96409 -rw------- 1 postgres pgadmin 36143104 Jan 01 23:34 96409 Figure 4.1 shows how the recalls table might look on disk. (Notice that the rows are not sorted ”they appear in the approximate order of insertion.) Figure 4.1. The recalls table as it might look on disk. If a row is too large to fit into a single 8K block [4] , PostgreSQL will write part of the data into a TOAST [5] table. A TOAST table acts as an extension to a normal table. It holds values too large to fit inline in the main table.

Indexes are also stored in page files. A page that holds row data is called a heap page. A page that holds index data is called an index page. You can locate the page file that stores an index by examining the index's entry in the pg_class table. And, just like tables, it is difficult to predict how many index entries will fit into each 8K page [6] . If an index entry is too large, it is moved to an index TOAST table.

In PostgreSQL, a page that contains row data is a heap block . A page that contains index data is an index block . You will never find heap blocks and index blocks in the same page file. Page CachingTwo of the fundamental performance rules in any database system are

Accordingly, PostgreSQL tries very hard to minimize disk I/O by keeping frequently used data in memory. When the first server process starts, it creates an in-memory data structure known as the buffer cache . The buffer cache is organized as a collection of 8K pages ”each page in the buffer cache corresponds to a page in some page file. The buffer cache is shared between all processes servicing a given database. When you select a row from a table, PostgreSQL will read the heap block that contains the row into the buffer cache. If there isn't enough free space in the cache, PostgreSQL will move some other block out of the cache. If a block being removed from the cache has been modified, it will be written back out to disk; otherwise . it will simply be discarded. Index blocks are buffered as well. In the next section, you'll see how to measure the performance of the cache and how to change its size. SummaryThis section gave you a good overview of how PostgreSQL stores data on disk. With some of the fundamentals out of the way, you can move on to more performance issues. |

EAN: 2147483647

Pages: 220