Types of Shaders

| As mentioned earlier, RenderMan offered six types of shaders to application developers. The current crop of real-time shading languages, however, offers only two: vertex shaders and fragment shaders. To understand the potential of each type, we must first review a general graphics pipeline. Vertices traverse five stages on their way to the graphics card:

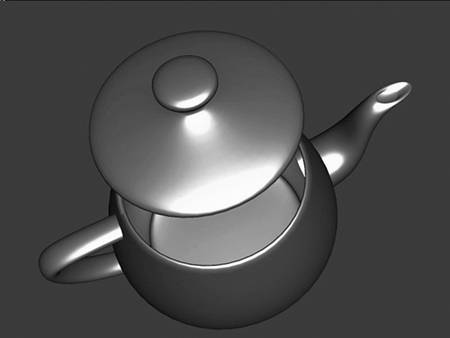

Vertex and fragment shaders allow you to program portions of that pipeline. Vertex programs, for example, are executed instead of the transformation step. As such, a vertex shader takes input data (vertices, texture coordinates, colors, lights, and so on) and performs arbitrary transforms on them, making sure the output is projected to device coordinates. Thus, vertex programs can perform any kind of per-vertex processing: geometry animation, texture coordinate processing, coloring, lighting, and so on. Notice how, at this stage, we cannot talk about fragments, so all our work will be done on per-vertex data.

Fragment shaders, on the other hand, replace the fragment texturing and coloring phase. As a result, we receive texture coordinates and colors, and have the tools to generate procedural textures and similar per-fragment effects.

If you think about it, real-time shading languages are very different from an interface specification like RenderMan. Shader types are a clear example. Where RenderMan offered a high-level, very abstract shading language, newer languages offer very strict control over what can be done at each step in the graphics pipeline. Some of these limitations are imposed by the relatively restricted execution environment: we need scripts that run in real time, and that means our language will sometimes be limited.

A Collection of Shaders

We now know the basics about what shaders are and how to code them. The rest of the chapter is a collection of popular vertex and pixel shaders, so you can learn firsthand how some classic effects can be created. All the shaders in this chapter are coded using NVIDIA's Cg shading language. I have selected this tool for several reasons. It can compile on Macs, Linux, and Windows boxes. In addition, it is supported by DirectX and OpenGL, and if the latest information is accurate, it can be used on an Xbox as well.
Also, it is source compatible with Microsoft's HLSL and DirectX 9, and thus is probably the most widely supported standard available for real-time shading.

NOTE: Check out NVIDIA's web site at www.nvidia.com/view.asp?IO=cg_faq#platforms for more information.

Geometry Effects

Some of the easiest shaders to write implement geometry effects, such as simple animation, transforms, and so on. In this case, I have designed a Cg shader to create the illusion of wave movement in the sea. Because it is the first example, I will describe the creation process in detail.

We must first decide on the chain of responsibility in the program, or in simpler terms, what does what. Because shader languages cannot create vertices at the present time, all geometry and topology must be passed to the shader. The shader then only transforms vertex positions to achieve the wave effect. We will pass the sea as a grid, with each "row" in the grid consisting of a triangle strip. The vertex program will have the following source code:

    void waves (float3 position : POSITION,
                float4 color : COLOR,
                float2 texCoord : TEXCOORD0,
                out float4 oPosition : POSITION,
                out float2 oTexCoord : TEXCOORD0,
                out float4 oColor : COLOR,
                uniform float timevalue,
                uniform float4x4 modelviewProj)
    {
        // Wave front along the X axis, advancing with time
        float phase = position.x + timevalue;
        float amplitude = sin(phase);

        // Use the wave amplitude as the height (Y) of the vertex
        float4 wavePosition = float4(position.x, amplitude, position.z, 1);

        // Project the vertex and pass the other attributes through
        oPosition = mul(modelviewProj, wavePosition);
        oTexCoord = texCoord;
        oColor = color;
    }

This very simple shader starts by computing a wave front along the X axis. I have kept the simulation to a bare minimum so you can familiarize yourself with Cg's syntax. Notice how our shader receives traditional pipeline parameters such as the position, color, and texture coordinates. It also receives the modelviewProj matrix so we can implement the vertex projection portion. It can also receive user-defined parameters. In this case, it receives the time value that we will need to make the wave advance as time passes.
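To make the displacement concrete, here is a minimal CPU-side sketch in Python (not Cg) of the arithmetic the waves program applies to each vertex before projection. The names `wave_vertex` and `make_grid_row` are hypothetical, introduced only for this illustration, and the multiplication by modelviewProj is deliberately left out.

```python
import math

def wave_vertex(position, timevalue):
    """Return the displaced (x, y, z, w) of one vertex, mirroring waves()."""
    x, _, z = position             # the incoming height is ignored
    phase = x + timevalue          # wave front travels along the X axis
    amplitude = math.sin(phase)    # height in the range [-1, 1]
    return (x, amplitude, z, 1.0)  # homogeneous position, before projection

def make_grid_row(z, columns, spacing=1.0):
    """Hypothetical helper: one row of flat sea vertices at depth z."""
    return [(i * spacing, 0.0, z) for i in range(columns)]

# Displace one row of the sea grid at time 0.
row = make_grid_row(z=0.0, columns=4)
displaced = [wave_vertex(v, timevalue=0.0) for v in row]
```

Calling `wave_vertex` on the same grid with a growing `timevalue` each frame is what makes the crests appear to travel along X.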
On the opposite end, the shader returns those parameters marked with the reserved word out. In this case, we return a position, texture coordinates, and a color. A much better water simulation can be created using the Cg language, but as our first shader, this one should do. Let's move on to more involved examples.

Lighting

Lighting can easily be computed in a shader. All we need to know is the lighting model we want to use, and then build the specific equations into the source code. In fact, lighting can be computed at both the vertex and the fragment level. A vertex shader can compute per-vertex illumination, and the API of choice will interpolate the pixels in between these sampling points. A fragment shader will compute lighting per pixel, offering much better results. We can also choose to implement more complex lighting effects, such as Bidirectional Reflectance Distribution Functions (BRDFs) (see Figure 21.4).

Figure 21.4. A shiny gold teapot implemented using a BRDF on a pixel shader.
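The gap between per-vertex and per-pixel lighting can be illustrated with a small CPU-side sketch in Python (not Cg). It assumes a single flat edge with an upward normal and a point light hovering over its midpoint, and it compares interpolating two per-vertex diffuse values against evaluating the diffuse term at the midpoint itself. The helper names (`diffuse`, `lerp`) and the scene values are assumptions chosen for illustration.

```python
import math

def normalize(v):
    """Return v scaled to unit length (assumes v is not the zero vector)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def diffuse(point, normal, light_position):
    """Diffuse term max(N . L, 0) for a point light."""
    to_light = normalize(tuple(l - p for l, p in zip(light_position, point)))
    return max(dot(normal, to_light), 0.0)

def lerp(a, b, t):
    """Linear interpolation, standing in for what happens between vertices."""
    return a + (b - a) * t

normal = (0.0, 1.0, 0.0)
light = (0.0, 1.0, 0.0)                      # one unit above the midpoint
left, right = (-4.0, 0.0, 0.0), (4.0, 0.0, 0.0)
midpoint = (0.0, 0.0, 0.0)

# Per-vertex: light the two endpoints, then interpolate the results.
per_vertex = lerp(diffuse(left, normal, light),
                  diffuse(right, normal, light), 0.5)

# Per-fragment: evaluate the lighting term at the midpoint itself.
per_fragment = diffuse(midpoint, normal, light)
```

With these values the interpolated result stays dim along the whole edge, while the per-fragment evaluation finds the bright spot directly under the light. That lost highlight is the kind of detail coarse geometry cannot recover with per-vertex lighting alone.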

Our first example will be a simple vertex shader that computes lighting per vertex using the classic ambient, diffuse, and specular equation. Because this model was already explained in Chapter 17, "Shading," we will focus on the shader:

    void vertex_light (float4 position : POSITION,
                       float3 normal : NORMAL,
                       out float4 oPosition : POSITION,
                       out float4 oColor : COLOR,
                       uniform float4x4 modelViewProj,
                       uniform float3 globalAmbient,
                       uniform float3 lightColor,
                       uniform float3 lightPosition,
                       uniform float3 eyePosition,
                       uniform float3 Ke,   // declared but not used below
                       uniform float3 Ka,
                       uniform float3 Kd,
                       uniform float3 Ks,
                       uniform float shininess)
    {
        // We will need these. lightPosition and eyePosition must be
        // expressed in the same space as position.
        float3 P = position.xyz;
        float3 N = normal;

        // Compute the ambient term
        float3 ambient = Ka * globalAmbient;

        // Compute the diffuse term
        float3 L = normalize(lightPosition - P);
        float diffuseLight = max(dot(N, L), 0);
        float3 diffuse = Kd * lightColor * diffuseLight;

        // Compute the specular term
        float3 V = normalize(eyePosition - P);
        float3 H = normalize(L + V);
        float specularLight = pow(max(dot(N, H), 0), shininess);
        if (diffuseLight <= 0) specularLight = 0;
        float3 specular = Ks * lightColor * specularLight;

        // Load outputs
        oPosition = mul(modelViewProj, position);
        oColor.xyz = ambient + diffuse + specular;
        oColor.w = 1;
    }

This code is pretty easy to follow. We store the position and normal that are used throughout, and then we simply implement the equations in order: ambient, diffuse, and specular.

Now, this shader won't look very realistic on coarse geometry due to the interpolation done between per-vertex colors. For smoother results, a fragment shader must be used. Remember that fragment shaders are evaluated later in the pipeline, once for each fragment (roughly, each candidate pixel) to be rendered. Here is the source code, so we can compare both versions later. The illumination model is the same.

    void fragment_light (float4 position : TEXCOORD0,
                         float3 normal : TEXCOORD1,
                         out float4 oColor : COLOR,
                         uniform float3 globalAmbient,
                         uniform float3 lightColor,
                         uniform float3 lightPosition,
                         uniform float3 eyePosition,
                         uniform float3 Ke,   // declared but not used below
                         uniform float3 Ka,
                         uniform float3 Kd,
                         uniform float3 Ks,
                         uniform float shininess)
    {
        // Position and normal arrive interpolated through texture
        // coordinate sets. Interpolated normals are generally not unit
        // length, so renormalize.
        float3 P = position.xyz;
        float3 N = normalize(normal);

        // Compute the ambient term
        float3 ambient = Ka * globalAmbient;

        // Compute the diffuse term
        float3 L = normalize(lightPosition - P);
        float diffuseLight = max(dot(L, N), 0);
        float3 diffuse = Kd * lightColor * diffuseLight;

        // Compute the specular term
        float3 V = normalize(eyePosition - P);
        float3 H = normalize(L + V);
        float specularLight = pow(max(dot(H, N), 0), shininess);
        if (diffuseLight <= 0) specularLight = 0;
        float3 specular = Ks * lightColor * specularLight;

        oColor.xyz = ambient + diffuse + specular;
        oColor.w = 1;
    }

The differences are minimal. Here we do not project vertices because we are not deciding where vertices go but instead how they are shaded. The lighting model is virtually identical, so both shaders should create similar pictures, with the latter having better quality than the former. On the other hand, remember that the vertex shader will be executed once per vertex, whereas the fragment version will be run many more times. So the two versions will not necessarily perform the same, because of the different number of executions.

In summary, lighting effects are really easy to put into shader code. The complex part is deriving the lighting equations, not coding them. Shaders are just an implementation of the equations. Because these equations tend to have lots of parameters, we need a mechanism to pass them to the shader. That's what the uniform keyword is for: it declares a parameter that comes from an external source (usually the C++ calling program). Then, after passing extra parameters to the shaders, all you need to do is compute the lighting expression in the shader.
You can do so at the vertex shader level or in a fragment program. The latter offers better results at a higher cost, but most of the shader's source is the same. |
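Since both shaders evaluate the same expression, it can help to check the arithmetic on the CPU. The following is a minimal Python sketch (not Cg) of the ambient, diffuse, and specular sum used above. The function name `light_point`, the vector helpers, and the sample material values are assumptions made for illustration; the parameter names simply mirror the shaders' uniform parameters, and the unused Ke is omitted.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def mul(a, b):
    """Component-wise product, like float3 * float3 in Cg."""
    return tuple(x * y for x, y in zip(a, b))

def scale(v, s):
    return tuple(x * s for x in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Return v scaled to unit length (assumes v is not the zero vector)."""
    return scale(v, 1.0 / math.sqrt(dot(v, v)))

def light_point(P, N, global_ambient, light_color, light_position,
                eye_position, Ka, Kd, Ks, shininess):
    """Ambient + diffuse + specular color (r, g, b, 1) at point P."""
    ambient = mul(Ka, global_ambient)

    L = normalize(sub(light_position, P))
    diffuse_light = max(dot(N, L), 0.0)
    diffuse = scale(mul(Kd, light_color), diffuse_light)

    V = normalize(sub(eye_position, P))
    H = normalize(add(L, V))                 # half vector between L and V
    specular_light = pow(max(dot(N, H), 0.0), shininess)
    if diffuse_light <= 0.0:                 # no highlight on unlit points
        specular_light = 0.0
    specular = scale(mul(Ks, light_color), specular_light)

    return add(add(ambient, diffuse), specular) + (1.0,)

# Sample material and light values (illustrative only).
white = (1.0, 1.0, 1.0)
Ka, Kd, Ks = (0.1, 0.1, 0.1), (0.5, 0.5, 0.5), (0.25, 0.25, 0.25)
origin, up = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)

# Light and eye straight above the surface: every term contributes fully.
front = light_point(origin, up, white, white, (0.0, 2.0, 0.0),
                    (0.0, 5.0, 0.0), Ka, Kd, Ks, 32.0)

# Light below the surface: only the ambient term survives.
back = light_point(origin, up, white, white, (0.0, -2.0, 0.0),
                   (3.0, 4.0, 0.0), Ka, Kd, Ks, 32.0)
```

Calling `light_point` once per vertex and interpolating the colors corresponds to the vertex shader approach; calling it once per shaded point corresponds to the fragment shader approach.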
