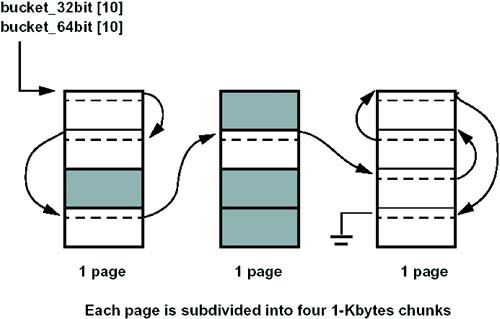

| In Chapter 3, "The Kernel: Basic Organization," we talked about how the kernel manages virtual and physical memory. Memory is divided into pages of 4 KB each. The kernel's page allocator handles allocating pages of memory to processes that need them. However, the kernel itself needs dynamic memory too, and it uses a different process. The memory allocations for the kernel are frequently for small areas of memory the size of a proc structure or the size of a network buffer, for example. It would be impractical to just call the page allocator and request a page each time we need a small piece of memory. That's where the kernel memory allocator comes in. It handles requests for memory from the kernel, getting pages from the page allocator as needed. It then divides these pages into smaller pieces so that it can be used efficiently. Beginning with HP-UX version 11.11, the method of tracking and allocating kernel memory has changed. Prior to 11.11 HP-UX, a scheme known as the McKusick & Karel memory allocator, also called the "bucket allocator," was used. In release 11.11, this was replaced by the new arena allocator. Although our focus in this book is on HP-UX version 11.11, the bucket allocator is pervasive enough that it's worth a brief look. Without going into too much detail, we look at the bucket allocator before examining the newer arena allocator. The Bucket Allocator The bucket allocator divides memory requests into buckets by size, where each size is a power of two. Thus, we have a 32-byte bucket, a 64-byte bucket, a 128-byte bucket, and so on. When a kernel subsystem needs to allocate memory, the request is rounded up to the next power of two. So, for example, if a request is for 100 bytes, it will come from the 128-byte bucket. The arrays bucket_32bit[] (for 32-bit kernels) and bucket_64bit[] (for 64-bit kernels) contain the head of a list of free areas of memory. The index into the array is the power of two of the size of the buckets: 25 is 32, so the head of the 32-byte bucket list is at bucket_64bit[5], and so on. Memory is allocated using the MALLOC macro. This macro checks the freelist pointer to see if an area of memory of the requested size is on the list. If so, then the first address off the list is returned. If not, then a new page is requested from the page allocator by calling kmalloc(). This page then is broken into pieces, the pieces are put on the free list, and the first of them is returned to the requestor. When the memory is no longer needed, it is returned to the bucket lists by use of the FREE macro. If a lot of memory allocations are made and then freed, this can leave many pages of memory on the bucket free lists. The vhand process periodically looks for blocks of memory on the free lists that can be coalesced back into pages and returned to the page pool. When the memory is on the free list, the first word in the memory area is used as the link to the next free area. Figure 13-3 shows an example of three pages, each of which has been broken into four 1-KB areas. In the figure, four are in use and eight are on the free list. The third page in this example would be a candidate for being returned to the page pool. Figure 13-3. Buckets on the Free List

One of the shortcomings of the bucket allocator is the risk of corruption if a freed pointer is reused. When a subsystem frees memory, the first word in that area of memory is now used as a link in the linked list. If the pointer to that memory gets reused after it has been freed, the result is that the entire free list gets corrupted. This corruption causes a panic the next time the system tries to get a piece of memory from that bucket. These types of corruption can be very hard to track down, because by the time the panic occurs, the code that caused the corruption may already be done and gone. The Arena Allocator The arena allocator has several advantages over the bucket allocator. With this allocation method, each subsystem creates its own "arena" of memory. Memory is then requested from and returned to that arena. This allows the allocator to tailor its performance to a particular use and also helps to contain any possible memory corruption. A problem in one arena can't affect another subsystem. The arena allocator refers to each piece of memory allocated or released as a memory object. One feature of the arena allocator is that it distinguishes between fixed-size memory objects and variable-sized memory objects. If an arena is created as a fixed-size arena, then all allocations made from that arena are of the same size. This is a very common operation in the kernel for example, one arena, the CALLOUT arena, is used for allocating callout structures. Fixed-size arenas can manage memory more efficiently than can variable-sized arenas because each page of memory can be divided up optimally for that particular size of data object. Each object in the arena has a header associated with it. When the object is on a free list, the list link is kept in this header, not in the first word of the object itself as with the bucket allocator. By moving the link outside of the memory object, we reduce the chance of someone corrupting the free list by reusing a freed pointer. The arena allocator divides objects into three size categories. A small object is one that is less than 4 KB. A large object is from 4 KB to 32 KB. An xlarge object is an object larger than 32 KB. Arena Allocator Data Structures The top-level data structure for arenas is the struct kmem_arena_globals. There is exactly one of these, called karena, allocated at system initialization time. This holds system global arena information such as the pointer to the linked list of arenas and the number of arenas. Each arena is represented by a struct kmem_arena. The kmem_arena_create() function creates a new kmem_arena, initializes it, and links it onto the list of arenas in the system. kmem_arena_create() takes four arguments: the size of the objects in the arena, then name of the arena, a pointer to a kmem_arena_attr structure, and a flags field. For an arena that stores variable-sized objects, the size will be zero. The attribute structure, kmem_arena_attr, allows for a great deal of tuning of the arena's attributes. Table 13-1 lists the fields in the kmem_arena_attr structure. Table 13-1. The kmem_arena_attr StructureType | Name | Description |

|---|

size_t | kat_struct_size | The size of the attribute structure itself. | int (*) | kat_ctor | Pointer to a constructor function, called when new objects are created. | void (*) | kat_dtor | Pointer to a destructor function, called when objects are destroyed. | long | kat_maxcnt | Maximum number of objects. | long | kat_minfcnt | Minimum number of objects to be kept on the free lists. | long | kat_maxpgcnt | Maximum number of pages in the arena for variable-sized objects. | long | kat_refillcnt | Minimum number of objects to be created at each refill. | kat_flags_t | kat_flags | Attribute flags. | size_t | kat_align | Alignment requirements for objects. |

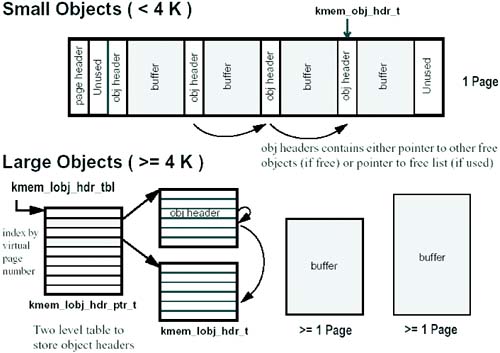

Objects and Object Headers As mentioned, each object has an object header associated with it. When an object is on the free list, this header has a pointer to the next object on the list. When objects are in use, the header points back to the free list header in the arena it came from. Headers are stored differently depending on the size of the object. For small objects, the header is stored immediately before the object in memory. The header in this case needs only a single pointer the one that points to the free list if free or to the free list head if in use. We don't need a pointer to the object itself because we know it immediately follows the header. The small object header looks like this: typedef union kmem_obj_hdr { union kmem_obj_hdr *ko_fnext; /* Next element in free list */ struct kmem_flist_hdr *ko_fhdr; /* Ptr to Free list header */ } kmem_obj_hdr_t;

For large objects, we have a global two-level structure for pointers to the object headers. The top level, kmem_lobj_hdr_tbl, is allocated at system initialization time. It is an array of pointers to second-level tables, which in turn are arrays of pointers to objects. To find a particular object header, the system uses the upper bits of the virtual page number to find the right entry in the first-level table, then follows that pointer and uses the lower bits of the virtual page number to find the second-level table entry. This is the object header. Because the object headers for large objects are not next to the object itself, we need another field in the header. In addition to the pointer to the free list, we need a pointer to the object itself. The large object header builds on the small object header but adds this extra pointer: typedef struct kmem_lobj_hdr { union kmem_obj_hdr kl_ohdr; /* Object header for large objects */ union { void *kl_vaddr; /* Virtual address of object. */ size_t kl_objsize; /* Object size */ } kl_un; } kmem_lobj_hdr_t;

Note the additional object size field. This is used for xlarge objects. Xlarge objects are not cached that is, when freed they don't go back on a free list, they just get released. For small and large objects, the size of the object is in the free list header. But for xlarge objects we have no free list, and therefore no free-list header, so we have to store the size here. Figure 13-4 shows the layout of objects and object headers in memory. Figure 13-4. Memory Arena Objects and Object Headers

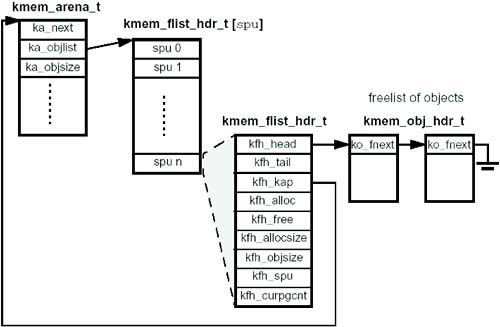

This combination of objects and object headers gives us better performance and better control over memory than does the bucket allocator. Object Free Lists For fixed-size objects' the arena maintains one free list per SPU. The ka_objlist entry in the kmem_arena structure is a pointer to an array of kmem_flist_hdr structures, one for each SPU. This header has a pointer to the first free object, kfh_head. In addition, it has fields used for managing the list, such as the object size, current page count, and a pointer back to the arena it belongs in. Figure 13-5 illustrates the free list for fixed-size objects. Figure 13-5. Free List for Fixed-Size Objects

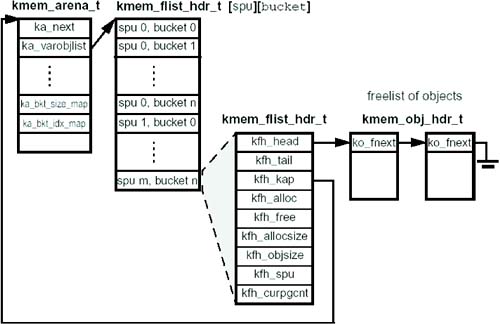

The free lists for variable-sized objects are different. Free memory objects are handled similarly to the way they were with the bucket allocator, with a set of buckets pointing to lists of different sized objects. In this way, when a new object is needed, the allocator can go to the free list and get an object that is already the correct size. One difference between this scheme and the older bucket allocator is that the bucket sizes are different. Because of the extra overhead of the object headers, it doesn't make sense to make the buckets exact powers of two. For example, if you have a 1024-byte bucket, then you could only get three of these on a page. If instead we use a 960-byte bucket, then we can get four objects of up to 960 bytes on a page efficiently. The next smaller bucket would be a 768-byte bucket, which gives you five objects on a page, and so on. The size of the various buckets is kept in an array, kmem_bkt_default_size_map. When the arena is created, the creator can opt to use the standard power-of-two buckets if that layout is required for compatibility with older code, but it is a less efficient use of memory. For these bucket lists for variable-sized objects, we use the ka_varobjlist pointer in the arena structure. This points to a two-dimensional array, indexed by CPU number and bucket number. Then, entries in this array are the kmem_flist_hdr structures, just like for the fixed objects. Figure 13-6 illustrates the free lists for variable-sized objects. Figure 13-6. Free List for Variable-Sized Objects

The arena allocator is an improvement over the older bucket allocator in that it gives us more control over the size and alignment of objects, makes more efficient use of memory, and helps isolate one area of the kernel from another in terms of memory usage. Managing Kernel Virtual Addresses: The Sysmap In addition to allocating and freeing memory, the kernel has to keep track of its own virtual address space. Recall that for user processes, this is handled by the VAS, pregion, and region structures associated with each thread. The kernel doesn't have these structures for its own address space. All kernel addresses are in the same space space 0, or KERNELSPACE and it has to manage the available virtual addresses within that space. When physical pages are assigned for kernel use, the kernel has to map new virtual addresses to those physical pages, and when the pages are freed, the virtual addresses must be freed too. The kernel keeps track of available addresses using a resource map. A resource map is a collection of structures, each of which describes a range of address space. Resource maps are allocated at system initialization time and have a fixed size. The first entry in the map is a header that points to the end of the map and has a pointer to the name of the map: struct map { struct mapent *m_limit; /* address of last slot in map */ char *m_name; /* name of resource - use in overflow warning message */ };

All the remaining entries contain a size and an address: struct mapent { unsigned long m_size; /* size of this segment of the map */ unsigned long m_addr; /* resource-space addr of start of segment */ };

The m_addr field is the address of the beginning of a range of free addresses, and the m_size field is the size of the range. The kernel stores its dynamic and static data in the first quadrant of space zero. On a 32-bit kernel this is a 1-GB range, on a 64-bit kernel it's 4 TB. A resource map called sysmap_32bit manages the first 1 GB of this address space. On the 64-bit kernel there is also a resource map called sysmap_64bit that manages the remaining part of the first quadrant, beyond the first gigabyte. The resource map is arranged in sorted order by address. Entries in the map are moved to make room to insert a new entry and shifted to fill in a hole created by a deleted entry. There are no blank entries the map starts with the second entry (the first is the header), and the end of the map is indicated by an m_addr value of zero. Because zero is used as an end-of-list marker, all the actual page numbers are incremented by one so that we can manage page zero as well. So, page zero is indicated by an m_addr of one, and page 0x33a7 would be indicated by an m_addr of 0x33a8. When a new range of virtual addresses is needed, the kernel uses a "first fit" algorithm to find a range. It traverses the sysmap starting at the beginning and looks for the first range that is big enough for the range it needs. When it finds one, it uses that address range and adjusts the size field to indicate that the available range is now smaller. So, for example, if we need four pages of addresses and the first entry we find in the map that is big enough is [9, 0x1b37,] then we've found a range at 0x1b37 that is nine pages long. The kernel uses pages 0x1b37 to 0x1b3a for the four pages and changes the entry in the sysmap to read [5, 0x1b3b]. If we ever run into a case where a range of virtual addresses is needed and there is no entry in the sysmap big enough, we will panic with the message kalloc: out of virtual space. This can be caused if the sysmap has become fragmented, containing many small segments and no large ones. Of course, it can also be caused if we really are out of virtual addresses, but that is rare unless a buggy subsystem has run away and grabbed up all the address ranges. As memory is freed and the address ranges associated with it are no longer needed, those address ranges get put back into the table. If possible, the kernel coalesces adjacent ranges into one larger range; otherwise a new entry is inserted into the table. The size of the sysmap is fixed at system boot time. The nsysmap and nsysmap64 tunables set the initial size of the sysmaps. In addition, the kernel adjusts those numbers upward at boot time based on the amount of memory in the system. If the sysmap ever becomes full, it will be unable to accept new entries. Again, this can happen if the address space becomes fragmented such that the table is used up by a lot of small, disjoint addresses. When the kernel needs to free a range of addresses and can't record it in the sysmap, it prints a message to syslog similar to this: sysmap: rmap ovflo, lost [3f600, 3f6a3]. This tells us that this address range has been lost and can't be used again. |