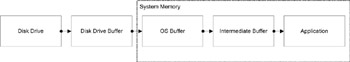

Flow of Data between an Application and the Disk

Before we start talking about streams, let’s look at what happens when a file is accessed. This information is crucial to understanding how to achieve optimal IO performance, and the remainder of this chapter refers back to the process explained here. Figure 5.1 shows the path that data must take to get from the disk drive to an application.

Figure 5.1: Flow of data when read request occurs.

For a read, the journey starts at the disk drive and ends in the application; when writing to a file, the data flows in the reverse direction. Some applications read a single byte at a time, whereas others read many bytes at once.

Transferring data from the disk to the application is very expensive compared to transferring data within memory. Caching, or buffering, can significantly reduce the overall cost of reading data from the disk; the general goal is to reduce the number of times an expensive operation must be performed. As Figure 5.1 shows, several caches sit between the disk drive and the application. The drive buffer, the OS buffer, and the intermediate buffer each play a part in reducing the cost of reading from the disk.

The drive buffer is the first line of defense against the cost of reading from or writing to the drive, and it can yield a noticeable performance gain. The drive buffer, shown in Figure 5.1, is built into every hard drive and is a form of RAM. In general, the larger the drive buffer, the better the drive performs, and the more the drive costs. Getting data from the disk surface into the drive buffer is by far the most expensive step in the pipeline, because it involves physical movement: the heads must move to the right track, and the platter must rotate the data under them, which depends on the RPM of the drive.

The drive buffer is actually composed of a separate read cache and write cache. When reading from the drive, the read cache allows a chunk of data to be read at once: if a request to read a single byte is made to the drive, the drive also reads the data near the requested byte and makes it available to the operating system (OS). That way, if a later request asks for data that has already been read into the cache, the disk surface does not have to be accessed again. This is an investment with some risk. There is no guarantee that reading more data than requested will pay off, because a later request may target an entirely different location on the disk. On average, however, it is an investment worth making.
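The read-ahead idea can be modeled in a few lines. The sketch below is a toy model, not real drive firmware: the class name `ReadAheadDisk`, the byte-array "surface," and the block size are hypothetical choices for illustration. A single-byte request on a miss pulls in the whole aligned block around it, and every such fetch is counted as one expensive physical access.

```java
import java.util.Arrays;

/**
 * Toy model (hypothetical, not real drive firmware) of a drive read cache
 * with read-ahead. The "surface" is a byte array, and every fetch from it
 * counts as one expensive physical access.
 */
class ReadAheadDisk {
    private final byte[] surface;   // stands in for the platter
    private final int blockSize;    // bytes fetched per physical access
    private byte[] cache = new byte[0];
    private int cacheStart = -1;    // surface offset of cache[0]; -1 means empty
    private int surfaceAccesses = 0;

    ReadAheadDisk(byte[] surface, int blockSize) {
        this.surface = surface;
        this.blockSize = blockSize;
    }

    /** Returns the byte at offset, reading its whole block on a cache miss. */
    byte read(int offset) {
        if (offset < 0 || offset >= surface.length) {
            throw new IndexOutOfBoundsException("offset " + offset);
        }
        boolean hit = cacheStart >= 0 && offset >= cacheStart && offset < cacheStart + cache.length;
        if (!hit) {
            int start = (offset / blockSize) * blockSize;          // align to a block boundary
            int end = Math.min(start + blockSize, surface.length); // last block may be short
            cache = Arrays.copyOfRange(surface, start, end);       // one physical access
            cacheStart = start;
            surfaceAccesses++;
        }
        return cache[offset - cacheStart];
    }

    int surfaceAccesses() {
        return surfaceAccesses;
    }

    public static void main(String[] args) {
        byte[] data = new byte[1024];
        ReadAheadDisk disk = new ReadAheadDisk(data, 256);
        for (int i = 0; i < data.length; i++) {
            disk.read(i);                              // sequential reads: read-ahead pays off
        }
        System.out.println(disk.surfaceAccesses());    // 4
    }
}
```

Reading 1,024 bytes sequentially costs only four physical accesses here. A scattered pattern such as `read(0)`, `read(1000)`, `read(1)` costs three accesses with this one-block cache, which is exactly the risk the investment carries.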

When writing to the drive, data that must be physically written to the disk is written to the drive buffer instead, and the drive writes it to the disk surface when it gets the chance. Without a write buffer, a write request would have to block until every byte is physically written to the disk. With a write buffer, if the data fits in the drive’s cache, the request can return immediately and leave the drive to write the cached data whenever it gets the chance.
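From Java, the same effect is visible one level up: `FileOutputStream.write` can return as soon as the bytes are sitting in a buffer. When a game must be sure that a save file has reached the device, `FileDescriptor.sync()` asks the OS to flush its buffers. The sketch below assumes Java 7 or later for `Path` and `Files`; the class name `DurableWrite` is hypothetical. Whether `sync()` also forces the drive’s own write cache out to the surface depends on the OS and the hardware.

```java
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

class DurableWrite {
    /**
     * Writes data, then asks the OS to push it to the storage device.
     * write() alone may return as soon as the bytes sit in a buffer.
     */
    static void writeAndSync(Path path, byte[] data) throws IOException {
        try (FileOutputStream out = new FileOutputStream(path.toFile())) {
            out.write(data);      // may return once the data is buffered
            out.getFD().sync();   // blocks until the OS reports the data written to the device
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("save", ".dat");
        writeAndSync(tmp, "checkpoint".getBytes(StandardCharsets.UTF_8));
        System.out.println(Files.size(tmp)); // 10
        Files.delete(tmp);
    }
}
```

Syncing after every small write throws away the benefit of write buffering, so a reasonable design is to sync only at points where losing data would matter, such as after a checkpoint.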

Besides the caching performed at the drive level, the OS does its own buffering, because it can cache more data than the drive and can manage that data more intelligently. The OS can also perform optimizations that are not possible at the drive level, because, unlike the drive, it is aware of the state of the system. For example, the OS knows which applications are reading from a file; if several applications are reading the same file, the OS can eliminate redundant requests to the device altogether.

In addition to the drive-level and OS-level caching of file data, most applications do their own buffering, because accessing the OS buffer, which requires a call into the OS, can be more expensive than accessing data local to the application. This buffer is labeled the intermediate buffer in Figure 5.1. Note that it is optional and is not used by every application. Whether caching data at the application level is worthwhile depends on how much data the application needs and how large each read or write is. Some applications read very small chunks of data, whereas others read very large chunks or even copy an entire file. If the application reads or writes a few bytes at a time, an intermediate buffer can pay off tremendously. However, if the application reads and writes data in chunks that are already optimal in size, an intermediate buffer is pure overhead.
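In `java.io`, `BufferedInputStream` plays the role of the intermediate buffer. The sketch below counts how many read calls actually reach the underlying stream, treating each as a stand-in for a call into the OS; `CountingInputStream` and `singleByteReads` are hypothetical helpers, and a `ByteArrayInputStream` replaces a real file so the example is self-contained.

```java
import java.io.BufferedInputStream;
import java.io.ByteArrayInputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;

class IntermediateBufferDemo {
    /** Counts calls that reach the wrapped stream (a stand-in for calls into the OS). */
    static final class CountingInputStream extends FilterInputStream {
        int calls = 0;

        CountingInputStream(InputStream in) {
            super(in);
        }

        @Override
        public int read() throws IOException {
            calls++;
            return super.read();
        }

        @Override
        public int read(byte[] b, int off, int len) throws IOException {
            calls++;
            return super.read(b, off, len);
        }
    }

    /** Reads the whole source one byte at a time; returns how many calls hit the source. */
    static int singleByteReads(InputStream source, boolean buffered) throws IOException {
        CountingInputStream counter = new CountingInputStream(source);
        InputStream in = buffered ? new BufferedInputStream(counter, 4096) : counter;
        while (in.read() != -1) {
            // consume one byte per call
        }
        return counter.calls;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[10_000];
        System.out.println(singleByteReads(new ByteArrayInputStream(data), false)); // 10001
        System.out.println(singleByteReads(new ByteArrayInputStream(data), true));
    }
}
```

Without the buffer, every byte (plus the final end-of-stream check) is a separate call to the source. With a 4,096-byte buffer, only a handful of bulk reads reach the source, and the remaining single-byte reads are served from application memory.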

To get a better feel for what happens when data is read from the disk, let’s consider the process of duplicating a file: open the source file, open a destination file, read data from the source file, and write that data to the destination file. Even though the procedure is always the same, how much data is copied at a time and how far the data has to travel along the chain of buffers shown in Figure 5.1 can make a substantial difference.
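The four steps of the duplication procedure map directly onto a read/write loop. In this sketch the class `FileDuplicator` is hypothetical, and the chunk size is a parameter so the different strategies discussed in this section can be compared: a chunk size of 1 gives byte-at-a-time copying, and 4096 matches the 4K chunks recommended below. No intermediate buffer is involved; each read goes from the OS straight into the chunk array.

```java
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

class FileDuplicator {
    /**
     * Duplicates source into destination, moving at most chunkSize bytes
     * per read/write pair. No intermediate buffer is used: each read goes
     * straight from the OS into the chunk array.
     */
    static long copy(String source, String destination, int chunkSize) throws IOException {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        byte[] chunk = new byte[chunkSize];
        long total = 0;
        try (InputStream in = new FileInputStream(source);              // 1. open the source
             OutputStream out = new FileOutputStream(destination)) {    // 2. open the destination
            int n;
            while ((n = in.read(chunk)) != -1) {                        // 3. read up to chunkSize bytes
                out.write(chunk, 0, n);                                 // 4. write exactly what was read
                total += n;
            }
        }
        return total;
    }
}
```

For example, `FileDuplicator.copy("level1.dat", "level1.bak", 4096)` would duplicate a hypothetical level file in 4K chunks. Note that `out.write(chunk, 0, n)` writes only the `n` bytes actually read, since the last read is usually shorter than a full chunk.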

If a single byte is read at a time but an intermediate buffer exists, the first single-byte read fills the intermediate buffer. Depending on the size of the intermediate buffer, the implementation details of the OS buffering, and the size of the drive buffer, as many OS-level and disk-level accesses as necessary are carried out to fill it. Thereafter, single-byte reads are served from the intermediate buffer instead of accessing the disk. This continues until data that is not in the intermediate buffer must be read, at which point the intermediate buffer is filled again and the process repeats.

If a single byte is read at a time and no intermediate buffer exists, the OS still reads a chunk of data from the disk. Because the OS manages memory in pages, it reads at least one page (typically 4K) of data at a time. After that, single-byte reads are served from the OS-level cache until there is a cache miss, at which point the process repeats.

Reading the file in, say, 4K chunks without an intermediate buffer is better than reading one or a few bytes at a time through an intermediate buffer. If data is already read in optimal chunks, there is no need for an intermediate buffer. In fact, in that scenario an intermediate buffer can slow the process down, because it forces an unnecessary copy of the data. We will come back to this point in the performance section of this chapter.

An even better way to duplicate a file is to ask the OS to do it instead of having the application do it. If the OS is responsible for duplicating the file, the file’s data never has to reach the intermediate buffer or the application: the OS reads the data into its own buffer and writes it from that buffer back to the disk, leaving the application out of the loop entirely. Because this approach is OS dependent, and because there are already other ways to duplicate a file, Java does not expose such OS-level functions. If, and only if, your game needs to copy or move a substantial number of files, you may want to consider writing a simple native function that calls the corresponding OS functions. For more information on wrapping native functions, refer to Chapter 11, “Java Native Interface.”
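Before writing native code, it may be worth trying `FileChannel.transferTo`, added in JDK 1.4. Its documentation notes that many operating systems can transfer bytes directly from the filesystem cache to the target channel without copying them, although whether a given JVM and OS actually do so is not guaranteed. This sketch assumes Java 7 or later for `Path` and `FileChannel.open`; the class name `ChannelCopy` is hypothetical.

```java
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class ChannelCopy {
    /** Copies source to destination via FileChannel.transferTo; returns bytes copied. */
    static long copy(Path source, Path destination) throws IOException {
        try (FileChannel in = FileChannel.open(source, StandardOpenOption.READ);
             FileChannel out = FileChannel.open(destination, StandardOpenOption.CREATE,
                     StandardOpenOption.WRITE, StandardOpenOption.TRUNCATE_EXISTING)) {
            long size = in.size();
            long position = 0;
            // transferTo may move fewer bytes than requested, so loop until done
            while (position < size) {
                long moved = in.transferTo(position, size - position, out);
                if (moved <= 0) {
                    break; // source shrank underneath us; stop rather than spin
                }
                position += moved;
            }
            return position;
        }
    }
}
```

On Java 7 and later, `Files.copy(source, target, StandardCopyOption.REPLACE_EXISTING)` is the simplest option; how much of the work it hands to the OS is an implementation detail of the JDK.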

Prior to JDK 1.4, every IO operation, including operations that sent data to a device such as the video card or the sound card, resulted in an extra copy of the data. With the introduction of direct byte buffers in JDK 1.4, the extra copy can be avoided, which can result in a substantial performance gain. We will discuss this topic in detail in an upcoming section.
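A direct buffer is created with `ByteBuffer.allocateDirect`. Per the `ByteBuffer` documentation, the JVM makes a best effort to perform native IO directly on such a buffer, avoiding a copy to or from an intermediate buffer around each OS call; direct buffers are also more expensive to allocate, so the usual pattern is to allocate one and reuse it. In this sketch, `DirectBufferRead` and `byteSum` are hypothetical names, and Java 7+ APIs are assumed for opening the channel.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class DirectBufferRead {
    /** Sums a file's bytes (as unsigned values), reading through one reusable direct buffer. */
    static long byteSum(Path file, int capacity) throws IOException {
        ByteBuffer buffer = ByteBuffer.allocateDirect(capacity); // allocated outside the Java heap
        long sum = 0;
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            while (channel.read(buffer) != -1) {
                buffer.flip();                    // switch from filling to draining
                while (buffer.hasRemaining()) {
                    sum += buffer.get() & 0xFF;   // treat each byte as unsigned
                }
                buffer.clear();                   // make room for the next read
            }
        }
        return sum;
    }
}
```

The `flip()`/`clear()` pair is the standard way to alternate a buffer between being filled by the channel and being drained by the application.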

Memory-mapped files were also introduced in JDK 1.4. The contents of a memory-mapped file are managed entirely at the OS level; in other words, mapping a file exposes the OS buffer directly to the application. When used appropriately, memory-mapped files can result in substantial performance gains. A separate section in this chapter is dedicated to mapped byte buffers.
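A file is mapped with `FileChannel.map`, which returns a `MappedByteBuffer`; reading from that buffer reads the file’s pages as the OS maps them in, with no explicit `read` calls. This sketch maps a whole file read-only and counts occurrences of one byte value. The class `MappedRead` is hypothetical, Java 7+ APIs are assumed for opening the channel, and a single mapping is limited to `Integer.MAX_VALUE` bytes.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class MappedRead {
    /** Counts occurrences of target by mapping the whole file read-only. */
    static long count(Path file, byte target) throws IOException {
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            // The mapping exposes the file's pages directly; no read() calls are made below.
            MappedByteBuffer map = channel.map(FileChannel.MapMode.READ_ONLY, 0, channel.size());
            long hits = 0;
            while (map.hasRemaining()) {
                if (map.get() == target) {
                    hits++;
                }
            }
            return hits;
        }
    }
}
```

According to the `FileChannel.map` documentation, a mapping stays valid after its channel is closed and is released only when the buffer itself is garbage-collected, which is worth keeping in mind for very large or frequently remapped files.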
