Testing the failover procedure

Testing the HACMP failover is a procedure that can take several days, depending upon the complexity of the configuration. The configuration that we test here has no complicated failover requirements, but it must still be tested and understood. As we gain further experience in this area, we will begin to understand and tune both our HACMP environment and its test procedures.

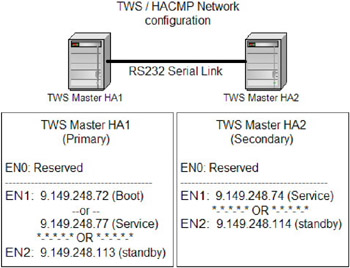

Figure A-4 shows our implementation environment in detail.

Figure A-4: Our environment in more detail

The specific configuration details of our IBM Tivoli Workload Scheduler HACMP environment are described in the following sections.

HACMP Cluster topology

Example A-1 shows our HACMP Cluster topology.

Example A-1: /usr/es/sbin/cluster/utilities/cllscf > cl_top.txt

    Cluster Description of Cluster tws
    Cluster ID: 71
    There were 2 networks defined : production, serialheartbeat
    There are 2 nodes in this cluster.

    NODE tehnigaxhasa01:
        This node has 2 service interface(s):

        Service Interface emeamdm:
            IP address:       9.149.248.77
            Hardware Address:
            Network:          production
            Attribute:        public
            Aliased Address?: Not Supported

        Service Interface emeamdm has 1 boot interfaces.
            Boot (Alternate Service) Interface 1: tehnigaxhasa01
            IP address:       9.149.248.72
            Network:          production
            Attribute:        public

        Service Interface emeamdm has 1 standby interfaces.
            Standby Interface 1: ha01stby
            IP address:       9.149.248.113
            Network:          production
            Attribute:        public

        Service Interface nodetwo:
            IP address:       /dev/tty1
            Hardware Address:
            Network:          serialheartbeat
            Attribute:        serial
            Aliased Address?: Not Supported

        Service Interface nodetwo has no boot interfaces.
        Service Interface nodetwo has no standby interfaces.

    NODE tehnigaxhasa02:
        This node has 2 service interface(s):

        Service Interface tehnigaxhasa02:
            IP address:       9.149.248.74
            Hardware Address:
            Network:          production
            Attribute:        public
            Aliased Address?: Not Supported

        Service Interface tehnigaxhasa02 has no boot interfaces.

        Service Interface tehnigaxhasa02 has 1 standby interfaces.
            Standby Interface 1: ha02stby
            IP address:       9.149.248.114
            Network:          production
            Attribute:        public

        Service Interface nodeone:
            IP address:       /dev/tty1
            Hardware Address:
            Network:          serialheartbeat
            Attribute:        serial
            Aliased Address?: Not Supported

        Service Interface nodeone has no boot interfaces.
        Service Interface nodeone has no standby interfaces.

    Breakdown of network connections:

    Connections to network production
        Node tehnigaxhasa01 is connected to network production by these interfaces:
            tehnigaxhasa01
            emeamdm
            ha01stby
        Node tehnigaxhasa02 is connected to network production by these interfaces:
            tehnigaxhasa02
            ha02stby

    Connections to network serialheartbeat
        Node tehnigaxhasa01 is connected to network serialheartbeat by these interfaces:
            nodetwo
        Node tehnigaxhasa02 is connected to network serialheartbeat by these interfaces:
            nodeone
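Before a failover test, it can help to confirm how the addresses in this topology fall into logical subnets. The following Python sketch is illustrative only and is not part of HACMP. It assumes the netmask 0xffffffe0 (a /27) that ifconfig reports in this environment, and the labels in `ADDRESSES` are our own shorthand for the interfaces listed above. Configurations of this kind typically expect the boot and service addresses to share a subnet while the standby addresses sit on a different one; treat that expectation as an assumption to verify against your own HACMP documentation.

```python
import ipaddress

# Netmask as reported by ifconfig in this environment: 0xffffffe0 (a /27).
NETMASK = str(ipaddress.IPv4Address(0xFFFFFFE0))  # "255.255.255.224"

# Addresses copied from the cllscf output; the labels are illustrative.
ADDRESSES = {
    "emeamdm (service)": "9.149.248.77",
    "tehnigaxhasa01 (boot)": "9.149.248.72",
    "ha01stby (standby)": "9.149.248.113",
    "tehnigaxhasa02 (service)": "9.149.248.74",
    "ha02stby (standby)": "9.149.248.114",
}


def subnet_of(ip, netmask=NETMASK):
    """Return the network that contains ip under the given netmask."""
    return ipaddress.ip_network(f"{ip}/{netmask}", strict=False)


subnets = {label: subnet_of(ip) for label, ip in ADDRESSES.items()}

for label, net in subnets.items():
    print(f"{label:26} {net}")
```

With these values, the service and boot addresses land in one /27 and both standby addresses land in another, which is the layout the sketch is meant to make visible.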

HACMP Cluster Resource Group topology

Example A-2 shows our HACMP Cluster Resource Group topology.

Example A-2: /usr/es/sbin/cluster/utilities/clshowres -g'twsmdmrg' > rg_top.txt

    Resource Group Name                          twsmdmrg
    Node Relationship                            cascading
    Site Relationship                            ignore
    Participating Node Name(s)                   tehnigaxhasa01 tehnigaxhasa02
    Dynamic Node Priority
    Service IP Label                             emeamdm
    Filesystems                                  /opt/tws
    Filesystems Consistency Check                fsck
    Filesystems Recovery Method                  sequential
    Filesystems/Directories to be exported       /opt/tws
    Filesystems to be NFS mounted                /opt/tws
    Network For NFS Mount
    Volume Groups                                twsvg
    Concurrent Volume Groups
    Disks
    GMD Replicated Resources
    PPRC Replicated Resources
    Connections Services
    Fast Connect Services
    Shared Tape Resources
    Application Servers                          twsmdm
    Highly Available Communication Links
    Primary Workload Manager Class
    Secondary Workload Manager Class
    Miscellaneous Data
    Automatically Import Volume Groups           false
    Inactive Takeover                            false
    Cascading Without Fallback                   true
    SSA Disk Fencing                             false
    Filesystems mounted before IP configured     false

    Run Time Parameters:

    Node Name                                    tehnigaxhasa01
    Debug Level                                  high
    Format for hacmp.out                         Standard

    Node Name                                    tehnigaxhasa02
    Debug Level                                  high
    Format for hacmp.out                         Standard
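A saved copy of this output, such as rg_top.txt, can serve as a baseline to compare against before each test run. The Python sketch below is a hypothetical helper, not an HACMP utility. It assumes the file keeps the column-aligned layout of clshowres, with at least two spaces between an attribute name and its value; `SAMPLE` is an abbreviated excerpt of the values shown above, and `EXPECTED` lists the attributes we chose to check.

```python
import re

# Abbreviated excerpt of the clshowres output, kept column-aligned (assumed layout).
SAMPLE = """\
Resource Group Name                          twsmdmrg
Node Relationship                            cascading
Participating Node Name(s)                   tehnigaxhasa01 tehnigaxhasa02
Dynamic Node Priority
Service IP Label                             emeamdm
Volume Groups                                twsvg
Application Servers                          twsmdm
Cascading Without Fallback                   true
Run Time Parameters:
Node Name                                    tehnigaxhasa01
"""


def parse_resource_group(text):
    """Map attribute name -> value for the lines before 'Run Time Parameters'."""
    attrs = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("Run Time Parameters"):
            break  # per-node settings follow; they repeat keys, so stop here
        parts = re.split(r"\s{2,}", line, maxsplit=1)
        attrs[parts[0]] = parts[1] if len(parts) == 2 else ""
    return attrs


# Attributes we expect to stay fixed between test runs (our choice of checks).
EXPECTED = {
    "Node Relationship": "cascading",
    "Service IP Label": "emeamdm",
    "Volume Groups": "twsvg",
    "Application Servers": "twsmdm",
    "Cascading Without Fallback": "true",
}

attrs = parse_resource_group(SAMPLE)
mismatches = {k: attrs.get(k) for k, v in EXPECTED.items() if attrs.get(k) != v}
print("mismatches:", mismatches)
```

An empty `mismatches` dictionary means the resource group still matches the baseline; any entry names an attribute that changed and the value now reported.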

ifconfig -a

Example A-3 shows the output of ifconfig -a in our environment.

Example A-3: ifconfig -a output

    Node01 $ ifconfig -a
    en0: flags=e080863<UP,BROADCAST,NOTRAILERS,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT>
            inet 9.164.212.104 netmask 0xffffffe0 broadcast 9.164.212.127
    en1: flags=4e080863<UP,BROADCAST,NOTRAILERS,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT,PSEG>
            inet 9.149.248.72 netmask 0xffffffe0 broadcast 9.149.248.95
    en2: flags=7e080863<UP,BROADCAST,NOTRAILERS,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT,CHECKSUM_OFFLOAD,CHECKSUM_SUPPORT,PSEG>
            inet 9.149.248.113 netmask 0xffffffe0 broadcast 9.149.248.127
    lo0: flags=e08084b<UP,BROADCAST,LOOPBACK,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT>

    Node02 $ ifconfig -a
    en0: flags=e080863<UP,BROADCAST,NOTRAILERS,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT>
            inet 9.164.212.105 netmask 0xffffffe0 broadcast 9.164.212.127
    en1: flags=4e080863<UP,BROADCAST,NOTRAILERS,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT,PSEG>
            inet 9.149.248.74 netmask 0xffffffe0 broadcast 9.149.248.95
    en2: flags=7e080863<UP,BROADCAST,NOTRAILERS,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT,CHECKSUM_OFFLOAD,CHECKSUM_SUPPORT,PSEG>
            inet 9.149.248.114 netmask 0xffffffe0 broadcast 9.149.248.127
    lo0: flags=e08084b<UP,BROADCAST,LOOPBACK,RUNNING,SIMPLEX,MULTICAST,GROUPRT,64BIT>
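One check a failover test might include is finding which adapter, if any, currently holds a given address, for example the service address 9.149.248.77 of emeamdm. The Python sketch below is a hypothetical helper that scans captured `ifconfig -a` text; the function names are our own, and `NODE01` is an abbreviated copy of the Node01 output above. It matches on the `name: flags=` and `inet` tokens only, so it does not depend on how the captured text is wrapped.

```python
import re

# Abbreviated copy of the Node01 output shown above (flags shortened).
NODE01 = """\
en0: flags=e080863<UP,BROADCAST,RUNNING>
        inet 9.164.212.104 netmask 0xffffffe0 broadcast 9.164.212.127
en1: flags=4e080863<UP,BROADCAST,RUNNING>
        inet 9.149.248.72 netmask 0xffffffe0 broadcast 9.149.248.95
en2: flags=7e080863<UP,BROADCAST,RUNNING>
        inet 9.149.248.113 netmask 0xffffffe0 broadcast 9.149.248.127
lo0: flags=e08084b<UP,BROADCAST,LOOPBACK,RUNNING>
"""

# Either an interface header ("en1: flags=") or an IPv4 "inet" entry.
TOKEN = re.compile(r"\b([a-z]+\d+): flags=|\binet (\d{1,3}(?:\.\d{1,3}){3})")


def inet_by_interface(text):
    """Map interface name -> list of IPv4 addresses found under it."""
    result = {}
    current = None
    for match in TOKEN.finditer(text):
        if match.group(1):
            current = match.group(1)
            result.setdefault(current, [])
        elif current is not None:
            result[current].append(match.group(2))
    return result


def holder_of(address, text):
    """Return the interface that carries address, or None if none does."""
    for iface, addrs in inet_by_interface(text).items():
        if address in addrs:
            return iface
    return None


print("boot address on:", holder_of("9.149.248.72", NODE01))
print("service address on:", holder_of("9.149.248.77", NODE01))
```

In the captured output, en1 on Node01 carries the boot address 9.149.248.72 and no adapter carries 9.149.248.77. Running the same check on each node before and after a failover gives a simple record of where the service address moved.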
