5.1 Implement IBM Tivoli Management Framework in an HACMP cluster

|

| < Day Day Up > |

|

5.1 Implement IBM Tivoli Management Framework in an HACMP cluster

IBM Support officially does not recognize implementing two instances of IBM Tivoli Management Framework on a single operating system image. While it is technically possible to implement this configuration, it is not supported. You can read more about this configuration in the IBM Redbook High Availability Scenarios for Tivoli Software, SG24-2032. In this chapter, we show a supported HA configuration for a Tivoli server.

| Important: | Even though both this chapter and 4.1.11, "Add IBM Tivoli Management Framework" on page 303 deal with configuring IBM Tivoli Management Framework for HACMP, they should be treated as separate from each other:

This chapter also provides implementation details for IBM Tivoli Management Framework 4.1. For a discussion on how to implement IBM Tivoli Management Framework 3.7b on the MSCS platform, refer to Appendix B, "TMR clustering for Tivoli Framework 3.7b on MSCS" on page 597. |

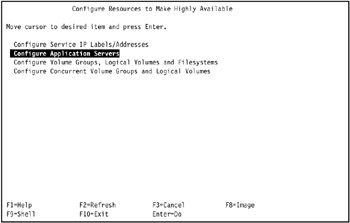

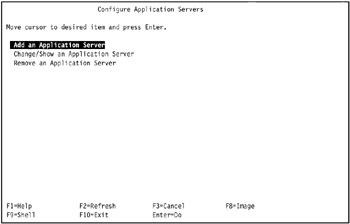

We also discuss how to configure Managed Nodes and Endpoints for high availability. The general steps to implement IBM Tivoli Management Framework for HACMP are:

-

"Inventory hardware" on page 413

-

"Planning the high availability design" on page 414

-

"Create the shared disk volume" on page 416

-

"Install IBM Tivoli Management Framework" on page 449

-

"Tivoli Web interfaces" on page 460

-

"Tivoli Managed Node" on page 460

-

"Tivoli Endpoints" on page 462

-

"Configure HACMP" on page 476

The following sections break down each step into the following operations.

5.1.1 Inventory hardware

Here we present an inventory of the hardware we used for writing this redbook. This enables you to determine what changes you may need to make when using this book as a guide in your own deployment by comparing your environment against what we used.

Our environment consisted of two IBM RS/6000 7025-F80s. They are identically configured. There are four PowerPC RS64-III 450 MHz processors in each system. There is 1 GB of RAM in each system. We determined the amount of RAM by using the lsattr command:

lsattr -El mem0

The firmware is at level CL030829, which we verified by using the lscfg command:

lscfg -vp | grep -F .CL

Best practice is to bring your hardware up to the latest firmware and microcode levels. Download the most recent firmware and microcode from:

-

http://www-1.ibm.com/servers/eserver/support/pseries/fixes/hm.html

Onboard the system, the following devices are installed:

-

SCSI 8mm Tape Drive (20000 MB)

-

5 x 16-bit LVD SCSI Disk Drive (9100 MB)

-

16-bit SCSI Multimedia CD-ROM Drive (650 MB)

There are four adapter cards in each system:

-

IBM 10/100 Mbps Ethernet PCI Adapter

-

IBM 10/100/1000 Base-T Ethernet PCI Adapter (14100401)

-

IBM SSA 160 SerialRAID Adapter

-

IBM PCI Token ring Adapter

We did not use the IBM PCI Token ring Adapter.

Shared between the two systems is an IBM 7133 Model 010 Serial Disk System disk tray. Download the most recent SSA drive microcode from:

-

http://www.storage.ibm.com/hardsoft/products/ssa/index.html

The IBM SSA 160 SerialRAID Adapter is listed in this Web site as the Advanced SerialRAID Adapter. In our environment, the adapters are at loadable microcode level 05, ROS level BD00.

There are 16 SSA drives physically installed in the disk tray, but only 8 are active. The SSA drives are 2 GB type DFHCC2B1, at microcode level 8877. In the preceding Web page, the drives are listed as type DFHC (RAMST).

5.1.2 Planning the high availability design

The restriction against two instances of IBM Tivoli Management Framework on the same operating system image prevents mutual takeover implementations. Instead, we show in this section how to install IBM Tivoli Management Framework and configure it in AIX HACMP for a two-node hot standby cluster.

In this configuration, IBM Tivoli Management Framework is active on only one cluster node at a time, but is installed onto a shared volume group available to all cluster nodes. It is configured to always run from the service IP label and corresponding IP address of the cluster node it normally runs upon. Tivoli Desktop sessions connect to this IP address.

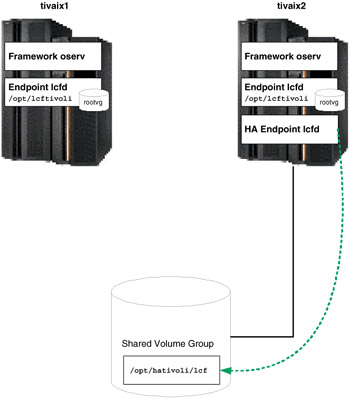

In our environment we configured the file system /opt/hativoli on the shared volume group. In normal operation in our environment, the oserv server of IBM Tivoli Management Framework runs on tivaix1 as shown in Figure 5-1 on page 415.

Figure 5-1: IBM Tivoli Management Framework in normal operation on tivaix1

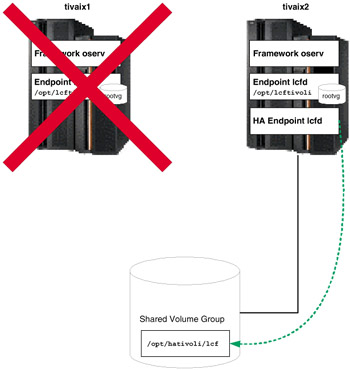

If IBM Tivoli Management Framework on tivaix1 falls over to tivaix2, the IP service label and shared file system are automatically configured by HACMP onto tivaix2. Tivoli Desktop sessions are restarted when the oserv server is shut down, so users of Tivoli Desktop will have to log back in. The fallover scenario is shown in Figure 5-2 on page 416.

Figure 5-2: State of cluster after IBM Tivoli Management Framework falls over to tivaix2

All managed resources are brought over at the same time because the entire object database is contained in /opt/hativoli. As far as IBM Tivoli Management Framework is concerned, there is no functional difference between running on tivaix1 or tivaix2.

5.1.3 Create the shared disk volume

In this section, we show you how to create and configure a shared disk volume to install IBM Tivoli Management Framework into. Before installing HACMP, we create the shared volume group and install the application servers in them. We can then manually test the fallover of the application server before introducing HACMP.

Plan the shared disk

The cluster needs a shared volume group to host the IBM Tivoli Management Framework upon so that participating cluster nodes can take over and vary on the volume group during a fallover. Here we show how to plan shared volume groups for an HACMP cluster that uses SSA drives.

Start by making an assessment of the SSA configuration on the cluster.

Assess SSA links

Ensure that all SSA links are viable, to rule out any SSA cabling issues before starting other assessments. To assess SSA links:

-

Enter: smit diag.

-

Go to Current Shell Diagnostics and press Enter. The DIAGNOSTIC OPERATING INSTRUCTIONS diagnostics screen displays some navigation instructions.

-

Press Enter. The FUNCTION SELECTION diagnostics screen displays diagnostic functions.

-

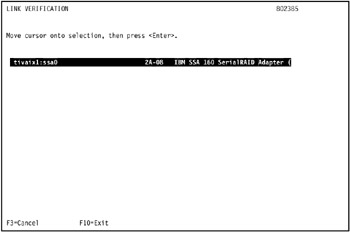

Go to Task Selection (Diagnostics, Advanced Diagnostics, Service Aids, etc.) -> SSA Service Aids -> Link Verification and press Enter. The LINK VERIFICATION diagnostics screen displays a list of SSA adapters to test upon. Go to an SSA adapter to test and press Enter.

In our environment, we selected the SSA adapter ssa0 on tivaix1 as shown in Figure 5-3 on page 418.

Figure 5-3: Start SSA link verification on tivaix1 -

The link verification test screen displays the results of the test.

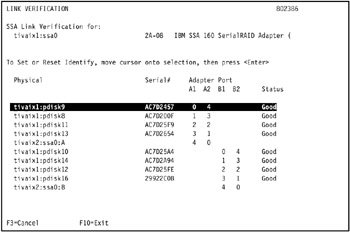

The results of the link verification test in our environment are shown in Figure 5-4 on page 419.

Figure 5-4: Results of link verification test on SSA adapter ssa0 in tivaix1The link verification test indicates only the following SSA disks are available on tivaix1: pdisk9, pdisk8, pdisk11, pdisk13, pdisk10, pdisk14, pdisk14, pdisk12, and pdisk16.

-

Repeat the operation for remaining cluster nodes.

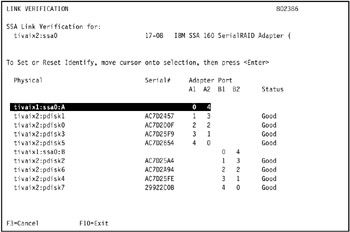

In the environment, we tested the link verification for SSA adapter ssa0 on tivaix2, as shown in Figure 5-5 on page 420.

Figure 5-5: Results of SSA link verification test on SSA adapter ssa0 in tivaix2The link verification test indicates only the following SSA disks are available on tivaix2: pdisk0, pdisk1, pdisk2, pdisk3, pdisk4, pdisk5, pdisk6, and pdisk7.

Identify the SSA connection addresses

The connection address uniquely identifies a SSA device. To display the connection address of a physical disk, follow these steps:

-

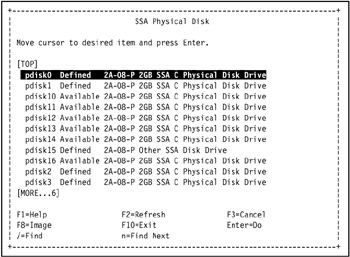

Enter: smit chgssapdsk. The SSA Physical Disk SMIT selection screen displays a list of known physical SSA disks.

Note You can also enter: smit devices. Then go to SSA Disks -> SSA Physical Disks -> Change/Show Characteristics of an SSA Physical Disk and press Enter.

-

Go to a SSA disk and press Enter, as shown in Figure 5-6 on page 421.

Figure 5-6: Select an SSA disk from the SSA Physical Disk SMIT selection screen -

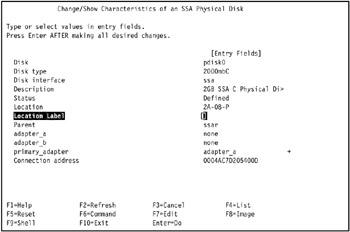

The Change/Show Characteristics of an SSA Physical Disk SMIT screen displays the characteristics of the selected SSA disk. The Connection address field displays the SSA connection address of the selected disk, as shown in Figure 5-7 on page 422.

Figure 5-7: Identify the connection address of an SSA disk -

Repeat the operation for all remaining SSA drives.

-

Repeat the operation for all remaining cluster nodes.

An SSA connection address is unique throughout the cluster. Identify the relationship between each connection address and the AIX physical disk definition it represents on each cluster node. This establishes an actual physical relationship between the defined physical disk in AIX and the hardware disk, as identified by its SSA connection address.

In our environment, we identified the SSA connection address of the disks on tivaix1 and tivaix2 as shown in Table 5-1.

| Physical disk on tivaix1 | Connection address | Physical disk on tivaix2 |

|---|---|---|

| pdisk0 | 0004AC7D205400D | pdisk8 |

| pdisk1 | 0004AC7D20A200D | pdisk9 |

| pdisk2 | 0004AC7D22A800D | pdisk10 |

| pdisk3 | 0004AC7D240D00D | pdisk11 |

| pdisk4 | 0004AC7D242500D | pdisk12 |

| pdisk5 | 0004AC7D25BC00D | pdisk13 |

| pdisk6 | 0004AC7D275E00D | pdisk14 |

| pdisk7 | 0004AC7DDACC00D | pdisk15 |

| pdisk8 | 0004AC7D200F00D | pdisk0 |

| pdisk9 | 0004AC7D245700D | pdisk1 |

| pdisk10 | 0004AC7D25A400D | pdisk2 |

| pdisk11 | 0004AC7D25F900D | pdisk3 |

| pdisk12 | 0004AC7D25FE00D | pdisk4 |

| pdisk13 | 0004AC7D265400D | pdisk5 |

| pdisk14 | 0004AC7D2A9400D | pdisk6 |

| pdisk15 | 08005AEA42BC00D | n/a |

| pdisk16 | 000629922C0B00D | pdisk7 |

Using the list of disks identified in the link verification test in the preceding section, we highlight (in bold in Table 5-1 on page 422) the disks on each cluster node that are physically available to be shared on both nodes. From this list we identify which disks are also available to be shared as logical elements by using the assessments in the following sections.

Assess tivaix1

In our environment, the available SSA physical disks on tivaix1 are shown in Example 5-1.

Example 5-1: Available SSA disks on tivaix1 before configuring shared volume groups

[root@tivaix1:/home/root] lsdev -C -c pdisk -s ssar -H name status location description pdisk0 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk1 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk10 Available 2A-08-P 2GB SSA C Physical Disk Drive pdisk11 Available 2A-08-P 2GB SSA C Physical Disk Drive pdisk12 Available 2A-08-P 2GB SSA C Physical Disk Drive pdisk13 Available 2A-08-P 2GB SSA C Physical Disk Drive pdisk14 Available 2A-08-P 2GB SSA C Physical Disk Drive pdisk15 Defined 2A-08-P Other SSA Disk Drive pdisk16 Available 2A-08-P 2GB SSA C Physical Disk Drive pdisk2 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk3 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk4 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk5 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk6 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk7 Defined 2A-08-P 2GB SSA C Physical Disk Drive pdisk8 Available 2A-08-P 2GB SSA C Physical Disk Drive pdisk9 Available 2A-08-P 2GB SSA C Physical Disk Drive

The logical disks on tivaix1 are defined as shown in Example 5-2. Note the physical volume ID (PVID) field in the second column, and the volume group assignment field in the third column.

Example 5-2: Logical disks on tivaix1 before configuring shared volume groups

[root@tivaix1:/home/root] lspv hdisk0 0001813fe67712b5 rootvg active hdisk1 0001813f1a43a54d rootvg active hdisk2 0001813f95b1b360 rootvg active hdisk3 0001813fc5966b71 rootvg active hdisk4 0001813fc5c48c43 None hdisk5 0001813fc5c48d8c None hdisk6 000900066116088b tiv_vg1 hdisk7 000000000348a3d6 tiv_vg1 hdisk8 00000000034d224b tiv_vg2 hdisk9 none None hdisk10 none None hdisk11 none None hdisk12 00000000034d7fad tiv_vg2 hdisk13 none None

The logical-to-physical SSA disk relationship of configured SSA drives on tivaix1 is shown in Example 5-3.

Example 5-3: How to show logical to physical SSA disk relationships on tivaix1.

[root@tivaix1:/home/root] for i in $(lsdev -CS1 -t hdisk -sssar -F name) > do > echo "$i: "$(ssaxlate -l $i) > done hdisk10: pdisk12 hdisk11: pdisk13 hdisk12: pdisk14 hdisk13: pdisk16 hdisk6: pdisk8 hdisk7: pdisk9 hdisk8: pdisk10 hdisk9: pdisk11

Assess tivaix2

The same SSA disks in the same SSA loop that are available on tivaix2 are shown in Example 5-4.

Example 5-4: Available SSA disks on tivaix2 before configuring shared volume groups

[root@tivaix2:/home/root] lsdev -C -c pdisk -s ssar -H name status location description pdisk0 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk1 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk10 Defined 17-08-P 2GB SSA C Physical Disk Drive pdisk11 Defined 17-08-P 2GB SSA C Physical Disk Drive pdisk12 Defined 17-08-P 2GB SSA C Physical Disk Drive pdisk13 Defined 17-08-P 2GB SSA C Physical Disk Drive pdisk14 Defined 17-08-P 2GB SSA C Physical Disk Drive pdisk15 Defined 17-08-P 2GB SSA C Physical Disk Drive pdisk2 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk3 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk4 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk5 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk6 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk7 Available 17-08-P 2GB SSA C Physical Disk Drive pdisk8 Defined 17-08-P 2GB SSA C Physical Disk Drive pdisk9 Defined 17-08-P 2GB SSA C Physical Disk Drive

The logical disks on tivaix2 are defined as shown in Example 5-5.

Example 5-5: Logical disks on tivaix2 before configuring shared volume groups

[root@tivaix2:/home/root] lspv hdisk0 0001814f62b2a74b rootvg active hdisk1 none None hdisk2 none None hdisk3 none None hdisk4 none None hdisk5 000900066116088b tiv_vg1 hdisk6 000000000348a3d6 tiv_vg1 hdisk7 00000000034d224b tiv_vg2 hdisk8 0001813f72023fd6 None hdisk9 0001813f72025253 None hdisk10 0001813f71dd8f80 None hdisk11 00000000034d7fad tiv_vg2 hdisk12 0001814f7ce1d08d None hdisk16 0001814fe8d10853 None

The logical-to-physical SSA disk relationship of configured SSA drives on tivaix2 is shown in Example 5-6.

Example 5-6: Show logical-to-physical SSA disk relationships on tivaix2

[root@tivaix2:/home/root] for i in $(lsdev -CS1 -t hdisk -sssar -F name) > do > echo "$i: "$(ssaxlate -l $i) > done hdisk10: pdisk5 hdisk11: pdisk6 hdisk12: pdisk7 hdisk5: pdisk0 hdisk6: pdisk1 hdisk7: pdisk2 hdisk8: pdisk3 hdisk9: pdisk4

Identify the volume group major numbers

Each volume group is assigned a major device number, a unique number on a cluster node different from the major number of any other device on the cluster node. Creating a new shared volume group, on the other hand, requires a new major device number assigned to it with the following characteristics:

-

It is different from any other major number of any device on the cluster node.

-

It is exactly the same as the major number assigned to the same shared volume group on all other cluster nodes that share the volume group.

Satisfy these criteria by identifying the existing volume group major numbers that exist on each cluster node so a unique number can be assigned for the new shared volume group. If any other shared volume groups already exist, also identify the major numbers used for these devices. Whenever possible, try to keep major numbers of similar devices in the same range. This eases the administrative burden of keeping track of the major numbers to assign.

In our environment, we used the following command to identify all major numbers used by all devices on a cluster node:

ls -al /dev/* | awk '{ print $5 }' | awk -F',' '{ print $1 }' | sort | uniq In our environment, the major numbers already assigned include the ones shown in Example 5-7 on page 427. We show only a portion of the output for brevity; the parts we left out are indicated by vertical ellipses (...).

Example 5-7: How to list major numbers already in use on tivaix1

[root@tivaix1:/home/root] ls -al /dev/* | awk '{ print $5 }' | \ > awk -F',' '{ print $1 }' | sort -n | uniq . . . 8 11 . . . 43 44 45 46 47 512 . . . In this environment, the volume groups tiv_vg1 and tiv_vg2 are shared volume groups that already exist. We use the ls command on tivaix1, as shown in Example 5-8,to identify the major numbers used for these shared volume groups.

Example 5-8: Identify the major numbers used for shared volume groups on tivaix1

[root@tivaix1:/home/root] ls -al /dev/tiv_vg1 crw-rw---- 1 root system 45, 0 Nov 05 15:51 /dev/tiv_vg1 [root@tivaix1:/home/root] ls -al /dev/tiv_vg2 crw-r----- 1 root system 46, 0 Nov 10 17:04 /dev/tiv_vg2

Example 5-8 shows that shared volume group tiv_vg1 uses major number 45, and shared volume group tiv_vg2 uses major number 46. We perform the same commands on the other cluster nodes that access the same shared volume groups. In our environment, these commands are entered on tivaix2, as shown in Example 5-9.

Example 5-9: Identify the major numbers used for shared volume groups on tivaix2

[root@tivaix2:/home/root] ls -al /dev/tiv_vg1 crw-r----- 1 root system 45, 0 Dec 15 20:36 /dev/tiv_vg1 [root@tivaix2:/home/root] ls -al /dev/tiv_vg2 crw-r----- 1 root system 46, 0 Dec 15 20:39 /dev/tiv_vg2

Again, you can see that the major numbers are the same on tivaix2 for the same volume groups. Between the list of all major numbers used by all devices, and the major numbers already used by the shared volume groups in our cluster, we choose 49 as the major number to assign to the next shared volume group on all cluster nodes that will access the new shared volume group.

Analyze the assessments

Use the assessment data gathered in the preceding sections to plan the disk sharing design.

Identify which physical disks are not yet assigned to any logical elements. List the physical disks available on each cluster node, as well as each disk's physical volume ID (PVID), its corresponding logical disk, and the volume group the physical disk is assigned to.

If a physical disk is not assigned to any logical elements yet, describe the logical elements as "not available". Disks listed as defined but not available usually indicate connection problems or hardware failure on the disk itself, so do not include these disks in the analysis.

The analysis of tivaix1 indicates that four SSA disks are available as logical elements (highlighted in bold in Table 5-2) because no volume groups are allocated to them: pdisk11, pdisk12, pdisk13, and pdisk16.

| Physical Disk | PVID | Logical Disk | Volume Group |

|---|---|---|---|

| pdisk8 | 000000000348a3d6 | hdisk6 | tiv_vg1 |

| pdisk9 | 000000000348a3d6 | hdisk7 | tiv_vg1 |

| pdisk10 | 00000000034d224b | hdisk8 | tiv_vg2 |

| pdisk11 | n/a | hdisk9 | n/a |

| pdisk12 | n/a | hdisk10 | n/a |

| pdisk13 | n/a | hdisk11 | n/a |

| pdisk14 | 00000000034d7fad | hdisk12 | tiv_vg2 |

| pdisk16 | n/a | hdisk13 | n/a |

We want the two cluster nodes in our environment to share a set of SSA disks, so we have to apply the same analysis of available disks to tivaix2; see Table 5-3 on page 429.

| Physical Disk | PVID | Logical Disk | Volume Group |

|---|---|---|---|

| pdisk0 | 000900066116088b | hdisk5 | tiv_vg1 |

| pdisk1 | 000000000348a3d6 | hdisk6 | tiv_vg1 |

| pdisk2 | 00000000034d224b | hdisk7 | tiv_vg2 |

| pdisk3 | 0001813f72023fd6 | hdisk8 | n/a |

| pdisk4 | 0001813f72025253 | hdisk9 | n/a |

| pdisk5 | 0001813f71dd8f80 | hdisk10 | n/a |

| pdisk6 | 00000000034d7fad | hdisk11 | tiv_vg2 |

| pdisk7 | 0001814f7ce1d08d | hdisk12 | n/a |

The analysis of tivaix2 indicates that four SSA disks are available as logical elements (highlighted in bold in Table 5-3) because no volume groups are allocated to them: pdisk3, pdisk4, pdisk5, and pdisk7.

Pooling together the separate analyses from each cluster node, we arrive at the map shown in Table 5-4. The center two columns show the actual, physical SSA drives as identified by their connection address and the shared volume groups hosted on these drives. The outer two columns show the AIX-assigned physical and logical disks on each cluster node, for each SSA drive.

| tivaix1 disks | Connection address | Volume group | tivaix2 disks | ||

|---|---|---|---|---|---|

| Physical | Logical | Physical | Logical | ||

| pdisk8 | hdisk6 | 0004AC7D200F00D | tiv_vg1 | pdisk0 | hdisk5 |

| pdisk9 | hdisk7 | 0004AC7D245700D | tiv_vg1 | pdisk1 | hdisk6 |

| pdisk10 | hdisk8 | 0004AC7D25A400D | tiv_vg2 | pdisk2 | hdisk7 |

| pdisk11 | hdisk9 | 0004AC7D25F900D | pdisk3 | hdisk8 | |

| pdisk12 | hdisk10 | 0004AC7D25FE00D | pdisk4 | hdisk9 | |

| pdisk13 | hdisk11 | 0004AC7D265400D | pdisk5 | hdisk10 | |

| pdisk14 | hdisk12 | 0004AC7D2A9400D | tiv_vg2 | pdisk6 | hdisk11 |

| pdisk16 | hdisk13 | 000629922C0B00D | pdisk7 | hdisk12 | |

You can think of the AIX physical disk as the handle by which the SSA drivers in AIX use to communicate with the actual SSA hardware drive. Think of the AIX logical disk as the higher level construct that presents a uniform interface to the AIX volume management system. These logical disks are allocated to volume groups, and they map back through a chain (logical disk to physical disk to connection address) to reach the actual SSA hardware drive.

Allocate the SSA disks to a new volume group

The assessments and the analyses shows us that four SSA drives are available to allocate to a volume group for IBM Tivoli Management Framework, and be assigned as a volume group amongst both nodes in our two-node cluster. These are highlighted in bold in the preceding table.

A basic installation of IBM Tivoli Management Framework requires no more than 2 GB. Our assessments in the preceding sections ("Assess tivaix1" on page 423 and , "Assess tivaix2" on page 425) show us that our SSA storage system uses 2 GB drives, so we know the physical capacity of each drive.

We will use two drives for the volume group that will hold IBM Tivoli Management Framework, as shown in the summary analysis table (Table 5-5) that distills all the preceding analysis into the concluding analysis identifying the physical SSA disks to use, and the order in which we specify them when defining them into a volume group.

| tivaix1 Disks | Connection Address | Volume Group | tivaix2 Disks | ||

|---|---|---|---|---|---|

| Physical | Logical | Physical | Logical | ||

| pdisk11 | hdisk9 | 0004AC7D25F900D | itmf_vg | pdisk3 | hdisk8 |

| pdisk12 | hdisk10 | 0004AC7D25FE00D | itmf_vg | pdisk4 | hdisk9 |

The following section describes how to allocate the new volume group on the selected SSA drives.

Configure volume group on SSA drives

Use the SSA drives selected during analysis to configure a volume group upon. This volume group is shared among all the cluster nodes.

To configure a volume group on SSA drives:

-

Select a cluster node from the final analysis table (Table 5-5). Log into that cluster node as root user.

In our environment, we logged into tivaix1 as root user.

-

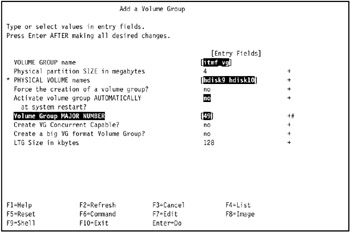

Enter the SMIT fast path command: smit mkvg. The Add a Volume Group SMIT screen appears.

-

Enter: itmf_vg in the VOLUME GROUP name field.

-

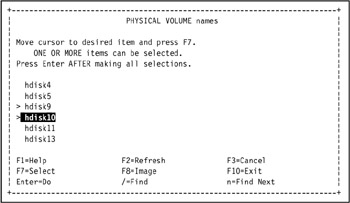

Go to the PHYSICAL VOLUME names field and press F4. The PHYSICAL VOLUME names SMIT dialog appears.

-

Select the physical volumes to include in the new volume group and press Enter. The Add a Volume Group SMIT selection screen appears.

In our environment, we used the summary analysis table to determine that because we are on tivaix1, we need to select hdisk9 and hdisk10 in the Add a Volume Group SMIT selection screen, as shown in Figure 5-8.

Figure 5-8: Select physical volumes for volume group itmf_vg -

Go to the Volume Group MAJOR NUMBER field and enter a unique major number. This number must be unique in every cluster node that the volume group is shared in. Ensure the volume group is not automatically activated at system restart (HACMP needs to automatically activate it) by setting the Activate volume group AUTOMATICALLY at system restart field to no.

Tip Record the volume group major number and the first physical disk you use for the volume group, for later reference in "Import the volume group into the remaining cluster nodes" on page 444.

In our environment, we entered 49 in the Volume Group MAJOR NUMBER field, and set the Activate volume group AUTOMATICALLY at system restart field to no, as shown in Figure 5-9. We use 49 as determined in "Identify the volume group major numbers" on page 426, so it will not conflict with the major numbers chosen for other volume groups and devices.

Figure 5-9: Configure settings to add volume group itmf_vg -

Press Enter. The volume group is created.

-

Use the lsvg and lspv commands to verify the new volume group exists, as shown in Example 5-10.

Example 5-10: Verify creation of shared volume group itmf_vg on tivaix1

[root@tivaix1:/home/root] lsvg rootvg tiv_vg1 tiv_vg2 itmf_vg [root@tivaix1:/home/root] lspv hdisk0 0001813fe67712b5 rootvg active hdisk1 0001813f1a43a54d rootvg active hdisk2 0001813f95b1b360 rootvg active hdisk3 0001813fc5966b71 rootvg active hdisk4 0001813fc5c48c43 None hdisk5 0001813fc5c48d8c None hdisk6 000900066116088b tiv_vg1 hdisk7 000000000348a3d6 tiv_vg1 hdisk8 00000000034d224b tiv_vg2 hdisk9 0001813f72023fd6 itmf_vg active hdisk10 0001813f72025253 itmf_vg active hdisk11 0001813f71dd8f80 None hdisk12 00000000034d7fad tiv_vg2 hdisk13 none None

Create the logical volume and Journaled File System

Create a logical volume and a Journaled File System (JFS) on the new volume group. This makes the volume group available to applications running on AIX.

To create a logical volume and Journaled File System on the new volume group:

-

Create the mount point for the logical volume's file system. Do this on all cluster nodes.

In our environment, we used the following command:

mkdir -p /opt/hativoli

-

Enter: smit crjfsstd.

-

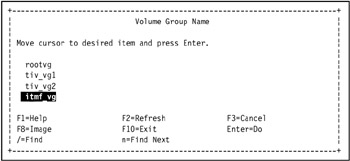

The Volume Group Name SMIT selection screen displays a list of volume groups. Go to the new volume group and press Enter. The Add a Standard Journaled File System SMIT screen displays the attributes for a new standard Journaled File System.

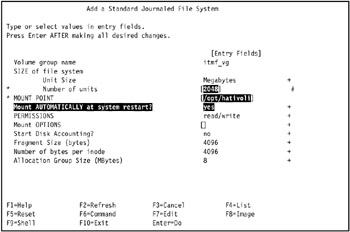

In our environment, we selected itmf_vg, as shown in Figure 5-10 on page 434.

Figure 5-10: Select a volume group using the Volume Group Name SMIT selection screen -

Enter values into the fields.

Number of units

Enter the number of megabytes to allocate for the standard Journaled File System.

MOUNT POINT

The mount point, which is the directory where the file system is available or will be made available.

Mount AUTOMATICALLY at system restart?

Indicates whether the file system is mounted at each system restart. Possible values are:

yes - meaning that the file system is automatically mounted at system restart

no - meaning that the file system is not automatically mounted at system restart.

In our environment, we entered 2048 in the Number of units field, /opt/hativoli in the MOUNT POINT field, and yes in the Mount AUTOMATICALLY at system restart? field, as shown in Figure 5-11 on page 435.

Figure 5-11: Create a standard Journaled File System on volume group itmf_vg in tivaix1 -

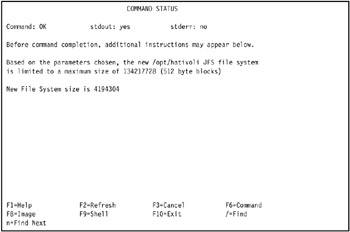

Press Enter to create the standard Journaled File System. The COMMAND STATUS SMIT screen displays the progress and result of the operation. A successful operation looks similar to Figure 5-12 on page 436.

Figure 5-12: Successful creation of JFS file system /opt/hativoli on tivaix1 -

Use the ls, df, mount, and umount commands to verify the new standard Journaled File System, as shown in Example 5-11.

Example 5-11: Verify successful creation of a JFS file system

[root@tivaix1:/home/root] ls /opt/hativoli [root@tivaix1:/home/root] df -k /opt/hativoli Filesystem 1024-blocks Free %Used Iused %Iused Mounted on /dev/hd10opt 262144 68724 74% 3544 6% /opt [root@tivaix1:/home/root] mount /opt/hativoli [root@tivaix1:/home/root] df -k /opt/hativoli Filesystem 1024-blocks Free %Used Iused %Iused Mounted on /dev/lv09 2097152 2031276 4% 17 1% /opt/hativoli [root@tivaix1:/home/root] ls /opt/hativoli lost+found [root@tivaix1:/home/root] umount /opt/hativoli

The new volume group is now populated with a new standard Journaled File System.

| Important: | Our environment does not use multiple SSA adapters due to resource constraints. In a production high availability environment, you use multiple disk controllers. Best practice for HACMP is to use multiple disk controllers and multiple disks for volume groups. Specifically, to ensure disk availability, best practice for each cluster node is to split a volume group between at least two disk controllers and three disks, mirroring across all the disks. |

Configure the logical volume

Rename the new logical volume and its log volume so it is guaranteed to be a unique name in any cluster node. The new name will be the same name on any cluster node that varies on the logical volume's volume group, and must be unique from any other logical volume on all cluster nodes. You only need to perform this operation from one cluster node. The volume group must be online on this cluster node.

In our environment, we wanted to rename logical volume lv09 to itmf_lv, and logical log volume loglv00 to itmf_loglv.

To rename the logical volume and logical log volume:

-

Use the lsvg command as shown in Example 5-12 to identify the logical volumes on the new volume group.

Example 5-12: Identify logical volumes on new volume group

[root@tivaix1:/home/root] lsvg -l itmf_vg itmf_vg: LV NAME TYPE LPs PPs PVs LV STATE MOUNT POINT loglv00 jfslog 1 1 1 closed/syncd N/A lv09 jfs 512 512 1 closed/syncd /opt/hativoli

In our environment, the volume group itmf_vg contains two logical volumes. Logical volume lv09 is for the standard Journal File System /opt/hativoli. Logical volume loglv00 is the log logical volume for lv09.

-

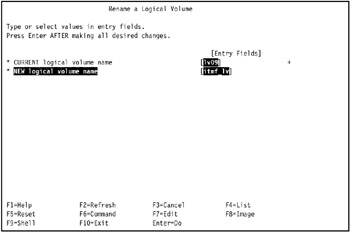

Enter: smit chlv2. You can also enter: smit storage, go to Logical Volume Manager -> Logical Volumes -> Set Characteristic of a Logical Volume -> Rename a Logical Volume and press Enter. The Rename a Logical Volume SMIT screen is displayed.

-

Enter the name of the logical volume to rename in the CURRENT logical volume name field. Enter the new name of the logical volume in the NEW logical volume name field.

In our environment, we entered lv09 in the CURRENT logical volume name field, and itmf_lv in the NEW logical volume name field, as shown in Figure 5-13.

Figure 5-13: Rename a logical volume -

Press Enter to rename the logical volume. The COMMAND STATUS SMIT screen displays the progress and the final status of the renaming operation.

-

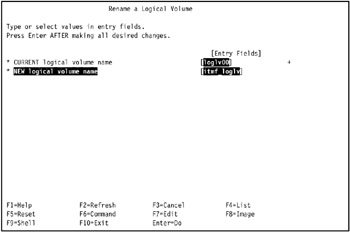

Repeat the operation for the logical log volume.

In our environment, we renamed logical volume loglv00 to itmf_loglv, as shown in Figure 5-14 on page 439.

Figure 5-14: REname the logical log volume -

Run the chfs command as shown in Example 5-13 to update the relationship between the logical volume itmf_lv and logical log volume itmf_loglv.

Example 5-13: Update relationship between renamed logical volumes and logical log volumes

[root@tivaix1:/home/root] chfs /opt/hativoli

-

Verify the chfs command modified the /etc/filesystems file entry for the file system.

In our environment, we used the grep command as shown in Example 5-14 on page 440 to verify that the /etc/filesystems entry for /opt/hativoli matches the new names of the logical volume and logical log volume.

Example 5-14: Verify the chfs command

[root@tivaix1:/home/root] grep -p /opt/hativoli /etc/filesystems /opt/hativoli: dev = /dev/itmf_lv vfs = jfs log = /dev/itmf_loglv mount = true check = false options = rw account = false

The attributes dev and log contain the new names itmf_lv and itmf_loglv, respectively.

Export the volume group

Export the volume group from the cluster node it was created upon to make it available to other cluster nodes.

To export a volume group:

-

Log into the cluster node that the volume group was created upon.

In our environment, we logged into tivaix1 as root user.

-

Note that the volume group is varied on as soon as it is created. Vary off the volume group if necessary, so it can be exported.

In our environment, we varied off the volume group itmf_vg by using the following command:

varyoffvg itmf_vg

-

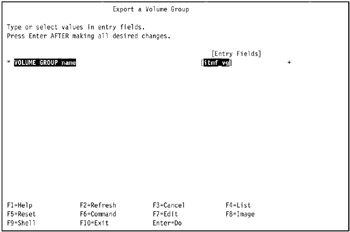

Enter: smit exportvg. The Export a Volume Group SMIT screen displays a VOLUME GROUP name field.

-

Enter the new volume group in the VOLUME GROUP name field.

In our environment, we entered itmf_vg in the VOLUME GROUP name field, as shown in Figure 5-15 on page 441.

Figure 5-15: Export a Volume Group SMIT screen -

Press Enter to export the volume group. The COMMAND STATUS SMIT screen displays the progress and final result of the export operation.

-

Use the lsvg and lspv commands as shown in Example 5-15 to verify the export of the volume group. Notice that the volume group name does not appear in the output of either command.

Example 5-15: Verify the export of volume group itmf_vg from tivaix1

[root@tivaix1:/home/root] lsvg rootvg tiv_vg1 tiv_vg2 [root@tivaix1:/home/root] lspv hdisk0 0001813fe67712b5 rootvg active hdisk1 0001813f1a43a54d rootvg active hdisk2 0001813f95b1b360 rootvg active hdisk3 0001813fc5966b71 rootvg active hdisk4 0001813fc5c48c43 None hdisk5 0001813fc5c48d8c None hdisk6 000900066116088b tiv_vg1 hdisk7 000000000348a3d6 tiv_vg1 hdisk8 00000000034d224b tiv_vg2 hdisk9 0001813f72023fd6 None hdisk10 0001813f72025253 None hdisk11 0001813f71dd8f80 None hdisk12 00000000034d7fad tiv_vg2 hdisk13 none None

Re-import the volume group

Once we export a volume group, we import it back into the same cluster node we first exported it from. We then log into the other cluster nodes on the same SSA loop as the cluster node we create the volume group upon in "Configure volume group on SSA drives" on page 430, and import the volume group so we can make it a shared volume group.

To import the volume group back to the same cluster node we first exported it from:

-

Log into the cluster node as root user.

In our environment, we logged into tivaix1 as root user.

-

Use the lsvg command as shown in Example 5-16 to verify the volume group is not already imported.

Example 5-16: Verify volume group itmf_vg is not already imported into tivaix1

[root@tivaix1:/home/root] lsvg -l itmf_vg 0516-306 : Unable to find volume group i in the Device Configuration Database.

-

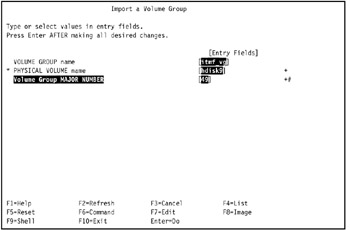

Enter: smit importvg. You can also enter: smit storage, go to Logical Volume Manager -> Volume Groups -> Import a Volume Group, and press Enter. The Import a Volume Group SMIT screen is displayed.

-

Enter the following values. Use the values determined in "Configure volume group on SSA drives" on page 430.

VOLUME GROUP name

The volume group name. The name must be unique system-wide, and can range from 1 to 15 characters.

PHYSICAL VOLUME name

The name of the physical volume. Physical volume names are typically in the form "hdiskx" where x is a system-wide unique number. This name is assigned when the disk is detected for the first time on a system startup or when the system management commands are used at runtime to add a disk to the system.

Volume Group MAJOR NUMBER

The major number of the volume group. The system kernel accesses devices, including volume groups, through a major and minor number combination. To see what major numbers are available on your system, use the SMIT "List" feature.

In our environment, we entered itmf_vg in the VOLUME GROUP name field, hdisk9 in the PHYSICAL VOLUME name field, and 49 in the Volume Group MAJOR NUMBER, as shown in Figure 5-16.

Figure 5-16: Import a volume group -

Press Enter to import the volume group. The COMMAND STATUS SMIT screen displays the progress and final result of the volume group import operation.

-

Vary on the volume group using the varyonvg command.

In our environment, we entered the command:

varyonvg itmf_vg

-

Use the lsvg command as shown in Example 5-17 to verify the volume group import.

Example 5-17: Verify import of volume group itmf_vg into tivaix1

[root@tivaix1:/home/root] lsvg -l itmf_vg itmf_vg: LV NAME TYPE LPs PPs PVs LV STATE MOUNT POINT itmf_loglv jfslog 1 1 1 closed/syncd N/A itmf_lv jfs 512 512 1 closed/syncd /opt/hativoli

-

Vary off the volume group using the varyoffvg command so you can import the volume group into the remaining cluster nodes.

In our environment, we entered the command:

varyoffvg itmf_vg

Import the volume group into the remaining cluster nodes

Import the volume group into the remaining cluster nodes so it becomes a shared volume group.

In our environment, we imported volume group itmf_vg into cluster node tivaix2.

| Note | Importing a volume group also varies it on, so be sure to vary it off first with the varyoffvg command if it is in the ONLINE state on a cluster node. |

To import a volume group defined on SSA drives so it becomes a shared volume group with other cluster nodes:

-

Log into another cluster node as root user.

In our environment, we logged into tivaix2 as root user.

-

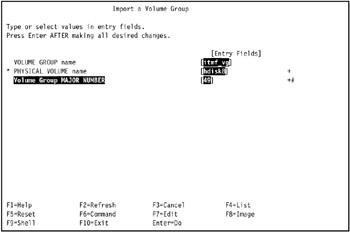

Enter the SMIT fast path command: smit importvg. You can also enter: smit storage, go to Logical Volume Manager -> Volume Groups -> Import a Volume Group, and press Enter. The Import a Volume Group SMIT screen is displayed.

-

Use the same volume group name that you used in the preceding operation for the VOLUME GROUP name field.

In our environment, we entered itmf_vg in the VOLUME GROUP name field.

-

Use the summary analysis table created in "Plan the shared disk" on page 417 to determine the logical disk to use. The volume group major number is the same on all cluster nodes, so use the same volume group major number as in the preceding operation.

In our environment, we observed that hdisk9 on tivaix1 corresponds to hdisk8 on tivaix2, so we used hdisk8 in the PHYSICAL VOLUME name field, as shown in Figure 5-17.

Figure 5-17: Import volume group itmf_vg on tivaix2 -

Press Enter to import the volume group. The COMMAND STATUS SMIT screen displays the progress and final result of the volume group import operation.

-

Use the lsvg and lspv commands to verify the volume group import. The output of these commands contains the name of the imported volume group.

In our environment, we verified the volume group import as shown in Example 5-18 on page 446.

Example 5-18: Verify the import of volume group itmf_vg into tivaix2

[root@tivaix2:/home/root] lsvg rootvg tiv_vg1 tiv_vg2 itmf_vg [root@tivaix2:/home/root] lsvg -l itmf_vg itmf_vg: LV NAME TYPE LPs PPs PVs LV STATE MOUNT POINT itmf_loglv jfslog 1 1 1 closed/syncd N/A itmf_lv jfs 512 512 1 closed/syncd /opt/hativoli [root@tivaix2:/home/root] lspv hdisk0 0001814f62b2a74b rootvg active hdisk1 none None hdisk2 none None hdisk3 none None hdisk4 none None hdisk5 000900066116088b tiv_vg1 hdisk6 000000000348a3d6 tiv_vg1 hdisk7 00000000034d224b tiv_vg2 hdisk8 0001813f72023fd6 itmf_vg active hdisk9 0001813f72025253 itmf_vg active hdisk10 0001813f71dd8f80 None hdisk11 00000000034d7fad tiv_vg2 hdisk12 0001814f7ce1d08d None hdisk16 0001814fe8d10853 None

-

Vary off the volume group using the varyoffvg command.

In our environment, we entered the following command into tivaix2:

varyoffvg itmf_vg

Verify the volume group sharing

Manually verify that all imported volume groups can be shared between cluster nodes before configuring HACMP. If volume group sharing fails under HACMP, manual verification usually allows you to rule out a problem in the configuration of the volume groups, and focus upon the definition of the shared volume groups under HACMP.

To verify volume group sharing:

-

Log into a cluster node as root user.

In our environment, we logged into tivaix1 as root user.

-

Verify the volume group is not already active on the cluster node. Use the lsvg command as shown in Example 5-19 on page 447. The name of the volume group does not appear in the output of the command if the volume group is not active on the cluster node.

Example 5-19: Verify a volume group is not already active on a cluster node

[root@tivaix1:/home/root] lsvg -o rootvg

-

Vary on the volume group using the varyonvg command.

In our environment, we entered the command:

varyonvg itmf_vg

-

Use the lspv and lsvg commands as shown in Example 5-20 to verify the volume group is put into the ONLINE state. The name of the volume group appears in the output of these commands now, where it did not before.

Example 5-20: How to verify volume group itmf_vg is online on tivaix1

[root@tivaix1:/home/root] lsvg -o itmf_vg rootvg [root@tivaix1:/home/root] lsvg -l itmf_vg itmf_vg: LV NAME TYPE LPs PPs PVs LV STATE MOUNT POINT itmf_loglv jfslog 1 1 1 closed/syncd N/A itmf_lv jfs 512 512 1 closed/syncd /opt/hativoli [root@tivaix1:/home/root] lspv hdisk0 0001813fe67712b5 rootvg active hdisk1 0001813f1a43a54d rootvg active hdisk2 0001813f95b1b360 rootvg active hdisk3 0001813fc5966b71 rootvg active hdisk4 0001813fc5c48c43 None hdisk5 0001813fc5c48d8c None hdisk6 000900066116088b tiv_vg1 hdisk7 000000000348a3d6 tiv_vg1 hdisk8 00000000034d224b tiv_vg2 hdisk9 0001813f72023fd6 itmf_vg active hdisk10 0001813f72025253 itmf_vg active hdisk11 0001813f71dd8f80 None hdisk12 00000000034d7fad tiv_vg2 hdisk13 none None

-

Use the df, mount, touch, and ls and umount commands to verify the availability of the logical volume, and to create a test file. The file system and mount point changes after mounting the logical volume.

In our environment, we created the test file /opt/hativoli/node_tivaix1.

Example 5-21: Verify availability of a logical volume in a shared volume group

[root@tivaix1:/home/root] df -k /opt/hativoli Filesystem 1024-blocks Free %Used Iused %Iused Mounted on /dev/hd10opt 262144 68724 74% 3544 6% /opt [root@tivaix1:/home/root] mount /opt/hativoli [root@tivaix1:/home/root] df -k /opt/hativoli Filesystem 1024-blocks Free %Used Iused %Iused Mounted on /dev/itmf_lv 2097152 2031276 4% 17 1% /opt/hativoli [root@tivaix1:/home/root] touch /opt/hativoli/node_tivaix1 [root@tivaix1:/home/root] ls -l /opt/hativoli/node_tivaix* -rw-r--r-- 1 root sys 0 Dec 17 15:25 /opt/hativoli/node_tivaix1 [root@tivaix1:/home/root] umount /opt/hativoli

-

Vary off the volume group using the varyoffvg command.

In our environment, we used the command:

varyoffvg itmf_vg

-

Repeat the operation on all remaining cluster nodes. Ensure test files created on other cluster nodes sharing this volume group exist.

In our environment, we repeated the operation on tivaix2 as shown in Example 5-22.

Example 5-22: Verify shared volume group itmf_vg on tivaix2

[root@tivaix2:/home/root] lsvg -o rootvg [root@tivaix2:/home/root] varyonvg itmf_vg [root@tivaix2:/home/root] lsvg -o itmf_vg rootvg [root@tivaix2:/home/root] lsvg -l itmf_vg itmf_vg: LV NAME TYPE LPs PPs PVs LV STATE MOUNT POINT itmf_loglv jfslog 1 1 1 closed/syncd N/A itmf_lv jfs 512 512 1 closed/syncd /opt/hativoli [root@tivaix2:/home/root] lspv hdisk0 0001814f62b2a74b rootvg active hdisk1 none None hdisk2 none None hdisk3 none None hdisk4 none None hdisk5 000900066116088b tiv_vg1 hdisk6 000000000348a3d6 tiv_vg1 hdisk7 00000000034d224b tiv_vg2 hdisk8 0001813f72023fd6 itmf_vg active hdisk9 0001813f72025253 itmf_vg active hdisk10 0001813f71dd8f80 None hdisk11 00000000034d7fad tiv_vg2 hdisk12 0001814f7ce1d08d None hdisk16 0001814fe8d10853 None [root@tivaix2:/home/root] df -k /opt/hativoli Filesystem 1024-blocks Free %Used Iused %Iused Mounted on /dev/hd10opt 262144 29992 89% 3587 6% /opt [root@tivaix2:/home/root] mount /opt/hativoli [root@tivaix2:/home/root] df -k /opt/hativoli Filesystem 1024-blocks Free %Used Iused %Iused Mounted on /dev/itmf_lv 2097152 2031276 4% 17 1% /opt/hativoli [root@tivaix2:/home/root] touch /opt/hativoli/node_tivaix2 [root@tivaix2:/home/root] ls -l /opt/hativoli/node_tivaix* -rw-r--r-- 1 root sys 0 Dec 17 15:25 /opt/hativoli/node_tivaix1 -rw-r--r-- 1 root sys 0 Dec 17 15:26 /opt/hativoli/node_tivaix2 [root@tivaix2:/home/root] umount /opt/hativoli [root@tivaix2:/home/root] varyoffvg itmf_vg

5.1.4 Install IBM Tivoli Management Framework

In this section we show how to install IBM Tivoli Management Framework Version 4.1 with all available patches as of the time of writing; specifically, how to install on tivaix1 in the environment used for this redbook. We only need to install once, because we used a hot standby configuration. After installing IBM Tivoli Management Framework, we describe how to install and configure HACMP for it on both tivaix1 and tivaix2.

Concurrent access requires application support of the Cluster Lock Manager. IBM Tivoli Management Framework does not support Cluster Lock Manager, so we use shared Logical Volume Manager (LVM) access.

Plan for high availability considerations

We install the IBM Tivoli Management Framework before installing and configuring HACMP—so if IBM Tivoli Management Framework exhibits problems after introducing HACMP, we will know the root cause is likely an HACMP configuration issue.

It helps the overall deployment if we plan around some of the high availability considerations while installing IBM Tivoli Management Framework.

Installation directories

IBM Tivoli Management Framework uses the following directories on a Tivoli server:

-

/etc/Tivoli

-

Tivoli home directory, where IBM Tivoli Management Framework is installed under, and most Tivoli Enterprise products are usually installed in.

| Important: | These are not the only directories used in a Tivoli Enterprise deployment of multiple IBM Tivoli products. |

In our environment, we left /etc/Tivoli on the local drives of each cluster node. This enabled the flexibility to easily use multiple, local Endpoint installations on each cluster node. Putting /etc/Tivoli on the shared disk volume is possible, but it involves adding customized start and stop HACMP scripts that would "shuffle" the contents of /etc/Tivoli depending upon what Endpoints are active on a cluster node.

We use /opt/hativoli as the Tivoli home directory. Following best practice, we first install IBM Tivoli Management Framework into /opt/hativoli, then install and configure HACMP.

| Note | In an actual production deployment, best practice is to implement /etc/Tivoli on a shared volume group because leaving it on the local disk of a system involves synchronizing the contents of highly available Endpoints across cluster nodes. |

Associated IP addresses

Configuring the Tivoli server as a resource group in a hot standby two-node cluster requires that the IP addresses associated with the server remain with the server, regardless of which cluster node it runs upon. The IP address associated with the installation of the Tivoli server should be the service IP address. When the cluster node the Tivoli server is running on falls over, the service IP label falls over to the new cluster node, along with the resource group that contains the Tivoli server.

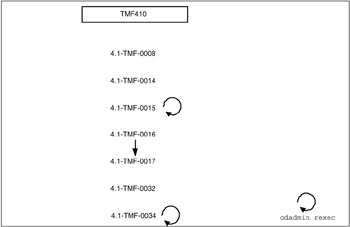

Plan the installation sequence

Before installing, plan the sequence of the packages you are going to install. Refer to Tivoli Enterprise Installation Guide Version 4.1, GC32-0804, for detailed information about what needs to be installed. Figure 5-18 on page 451 shows the sequence and dependencies of packages we planned for IBM Tivoli Management Framework Version 4.1 for the environment we used for this redbook.

Figure 5-18: IBM Tivoli Framework 4.1.0 application and patch sequence and dependencies as of December 2, 2003

Stage installation media

Complete the procedures listed in "Stage installation media" on page 313 to stage the IBM Tivoli Management Framework installation media.

Modify /etc/hosts and name resolution order

Complete the procedures in "Modify /etc/hosts and name resolution order" on page 250 to configure IP hostname lookups.

Install base Framework

In this section we show you how to install IBM Tivoli Management Framework so that it is specifically configured for IBM Tivoli Workload Scheduler on HACMP. This enables you to transition the instances of IBM Tivoli Management Framework used for IBM Tivoli Workload Scheduler to a mutual takeover environment if that becomes a supported feature in the future. We believe the configuration as shown in this section can be started and stopped directly from HACMP in a mutual takeover configuration.

When installing IBM Tivoli Management Framework on an HACMP cluster node in support of IBM Tivoli Workload Scheduler, use the primary IP hostname as the hostname for IBM Tivoli Management Framework. Add an IP alias later for the service IP label. When this configuration is used with the multiple Connector object configuration described in section, this enables Job Scheduling Console users to connect through any instance of IBM Tivoli Management Framework, no matter which cluster nodes fall over.

IBM Tivoli Management Framework itself consists of a base install, and various components. You must first prepare for the base install by performing the commands as shown in Example 5-23 for cluster node tivaix1 in our environment. On tivaix2, we replace the IP hostname in the first command from tivaix1_svc to tivaix2_svc.

Example 5-23: Preparing for installation of IBM Tivoli Management Framework 4.1

[root@tivaix1:/home/root] HOST=tivaix1_svc [root@tivaix1:/home/root] echo $HOST > /etc/wlocalhost [root@tivaix1:/home/root] WLOCALHOST=$HOST [root@tivaix1:/home/root] export WLOCALHOST [root@tivaix1:/home/root] mkdir /opt/hativoli/install_dir [root@tivaix1:/home/root] cd /opt/hativoli/install_dir [root@tivaix1:/opt/hativoli/install_dir] /bin/sh \ > /usr/sys/inst.images/tivoli/fra/FRA410_1of2/WPREINST.SH to install, type ./wserver -c /usr/sys/inst.images/tivoli/fra/FRA410_1of2 [root@tivaix1:/opt/hativoli/install_dir] DOGUI=no [root@tivaix1:/opt/hativoli/install_dir] export DOGUI

After you prepare for the base install, perform the initial installation of IBM Tivoli Management Framework by running the command shown in Example 5-24. You will see output similar to this example; depending upon the speed of your server, it will take 5 to 15 minutes to complete.

Example 5-24: Initial installation of IBM Tivoli Management Framework Version 4.1

[root@tivaix1:/home/root] cd /usr/local/Tivoli/install_dir [root@tivaix1:/usr/local/Tivoli/install_dir] sh ./wserver -y \ -c /usr/sys/inst.images/tivoli/fra/FRA410_1of2 \ -a tivaix1_svc -d \ BIN=/opt/hativoli/bin! \ LIB=/opt/hativoli/lib! \ ALIDB=/opt/hativoli/spool! \ MAN=/opt/hativoli/man! \ APPD=/usr/lib/lvm/X11/es/app-defaults! \ CAT=/opt/hativoli/msg_cat! \ LK=1FN5B4MBXBW4GNJ8QQQ62WPV0RH999P99P77D \ RN=tivaix1_svc-region \ AutoStart=1 SetPort=1 CreatePaths=1 @ForceBind@=yes @EL@=None Using command line style installation..... Unless you cancel, the following operations will be executed: need to copy the CAT (generic) to: tivaix1_svc:/opt/hativoli/msg_cat need to copy the CSBIN (generic) to: tivaix1_svc:/opt/hativoli/bin/generic need to copy the APPD (generic) to: tivaix1_svc:/usr/lib/lvm/X11/es/app-defaults need to copy the GBIN (generic) to: tivaix1_svc:/opt/hativoli/bin/generic_unix need to copy the BUN (generic) to: tivaix1_svc:/opt/hativoli/bin/client_bundle need to copy the SBIN (generic) to: tivaix1_svc:/opt/hativoli/bin/generic need to copy the LCFNEW (generic) to: tivaix1_svc:/opt/hativoli/bin/lcf_bundle.40 need to copy the LCFTOOLS (generic) to: tivaix1_svc:/opt/hativoli/bin/lcf_bundle.40/bin need to copy the LCF (generic) to: tivaix1_svc:/opt/hativoli/bin/lcf_bundle need to copy the LIB (aix4-r1) to: tivaix1_svc:/opt/hativoli/lib/aix4-r1 need to copy the BIN (aix4-r1) to: tivaix1_svc:/opt/hativoli/bin/aix4-r1 need to copy the ALIDB (aix4-r1) to: tivaix1_svc:/opt/hativoli/spool/tivaix1.db need to copy the MAN (aix4-r1) to: tivaix1_svc:/opt/hativoli/man/aix4-r1 need to copy the CONTRIB (aix4-r1) to: tivaix1_svc:/opt/hativoli/bin/aix4-r1/contrib need to copy the LIB371 (aix4-r1) to: tivaix1_svc:/opt/hativoli/lib/aix4-r1 need to copy the LIB365 (aix4-r1) to: tivaix1_svc:/opt/hativoli/lib/aix4-r1 Executing queued operation(s) Distributing machine independent Message Catalogs --> tivaix1_svc ..... Completed. Distributing machine independent generic Codeset Tables --> tivaix1_svc .... Completed. Distributing architecture specific Libraries --> tivaix1_svc ...... Completed. Distributing architecture specific Binaries --> tivaix1_svc ............. Completed. Distributing architecture specific Server Database --> tivaix1_svc .......................................... Completed. Distributing architecture specific Man Pages --> tivaix1_svc ..... Completed. Distributing machine independent X11 Resource Files --> tivaix1_svc ... Completed. Distributing machine independent Generic Binaries --> tivaix1_svc ... Completed. Distributing machine independent Client Installation Bundle --> tivaix1_svc ... Completed. Distributing machine independent generic HTML/Java files --> tivaix1_svc ... Completed. Distributing architecture specific Public Domain Contrib --> tivaix1_svc ... Completed. Distributing machine independent LCF Images (new version) --> tivaix1_svc ............. Completed. Distributing machine independent LCF Tools --> tivaix1_svc ....... Completed. Distributing machine independent 36x Endpoint Images --> tivaix1_svc ............ Completed. Distributing architecture specific 371_Libraries --> tivaix1_svc .... Completed. Distributing architecture specific 365_Libraries --> tivaix1_svc .... Completed. Registering installation information...Finished.

On tivaix2 in our environment, we run the same command except we change the third line of the command from tivaix1_svc to tivaix2_svc.

Load Tivoli environment variables in .profile files

The Tivoli environment variables contain pointers to important directories that IBM Tivoli Management Framework uses for many commands. Loading the variables in the .profile file of a user account ensures that these environment variables are always available immediately after logging into the user account.

Use the commands in Example 5-25 to modify the .profile files of the root user account on all cluster nodes to source in all Tivoli environment variables for IBM Tivoli Management Framework.

Example 5-25: Load Tivoli environment variables on tivaix1

PATH=${PATH}:${HOME}/bin if [ -f /etc/Tivoli/setup_env.sh ] ; then . /etc/Tivoli/setup_env.sh fi Also enter these commands on the command line, or log out and log back in to activate the environment variables for the following sections.

Install Framework components and patches

After the base install is complete, you can install all remaining Framework components and patches by running the script shown in Example 5-26. If you use this script on tivaix2, change the line that starts with the string "HOST=" so that tivaix1 is replaced with tivaix2.

Example 5-26: Script for installing IBM Tivoli Management Framework Version 4.1 with patches

#!/bin/ksh if [ -d /etc/Tivoli ] ; then . /etc/Tivoli/setup_env.sh fi reexec_oserv() { echo "Reexecing object dispatchers..." if [ `odadmin odlist list_od | wc -l` -gt 1 ] ; then # # Determine if necessary to shut down any clients tmr_hosts=`odadmin odlist list_od | head -1 | cut -c 36-` client_list=`odadmin odlist list_od | grep -v ${tmr_hosts}$` if [ "${client_list}" = "" ] ; then echo "No clients to shut down, skipping shut down of clients..." else echo "Shutting down clients..." odadmin shutdown clients echo "Waiting for all clients to shut down..." sleep 30 fi fi odadmin reexec 1 sleep 30 odadmin start clients } HOST="tivaix1_svc" winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_2of2/JAVA -y -i JRE130 $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_2of2/JAVA -y -i JHELP41 $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_2of2/JAVA -y -i JCF41 $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_2of2/JAVA -y -i JRIM41 $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_2of2/JAVA -y -i MDIST2GU $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_2of2/JAVA -y -i SISDEPOT $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_2of2/JAVA -y -i SISCLNT $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_1of2 -y -i ADE $HOST winstall -c /usr/sys/inst.images/tivoli/fra/FRA410_1of2 -y -i AEF $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF008 -y -i 41TMF008 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF014 -y -i 41TMF014 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF015 -y -i 41TMF015 $HOST reexec_oserv wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF016 -y -i 41TMF016 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2928 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2929 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2931 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2932 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2962 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2980 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2984 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2986 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2987 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF017 -y -i TMA2989 $HOST wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF034 -y -i 41TMF034 $HOST reexec_oserv wpatch -c /usr/sys/inst.images/tivoli/fra/41TMF032 -y -i JRE130_0 $HOST This completes the installation of IBM Tivoli Management Framework Version 4.1. The successful completion of the installation performs a gross level verification of IBM Tivoli Management Framework.

After installing IBM Tivoli Management Framework, configure it to meet the requirements of integrating with IBM Tivoli Workload Scheduler over HACMP.

Add IP alias to oserv

Installing IBM Tivoli Management Framework using the service IP hostname of the server binds the Framework server (also called oserv) to the corresponding service IP address.

It only listens for Framework network traffic on this IP address. This ensures a highly available IBM Tivoli Management Framework only starts after HACMP is running.

In our environment, we also need oserv to listen on the persistent IP address. The persistent IP label/address is not moved between cluster nodes when a resource group is moved, but remains on the cluster node to ease administrative access (that is why it is called the persistent IP label/address). Job Scheduling Console users depend upon using the service IP address to access IBM Tivoli Workload Scheduler services.

As a security precaution, IBM Tivoli Management Framework only listens on the IP address it is initially installed against unless the feature specifically disabled to bind against other addresses. We show you how to disable this feature in this section.

To add the service IP label as a Framework oserv IP alias:

-

Log in as root user on a cluster node.

In our environment, we logged in as root user on cluster node tivaix1.

-

Use the odadmin command as shown in Example 5-27 to verify the current IP aliases of the oserv, add the service IP label as an IP alias to the oserv, and then verify that the service IP label is added to the oserv as an IP alias.

Example 5-27: Add IP alias to Framework oserv server

[root@tivaix1:/home/root] odadmin odlist Region Disp Flags Port IPaddr Hostname(s) 1369588498 1 ct- 94 9.3.4.3 tivaix1_svc [root@tivaix1:/home/root] odadmin odlist add_ip_alias 1 tivaix1 [root@tivaix1:/home/root] odadmin odlist Region Disp Flags Port IPaddr Hostname(s) 1369588498 1 ct- 94 9.3.4.3 tivaix1_svc 9.3.4.194 tivaix1,tivaix1.itsc.austin.ibm.com

Note that the numeral 1 in the odadmin odlist add_ip_alias command should be replaced by the dispatcher number of your Framework installation.

The dispatcher number is displayed in the second column of the odadmin odlist command, on the same line as the primary IP hostname of your Framework installation. In Example 5-28, the dispatcher number is 7.

Example 5-28: Identify dispatcher number of Framework installation

[root@tivaix1:/home/root] odadmin odlist Region Disp Flags Port IPaddr Hostname(s) 1369588498 7 ct- 94 9.3.4.3 tivaix1_svc

The dispatcher number will be something other than 1 if you delete and re-install Managed Nodes, or if your Framework server is part of an overall Tivoli Enterprise installation.

-

Use the odadmin command as shown in Example 5-29 to verify that IBM Tivoli Management Framework currently binds against the primary IP hostname, then disable the feature, and then verify that it is disabled.

Example 5-29: Disable set_force_bind object dispatcher option

[root@tivaix1:/home/root] odadmin | grep Force Force socket bind to a single address = TRUE [root@tivaix1:/home/root] odadmin set_force_bind FALSE 1 [root@tivaix1:/home/root] odadmin | grep Force Force socket bind to a single address = FALSE

Note that the numeral 1 in the odadmin set_force_bind command should be replaced by the dispatcher number of your Framework installation.

The dispatcher number is displayed in the second column of the odadmin odlist command, on the same line as the primary IP hostname of your Framework installation. In Example 5-30, the dispatcher number is 7.

Example 5-30: Identify dispatcher number of Framework installation

[root@tivaix1:/home/root] odadmin odlist Region Disp Flags Port IPaddr Hostname(s) 1369588498 7 ct- 94 9.3.4.3 tivaix1_svc

The dispatcher number will be something other than 1 if you delete and re-install Managed Nodes, or if your Framework server is part of an overall Tivoli Enterprise installation.

Important: Disabling the set_force_bind variable can cause unintended side effects for installations of IBM Tivoli Management Framework that also run other IBM Tivoli server products, such as IBM Tivoli Monitoring and IBM Tivoli Configuration Manager. Consult your IBM service provider for advice on how to address this potential conflict if you plan on deploying other IBM Tivoli server products on top of the instance of IBM Tivoli Management Framework that you use for IBM Tivoli Workload Scheduler.

Best practice is to dedicate an instance of IBM Tivoli Management Framework for IBM Tivoli Workload Scheduler, typically on the Master Domain Manager, and not to install other IBM Tivoli server products into it. This simplifies these administrative concerns and does not affect the functionality of a Tivoli Enterprise environment.

-

Repeat the operation on all remaining cluster nodes.

For our environment, we repeat the operation on tivaix2, replacing tivaix1 with tivaix2 in the commands.

Move the .tivoli directory

The default installation of IBM Tivoli Management Framework on a UNIX system creates the /tmp/.tivoli directory. This directory contains files that are required by the object dispatcher process. In a high availability implementation, the directory needs to move with the resource group that contains IBM Tivoli Management Framework. This means we need to move the directory into the shared volume group's file system. In our environment, we moved the directory to /opt/hativoli/tmp/.tivoli.

To use a different directory, you must set an environment variable in both the object dispatcher and the shell. After installing IBM Tivoli Management Framework, perform the following steps to set the necessary environment variables:

-

Create a directory. This directory must have at least public read and write permissions. However, define full permissions and set the sticky bit to ensure that users cannot modify files that they do not own.

In our environment, we ran the commands shown in Example 5-31.

Example 5-31: Create the new .tivoli directory

mkdir -p /opt/hativoli/tmp/.tivoli chmod ugo=rwx /opt/hativoli/tmp/.tivoli chmod u+s /opt/hativoli/tmp/.tivoli

-

Set the environment variable in the object dispatcher:

-

Enter the following command:

odadmin environ get > envfile

-

Add the following line to the envfile file and save it:

TIVOLI_COMM_DIR=new_directory_name

-

Enter the following command:

odadmin environ set < envfile

-

-

Edit the Tivoli-provided set_env.csh, setup_env.sh, and oserv.rc files in the /etc/Tivoli directory to set the TIVOLI_COMM_DIR variable.

-

For HP-UX and Solaris systems, add the following line to the file that starts the object dispatcher:

TIVOLI_COMM_DIR=new_directory_name

Insert the line near where the other environment variables are set, in a location that runs before the object dispatcher is started. The following list contains the file that needs to be changed on each operating system:

-

For HP-UX operating systems: /sbin/init.d/Tivoli

-

For Solaris operating systems: /etc/rc3.d/S99Tivoli

-

-

Shut down the object dispatcher by entering the following command:

odadmin shutdown all

-

Restart the object dispatcher by entering the following command:

odadmin reexec all

5.1.5 Tivoli Web interfaces

IBM Tivoli Management Framework provides access to Web-enabled Tivoli Enterprise applications from a browser. When a browser sends an HTTP request to the Tivoli server, the request is redirected to a Web server. IBM Tivoli Management Framework provides this Web access by using some servlets and support files that are installed on the Web server. The servlets establish a secure connection between the Web server and the Tivoli server. The servlets and support files are called the Tivoli Web interfaces.

IBM Tivoli Management Framework provides a built-in Web server called the spider HTTP service. It is not as robust or secure as a third-party Web server, so if you plan on deploying a Tivoli Enterprise product that requires Web access, consult your IBM service provider for advice about selecting a more appropriate Web server.

IBM Tivoli Management Framework supports any Web server that implements the Servlet 2.2 specifications, but the following Web servers are specifically certified for use with IBM Tivoli Management Framework:

-

IBM WebSphere Application Server, Advanced Single Server Edition

-

IBM WebSphere Application Server, Enterprise Edition

-

IBM WebSphere Enterprise Application Server

-

Jakarta Tomcat

The Web server can be hosted on any computer system. If you deploy a Web server on a cluster node, you will likely want to make it highly available. In this redbook we focus upon high availability for IBM Tivoli Workload Scheduler and IBM Tivoli Management Framework. Refer to IBM WebSphere V5.0 Performance, Scalability, and High Availability: WebSphere Handbook Series, SG24-6198-00, for details on configuring WebSphere Application Server for high availability. Consult your IBM service provider for more details on configuring other Web servers for high availability.

5.1.6 Tivoli Managed Node

Managed Nodes are no different from IBM Tivoli Management Framework Tivoli servers in terms of high availability design. They operate under the same constraint of only one instance per operating system instance. While the AutoStart install variable of the wclient command implies we can configure multiple instances of the object dispatcher on a single operating system instance, IBM Tivoli Support staff confirmed for us that this is not a supported configuration at the time of writing.

Use the wclient command to install a Managed Node in a highly available cluster, as shown in Example 5-32.

Example 5-32: Install a Managed Node

wclient -c /usr/sys/inst.images/tivoli/fra/FRA410_1of2 \ -p ibm.tiv.pr -P @AutoStart@=0 @ForceBind@=yes \ BIN=/opt/hativoli/bin! \ LIB=/opt/hativoli/lib! \ DB=/opt/hativoli/spool! \ MAN=/opt/hativoli/man! \ APPD=/usr/lib/lvm/X11/es/app-defaults! \ CAT=/opt/hativoli/msg_cat! \ tivaix3_svc

In this example, we installed a Managed Node named tivaix3_svc on a system with the IP hostname tivaix3_svc (the service IP label of the cluster node) from the CD image we copied to the local drive in "Stage installation media" on page 451, into the directory /opt/hativoli. We also placed the managed resource object in the ibm.tiv.pr policy region. See about how to use the wclient command.

Except for the difference in the initial installation (using the wclient command instead of the wserver command), planning and implementing a highly available Managed Node is the same as for a Tivoli server, as described in the preceding sections.

If the constraint is lifted in future versions of IBM Tivoli Management Framework, or if you still want to install multiple instances of the object dispatcher on a single instance of an operating system, configure each instance with a different directory.

To configure a different directory, change the BIN, LIB, DB, MAN, CAT and (optionally) APPD install variables that are passed to the wclient command. Configure the Tivoli environment files and the oserv.rc executable in /etc/Tivoli to accommodate the multiple installations. Modify external dependencies upon /etc/Tivoli where appropriate. We recommend using multiple, separate directories, one for each instance of IBM Tivoli Management Framework. Consult your IBM service provider for assistance with configuring this design.

5.1.7 Tivoli Endpoints

Endpoints offer more options for high availability designs. When designing a highly available Tivoli Enterprise deployment, best practice is to keep the number of Managed Nodes as low as possible, and to use Endpoints as much as possible. In some cases (such as, for very old versions of Plus Modules) this might not be feasible, but the benefits of using Endpoints can often justify the cost of refactoring these older products into an Endpoint form.

Unlike Managed Nodes, multiple Endpoints on a single instance of an operating system are supported. This opens up many possibilities for high availability design. One design is to create an Endpoint to associate with a highly available resource group on a shared volume group, as shown in Figure 5-19.

Figure 5-19: Normal operation of highly available Endpoint

Under normal operation, cluster node tivaix1 runs the highly available Endpoint from the directory /opt/hativoli/lcf on the shared volume group. When the resource group falls over, tivaix1 is unavailable and the resource group moves to tivaix2. The Endpoint continues to listen on the IP service address of tivaix1, but runs off tivaix2 instead, as shown in Figure 5-20.

Figure 5-20: Fallover operation of highly available Endpoint

We recommend that you use this configuration to manage HACMP resource group-specific system resources. Examples of complementary IBM Tivoli products that leverage Endpoints in a highly available environment include:

-

Monitor a file system in a resource group with IBM Tivoli Monitoring.

-

Monitor a highly available database in a resource group with IBM Tivoli Monitoring for Databases.

-

Inventory and distribute software used in a resource group with IBM Tivoli Configuration Manager.

-

Enforce software license compliance of applications in a resource group with IBM Tivoli License Manager.

Specific IBM Tivoli products may have specific requirements that affect high availability planning and implementation. Consult your IBM service provider for assistance with planning and implementing other IBM Tivoli products on top of a highly available Endpoint.

Another possible design builds on top of a single highly available Endpoint. The highly available Endpoint is sufficient for managing the highly available resource group, but is limited in its ability to manage the cluster hardware. A local instance of an Endpoint can be installed to specifically manage compute resources associated with each cluster node.

For example, assume we use a cluster configured with a resource group for a highly available instance of IBM WebSphere Application Server. The environment uses IBM Tivoli Monitoring for Web Infrastructure to monitor the instance of IBM WebSphere Application Server in the resource group. This is managed through a highly available Endpoint that moves with the Web server's resource group. It also needs to use IBM Tivoli Monitoring to continuously monitor available local disk space on each cluster node.

In one possible fallover scenario, the resource group moves from one cluster node to another such that it leaves both the source and destination cluster nodes running. A highly available Endpoint instance can manage the Web server because they both move with a resource group, but it will no longer be able to manage hardware-based resources because the cluster node hardware itself is changed when the resource group moves.

Under this design, the normal operation of the cluster we used for this redbook is shown in Figure 5-21 on page 465.

Figure 5-21: Normal operation of local and highly available Endpoints

In normal operation then, three Endpoints are running. If the cluster moves the resource group containing the highly available Endpoint from tivaix1 to tivaix2, the state of the cluster would still leave three Endpoints, as shown in Figure 5-22 on page 466.

Figure 5-22: Cluster state after moving highly available Endpoint to tivaix2

However, if cluster node tivaix1 fell over to tivaix2 instead, it would leave only two Endpoint instances running, as shown in Figure 5-23 on page 467.

Figure 5-23: Cluster state after falling over tivaix1 to tivaix2

In each scenario in this alternate configuration, an Endpoint instance is always running on all cluster nodes that remain operational, even if HACMP on that cluster node is not running. As long as the system is powered up and the operating system functional, the local Endpoint remains to manage that system.

In this redbook we show how to install and configure a highly available Endpoint, then add a local Endpoint to the configuration. We use the same two-node cluster used throughout this document as the platform upon which we implement this configuration.

Endpoints require a Gateway in the Tivoli environment to log into so they can reach the Endpoint Manager. In our environment, we create a Gateway using the wcrtgate command, and verify the operation using the wlookup and wgateway commands as shown in Example 5-33 on page 468.

Example 5-33: Create a Gateway on tivaix1

[root@tivaix1:/home/root] wlookup -Lar Gateway [root@tivaix1:/home/root] wcrtgate -h tivaix1 -n tivaix1-gateway 1369588498.1.680#TMF_Gateway::Gateway# [root@tivaix1:/home/root] wlookup -Lar Gateway tivaix1-gateway [root@tivaix1:/home/root] wgateway tivaix1-gateway describe Object : 1369588498.1.680#TMF_Gateway::Gateway# Protocols : TCPIP Hostname : tivaix1 TCPIP Port : 9494 Session Timeout : 300 Debug level : 0 Start Time : 2003/12/22-18:53:05 Log Dir : /opt/hativoli/spool/tivaix1.db Log Size : 1024000 RPC Threads : 250 Max. Con. Jobs : 200 Gwy Httpd : Disabled mcache_bwcontrol : Disabled

In Example 5-33, we create a Gateway named tivaix1-gateway on the Managed Node tivaix1. Best practice is to design and implement multiple sets of Gateways, each set geographically dispersed when possible, to ensure that Endpoints always have a Gateway to log into.

Gateways are closely related to repeaters. Sites that use IBM Tivoli Configuration Manager might want to consider using two parallel sets of Gateways to enable simultaneous use of inventory and software distribution operations, which require different bandwidth throttling characteristics. See Tivoli Enterprise Installation Guide Version 4.1, GC32-0804, for more information about how to design a robust Gateway architecture.

As long as at least one Gateway is created, all Endpoints in a Tivoli Enterprise installation can log into that Gateway. To install a highly available Endpoint:

-

Use the wlookup command to verify that the Endpoint does not already exist.

In our environment, no Endpoints have been created yet, so the command does not return any output, as shown in Example 5-34.

Example 5-34: Verify no Endpoints exist within a Tivoli Enterprise installation

[root@tivaix1:/home/root] wlookup -Lar Endpoint [root@tivaix1:/home/root]

-

Use the winstlcf command as shown in Example 5-35 to install the Endpoint. Refer to Tivoli Management Framework Reference Manual Version 4.1, SC32-0806 for details about how to use the winstlcf command.

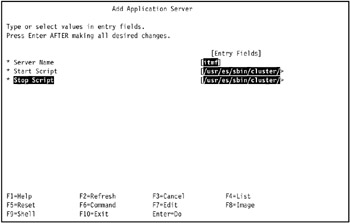

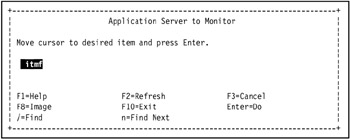

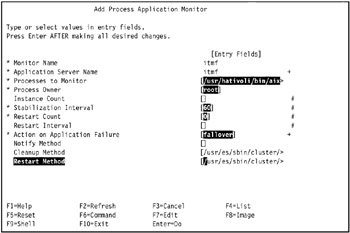

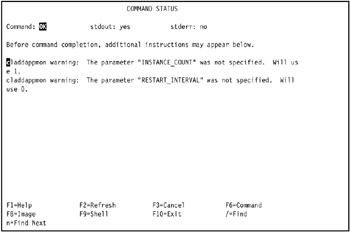

Example 5-35: Install a highly available Endpoint on cluster node tivaix1