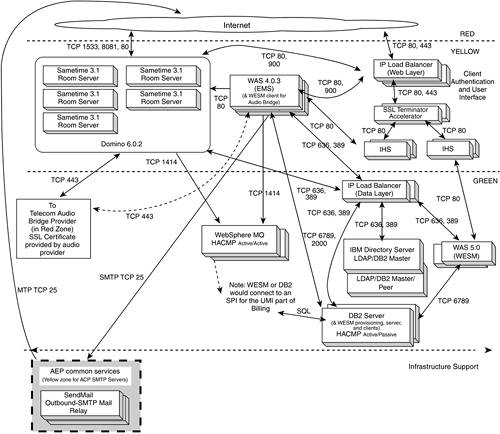

| In this section, we discuss the design of a project that provides an "on demand" Web conferencing service and that incorporates both Domino and WAS product functions. It illustrates various ways in which Domino and WAS-based products can be combined to build applications that provide complex function without a lot of custom application development.

The basic function of the service is to allow anyone with Internet access to register, schedule, and join browser-based conferences. The conferences include chat, multimedia, and whiteboard sharing functions. The Domino-based Lotus Instant Messaging (née Sametime) Room Server product is used for hosting the Web conferences. Registration for the Web conferences is handled by the WAS-based WebSphere Everyplace Subscription Manager (WESM) product. The Lotus Enterprise Meeting Server (EMS) product, which is a J2EE application and thus WAS-based, is used to manage the meetings and perform load balancing for the Sametime room servers. The EMS keeps track of registered users as they log in to and exit meetings. The Sametime room servers run on Domino servers, as they don't require transaction capability.

The user registry is provided by the IBM Directory Server product and is shared across the room, subscription, and meeting servers. There was also a requirement for an outbound mail function, and this was provided by a stand-alone SMTP server. The project was designed for 24x7 availability, so many of the server nodes were built as clusters using an IBM clustering product, HACMP.

Figure 7-1 shows the overall architecture of the service and where the different types of servers are placed in the network tier layout. Note that, because of product requirements, not all the WAS servers are at the same WAS version. WESM runs on WAS V5, but the EMS product supports only up to WAS V4. For this reason, the WESM and EMS servers were built on separate server systems.

Figure 7-1. Web Conferencing logical server architecture.

Directory Master-Peer Design for High Availability

The directory function of the Web conferencing service had two key requirements: that it be shared among the functional servers (e.g., for single sign-on) and that it be highly available. The approach taken was to place the directory server behind a shared load balancer and to make use of a master-peer replication topology provided by the IBM Directory Server product. Figure 7-2 depicts the directory server configuration.

Figure 7-2. Design for the shared directory.
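
The master-peer routing used in this design (requests go to the master, the peer takes over while the master is down, and writes accepted by the peer are queued for replication until the master returns) can be modelled in a few lines. The following is only a toy in-memory sketch: the class and attribute names are hypothetical, it does not use any IBM Directory Server API, and it assumes the peer itself stays up.

```python
from dataclasses import dataclass, field


@dataclass
class DirectoryServer:
    """Hypothetical in-memory stand-in for one directory server."""
    name: str
    up: bool = True
    entries: dict = field(default_factory=dict)


class MasterPeerDirectory:
    """Toy model of master-peer routing behind one virtual address.

    Assumption: only master outages are modelled; the peer is always up.
    """

    def __init__(self) -> None:
        self.master = DirectoryServer("master")
        self.peer = DirectoryServer("peer")
        self.pending = []  # writes the peer accepted while the master was down

    def write(self, dn: str, attrs: dict) -> str:
        """Apply a write and return the name of the server that accepted it."""
        if self.master.up:
            self.master.entries[dn] = attrs
            self.peer.entries[dn] = attrs  # replicate to the peer right away
            return self.master.name
        self.peer.entries[dn] = attrs
        self.pending.append((dn, attrs))  # replay once the master is back
        return self.peer.name

    def read(self, dn: str):
        """Read from the master, or from the peer while the master is down."""
        server = self.master if self.master.up else self.peer
        return server.entries.get(dn)

    def restart_master(self) -> None:
        """Bring the master back and replay the writes queued on the peer."""
        self.master.up = True
        for dn, attrs in self.pending:
            self.master.entries[dn] = attrs
        self.pending.clear()
```

In the real service the redirection is done by the load balancer and the replication by the directory product itself; the sketch only makes the ordering of events explicit.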

In the master-peer approach, the directory client reads from and writes to the master. If the master server fails, client requests are directed to the peer server until the master is made active again. The replication process keeps the data in the master and peer directories synchronized. Every write (or update) against the directory goes to the master, which replicates it to the peer server immediately after the write completes. When the master server is down, the write goes to the peer server and is queued for replication to the master once the master is restarted.

For directory sharing, the Domino, WESM, and EMS servers are configured to use the virtual IP address of the directory server. As part of their user management functions, the EMS and WESM applications perform write/update operations against the directory server.

WebSphere MQ Application Details

EMS communicates with the Sametime servers using the Java Message Service (JMS). This JMS communication is implemented using IBM WebSphere MQ message queuing. It supports the load balancing, server independence, and failover necessary to provide central meeting management for all of the clustered Sametime servers. It also allows centralized logging, statistics, and configuration change notification. The Sametime room servers must be able to communicate with EMS/WAS using TCP/IP port 900 (the WebSphere 4.x JNDI port). Both the EMS and the Sametime room servers must be able to communicate with WebSphere MQ using TCP/IP port 1414.

WebSphere MQ Cluster Design

Because the WebSphere MQ (WMQ) server acts as the blood supply of the service, availability is a key criterion for these servers. It is also important that the design allow for the easy addition of MQ servers. IBM's High Availability Cluster Multi-Processing (HACMP) product (for AIX) is a control application that can link servers into highly available clusters.
Clustering servers enables parallel access to data, which can help provide the redundancy and fault resilience required for business-critical applications. WMQ clusters reduce administration and provide load balancing of messages across instances of cluster queues. They also offer higher availability than a single queue manager because, following the failure of a queue manager, messaging applications can still access surviving instances of a cluster queue. However, WMQ clusters alone do not provide automatic detection of queue manager failure or automatic triggering of queue manager restart or failover. HACMP clusters provide these features, so the two types of cluster can be used together to good effect. Using WMQ and HACMP together further enhances the availability of the WMQ queue managers. With a suitably configured HACMP cluster, failures of power supplies, nodes, disks, disk controllers, networks, network adapters, or queue manager processes can be detected and can automatically trigger recovery procedures that bring a disabled queue manager back online as quickly as possible.

An HACMP cluster is a collection of nodes and resources (such as disks and networks) that cooperate to provide high availability of services running within the cluster. This is distinct from a WMQ cluster, which is a collection of queue managers that can allow access to their queues by other queue managers in the cluster.

A "mutual takeover" configuration is one in which all nodes are performing highly available (movable) work. This type of cluster configuration is also referred to as "Active/Active" to indicate that all nodes are actively processing critical workload. An Active/Active cluster configuration is used for the Web conferencing service. The load balancing is based on client connections, not on message distribution.
Each Sametime room server has a connection to either of the cluster nodes through a connection channel. If the queue exists on the queue manager node to which the room server is connected, its messages always go to that queue for the duration of the connection. Figure 7-3 depicts the overall cluster design.

Figure 7-3. WebSphere MQ HACMP cluster (active-active) design.
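
To make connection-based balancing concrete, the sketch below models two queue managers and a handful of room servers. It is an illustration only and does not use the WebSphere MQ API: the names (`QM_A`, `QM_B`, `room1`, and so on) are hypothetical, and assigning connections in turn is an assumed policy, since the text does not say how a room server picks its node. What it does show is the behavior described above: once connected, a room server's messages all land on the queue manager it is connected to.

```python
from itertools import cycle


class QueueManager:
    """Hypothetical stand-in for one queue manager on a cluster node."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.queue = []  # local instance of the shared queue


class RoomServerConnection:
    """A room server's connection, bound to one queue manager for its lifetime."""

    def __init__(self, room_server: str, qmgr: QueueManager) -> None:
        self.room_server = room_server
        self.qmgr = qmgr

    def put(self, message: str) -> str:
        """Put a message on the connected queue manager; return its name."""
        self.qmgr.queue.append((self.room_server, message))
        return self.qmgr.name


def connect_all(room_servers, qmgrs):
    """Spread connections across queue managers in turn (assumed policy)."""
    targets = cycle(qmgrs)
    return [RoomServerConnection(rs, next(targets)) for rs in room_servers]


# Example: three room servers spread over two active nodes.
qmgrs = [QueueManager("QM_A"), QueueManager("QM_B")]
connections = connect_all(["room1", "room2", "room3"], qmgrs)
for conn in connections:
    for n in range(2):
        conn.put(f"event-{n}")  # every message follows the connection
```

Because the balancing unit is the connection rather than the message, the load on each node depends on how many room servers are attached to it and how busy each one is, not on an even split of individual messages.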

|