11.2 Techniques

Several well-known techniques are used to provide availability and performance. This section gives a brief overview of those techniques, with some details specific to "Pervasive Portals". For more information about scalability and availability, refer to the redbook Patterns for the Edge of Network, SG24-6822.

Clustering

In a computer system, a cluster is a group of servers and other resources that act as a single system and enable high availability and, in some cases, load balancing and parallel processing (performance).

Clustering configurations for high availability

The goal of these configurations is to improve availability. There are two basic configurations for high availability.

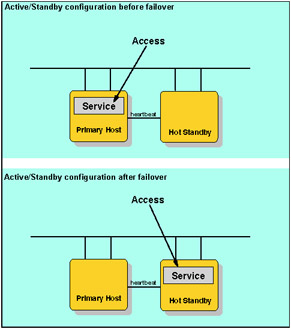

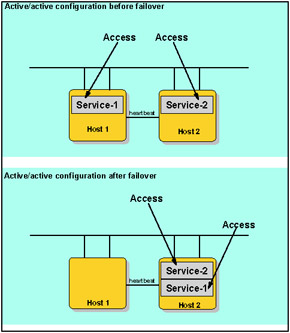

The simplest high-availability cluster configuration is a two-node cluster. There is one primary system for all cluster resources and a second system that is a back-up, ready to take over during an outage of the primary system.

Figure 11-3: Active/standby configuration

Another typical configuration is the mutual takeover cluster. Each node in this environment serves as the primary node for some sets of resources and as the back-up node for other sets of resources. With mutual takeover, every system or node is used for production work, and all critical production work is accessible from multiple systems, multiple nodes, or a cluster. The goal of this technique is to improve availability and scalability.

Figure 11-4: Active/active configuration
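The mutual takeover arrangement can be illustrated with a minimal sketch. The class names `Node` and `ResourceGroup`, the node names, and the resource group names below are hypothetical and chosen only for illustration; real cluster software also handles failure detection, which is reduced here to a simple `up` flag.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    up: bool = True  # stands in for real failure detection (assumption)


@dataclass
class ResourceGroup:
    name: str
    primary: Node
    backup: Node

    def active_node(self) -> Node:
        """Return the node currently serving this resource group."""
        if self.primary.up:
            return self.primary
        if self.backup.up:
            return self.backup
        raise RuntimeError(f"no node available for {self.name}")


def placement(groups):
    """Map each resource group name to the name of the node serving it."""
    return {g.name: g.active_node().name for g in groups}


node_a = Node("node-a")
node_b = Node("node-b")

# Mutual takeover: each node is primary for one group and backup for the other.
groups = [
    ResourceGroup("portal", primary=node_a, backup=node_b),
    ResourceGroup("database", primary=node_b, backup=node_a),
]

normal = placement(groups)        # both nodes do production work
node_a.up = False                 # simulate an outage of node-a
after_outage = placement(groups)  # node-b now serves both groups
```

In normal operation both nodes carry production work; after the simulated outage, the surviving node serves both resource groups.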

In both of these scenarios, replication is key. Replication means that a copy of something is produced in real time, for instance, copying objects from one node in a cluster to one or more other nodes in the cluster. Replication makes and keeps the objects on your systems identical. If you make a change to an object on one node in a cluster, this change is replicated to other nodes in the cluster.
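As a rough illustration of replication, the sketch below copies every change made on one node to its peers so that the objects stay identical. The class `ReplicatedNode` and the key `session:42` are hypothetical; the copy is made synchronously and in memory, whereas real products replicate over a network and must handle failures and ordering.

```python
import copy


class ReplicatedNode:
    def __init__(self, name):
        self.name = name
        self.objects = {}  # objects held by this node
        self.peers = []    # nodes that receive a copy of each change

    def write(self, key, value):
        """Apply a change locally, then copy it to every peer."""
        self.objects[key] = value
        for peer in self.peers:
            # Each peer gets its own copy, not a shared reference.
            peer.objects[key] = copy.deepcopy(value)


primary = ReplicatedNode("primary")
standby = ReplicatedNode("standby")
primary.peers.append(standby)

primary.write("session:42", {"user": "alice"})
```

After the write, the standby holds an identical copy of the object and could take over without losing that state.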

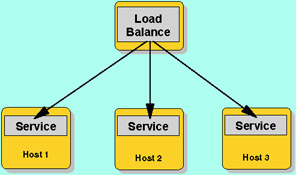

Load balancing

The goal of this technique is to improve performance and availability by supporting horizontal scalability. Load balancing is dividing the amount of work that a computer has to perform between two or more computers so that more work gets done in the same amount of time and, in general, all users are served faster.

Figure 11-5: Load balance configuration

On the Internet, companies whose Web sites get a great deal of traffic usually use load balancing. There are several approaches to load balancing Web traffic. For Web serving, one approach is to route each request in turn to a different server host address in a domain name system (DNS) table, in round-robin fashion. Usually, if two servers are used to balance a workload, a third server is needed to determine which server to assign the work to. Since load balancing requires multiple servers, it is usually combined with failover and back-up services. In some approaches, the servers are distributed over different geographic locations.
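The round-robin approach described above can be sketched in a few lines. The class `RoundRobinBalancer` and the server addresses (taken from the documentation address range) are assumptions for illustration; this sketch only shows the rotation and does not perform health checks or DNS lookups.

```python
from itertools import cycle


class RoundRobinBalancer:
    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = cycle(servers)  # endless rotation over the list

    def next_server(self):
        """Return the server that should handle the next request."""
        return next(self._servers)


balancer = RoundRobinBalancer(["192.0.2.1", "192.0.2.2", "192.0.2.3"])
assignments = [balancer.next_server() for _ in range(5)]
```

Each request goes to the next address in the list, and the rotation wraps around once the last address has been used.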

Caching

The goal of this technique is to improve performance. A cache is a special high-speed storage mechanism. It can be either a reserved section of main memory or an independent high-speed storage device. Two types of caching techniques are commonly used: memory caching and disk caching.

When data is found in the cache, it is called a cache hit, and the effectiveness of a cache is judged by its hit rate. Many cache systems use a technique known as smart caching, in which the system can recognize certain types of frequently used data.
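To make the hit rate concrete, the following sketch counts hits and misses for a simple in-memory cache. The class `CountingCache` and its `loader` parameter are hypothetical; the loader stands in for whatever slower source the cache sits in front of, and no eviction policy is modeled.

```python
class CountingCache:
    def __init__(self, loader):
        self._loader = loader  # called on a miss to fetch the value
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        """Return the value for key, loading and caching it on a miss."""
        if key in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[key] = self._loader(key)
        return self._store[key]

    @property
    def hit_rate(self):
        """Fraction of lookups that were served from the cache."""
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = CountingCache(loader=lambda key: key.upper())
for key in ["a", "b", "a", "a"]:
    cache.get(key)
```

Here the first lookups of "a" and "b" are misses and the two repeated lookups of "a" are hits, so the hit rate is 2 out of 4.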

Content delivery

The goal of this technique is to improve performance and availability. Content delivery (sometimes called content distribution) is the service of copying the pages of a Web site to geographically dispersed servers and, when a page is requested, dynamically identifying and serving page content from the server closest to the user, enabling faster delivery. Typically, owners of high-traffic Web sites and Internet service providers (ISPs) hire the services of a company that provides content delivery.

A common content delivery approach involves the placement of cache servers at major Internet access points around the world and the use of a special routing code that redirects a Web page request (technically, a Hypertext Transfer Protocol (HTTP) request) to the closest server. When the Web user clicks a URL that is content-delivery enabled, the content delivery network re-routes that user's request away from the site's originating server to a cache server closer to the user. The cache server determines what content in the request exists in the cache, serves that content, and retrieves any non-cached content from the originating server. Any new content is also cached locally. Other than faster loading times, the process is generally transparent to the user, except that the URL served may be different from the one requested.
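The behavior of the cache server in this flow can be sketched as follows. The classes `OriginServer` and `EdgeCache` and the page path are hypothetical, the routing step that selects the closest server is left out, and cache expiry is not modeled.

```python
class OriginServer:
    def __init__(self, pages):
        self.pages = pages
        self.requests = 0  # how often the origin was contacted

    def fetch(self, path):
        self.requests += 1
        return self.pages[path]


class EdgeCache:
    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def serve(self, path):
        """Serve from the local cache; on a miss, fetch from the origin and cache it."""
        if path not in self.cache:
            self.cache[path] = self.origin.fetch(path)
        return self.cache[path]


origin = OriginServer({"/index.html": "<h1>Home</h1>"})
edge = EdgeCache(origin)

first = edge.serve("/index.html")   # miss: retrieved from the origin
second = edge.serve("/index.html")  # hit: served from the edge cache
```

Only the first request reaches the originating server; the second is answered from the cache server's local copy.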

The three main techniques for content delivery are: HTTP redirection, Internet Protocol (IP) redirection, and domain name system (DNS) redirection. In general, DNS redirection is the most effective technique.
