4.3 Multimedia Data Models: Implementation and Communication

The multimedia data model deals with the issue of representing the content of all multimedia objects in a database; that is, designing the high- and low-level abstraction model of the raw media objects and their correlations to facilitate various operations. These operations may include media object selection, insertion, editing, indexing, browsing, querying, and retrieval. The data model relies, therefore, on the feature extraction vectors and their respective representations obtained during the indexing process. This section is divided into four main parts. In the first part, we concentrate on the conceptual design of multimedia data models. In the second part, we introduce the object type hierarchy of SQL/MM and compare it with MPEG-7. In the third part, we focus on the implementation of MPEG-7 in a DBMS and on the realization of descriptive multimedia information exchange in general. Finally, in the fourth part, we show how the MPEG-21 DID may support legacy and semantic data integration in multimedia databases.

4.3.1 Conceptual Multimedia Data Models

Continuous time-based multimedia such as video and audio involve notions of data flow, timing, temporal composition, and synchronization. These notions are quite different from those of conventional data models, and as a result, conventional data models are not well suited for multimedia database systems. Hence, a key problem confronting an MMDBMS is the description of the structure of time-constrained media in a form appropriate for querying, updating, retrieval, and presentation.

One of the first pertinent approaches is introduced in Gibbs et al. [106] In this approach, a data model called timed stream is developed that addresses the representation of time-based media. The model includes the notions of media objects, media elements, and timed streams. Three general structure mechanisms are used: interpretation, derivation, and composition. This kind of data model is specialized for continuous media like audio and video.

A more sophisticated approach is the VideoSTAR model. [107] The proposed database system is closely aligned to the multimedia information system architecture designed by Gross et al. [108] It constitutes a video database framework and is designed to support video applications in sharing and reusing video data and metadata. The metadata are implemented as a complex database schema. This schema realizes a kernel-indexing model valid for many application domains with a few precategorized entities like persons, events, and so on. Specific applications are able to enhance the descriptive power of the kernel model with specific types of content indexes.

The idea of the precategorization of content has been employed in many further models. Weiss et al. [109] have proposed a data model called algebraic video, which is used for composing, searching, and playing back digital video presentations. It is an important model in the sense that many of its features have been adopted in subsequent works. The authors use algebraic combinations of video segments (nodes) to create new video presentations. Their data model consists of hierarchical compositions of video expressions created and related to one another by four algebra operations: creation, composition, output, and description. Once a collection of algebraic video nodes is defined and made persistent, users can query, navigate, and browse the stored video presentations. Note that each algebraic video expression denotes a video presentation. An interesting feature of the proposed model, concerning the so-called interface operations like search, playback, and so forth, is that the hierarchical relations between the algebraic video nodes allow nested stratification. Stratification is a mechanism in which textual descriptions called strata are related to possibly overlapping parts of a linear video stream. [110] In the proposed model, a stratum is just an algebraic video node, which refers to a raw video file and a sequence of relevant frames. All nodes referring to the same video stream are used to provide multiple coexisting views and annotations and allow users to assign multiple meanings to one logical video segment. Moreover, the algebraic video model provides nested relationships between strata and thereby allows the user to explore the context in which a stratum appears. [111]

The algebraic video model has some shortcomings: first, the difficulty in adding automatic methods for segmenting video streams, and second, the need for an information retrieval engine for content-query execution because of the exclusive use of textual descriptions.
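The nested stratification described above can be illustrated with a small sketch. It is not the authors' implementation: the class `VideoNode`, its fields, and the helper `search` are hypothetical names chosen here, and the sketch assumes that a stratum is simply a textual description attached to a frame range of one raw video file.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class VideoNode:
    """Hypothetical stand-in for an algebraic video node (a stratum)."""
    description: str                 # textual annotation of the stratum
    video_file: str                  # raw video stream the node refers to
    start_frame: int                 # first relevant frame (inclusive)
    end_frame: int                   # last relevant frame (inclusive)
    children: List["VideoNode"] = field(default_factory=list)
    parent: Optional["VideoNode"] = field(default=None, repr=False)

    def add(self, child: "VideoNode") -> "VideoNode":
        """Nest a stratum inside this one and remember its context."""
        child.parent = self
        self.children.append(child)
        return child

    def overlaps(self, other: "VideoNode") -> bool:
        """True if both nodes share at least one frame of the same stream."""
        return (self.video_file == other.video_file
                and self.start_frame <= other.end_frame
                and other.start_frame <= self.end_frame)

    def context(self) -> List[str]:
        """Descriptions of all enclosing strata, outermost first."""
        chain, node = [], self.parent
        while node is not None:
            chain.append(node.description)
            node = node.parent
        return list(reversed(chain))


def search(node: VideoNode, keyword: str) -> List[VideoNode]:
    """Depth-first keyword search over the textual descriptions."""
    hits = [node] if keyword.lower() in node.description.lower() else []
    for child in node.children:
        hits.extend(search(child, keyword))
    return hits


# Overlapping strata on one linear stream give multiple coexisting views.
news = VideoNode("evening news", "news.mpg", 0, 9000)
sports = news.add(VideoNode("sports block", "news.mpg", 6000, 9000))
goal = sports.add(VideoNode("goal replay", "news.mpg", 7200, 7500))
interview = news.add(VideoNode("coach interview", "news.mpg", 7400, 8000))

print(goal.context())            # ['evening news', 'sports block']
print(goal.overlaps(interview))  # True
```

Because the search works only on the textual descriptions, the sketch also makes the second shortcoming visible: content queries reduce to keyword matching.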

Zhong et al. [112] present a general scheme for video objects modeling, which incorporates low-level visual features and hierarchical grouping. It provides a general framework for video object extraction, indexing, and classification and presents new video segmentation and object tracking algorithms based on salient color and motion features. By video objects, the authors refer to objects of interest, including salient low-level image regions, moving foreground objects, and groups of objects satisfying spatio-temporal constraints. These video objects can be automatically extracted at different levels through object segmentation and object tracking mechanisms. Then, they are stored in a digital library for further access. Video objects can be grouped together, and high-level semantic annotations can be associated with the defined groups. The problem of this process is that visual features tend to provide only few direct links to high-level semantic concepts. Therefore, a hierarchical object representation model is proposed in which objects in different levels can be indexed, searched, and grouped to high-level concepts. However, content-based queries based on the proposed model can only retrieve whole video segments and set the video entry points according to some spatio-temporal features of the queried video objects rather than retrieving dedicated video units out of the whole video stream.

Jiang et al. [113], [114], [115] present a video data model, called Logical Hypervideo Data Model (LHVDM), which is capable of multilevel video abstractions. Multilevel abstractions are representations of video objects users are interested in, called hot objects. They may have semantic associations with other logical video abstractions, including other hot objects. The authors noted that very little work so far has been done on the problem of modeling and accessing video objects based on their spatio-temporal characteristics, semantic content descriptions, and associations with other video clips among the objects themselves. Users may want to navigate video databases in a nonlinear or nonsequential way, similar to what they can do with hypertext documents. Therefore, the proposed model supports semantic associations called video hyperlinks and video data dealing with such properties, called hypervideo. The authors provide a framework for defining, evaluating, and processing video queries using temporal, spatial, and semantic constraints, based on the hypervideo data model. A video query can contain spatio-temporal constraints and information retrieval subqueries. The granularity of a video query can be logical videos, logical video segments, or hot objects, whereas concerning hot objects, the results are always returned as logical video segments in which the hot objects occur.

LHVDM is one of the first models to integrate the concepts of hypervideo and multimedia data models and, therefore, attracted much attention. It has some shortcomings concerning the video abstraction levels and the free text annotations. First, the video abstraction hierarchy is not designed in full detail, meaning that the model does not distinguish between different video granularities. Second, there are no means for structured querying of the data model; only an information retrieval (IR) engine is provided for querying.

It has to be noted that in addition to LHVDM, many other related models also use an IR engine, either exclusively or alongside an MMDBMS, to retrieve text documents containing multimedia elements. The domain of MultiMedia IR (MM-IR) has attracted interest in the last few years. [116] However, most of the annotations were keyword based.

Recently, researchers concentrated on an integrated approach for MM information systems, where MMDBMSs handle multimedia data in a structured way, whereas MM-IRs are used for retrieval of keywords. [117]

In this perspective, we proposed a generic indexing model, VIDEX, [118] which describes a narrative world as a set of semantic classes and semantic relations, including spatial and temporal relationships among these classes and media segments. The core of the indexing model defines base classes for an indexing system, whereas application-specific classes are added by declaring subclasses (so-called content classes) of the base classes. Furthermore, VIDEX introduces concepts for detailed structuring of video streams and for relating the instances of the semantic classes to the media segments. This approach tries to combine the advantages of the above-described methods, for instance, those in Weiss et al., [119] Zhong and Chang, [120] Jiang et al., [121] Little et al., [122] and Hjelsvold et al., [123] and extends them by the introduction of both means for structuring video streams and genericity in the indexing process. The SMOOTH multimedia information system realizes the high-level indexing part of the VIDEX video indexing model [124], [125], [126] and is detailed in Section 4.6 (Use Case 1).

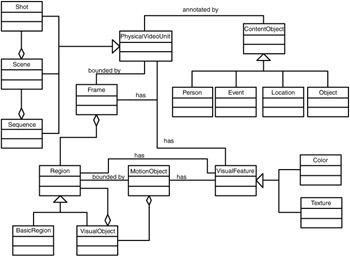

Exhibit 4.9 shows the generic low- and high-level indexing parts of VIDEX in Unified Modeling Language notation. [127] It contains the basic set of high-level content classes, which are events, objects, persons, and locations. These classes are subclasses of ContentObject, which, together with MotionObject, forms the interface between the low- and high-level indexing parts. VIDEX provides a means for segmenting video streams in different granularities, such as shot, scene, and sequence. The class Frame denotes the entry point for low-level video access. It consists of one or more regions, realized through the abstract class Region, that can be either BaseRegions or VisualObjects. A region is a spatial object, whereas a MotionObject is a temporal and spatial object. This allows us to express temporal, spatial, and spatio-temporal relations between different low- and high-level objects.

Exhibit 4.9: VIDEX generic low- and high-level indexing parts. [128]
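The class relationships named in the text can be sketched as follows. This is a reading aid under stated assumptions, not the VIDEX schema itself: the attribute names (`bbox`, `trajectory`, `motion_objects`), the modeling of a MotionObject as one region per frame number, the class name `PhysicalObject` for the "objects" class, the content class `Goal`, and the relation `before` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Region:
    """Spatial object in a frame; meant to be used through a subclass."""
    bbox: Tuple[int, int, int, int]  # x, y, width, height (assumed layout)


class BaseRegion(Region):
    """Low-level image region."""


class VisualObject(Region):
    """Region that has been identified as a visual object."""


@dataclass
class Frame:
    """Entry point for low-level video access."""
    number: int
    regions: List[Region] = field(default_factory=list)


@dataclass
class MotionObject:
    """Spatio-temporal object: one region per frame number (assumption)."""
    trajectory: Dict[int, Region]

    def span(self) -> Tuple[int, int]:
        """First and last frame number in which the object appears."""
        return min(self.trajectory), max(self.trajectory)


@dataclass
class ContentObject:
    """Base of the high-level classes, linked to low-level MotionObjects."""
    name: str
    motion_objects: List[MotionObject] = field(default_factory=list)

    def span(self) -> Tuple[int, int]:
        spans = [m.span() for m in self.motion_objects]
        return min(s for s, _ in spans), max(e for _, e in spans)


class Event(ContentObject):
    """High-level base class for events."""


class Person(ContentObject):
    """High-level base class for persons."""


class Location(ContentObject):
    """High-level base class for locations."""


class PhysicalObject(ContentObject):
    """High-level base class for objects."""


class Goal(Event):
    """Application-specific content class (hypothetical soccer domain)."""


def before(a: ContentObject, b: ContentObject) -> bool:
    """Temporal relation: a ends before b starts."""
    return a.span()[1] < b.span()[0]


striker = Person("striker", [MotionObject({
    10: VisualObject((40, 60, 20, 50)),
    25: VisualObject((90, 58, 20, 50)),
})])
goal = Goal("goal", [MotionObject({
    30: VisualObject((200, 40, 10, 10)),
    45: VisualObject((230, 35, 10, 10)),
})])

print(before(striker, goal))  # True
```

The application-specific class is added purely by subclassing a base class, which is the genericity the indexing model aims for.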

4.3.2 Use Case Study: MPEG-7 versus SQL/MM

The International Organization for Standardization (ISO) subcommittee SC29, WG11 (MPEG) published its MPEG-7 standard, the so-called Multimedia Content Description Interface, in spring 2002. [129] MPEG-7 specifies a standard way of describing the content of different types of multimedia data. Chapter 2 provided a detailed description of the principles introduced in MPEG-7. It is important to remember here that the elements of MPEG-7 are coded as Extensible Markup Language (XML) documents following the rules of a data definition language (DDL), based on XML Schema. The DDL provides the means for defining the structure, content, and semantics of XML documents.

In this context, another International Organization for Standardization and International Electrotechnical Commission (ISO-IEC) working group, SQL, developed SQL/MM, the multimedia extensions to SQL. In 2002, ISO-IEC also released the International Standard ISO-IEC 13249-5:2002 for image storage, manipulation, and query. [130] A first version appeared in 2001; a second and improved version will appear in autumn 2003. [131] In the second version of the standard, an SI_StillImage type together with format descriptions and types for representing low-level features, such as SI_AverageColor, SI_ColorHistogram, and SI_PositionalColor, are proposed.

Both MPEG-7 and SQL/MM introduce a conceptual multimedia data model for use in multimedia database systems. MPEG-7 uses an extension to XML Schema, whereas SQL/MM extends the concept of the object-relational SQL-99. [132] To illustrate the differences and commonalities of the two models, consider the definition of the SI_StillImage type and related feature vector types in SQL/MM and of the StillRegionType in MPEG-7.

The SI_StillImage type in SQL/MM is defined as follows (main components):

    CREATE TYPE SI_StillImage AS (
        SI_content BINARY LARGE OBJECT(SI_MaxContentLength),
        SI_contentLength INTEGER,
        ...
        SI_format CHARACTER VARYING(SI_MaxFormatLength),
        SI_height INTEGER,
        SI_width INTEGER
    ) INSTANTIABLE NOT FINAL

SI_MaxContentLength is the implementation-defined maximum length for the binary representation of the SI_StillImage in the attribute SI_content. SI_MaxFormatLength is the implementation-defined maximum length for the character representation of an image format. The image format contains all information about format, compression, and so on. Height and width are self-explanatory.

In addition to the definition of an image, types for defining features for image comparison are proposed. The type SI_FeatureList provides the list of all available low-level features, and its definition is given below. The feature list principally contains means for color and texture indexing. It can be seen that for color, one histogram value is proposed (SI_ColorHistogram) and two nonhistogram color features are proposed: an average color (SI_AverageColor) and an array of dominant colors (SI_PositionalColor). The texture feature (SI_Texture) contains values that represent the image texture characteristics.

The average color of an image is similar, but not identical, to the predominant color of an image. The average color is defined as the sum of the color values for all pixels in the image divided by the number of pixels in the image. For example, if 50 percent of the pixels in an image are blue and the other 50 percent are red, the average color for that image is purple. The histogram color of an image is specified as a distribution of colors in the image measured against a spectrum of N colors (typically 64 to 256). Positional color values indicate the average color values for pixels in a specific area of the image. The texture of an image is measured by three factors: coarseness, contrast, and directionality of the image. Coarseness indicates the size of repeating items in the image (e.g., pebbles versus boulders). Contrast is the variation of light versus dark. Directionality indicates whether the image has a predominant direction or not. An image of a picket fence, for example, has a predominant vertical direction, whereas an image of sand has no directionality. Texture is used to search for images that have a particular pattern.
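The definitions of average color and histogram color can be made concrete with a short sketch. It does not use any SQL/MM implementation: the function names, the representation of an image as a flat list of RGB tuples, and the uniform quantization into `levels` steps per channel are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0..255


def average_color(pixels: List[Pixel]) -> Pixel:
    """Sum of the color values of all pixels divided by the pixel count."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) // n for c in range(3))


def color_histogram(pixels: List[Pixel], levels: int = 4) -> Dict[Pixel, float]:
    """Relative frequency of each quantized color.

    Each channel is cut into `levels` steps, giving levels**3 bins
    (4 steps per channel -> a spectrum of 64 colors).
    """
    step = 256 // levels
    counts = Counter(tuple(ch // step for ch in p) for p in pixels)
    total = len(pixels)
    return {color: count / total for color, count in counts.items()}


# The example from the text: half of the pixels blue, half red.
image = [(0, 0, 255)] * 50 + [(255, 0, 0)] * 50

print(average_color(image))    # (127, 0, 127), a purple
print(color_histogram(image))  # {(0, 0, 3): 0.5, (3, 0, 0): 0.5}
```

The output shows why the average color differs from the predominant color: purple does not occur in the image at all, whereas the histogram keeps the two actual colors apart.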

SI_FeatureList is defined as:

    CREATE TYPE SI_FeatureList AS (
        SI_AvgClrFtr SI_AverageColor,
        SI_AvgClrFtrWght DOUBLE PRECISION DEFAULT 0.0,
        SI_ClrHstgrFtr SI_ColorHistogram,
        SI_ClrHstgrFtrWght DOUBLE PRECISION DEFAULT 0.0,
        SI_PstnlClrFtr SI_PositionalColor,
        SI_PstnlClrFtrWght DOUBLE PRECISION DEFAULT 0.0,
        SI_TextureFtr SI_Texture,
        SI_TextureFtrWght DOUBLE PRECISION DEFAULT 0.0
    ) INSTANTIABLE NOT FINAL

The SI_ColorHistogram is defined as follows:

    CREATE TYPE SI_ColorHistogram AS (
        SI_ColorsList SI_Color ARRAY[SI_MaxHistogramLength],
        SI_FrequenciesList DOUBLE PRECISION ARRAY[SI_MaxHistogramLength]
    ) INSTANTIABLE NOT FINAL

SI_MaxHistogramLength is the implementation-defined maximum number of color/frequency pairs that are admissible in a SI_ColorHistogram feature value.
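The weight attributes in SI_FeatureList suggest that several feature comparisons are combined into one score. The text does not spell out the combination rule, so the following is only one plausible reading: a weighted mean of per-feature distances, where a weight of 0.0 (the declared default) switches a feature off. The function name, the feature keys, and the distance values are hypothetical.

```python
from typing import Dict


def weighted_score(distances: Dict[str, float],
                   weights: Dict[str, float]) -> float:
    """Weighted mean of per-feature distances (assumed combination rule).

    Features without an entry in `weights` get weight 0.0, mirroring the
    DEFAULT 0.0 of the weight attributes in SI_FeatureList.
    """
    total_weight = sum(weights.get(name, 0.0) for name in distances)
    if total_weight == 0.0:
        raise ValueError("at least one feature needs a nonzero weight")
    weighted_sum = sum(d * weights.get(name, 0.0)
                       for name, d in distances.items())
    return weighted_sum / total_weight


# Hypothetical distances between a query image and a stored image.
distances = {"average_color": 0.25, "color_histogram": 0.75, "texture": 0.5}
weights = {"average_color": 1.0, "color_histogram": 3.0}  # texture ignored

print(weighted_score(distances, weights))  # 0.625
```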

SQL/MM also defines elements for internal DBMS system behavior, such as SI_DLRead/WritePermission and the SI_DLRecoveryOption that fit well with the other system functionality.

Compared with SQL/MM, the MPEG-7 conceptual data model is richer; the following part of the DDL points out elements that are not covered by SQL/MM, such as:

    ...
    <element name="TextAnnotation" minOccurs="0" maxOccurs="unbounded">
      ...
    </element>
    <choice minOccurs="0" maxOccurs="unbounded">
      <element name="Semantic" type="mpeg7:SemanticType"/>
      <element name="SemanticRef" type="mpeg7:ReferenceType"/>
    </choice>
    ...
    <element name="SpatialDecomposition"
             type="mpeg7:StillRegionSpatialDecompositionType"
             minOccurs="0" maxOccurs="unbounded"/>
    ...

In conclusion, the data model of SQL/MM covers the syntactical part of multimedia descriptions but provides no means for decomposing an image or for describing it semantically. Reconsidering the indexing pyramid shown in Exhibit 4.3, only features up to level 3 (Local Structure) can be represented. Means for a Global Structure are not given.

Note that almost all multimedia data models available in object-relational DBMSs follow this conceptual modeling principle; for instance, Oracle 9i proposes in its interMedia Data Cartridge the type ORDImage, which has elements similar to those of SI_StillImage, enriched with more detailed format and compression information. More information on the retrieval capabilities of Oracle 9i is given in Section 4.5.

SQL/MM integrates well into the DBMS, as it proposes means for specifying access permissions, recovery options, and so on, and it is operational in the sense that the methods for retrieval and image processing are associated with the type hierarchy. MPEG-7 must rely on a DBMS implementation for storing and indexing MPEG-7 documents and must be associated with proprietary retrieval and processing mechanisms to be as functional as SQL/MM is. However, SQL/MM does not provide means for exchange of multimedia data and metadata.

The next section discusses implementation issues of MPEG-7 in DBMS and for information exchange in a distributed multimedia system.

4.3.3 Multimedia Data Model Implementation and Information Exchange in a Distributed Multimedia System

Most of the above-mentioned conceptual multimedia data models are implemented as database schemas or as object-relational types, benefiting from standard DBMS services like type extensions, data independence (data abstraction), application neutrality (openness), controlled multiuser access (concurrency control), fault tolerance (transactions, recovery), and access control. In our opinion, this will remain the predominant way of implementing a multimedia data model.

In addition to the above-mentioned criteria, a DBMS can provide a uniform query interface that is based on the structure of descriptive information implemented and the relationships between descriptive data. Finally, multimedia applications are generally distributed, requiring multiple servers to satisfy their storage and processing requirements. A well-developed distributed DBMS technology can be used to manage data distribution of the raw multimedia data efficiently, for instance, as BLOBs, and to manage remote access with the support of broadly used connection protocols, like JDBC (Java Database Connectivity).

However, none of the related works attributed significance to the question of how to communicate metadata effectively, in terms of interoperability of the information exchange in a distributed multimedia system. Designing models for information exchange, in which descriptive information travels either together with the described raw material or as a standalone stream, is a very important issue in a distributed multimedia system. Descriptive multimedia information can provide, for instance, active network components such as network routers and proxy caches with valuable information to govern or enhance their media scaling, buffering, and caching policies on the delivery path to the client. Descriptive information can assist the client in the selection of multimedia data, for example, for immediate or later viewing. It can also personalize the data offered; for example, a user filters broadcast streams according to the location encoded in the metadata.

In this context, MPEG-7 provides a coding schema, the BiM (binary) representation, for document transport. This choice has been made by taking into consideration the general development that XML documents are becoming a standard for a shared vocabulary in distributed systems. [133] Furthermore, it is based on the fact that compression is also important for MPEG-7 documents, as these documents may include, among others, large feature vectors for describing various low-level characteristics of the media data. The descriptive information contained in the Description Schemes (DSs) and Descriptor (D) values can be considered, to some extent, a summary of the contents and principles introduced by the models mentioned above.

However, the structural definition of the content differs, based on whether it is made for storage in a database and further content querying, as considered in most related works, or whether it is designed for information exchange. This fundamental difference is revealed at several levels. The first one is in the overall organization of the content types. MPEG-7 tries to keep the hierarchy of content descriptions as flat as possible to minimize the number of dereferencing steps before reaching the desired information. This stems from the simple requirement that some network components and the client may not have enough computing power or storage space to process complicated unfolding of descriptive information. In contrast to this, multimedia database schemas modeling semantically rich content, like VIDEX [134] and VideoSTAR, [135] for instance, have complicated hierarchies, in order to be as extensible as possible. Second, the difference is revealed in how both approaches attribute importance to single descriptive elements. For example, MPEG-7 proposes a variety of tools (MediaLocator DS) to specify the "location" of a particular image or audio or video segment by referencing the media data. This is important for cross-referencing of metadata and media data. However, such an effort is of less interest for a multimedia data model in a DBMS, as in most cases a simple reference to the video data, for example, as BFILE in Oracle 9i or other DBMSs, is sufficient for location (see also Kosch [136] for a discussion of commonalities and differences). Obviously, a combination of data models for storage and querying on the one hand, and for information exchange on the other, must make a compromise between the requirements of both issues.

To store and communicate the XML-based MPEG-7 descriptions in and from a database, a first approach is the use of an extensible DBMS; for instance, an object-relational DBMS. Such an approach can be realized, for example, with the XML SQL Utility (XSU), a utility from Oracle (for more information on XSU, please visit http://technet.oracle.com). The MDC developed by us is an Oracle 9i system extension to store XML-based MPEG-7 documents and provide an interface for their exchange. The MDC is described in detail in Section 4.6, Use Case 2.

Alternatively, one can use a so-called XML-DBMS, which no longer relies on traditional database schemas and stores XML documents directly. Such an approach is supported by recently developed multimedia data models that rely on semistructured documents, such as that proposed by Hacid et al. [137]

An XML-DBMS provides query interfaces and languages [138] that respect the nature of XML documents. A broadly used query language is XPath. XPath 1.0 provides the so-called path-query expression, and its specification can be found at http://www.w3.org/TR/xpath. XPath 2.0 is under development and will simplify the manipulation of XML Schema-typed content. This is of obvious advantage for MPEG-7. The newest developments for XPath 2.0 can be found at http://www.w3.org/TR/xpath20req.
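A path query over an MPEG-7 description can be tried out without an XML-DBMS. The sketch below uses Python's standard `xml.etree.ElementTree` module, which implements only a subset of XPath 1.0 path expressions; the small document and the variable names are made up for illustration, with element names borrowed from MPEG-7.

```python
import xml.etree.ElementTree as ET

# Prefix-to-namespace map used to resolve the prefixes in the path queries.
NS = {"mpeg7": "urn:mpeg:mpeg7:schema:2001"}

# Hypothetical, heavily shortened MPEG-7 description.
DOC = """<mpeg7:Mpeg7 xmlns:mpeg7="urn:mpeg:mpeg7:schema:2001">
  <mpeg7:DescriptionUnit>
    <mpeg7:Label><mpeg7:Name>Harald Kosch</mpeg7:Name></mpeg7:Label>
    <mpeg7:Agent>
      <mpeg7:Name>
        <mpeg7:GivenName>Harald</mpeg7:GivenName>
        <mpeg7:FamilyName>Kosch</mpeg7:FamilyName>
      </mpeg7:Name>
    </mpeg7:Agent>
  </mpeg7:DescriptionUnit>
</mpeg7:Mpeg7>"""

root = ET.fromstring(DOC)

# Path query: family names of all agents, at any depth below the root.
family_names = [
    element.text
    for element in root.findall(".//mpeg7:Agent/mpeg7:Name/mpeg7:FamilyName", NS)
]

# Path query returning the text of the first match only.
label = root.findtext(".//mpeg7:Label/mpeg7:Name", namespaces=NS)

print(family_names)  # ['Kosch']
print(label)         # Harald Kosch
```

Both queries address elements purely by their position in the document tree, which is what makes path expressions a natural fit for stored XML descriptions.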

At present, several XML-DBMS products are in use. Examples are IPSIS from the GMD Darmstadt (http://xml.darmstadt.gmd.de/xql/) and Tamino from SoftwareAG (http://www.softwareag.com/tamino). These products are supported by the XML:DB initiative (http://www.xmldb.org), which intends to make XML-DBMSs client-server capable by defining an XDBC standard similar to JDBC/ODBC. Whether an XML-DBMS or an extensible DBMS should be used depends mainly on the application area. An application requiring transaction management and recovery services will rely more on an extensible ORDBMS than on an XML-DBMS. However, in other applications, extensible DBMS technology might be too expensive in terms of storage space and processing costs. For instance, the M3box [139] project developed by Siemens Corporate Technology (IC 2) aims at the realization of an adaptive multimedia message box. One of its main components is a multimedia database for retrieving multimedia mails based on their structured content. The inputs to the database are MPEG-7 documents generated in the input devices (e.g., videophone, PDAs), and the outputs are again MPEG-7 documents to be browsed at the output devices. The system is performance critical and, therefore, relies on an XML-DBMS (actually dbXML) to avoid schema translations of both the input and the output. [140]

4.3.4 MPEG-21 Digital Item: Legacy and Semantic Data Integration in MMDBMS

The importance of MPEG-7 serving as the conceptual data model in an MMDBMS has been intensively discussed. However, the fact that there exist many related multimedia metadata standards, which are in industrial use, should not be ignored. As described in Section 1.2, the Dublin Core metadata set,[141] based on RDF (Resource Description Framework), describes semantic Web documents. Dublin Core elements include creator, title, subject keywords, resource type, and format. The standard lacks, however, elaborate means for describing the content of multimedia data. For instance, the only image-related aspects of the element set are the ability to define the existence of an image in a document ("DC.Type = image") and to describe an image's subject content by the use of keywords.

The issue of legacy data in multimedia databases is an important one, as many content providers and archives rely on metadata standards like Dublin Core and SMPTE (Society of Motion Picture and Television Engineers) and have started to use MPEG-7 for content descriptions. An MMDBMS must be able to deal with these different standards at the same time without requiring complex transformation processes from one standard into the others, which are in general not supported by the providers.

In this context, the MPEG-21 Digital Item Declaration (DID) model may effectively be employed. As discussed in Chapter 2, the MPEG-21 DID provides means for integrating different description schemes into one descriptive framework.

The following example shows how different descriptor formats can be used to express complementary metadata sets. The example shows an MPEG-7 description for the content and an RDF-based Dublin Core descriptor for the creator, description, date, and rights of my passport image.

    <?xml version="1.0" encoding="UTF-8"?>
    <DIDL xmlns="urn:mpeg:mpeg21:2002:01-DIDL-NS"
          xmlns:mpeg7="urn:mpeg:mpeg7:schema:2001"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xmlns:RDF="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
          xmlns:dc="http://purl.org/dc/elements/1.1/">
      <Item>
        <Descriptor>
          <Statement mimeType="text/xml">
            <mpeg7:Mpeg7>
              <mpeg7:DescriptionUnit xsi:type="mpeg7:AgentObjectType">
                <mpeg7:Label>
                  <mpeg7:Name>Harald Kosch</mpeg7:Name>
                </mpeg7:Label>
                <mpeg7:Agent xsi:type="mpeg7:PersonType">
                  <mpeg7:Name>
                    <mpeg7:GivenName>Harald</mpeg7:GivenName>
                    <mpeg7:FamilyName>Kosch</mpeg7:FamilyName>
                  </mpeg7:Name>
                </mpeg7:Agent>
              </mpeg7:DescriptionUnit>
            </mpeg7:Mpeg7>
          </Statement>
        </Descriptor>
        <Descriptor>
          <Statement mimeType="text/xml">
            <RDF:Description>
              <dc:creator>Nowhere Photoshop</dc:creator>
              <dc:description>Passport Photo</dc:description>
              <dc:date>2002-12-18</dc:date>
              <dc:rights>No reproduction allowed.</dc:rights>
            </RDF:Description>
          </Statement>
        </Descriptor>
        <Component>
          <Resource ref="myself.jpeg" mimeType="image/jpeg"/>
        </Component>
      </Item>
    </DIDL>
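How an MMDBMS might tell the coexisting description formats in such a Digital Item apart can be sketched with Python's standard `xml.etree.ElementTree` module. The approach shown, classifying each Statement by the namespace of its payload element, is an assumption made for illustration, as are the function names and the shortened declaration embedded in the code.

```python
import xml.etree.ElementTree as ET
from typing import List

DIDL_NS = "urn:mpeg:mpeg21:2002:01-DIDL-NS"

# Namespace URIs taken from the example declaration.
KNOWN_FORMATS = {
    "urn:mpeg:mpeg7:schema:2001": "MPEG-7",
    "http://www.w3.org/1999/02/22-rdf-syntax-ns#": "RDF",
}

# Shortened version of the Digital Item Declaration from the text.
DID = """<DIDL xmlns="urn:mpeg:mpeg21:2002:01-DIDL-NS"
      xmlns:mpeg7="urn:mpeg:mpeg7:schema:2001"
      xmlns:RDF="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
      xmlns:dc="http://purl.org/dc/elements/1.1/">
  <Item>
    <Descriptor>
      <Statement mimeType="text/xml">
        <mpeg7:Mpeg7>
          <mpeg7:DescriptionUnit>
            <mpeg7:Label><mpeg7:Name>Harald Kosch</mpeg7:Name></mpeg7:Label>
          </mpeg7:DescriptionUnit>
        </mpeg7:Mpeg7>
      </Statement>
    </Descriptor>
    <Descriptor>
      <Statement mimeType="text/xml">
        <RDF:Description>
          <dc:rights>No reproduction allowed.</dc:rights>
        </RDF:Description>
      </Statement>
    </Descriptor>
    <Component>
      <Resource ref="myself.jpeg" mimeType="image/jpeg"/>
    </Component>
  </Item>
</DIDL>"""


def descriptor_formats(did_xml: str) -> List[str]:
    """Name the description format of every Statement in document order."""
    root = ET.fromstring(did_xml)
    formats = []
    for statement in root.iter(f"{{{DIDL_NS}}}Statement"):
        for payload in statement:
            # ElementTree spells qualified names as "{namespace-uri}local".
            uri = payload.tag[1:].split("}")[0]
            formats.append(KNOWN_FORMATS.get(uri, uri))
    return formats


def resource_refs(did_xml: str) -> List[str]:
    """References to the raw media described by the Digital Item."""
    root = ET.fromstring(did_xml)
    return [res.get("ref") for res in root.iter(f"{{{DIDL_NS}}}Resource")]


print(descriptor_formats(DID))  # ['MPEG-7', 'RDF']
print(resource_refs(DID))       # ['myself.jpeg']
```

Each description stays in its native format; the DID only wraps it, so no transformation between the standards is needed.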

In addition to the legacy aspect, the semantic integration aspect of the MPEG-21 DID is important. The syntactic properties of the MPEG-21 DID model facilitate interoperability among the semantic description formats, such as RDF(S)[142] or DAML+OIL.[143] This increases the reusability of descriptions, for example, MPEG-7 with metadata descriptions from other domains where multimedia data plays an important role, such as the Multimedia Home Platform (MHP) (as part of the Digital Video Broadcasting [DVB] Project) (http://www.dvb.org/), the TV Anytime Forum (http://www.tv-any-time.org/), NewsML (http://www.newsml.org/), the Gateway to Educational Materials project (http://www.thegateway.org/), or the Art and Architecture Thesaurus browser (http://www.getty.edu/research/tools/vocabulary/aat).

[106]Gibbs, S., Breiteneder, C., and Tsichritzis, D., Data modeling of time-based media, in Proceedings of the ACM SIGMOD International Conference on Management of Data, Minneapolis, May 1994, Snodgrass, R.T., and Winslett, M., eds., Vol. 23, pp. 91–102.

[107]Hjelsvold, R. and Midtstraum, R., Databases for video information sharing, in IS&T/SPIE's Symposium on Electronic Imaging Science and Technology, 1994, pp. 268–279.

[108]Gross, M.H., Koch, R., Lippert, L., and Dreger, A., Multiscale image texture analysis in wavelet spaces, in Proceedings of the IEEE International Conference on Image Processing, 1994.

[109]Weiss, R., Duda, A., and Gifford, D.K., Content-based access to algebraic video, in Proceedings of the International Conference on Multimedia Computing and Systems, Boston, May 1994, pp. 140–151.

[110]Smith, T.G.A. and Davenport, G., The stratification system: a design environment for random access video, in Proceedings of the 3rd International Workshop on Network and Operating System Support for Digital Audio and Video, 1992.

[111]Weiss, R., Duda, A., and Gifford, D.K., Composition and search with a video algebra, IEEE MultiMedia, 2, 12–25, 1995.

[112]Zhong, D. and Chang, S.-F., Video object model and segmentation for content-based video indexing, in IEEE International Symposium on Circuits and Systems (ISCAS'97), Hong-Kong, June 1997.

[113]Jiang, H., Montesi, D., and Elmagarmid, A.K., Integrated video and text for content-based access to video databases, Multimedia Tools Appl., 9, 227–249, 1999.

[114]Jiang, H. and Elmagarmid, A.K., Spatial and temporal content-based access to hypervideo databases, VLDB J., 7, 226–238, 1998.

[115]Jiang, H. and Elmagarmid, A.K., WVTDB—a semantic content-based videotext database system on the World Wide Web, IEEE Trans. Knowl. Data Eng., 10, 947–966, 1998.

[116]Bertino, E., Catania, B., and Ferrari, E., Multimedia IR: models and languages, in Modern Information Retrieval, Baeza-Yates, R. and Ribeiro-Neto, B., Eds., Addison-Wesley, Reading, MA, 1999.

[117]Lu, G., Multimedia Database Management Systems, Artech House, 1999.

[118]Tusch, R., Kosch, H., and Böszörményi, L., VideX: an integrated generic video indexing approach, in Proceedings of the ACM Multimedia Conference, Los Angeles, October–November 2000, pp. 448–451.

[119]Weiss, R., Duda, A., and Gifford, D.K., Composition and search with a video algebra, IEEE MultiMedia, 2, 12–25, 1995.

[120]Zhong, D. and Chang, S.-F., Video object model and segmentation for content-based video indexing, in IEEE International Symposium on Circuits and Systems (ISCAS'97), Hong-Kong, June 1997.

[121]Jiang, H., Montesi, D., and Elmagarmid, A.K., Integrated video and text for content-based access to video databases, Multimedia Tools Appl., 9, 227–249, 1999.

[122]Little, T.D.C., Ahanger, G., Folz, R.J., Gibbon, J.F., Reeve, F.W., Schelleng, D.H., and Venkatesh, D., A digital on-demand video service supporting content-based queries, in Proceedings of 1st ACM International Conference on Multimedia, 1993, pp. 427–436.

[123]Hjelsvold, R., Midtstraum, R., and Sandstå, O., Multimedia Database Systems: Design and Implementation Strategies, Kluwer, Dordrecht, 1996, pp. 89–122.

[124]Kosch, H., Tusch, R., Böszörményi, L., Bachlechner, A., Dörflinger, B., Hofbauer, C., Riedler, C., Lang, M., and Hanin, C., SMOOTH—a distributed multimedia database system, in Proceedings of the International VLDB Conference, Rome, Italy, September 2001, pp. 713–714.

[125]Kosch, H., Slota, R., Böszörményi, L., Kitowski, J., and Otfinowski, J., An information system for long-distance cooperation in medicine, in Proceedings of the 5th International Workshop on Applied Parallel Computing, New Paradigms for HPC in Industry and Academia (PARA), Bergen, Norway, June 2000. Springer-Verlag, Heidelberg, LNCS 1947, pp. 233–241.

[126]Kosch, H., Tusch, R., Böszörményi, L., Bachlechner, A., Dörflinger, B., Hofbauer, C., and Riedler, C., The SMOOTH Video DB—demonstration of an integrated generic indexing approach, in Proceedings of the ACM Multimedia Conference, Los Angeles, October–November 2000, pp. 495–496.

[127]Tusch, R., Kosch, H., and Böszörményi, L., VideX: an integrated generic video indexing approach, in Proceedings of the ACM Multimedia Conference, Los Angeles, October–November 2000, pp. 448–451.

[128]Tusch, R., Kosch, H., and Böszörményi, L., VideX: an integrated generic video indexing approach, in Proceedings of the ACM Multimedia Conference, Los Angeles, October–November 2000, pp. 448–451.

[129]Martínez, J.M. Overview of the MPEG-7 Standard. ISO/IEC JTC1/SC29/WG11 N4980, (Klagenfurt Meeting), July 2002, http://www.chiariglione.org/mpeg/.

[130]Melton, J. and Eisenberg, A., SQL multimedia and application packages (SQL/MM), SIGMOD Rec., 30(4), 97–102, 2001.

[131]Information technology—Database languages—SQL Multimedia and Application Packages—Part 5: Still Image, ISO/IEC FCD 13249-5, 2003(E), December 2002.

[132]Melton, J. and Eisenberg, A., SQL: 1999, formerly known as SQL3, SIGMOD Rec., 28(1), 131–138, 1999.

[133]Harold, E.R. and Means, W.S., XML in a Nutshell, 2nd ed., O'Reilly and Associates, 2002.

[134]Tusch, R., Kosch, H., and Böszörményi, L., VideX: an integrated generic video indexing approach, in Proceedings of the ACM Multimedia Conference, Los Angeles, October–November 2000, pp. 448–451.

[135]Hjelsvold, R. and Midtstraum, R., Databases for video information sharing, in IS&T/SPIE's Symposium on Electronic Imaging Science and Technology, 1994, pp. 268–279.

[136]Kosch, H., MPEG-7 and Multimedia Database Systems, SIGMOD Rec., 31(2), 34–39, 2002.

[137]Hacid, M.-S., Decleir, C., and Kouloumdjian, J., A database approach for modeling and querying video data, IEEE Trans. Knowl. Data Eng., 12, 729–750, 2000.

[138]Bonifati, A., Comparative analysis of five XML query languages, SIGMOD Rec., 29(1), 68–79, 2000.

[139]Heuer, J., Casas, J.L., and Kaup, A., Adaptive multimedia messaging based on MPEG-7—the M3 box, in Proceedings of the Second International Symposium on Mobile Multimedia Systems & Applications, Delft, November 2000.

[140]Kofler, A., Evaluation of Storage Strategies for XML Based MPEG-7 Data. Master's thesis, Institute of Information Technology, University Klagenfurt, 2001.

[141]http://dublincore.org.

[142]http://www.w3.org/RDF/.

[143]http://www.ontoknowledge.org/oil/.
