An Unconventional Approach: Using a Single Stonith Device

In this chapter, I will take an unconventional approach to deploying a high-availability configuration: making resources highly available with only one Stonith device. Normally, high-availability configurations are built using two Stonith devices (one connected to the primary server and one connected to the backup server). Deploying Heartbeat with only one Stonith device, however, introduces two important limitations into your high-availability configuration:

- All resources must run on the primary server (no resources are allowed on the backup server as long as the primary server is up).

- A failover event can occur only once and in only one direction. In other words, the resources running on the primary server can fail over to the backup server only once. When the backup server takes ownership of the resources, the primary server is shut down, and operator intervention is required to restore the primary server to normal operation.

When you use only one Stonith device, you must run all of the highly available resources on the primary server, because the primary server cannot reset the power to the backup server. (The primary server would need to do this when it wants to take back ownership of its resources after recovering from a crash.) In other words, resources running on the backup server are not highly available without a second Stonith device. Operator intervention is also required after a failover event, because the primary server will go down and stay down, and you will no longer have a highly available server pair.

With a clear understanding of these two limitations, we can continue our discussion of Heartbeat and Stonith using a sample configuration that only uses one Stonith device.

Sample Heartbeat with Stonith Configuration

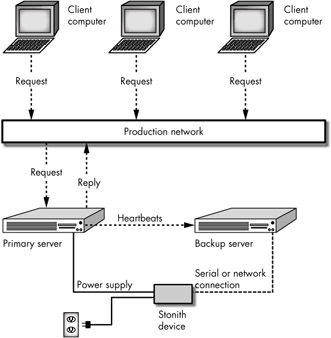

Figure 9-1 shows a two-server, high-availability configuration using Heartbeat and a single Stonith device with three client computers connecting to resources on the primary server.

Figure 9-1: Two-server Heartbeat with Stonith—normal operation

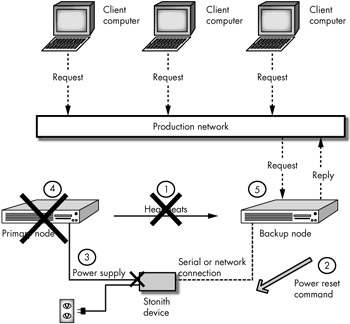

Normally, the primary server broadcasts its heartbeats and the backup server hears them and knows that all is well. But when the backup server no longer hears heartbeats coming from the primary server, it sends the proper software commands to the Stonith device to cycle the power on the primary server, as shown in Figure 9-2.

Figure 9-2: Stonith sequence of events

Stonith Sequence of Events

As shown in Figure 9-2, the Stonith operation proceeds as follows:

- The Stonith event begins when heartbeats are no longer heard on the backup server.

  Note: This does not necessarily mean that the primary server is not sending them. The heartbeats may fail to reach the backup server for a variety of reasons. This is why at least two physical paths for heartbeats are recommended in order to avoid false positives.

- The backup server issues a Stonith reset command to the Stonith device.

- The Stonith device turns off the power supply to the primary server and then turns it back on.

- As soon as the power is cut off from the primary server, it is no longer able to access cluster resources and is no longer able to offer resources to client computers over the network. This guarantees that client computers are unable to access the resources on the primary server and eliminates the possibility of a split-brain condition.

- The backup server then acquires the primary server's resources. Heartbeat runs the proper resource script(s) with the start argument and performs gratuitous ARP broadcasts so that client computers will begin sending their requests to the backup server's network interface card.
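The sequence above can be sketched as a small shell model of the backup server's logic. This is not Heartbeat's code. It assumes the last arrival time on each heartbeat path is known in epoch seconds, treats a plain number as the equivalent of ha.cf's deadtime, and uses two hypothetical stubs, stonith_reset and acquire_resources, in place of the real Stonith call and the resource scripts. It illustrates the two points the steps depend on: the peer is declared dead only when every heartbeat path has gone silent, and resources are acquired only after the reset has succeeded.

```shell
#!/bin/sh
# Model of the backup server's side of the Stonith sequence; NOT Heartbeat's
# code.  Assumptions: each heartbeat path's last arrival time is known in
# epoch seconds, DEADTIME plays the role of ha.cf's deadtime, and
# stonith_reset / acquire_resources are hypothetical stubs.

# peer_is_dead DEADTIME NOW LAST_HEARD_PATH1 [LAST_HEARD_PATH2 ...]
# Succeeds only if EVERY path has been silent for longer than DEADTIME, so one
# failed cable or NIC does not make the backup server reset a healthy primary.
peer_is_dead() {
    deadtime=$1 now=$2
    shift 2
    [ "$#" -gt 0 ] || return 1          # no paths given: nothing to judge by
    for last in "$@"; do
        if [ $((now - last)) -le "$deadtime" ]; then
            return 1                    # this path still hears heartbeats
        fi
    done
    return 0
}

# takeover PEER RESOURCE...
# Fence first, then acquire; if the reset fails, touch no resources.
takeover() {
    peer=$1
    shift
    stonith_reset "$peer" || return 1
    for res in "$@"; do
        acquire_resources "$res"
    done
}

# Hypothetical stubs that only print what the real step would do.
stonith_reset()     { echo "reset $1"; }   # Stonith device power-cycles PEER
acquire_resources() { echo "start $1"; }   # resource script "start" (plus gratuitous ARP for IPs)
```

With these stubs, takeover primaryserver sendmail prints reset primaryserver followed by start sendmail. Refusing to start anything when the reset fails is what keeps both servers from offering the same resource at once.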

Once the primary server finishes rebooting, it will attempt to reclaim ownership of its resources by asking the backup server to relinquish them, unless auto_failback is turned off on both servers.[5]

Note: Client computers should always access cluster resources on an IP address that is under Heartbeat's control. This should be an IP alias and not an IP address that is automatically added to the system at boot time.

Stonith Devices

Here is a partial listing of the Stonith devices supported by Heartbeat:

| Stonith Name | Device | Company Website |
|---|---|---|
| apcmaster | APC Master Switch AP9211 | www.apcc.com |
| apcmastersnmp | APC Masterswitch (SNMP) | www.apcc.com |
| apcsmart | APC Smart-UPS[a] (tested with 900XLI) | www.apcc.com |
| baytech | Tested with RPC-5[b] | www.baytechdcd.com |
| nw_rpc100s | Micro Energetics Night/Ware RPC100S | microenergeticscorp.com |
| rps10 | Western Telematics RPS-10M | www.wti.com |
| wti_nps | Western Telematics Network Power Switches (NPS-xxx); Western Telematics Telnet Power Switches (TPS-xxx) | |

[a] Comments in the code state that no configuration file is used with this device. Apparently it always uses the /dev/ups device name to send commands, so you need to create a link to /dev/ups from the proper /dev/tty device.

[b] The code implies that other RPC devices should work as well.

Stonith supports two additional methods of resetting a system:

| Method | Description |
|---|---|
| Meatware | Operator alert; this tells a human being to do the power reset. It is used in conjunction with the meatclient program. |
| SSH | Usually used only for testing. This causes Stonith to attempt to use the Secure Shell (SSH)[a] to connect to the failing server and perform a reboot command. |

[a] See Chapter 5 for a description of how to set up and configure SSH between cluster (Heartbeat) servers.

You may also still see a null device. This "device" was originally used by the Heartbeat developers for coding purposes before the SSH device was developed.

Viewing the Current List of Supported Stonith Devices

If you download the source RPM file, you can view the list of currently supported Stonith devices in the file:

/usr/src/redhat/SOURCES/heartbeat-<version>/STONITH/README

Or you can list the Stonith device names with the command:

#/usr/sbin/stonith -L

The Stonith Meatware "Device"

Before purchasing one of the supported Stonith devices, you can use a meatware "device" to experiment with Stonith. A meatware device is a whimsical reference to a human being. When you use a meatware device, Heartbeat simply raises an operator alert (instead of resetting the power using software commands to a hardware device connected to a serial or network cable). After following the recipe in Chapter 7 that told you how to download and install the Heartbeat and Stonith RPMs, enter the following command to create a meatware device for a nonexistent host we will call chilly:

#/usr/sbin/stonith -t meatware -p "" chilly

This command tells Stonith to create a device of type (-t) meatware with no parameters (-p "") for host chilly.

The Stonith program normally runs as a daemon in the background, but we're running it in the foreground for testing purposes. Log on to the same machine again (if you are at the console you can press CTRL+ALT+F2), and take a look at the messages log with the command:

#tail /var/log/messages
STONITH: OPERATOR INTERVENTION REQUIRED to reset chilly.
STONITH: Run "meatclient -c chilly" AFTER power-cycling the machine.

Stonith has created a special file in the /tmp directory that you can examine with the command:

#file /tmp/.meatware.chilly
/tmp/.meatware.chilly: fifo (named pipe)

Because we don't really have a machine named chilly, we will just pretend that we have obeyed Stonith's instruction to reset the power to host chilly. If this were a real server, we would have walked over and flipped the power switch off and back on before entering the following command to clear this Stonith event:

#meatclient -c chilly

The meatclient program should respond:

WARNING! If server "chilly" has not been manually power-cycled or disconnected from all shared resources and networks, data on shared disks may become corrupted and migrated services might not work as expected. Please verify that the name or address above corresponds to the server you just rebooted. PROCEED? [yN]

After you enter y, you should see:

Meatware_client: reset confirmed.

Stonith should also report in the /var/log/messages file:

STONITH: server chilly Meatware-reset.
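The meatware handshake depends on the named pipe that the file command reported. The following shell sketch simulates it so you can see why Stonith waits. One function creates a FIFO and blocks reading it, and the other plays the part of meatclient by writing to it. The function names meat_wait and meat_confirm, the MEAT_DIR variable, and the message written into the pipe (the host name) are hypothetical and chosen for illustration. The real meatware plugin and meatclient use their own protocol, which this sketch does not reproduce.

```shell
#!/bin/sh
# Simulation of the meatware named-pipe handshake.  Hypothetical names:
# meat_wait, meat_confirm and MEAT_DIR.  The real Stonith meatware plugin
# uses /tmp/.meatware.<host>; this sketch uses a scratch directory so it
# cannot collide with a real pending reset, and the message written into
# the pipe (the host name) is an assumption, not meatclient's protocol.
MEAT_DIR=${MEAT_DIR:-${TMPDIR:-/tmp}/meatware-sim}

# Stonith's side: raise the alert, then block until an operator confirms.
meat_wait() {
    host=$1
    fifo="$MEAT_DIR/.meatware.$host"
    mkdir -p "$MEAT_DIR" || return 1
    rm -f "$fifo"
    mkfifo "$fifo" || return 1
    echo "OPERATOR INTERVENTION REQUIRED to reset $host." >&2
    echo "Run \"meat_confirm $host\" AFTER power-cycling the machine." >&2
    # Opening a FIFO for reading blocks until some process opens it for writing.
    read -r reply < "$fifo"
    rm -f "$fifo"
    [ "$reply" = "$host" ] || return 1
    echo "server $host Meatware-reset."
}

# meatclient's side: refuse unless a reset is actually pending for this host.
meat_confirm() {
    host=$1
    fifo="$MEAT_DIR/.meatware.$host"
    if [ ! -p "$fifo" ]; then
        echo "no pending reset for $host" >&2
        return 1
    fi
    echo "$host" > "$fifo"
}
```

Running meat_wait chilly in the background and then meat_confirm chilly (with the same MEAT_DIR) makes the first command print server chilly Meatware-reset. and remove the pipe, mirroring how the meatclient command releases the waiting Stonith request.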

Using the Stonith Meatware Device with Heartbeat

Now that we have used the Stonith software commands and the meatware device manually, let's configure Heartbeat to do the same thing for us automatically at the time of a failover. On both of your Heartbeat servers add the following entry to the /etc/ha.d/ha.cf file:

stonith_host * meatware

Normally, the first parameter after the word stonith_host is the name of the Heartbeat server that has a physical connection to the Stonith device. If both (or all) Heartbeat servers can connect to the same Stonith device, you can use the wildcard character (*) to indicate that any Heartbeat server can perform a power reset using this device. (You would normally use the wildcard with a smart or remote power device connected to an Ethernet network, which allows both Heartbeat servers to reach it.)

Because meatware is an operator alert message sent to the /var/log/messages file and not a real device, we do not need to worry about any additional cabling and can safely assume that both the primary and backup servers will have "access" to this Stonith "device." They need only be able to write messages to their log files.
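Because the first field of a stonith_host entry decides which server may use a device, it can help to list the entries that apply to a given node. This hypothetical awk-based helper prints, for the node name you pass, the device type and parameters of every stonith_host line whose first field is that name or the * wildcard. It only reads ha.cf and assumes one directive per line.

```shell
#!/bin/sh
# Print the device type and parameters of every stonith_host directive in
# ha.cf that applies to NODE, i.e. whose first field is NODE or "*".
# Hypothetical helper; it only reads ha.cf (on standard input) and assumes
# one directive per line.  Commented-out lines ("#stonith_host ...") do not
# match because their first field is not exactly "stonith_host".
# Usage: stonith_hosts_for NODE < /etc/ha.d/ha.cf
stonith_hosts_for() {
    awk -v node="$1" '
        $1 == "stonith_host" && ($2 == node || $2 == "*") {
            $1 = ""; $2 = ""      # drop the keyword and the host field
            sub(/^ +/, "")        # remove the separators they left behind
            print
        }'
}
```

With only the meatware entry above in ha.cf, stonith_hosts_for backupserver < /etc/ha.d/ha.cf prints meatware on either server.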

Note: When following this recipe, be sure that the auto_failback option is turned on in your ha.cf file. With Heartbeat version 1.1.2, the auto_failback option can be set to either on or off and ipfail will work. Prior to version 1.1.2 (when nice_failback changed to auto_failback), the nice_failback option had to be set to on for ipfail to work.

- Use a simple haresources entry like this:

#vi /etc/ha.d/haresources
primary.mydomain.com sendmail

The second line says that the primary server should normally own the sendmail resource (it should run the sendmail daemon).

- Now start Heartbeat on both the primary and backup servers with the commands:

primaryserver> service heartbeat start
backupserver> service heartbeat start

or

primaryserver> /etc/init.d/heartbeat start
backupserver> /etc/init.d/heartbeat start

Note: The examples above and below use the name of the server followed by the > character to indicate a shell prompt on either the primary or the backup Heartbeat server.

- Now kill the Heartbeat daemons on the primary server with the following command:

primaryserver> killall -9 heartbeat

Note: Stopping Heartbeat on the primary server using the Heartbeat init script (service heartbeat stop) will cause Heartbeat to release its resources. Thus the backup server will not need to reset the power of the primary server. To test a Stonith device, you'll need to kill Heartbeat on the primary server without allowing it to release its resources. You can also test the operation of your Stonith configuration by disconnecting all physical paths for heartbeats between the servers.

- Watch the /var/log/messages file on the backup server with the command:

backupserver> tail -f /var/log/messages

You should see Heartbeat issue the Meatware Stonith warning in the log and then wait before taking over the resource (sendmail in this case):

backupserver heartbeat[835]: info: **************************
backupserver heartbeat[835]: info: Configuration validated. Starting heartbeat <version>
backupserver heartbeat[836]: info: heartbeat: version <version>
backupserver heartbeat[836]: info: Heartbeat generation: 3
backupserver heartbeat[836]: info: UDP Broadcast heartbeat started on port 694 (694) interface eth0
backupserver heartbeat[841]: info: Status update for server backupserver: status up
backupserver heartbeat: info: Running /etc/ha.d/rc.d/status status
backupserver heartbeat[841]: info: Link backupserver:eth0 up.
backupserver heartbeat[841]: WARN: server primaryserver: is dead
backupserver heartbeat[841]: info: Status update for server backupserver: status active
backupserver heartbeat[847]: info: Resetting server primaryserver with [Meatware Stonith device]
backupserver heartbeat[847]: OPERATOR INTERVENTION REQUIRED to reset primaryserver.
backupserver heartbeat[847]: Run "meatclient -c primaryserver" AFTER power-cycling the machine.
backupserver heartbeat: info: Running /usr/local/etc/ha.d/rc.d/status status
backupserver heartbeat[852]: info: No local resources [/usr/local/lib/heartbeat/ResourceManager listkeys backupserver]
backupserver heartbeat[852]: info: Resource acquisition completed.

Notice that Heartbeat did not start the sendmail resource; it is waiting for you to clear the Stonith Meatware event.

- Clear that event by entering the command:

backupserver> meatclient -c primaryserver

The /var/log/messages file should now indicate that Heartbeat has started the sendmail resource on the backup server:

backupserver heartbeat[847]: server primaryserver Meatware-reset.
backupserver heartbeat[847]: info: server primaryserver now reset.
backupserver heartbeat[841]: info: Resources being acquired from primaryserver.
backupserver heartbeat: info: Running /usr/local/etc/ha.d/rc.d/stonith STONITH
backupserver heartbeat: info: Running /usr/local/etc/ha.d/rc.d/status status
backupserver heartbeat: stonith complete
backupserver heartbeat: info: Taking over resource group sendmail
backupserver heartbeat: info: Acquiring resource group: primaryserver sendmail
backupserver heartbeat: info: Running /etc/init.d/sendmail start

Heartbeat on the backup server is now satisfied: it owns the primary server's resources and will listen for heartbeats to find out whether the primary server is revived.

To complete this simulation, you can actually reset the power on the primary server, or simply restart the Heartbeat daemon, and watch the /var/log/messages file on both systems. The primary server should request that the backup server give up its resources and then start them up again on the primary server where they belong. (In this example, the sendmail daemon should start running on the primary server and stop running on the backup server.)

Note: This example shows how much easier system maintenance is when you place all of your resources on the primary server and leave the backup server idle. However, you may want to place active services on both the primary and backup Heartbeat servers and use the hb_standby command to fail over resources when you need to do maintenance on one or the other server.

Using a "Real" Stonith Device

Each Stonith device has unique configuration options. To view the configuration options for any Stonith device, enter the command:

#stonith -help

You can also obtain configuration syntax information for a particular Stonith device using the stonith command from a shell prompt, as in the following example:

# /usr/sbin/stonith -l -t rps10 test

This causes Stonith to report:

STONITH: Cannot open /etc/ha.d/rpc.cfg
STONITH: Invalid config file for rps10 device.
STONITH: Config file syntax: <serial_device> <server> <outlet> [ <server> <outlet> [...] ]
All tokens are white-space delimited.
Blank lines and lines beginning with # are ignored

This output provides the syntax documentation for the rps10 Stonith device. The syntax for the parameters you can pass to this Stonith device (also called the configuration file syntax) includes a /dev serial device name (usually /dev/ttyS0 or /dev/ttyS1), the name of the server that this device supplies power to, and the physical socket outlet number on the rps10 device. Thus, the following line can be used in the /etc/ha.d/ha.cf file on both the primary and the backup server:

stonith_host backupserver rps10 /dev/ttyS0 primaryserver.mydomain.com 0

This line tells Heartbeat that the backup server controls the power supply to the primary server using the serial cable connected to the /dev/ttyS0 port on the backup server, and the primary server's power cable is plugged in to outlet 0 on the rps10 device.
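The rps10 syntax message above is precise enough to check a parameter string before Heartbeat reads it. This sketch uses a hypothetical function name, rps10_parse. It applies only the rules the message states (whitespace-delimited tokens, blank lines and lines beginning with # ignored, a serial device followed by one or more server/outlet pairs) plus one assumption of its own: outlet numbers are non-negative integers. It prints one "server outlet serial_device" line per server and exits non-zero on any malformed line.

```shell
#!/bin/sh
# Validate rps10 parameters and print "server outlet serial_device" per server.
# Hypothetical helper.  Rules from the syntax message: white-space delimited
# tokens; blank lines and lines beginning with # ignored; a serial device
# followed by one or more <server> <outlet> pairs.  Assumption: outlets are
# non-negative integers.
# Usage: rps10_parse < file     or     echo 'PARAMS' | rps10_parse
rps10_parse() {
    awk '
        /^[ \t]*$/ || /^#/ { next }                 # ignored lines
        NF < 3 || NF % 2 == 0 {                     # need device + whole pairs
            printf "line %d: expected <serial_device> <server> <outlet> ...\n", NR > "/dev/stderr"
            bad = 1
            next
        }
        {
            for (i = 3; i <= NF; i += 2)            # check every outlet field
                if ($i !~ /^[0-9]+$/) {
                    printf "line %d: outlet \"%s\" is not a number\n", NR, $i > "/dev/stderr"
                    bad = 1
                    next
                }
            for (i = 2; i < NF; i += 2)             # all pairs valid: print them
                print $i, $(i + 1), $1
        }
        END { exit (bad ? 1 : 0) }'
}
```

For the ha.cf entry above, echo '/dev/ttyS0 primaryserver.mydomain.com 0' | rps10_parse prints primaryserver.mydomain.com 0 /dev/ttyS0.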

Note: For Stonith devices that connect to the network, you will also need to specify a login and password to gain access to the Stonith device over the network before Heartbeat can issue the power reset command(s). If you use this type of Stonith device, however, be sure to carefully consider the security risk of potentially allowing a remote attacker to reset the power to your server, and be sure to secure the ha.cf file so that only the root account can access it.

Avoiding Multiple Stonith Events

One potential advantage of offering all of the resources from the primary server and not running any resources on the backup server (which, you will recall, is a limitation of the single-Stonith configuration I have been describing in this chapter) is that you can simply power off the primary server when the backup server takes ownership of the resources. This prevents a situation in which confused Heartbeat servers start resetting each other's power and resources move back and forth between the primary and backup servers.

To cause Heartbeat to power down, rather than power reset the primary server during a Stonith event, you can do one of two things:

- Look at the driver code in the Heartbeat package for your Stonith device and see whether it supports a poweroff (rather than a power reset); most do. If it does, you can change the line in the Heartbeat source code that issues the Stonith request from this:

rc = s->s_ops->reset_req(s, ST_GENERIC_RESET, nodename);

to this:

rc = s->s_ops->reset_req(s, ST_POWEROFF, nodename);

This allows you to power off rather than power reset any Stonith device that supports it.

- If you don't want to compile Heartbeat source code, you may be able to configure the system BIOS on your primary server so that a power reset event will not cause the system to reboot. (Many systems support this; check your system documentation to see whether yours is one of them.)

[5] Prior to version 1.1.2, the equivalent condition was having the nice_failback option turned on.
