That Was Then: DTS

You may be familiar with SSIS's predecessor, SQL Server 2000 Data Transformation Services (DTS). If not, DTS is a widely used tool designed to move data from one source to another, and many organizations have developed compelling BI ETL solutions using DTS. The differences between SSIS and DTS are many, though. In fact, SSIS is not a new version of DTS; it is a new tool in the SQL Server 2005 platform that replaces and extends DTS's functionality.

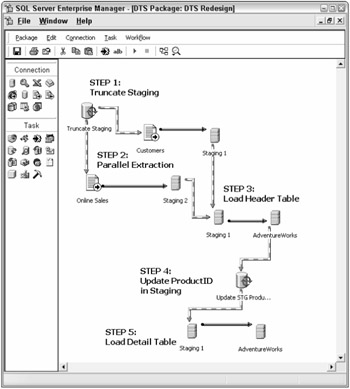

Does Figure 11-1 look familiar? If you've developed any DTS-based solutions to handle data processing, then surely you recognize it.

Figure 11-1

Since the topic in this chapter is Integration Services, the analysis of DTS will not be exhaustive; however, you will consider a few aspects of a typical DTS architecture in order to compare it with an Integration Services architecture. Ideally, the design of your ETL or data-processing solution will not be driven by your choice of ETL tool; however, a tool's capabilities inevitably influence the design. Source-to-destination data mappings are still the same and destination data structures do not change, but the process and logic to move and transform data from one to the other can be dependent on the capabilities of the tool. This was the case with DTS (in fact, this applies to any tool that is selected, but the difference is how much flexibility the tool will allow in data transformation design). The following sections consider the common design of a DTS package such as the one shown in Figure 11-1.

DTS Solution Architecture

DTS primarily uses a Data Pump task that moves data from a single source to a single destination with some interim transformation logic. The DTS interface focuses on the workflow features of the product, with the Data Pump being an object with configurable properties. A few built-in transformations can be defined, such as the built-in character mapping transforms. If transformation logic is included in the Data Pump, it is done in the form of a script. Some limitations are obvious, such as the one-to-one source-to-destination cardinality and the limitation of out-of-the-box transformations.

While scripting transforms can provide broad capabilities, scripting in itself introduces complexity, embedded code, and componentization scalability and support challenges. Because of these limitations, the approach that many DTS-based solutions use is to leverage the relational engine and use staging databases as interim layers of ETL. For example, if you needed the data source to split to multiple destinations, DTS would need to either make multiple passes from the source or stage the data and handle the partitioning of the data through another set of data pumps. Staging in DTS designs is also often used for cleansing, data correlation, and dimension lookups, again leveraging the relational engine. The pattern for data processing in DTS starts with a source. Then, raw data is staged. This data is transformed to an interim staging environment where lookups, data comparisons, and cleansing logic are applied. The final step in many cases loads the data to the destination, where perhaps a final cleanup may be applied.

Common DTS Processing Practices

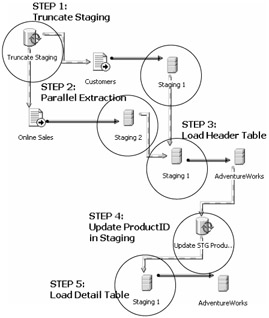

The approach highlighted in Figure 11-1 and described previously contains two general features of this data transformation model. Figure 11-2 highlights the staging-intensive nature of the process.

Figure 11-2

In this example, two staging tables have been used in between the source and destination in order to prepare the data for the load. Furthermore, when drilling into the data pumps that move the data from one table to another, oftentimes a SQL statement does some cleansing and correlating as the data is picked up from one staging table to another. The following code shows the source query used in Step 3 of the package that loads the header table.

SELECT STGOnlineDailyOrderBulk.OnlineCustomerID , STGOnlineDailyOrderBulk.OrderDate , SUM (UnitPrice) AS SubTotal , SUM (UnitPrice)* MAX(ISNULL(TaxRate,0.08)) AS TaxAmt , ISNULL(MAX(Address.AddressID), 1) AS BillToAddressID , ISNULL(MAX(Address.AddressID), 1) AS ShipToAddressID , SUM(freight) AS freight , DATEADD(D,7,OrderDate) AS DueDate FROM dbo.STGOnlineDailyOrderBulk INNER JOIN dbo.STGOnlineCustomers ON STGOnlineDailyOrderBulk.OnlineCustomerID = STGOnlineCustomers.OnlineCustomerID LEFT OUTER JOIN dbo.TaxRate ON STGOnlineCustomers.PostalCode = TaxRate.PostalCode LEFT OUTER JOIN AdventureWorks.Person.Address Address ON STGOnlineCustomers.AddressLine1 = Address.AddressLine1 AND STGOnlineCustomers.PostalCode = Address.PostalCode AND STGOnlineCustomers.City = Address.City GROUP BY STGOnlineDailyOrderBulk.OnlineCustomerID , STGOnlineDailyOrderBulk.OrderDate

In this particular example, the transformation and data association is handled by an intense query on the relational staging database. Note the use of multiple joins between the staging and the destination tables and SQL logic to perform some in-process cleansing.

The second feature of this approach is the in-place set-based transformation using the RDBMS engine to update data within the staging or destination tables. Figure 11-3 highlights the DTS Execute SQL task that updates one of the staging tables in place to capture a primary key on the destination table.

Figure 11-3

In Step 5, after the header table is loaded, the source SQL statement for the Data Pump joins the details staging table with the destination header table to return the IDENTITY column in the header.

SELECT SalesOrderHeader.SalesOrderID , STGOnlineDailyOrderBulk.* FROM dbo.STGOnlineDailyOrderBulk INNER JOIN AdventureWorks.Sales.SalesOrderHeader SalesOrderHeader ON SalesOrderHeader.CustomerID = STGOnlineDailyOrderBulk.OnlineCustomerID AND SalesOrderHeader.OrderDate = STGOnlineDailyOrderBulk.OrderDate

This example clearly does not represent all of the DTS-based solutions, nor does it leverage the lookup functionality embedded in the DTS Data Pump. The purpose of this example is to highlight the features of a typical DTS solution in order to compare it with an Integration Services solution.

DTS Limitations

As mentioned earlier, this architecture has a few limitations that affect scalability and may have an unnecessary impact on the destination systems. Generally, the DTS approach can be thought of as "ELT" instead of ETL. In other words, the common sequence for data processing is Extraction, Transformation, and Loading (ETL), but DTS solutions typically switch the order of the "T" and the "L." In this model, loading is commonly performed before transformation logic is applied. A few other limitations can be articulated from this generalized description.

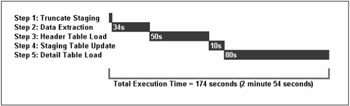

One drawback to this approach is its synchronous nature. Some parallelization is used, but most data processing steps require the full completion of the previous step in the workflow. Since this process is chained together, the total processing time will be a multiple of the duration of the extraction process, especially in a high-volume performance-driven environment. The Gantt chart in Figure 11-4 illustrates the total time taken to process the DTS package in Figure 11-1.

Figure 11-4

The second drawback to this approach is its disk-intensive nature. Every time data is inserted or updated in the database, it passes through several OSI layers, which adds resource overhead and stresses the system. Disk IO processes are expensive and generally slow down a system. To be sure, writing to the database is required in the loading phase, and often business requirements dictate that some interim database activities are necessary. The point, however, is that an architecture with data staging at its center has processing overhead that limits scalability. In fact, every step in the package from source to destination requires disk IO, including the set-based update statements, which at times may be more IO-intensive than pulling and writing data between data staging tables.

Related to the disk-intensive nature of this approach is the impact on the RDBMS when a process uses it for data correlation, lookups, and cleansing. This may or may not be relevant in every solution or even in the different layers of an ETL design, but consider a design where foreign keys are added to a staging table or queried in a custom source SQL statement through a series of database joins. Since the keys reside in a production destination database, even if the SQL statement is distributed across servers, there is a hit on the production system when that portion of the package runs. When the volume of the related destination tables starts to scale up, and if indexes are not optimized for loading, the load can really stress the RDBMS resources — processor, RAM, and IO. Whether the primary purpose of the destination database is to perform queries, report, or handle day-to-day transactions, this impact may affect the end users of your system. It may reduce productivity or, even worse, create a negative perception among end users of the value of the system.

These concerns notwithstanding, good design is possible using DTS, but the challenge comes with the required time, effort, and ongoing support. These DTS-based processes have also provided you with valuable lessons to apply when developing solutions with Integration Services. Finally, DTS may be perfectly appropriate for some systems; however, by examining the common pitfalls of DTS-based solutions, you will be better able to determine if DTS is a viable solution.

EAN: 2147483647

Pages: 182