Fact Table ETL Basics

The primary components in a fact table ETL process include acquiring the dimension surrogate keys, managing measure calculations, and identifying records for changes.

Acquiring the Dimension Surrogate Key

As you process fact source records (such as transactions or inventory), you must pull the surrogate key from the dimension. To accomplish this, you must have the business key from the transactional source that maps to the business key in the dimension table. This process of loading a dimension table involves looking up the surrogate key from the matching business key.

Type 2 Historical Changes and Late-Arriving Facts

If you are doing history on the dimension, it’s important to pull the current surrogate key. In some situations, even the current surrogate key is not the most accurate association to make. Much like a late-arriving dimension, there’s a situation called a late-arriving fact. Some systems hold a transaction for reporting until a pre-defined situation. In this case, the transaction may arrive in the ETL with an older transaction date than a strong majority of the records. When the related dimension record has gone through a Type 2 change since the transaction date, then the current dimension surrogate key would not be aligned with the transaction date of the fact record.

Missing Dimension Records or Inferred Members

Recall the Chapter 4 discussion on loading dimensions that reviewed the support for updating the dimension record when an inferred member exists. However, this was only half the story. When loading the fact records, it is possible to have a missing match within the dimension table. In other words, the business key does not exist in the dimension table. When this occurs, the inferred member must be added to the dimension, which generates a new surrogate key to be used for the fact table load.

In most cases, acquiring the surrogate key is straightforward, but as you can see, there can be exceptions to handle in the process.

Identifying the Dimension Surrogate Keys with SSIS

The best SSIS approach for acquiring surrogate keys is to take advantage of the data flow and use one of the following transformations:

-

A Lookup transformation is the primary method to use, because it performs well when the dimension table can fit into the transformation cache.

-

A Merge Join transformation works well if the dimensions are large and do not fit easily into memory for cache.

-

A Fuzzy Lookup transformation is useful with dirty data. If no match exists when performing a lookup on the dimension, the Fuzzy Lookup can find the “best guess” match. It indexes and caches the data; it also requires some start-up time and overhead, so the best approach is to leverage the Fuzzy Lookup in tandem with the Lookup transformation, by handling the missing records from the Lookup transformation with the Fuzzy Lookup.

Another approach is to use the control flow and handle the dimension association to the fact table with an Execute SQL Task. This requires staging the fact data and using a SQL join to get the surrogate key. However, this approach is synchronous-it requires one step at a time:

-

Landing the fact source data to a staging table

-

Performing the joins across all the related dimension tables (and either performing an UPDATE to the staging, or taking the join results and loading the fact table)

-

Loading the fact table

A data flow approach will scale better by reducing the impact on the database (eliminating expensive joins) and reducing the steps involved in the dimension association.

Using the Lookup Transformation to Get the Dimension Key

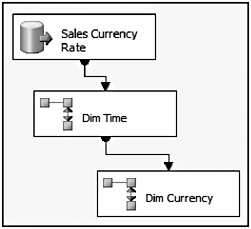

As an example of using the Lookup transformation for the dimension key, Figure 5-5 shows the currency rate fact table package, configured with a data flow containing an OLE DB Source, pulling from the currency rate source table and a Lookup transformation to get the surrogate key from the time dimension.

Figure 5-5: Currency rate fact table package

The currency rate fact table defines the changes of currency matching with U.S. dollars (USD) for this company, which sells products inside and outside of the United States. The sales currency rate source tracks daily currency conversions for AdventureWorks. Therefore, the OLE DB Source is configured to reference the AdventureWorks connection, and point to the Sales.CurrencyRate table. Figure 5-6 shows the Lookup Transformation Editor that references the time dimension.

Figure 5-6: Lookup transformation editor

Configured with the DimTime, this Lookup transformation caches the complete time dimension table into memory. Figure 5-7 shows the Columns tab of the editor.

Figure 5-7: Columns tab of the editor

The CurrencyRateDate column from the source is matched to the FullDateAlternateKey in the dimension table. In both cases, the column contains a datetime value at the day level (as opposed to having values down to the minute, second, and sub-second). The surrogate key, TimeKey, is checked as the return column, which will add the surrogate key to the output of the Lookup transformation that will later be used for the fact table inserts.

The second dimension relationship for the currency rate fact table is the currency itself. There is only one currency lookup because, even though the currency rate is being converted between two currencies, the default conversion currency is USD. Therefore, the currency lookup is referencing what the conversion rate is coming from in order to achieve a USD baseline. Figure 5-8 shows an updated data flow with asecond Lookup transformation.

Figure 5-8: Updated data flow with a second Lookup transformation

The Lookup transformation for the currency dimension is configured almost identically to the time dimension lookup. This lookup references the currency dimension table, joins the CurrencyCode from the source against the CurrencyAlternateKey in the dimension table, and returns the CurrencyKey to the pipeline. Since both the time dimension and the currency dimension are small (and, therefore, will fit in the Lookup cache) and the matching values are clean, this data flow solution to acquiring the dimension keys will run very quickly, and is preferred for most situations.

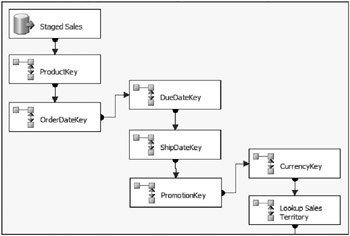

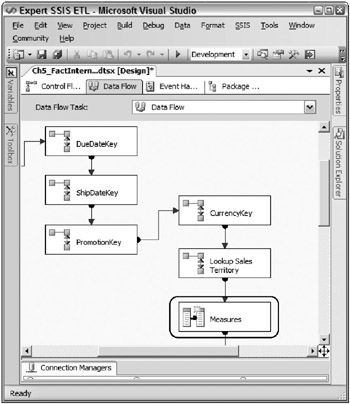

As a second, more complicated demo, Figure 5-9 shows the Sales fact table load package that contains several surrogate key lookups.

Figure 5-9: Sales fact table load package

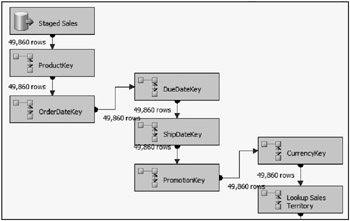

This data flow is not complete, and in later sections, the measures will be handled, as well as the fact table loads. But this example highlights the setup of a fact table load with several dimension relationships. What you may be wondering is how efficient this will be, seeing that the lookups happen one after the other. Yes, this package runs efficiently if the Lookup is designed sequentially in this manner. Although it may appear that the data flow might require the first dimension Lookup to be completed before the second, what is actually happening is that the Lookup transformations are being handled in parallel. Figure 5-10 shows a mid-stream picture of the data flow execution, before the records are completely landed into the fact table.

Figure 5-10: Mid-stream picture of the data flow execution

Based on the record count shown, note that the lookups are all happening at the same time, because the record count (49,860) is identical for all the Lookups. The reason this is an important point is that it underscores the architecture of SSIS. In this case, the Lookup transformation output is synchronous, meaning that the output of the Lookup is synchronously connected to the input of the next lookup. This means that as soon as a flat data buffer in the pipeline (typically representing about 10,000 rows) completes in one Lookup, it is immediately available to the next Lookup to perform its work. So rather than the multiple lookups having to be performed in serial, the data is actually being performed in parallel, which gives a tremendous advantage to how SSIS can scale, and makes for a compelling case to use the Lookup transformation for dimension key lookups. Although not shown, it will become apparent later that while rows are still coming in from the source, transformed data is already landing to the destination.

Also of note in this example, the sales fact table contains a product dimension relationship. If you remember, the product dimension includes Type 2 historical dimensions. This means that the lookup cannot be performed by including the entire table into memory, because it would generate duplicates with the product dimension business key, ProductAlternateKey. Instead of just selecting the Use a table or a view reference table option, the reference lookup uses a SQL Query to define which records should be loaded. Figure 5-11 shows the Lookup transformation editor for the product dimension lookup.

Figure 5-11: Lookup transformation editor for the product dimension lookup

The lookup is configured to use the results of a SQL query, and the query entered into the text box is as follows:

SELECT ProductKey, ProductAlternateKey FROM DimProduct WHERE EndDate IS NULL.

Essentially, this configuration will assign the current surrogate key to the fact record. A NULL in the EndDate indicates that the dimension record is current, and the Lookup cache will, therefore, only be populated with the current records in the Product dimension, so the join across the business key will still yield the right current match.

The “Advanced Fact Table ETL Concepts” section later in this chapter examines three more complicated dimension Lookup scenarios:

-

A late-arriving fact scenario has fact source records arriving late in the ETL with the current fact records. When a dimension with Type 2 historical changes is involved in this scenario, the late transaction date may require looking up an outdated dimension record to get the most accurate dimension association. In other words, the surrogate key of a non-current dimension record may be required.

-

Large dimensions that don’t fit in the Lookup cache (which varies based on physical RAM availability) require special handling to optimize the Lookup process for fact ETL processing.

-

Inferred members, when the dimension member is missing, must be accommodated in scenarios when the data is dirty or the dimension member arrives late in the dimension table.

Using the Merge Join Transformation to Identify the Dimension Key

The Merge Join transformation presents another alternative to getting the dimension’s surrogate key for the fact table load. With the Merge Join transformation, the source records can be merged with the dimension table across the business key, which makes the surrogate key available. The Merge Join transformation allows three join types:

-

An inner join only brings across records that match from both sources. Any record without a match is discarded.

-

The left outer join allows rows from the left source input to flow through the transformation either with a matching record or without a match on the right input.

-

A full outer join brings rows from both the left and right, whether or not there is a match between sources.

If the source has the possibility of missing dimension records, then a left outer join should be used in the join with the source rows acting as the left input. A full outer join would not provide the right solution, since there may be many dimension records (the right input) that have no current match in the source, and, therefore, are not needed. Similarly, an inner join should not be used because it would filter out new dimension members that are in the source, but not yet in the dimension.

One aspect of the Merge Join to be careful of is the possibility of producing duplicates when the dimension source brings over too many matching records for a single business key. The Lookup transformation will ignore a duplicate record in the lookup reference table, while the Merge Join will create multiple records in the matching input based on the number of matches across the join columns.

The Merge Join requires sorted input. You can sort the data in the transactional source, or use a Sort transformation. The Sort transformation can also de-duplicate records based on the sort. If you have duplicates coming across from a set of keys, select the Remove rows with duplicate sort values check box in the Sort transformation editor.

Using the Fuzzy Lookup to Pull Dimension Keys Based on Similarity

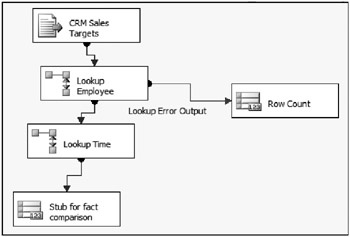

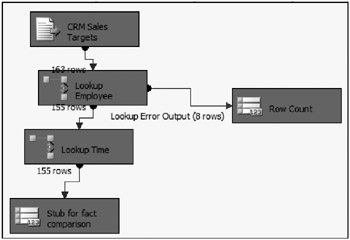

Using a Fuzzy Lookup transformation for dimension lookups provides greater flexibility when the source data does not match absolutely with the source. In some cases, the match to acquire the surrogate key will not be across a single business key, but rather a column or several columns that may not have exact matches. Since the Fuzzy Lookup matches on column value similarity, its use allows non-equal matches. For example, Figure 5-12 shows a data flow for the sales quota fact table.

Figure 5-12: Data flow for the sales quota fact table

The source, in this case, is coming from a flat file generated from the customer relationship management (CRM) system, which does not have a single matching key for the employee lookup. Figure 5-13 shows the Lookup editor on the Columns tab. Several columns are used in the join to return the surrogate key.

Figure 5-13: Lookup editor on the Columns tab

The challenge is that when this package is executed, several Employee dimension records do not return a match, even though the Employee record actually exists in the table. The difference is spellings and minor differences between the source and the dimension table itself. Figure 5-14 highlights the executed package, with the missing Employee matches sent to a Row Count transformation.

Figure 5-14: Executed package with the missing Employee matches sent to a Row Count transformation

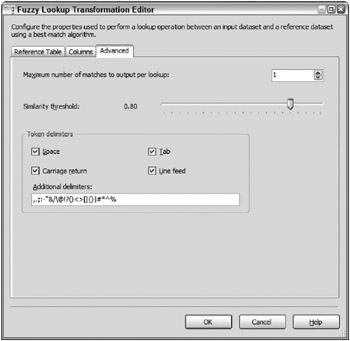

Rather than mark these eight fact records with an unknown employee surrogate key, the output can be pushed through a Fuzzy Lookup to pull the unmatched records. The Fuzzy Lookup columns mapping is identical to the Lookup configuration, but this transformation has advance options to configure the thresholds for the comparison. Figure 5-15 shows the options available on the Advanced tab of the Fuzzy Lookup transformation.

Figure 5-15: Options available on the Advanced tab of the Fuzzy Lookup transformation

The Similarity threshold, in this case, is set to 0.80 (or 80 percent), indicating that only employee matches will be identified that are over this threshold. Only the highest similar match is pulled, as indicated by the Maximum number of matches to output per lookup property set to 1. Although not shown, the Fuzzy Lookup outputs a confidence value, which identifies the overall possibility that the highest match selected is the right one. In other words, if multiple good matches are possible between the source and reference tables, the confidence value would be lower, because there would be some lack of surety on which match is the right one.

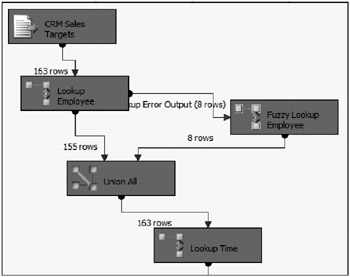

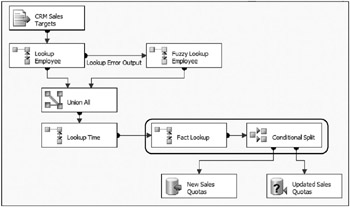

All said and done, the sales quota fact table package uses both the Lookup and the Fuzzy Lookup transformations to accomplish acquiring the dimension surrogate keys. A Union All transformation brings the records back together in the data flow from both the Lookup and the Fuzzy Lookup outputs. Figure 5-16 shows the completed data flow for the dimension lookups and the execution results.

Figure 5-16: Completed data flow for the dimension lookups and the execution results

All of the eight unmatched records for the employee dimension Lookup are now matched with the Fuzzy Lookup, and the rows are “union-ed” back into the pipeline for the ensuing transformations.

As an alternate approach, the Fuzzy Lookup could have been used in place of the Lookup transformation. However, for optimization purposes, the error row output was used. This is because the majority of records do match directly (which the Lookup handles efficiently), and only the missing rows are sent through the Fuzzy Lookup (which, behind the scenes, requires staging, indexing, and tempdb overhead to perform the matches). The general rule of practice is to separate the exception to the rule to handle the one-off situations, and then bring the data back into the main stream of processing.

Measure Calculations

Another major component of a fact table ETL load involves calculating measures. And, in most cases, calculating measures is relatively straightforward.

Measure Calculation Types

Calculating measures involves a few different types of transformations from the source:

-

No change from a source column- In the case where the source column matches one-to-one with the destination fact table column, the measure can be passed through form the source to the fact table without change.

-

Standard calculations-Oftentimes a measure will involve standard arithmetic calculations, such as dividing one column from the another, or determining the difference between two values. For example, the number of items in the sale record times the price of the item returns the transaction subtotal, or the subtotal sales divided by quantity provides the average price.

-

Derived calculations-In some cases, the measure must be derived in the ETL process. As mentioned in the introduction to this chapter, oftentimes a measure may simply be the value 0 or 1, based on certain conditions in the source feed. An example of this is an occurrence measure, where the measure indicates the happening of an event (1), or the non-happening of the event (0). Such measures are usable in additive scenarios when aggregating to determine the number of occurrences of the event.

-

Advanced calculations-In rare cases, a calculation is required that goes beyond simple math or derivation, such as aggregating a subtotal of a group of records, divided by a part, or using advanced algebraic expressions, or parsing out values in a string.

Handling Measure Calculations in SSIS

The good news is that measure calculations are relatively straightforward in SSIS. Here are a few methods for handling the calculations:

-

Using a Derived Column transformation to perform the calculation with SSIS expressions- SSIS provides many standard mathematical functions to perform calculations from associated columns in the same row of data.

-

Including the calculation in the source query- If the source pull is a relational database, as opposed to a flat-file extraction, the calculation expression can be handled inline in the SQL statement. This typically has low impact on the source system, as long as the query is straightforward.

-

Using an aggregate to handle the calculation- In the case where the measure is a SUM, MAX, MIN, or COUNT of a grouping of source rows, the Aggregate can perform this functionality. Combining the Aggregate transformation with an ensuing Derived Column transformation will enable both aggregates and calculations based on the aggregates.

| Important | Leverage the data flow Script Component as a transformation to handle advanced calculations. The Script Component unlocks a full-featured Visual Basic.NET environment, which can take advantage of many more functions and the ability to compare values across rows of data. When dealing with more advanced situations, consider the Script Component to provide that extended capability beyond what the SSIS expression language can provide. |

To exemplify measure calculations, the sales fact table load package referenced earlier requires some common calculation. Figure 5-17 shows an updated data flow with a Derived Column transformation added as the next in-line transformation after the dimension lookups.

Figure 5-17: Updated data flow with a Derived Column transformation

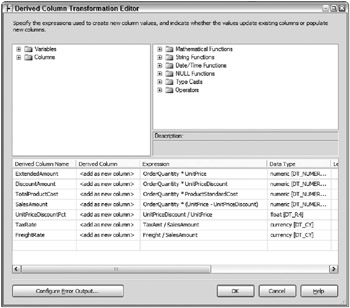

Because the Derived Column transformation also contains a synchronous output, the calculations contained within will be handled in parallel with the upstream lookups and the next downstream transformation. In the Sales fact table process, seven calculations are required. Figure 5-18 shows the Derived Column editor, which contains the calculation logic.

Figure 5-18: Derived Column editor containing the calculation logic

As you can see, these calculations use common mathematical equations that generate the needed output for the destination measure. The ExtendedAmount involves multiplying the OrderQuantity times the UnitPrice. The UnitPriceDiscountPct involves dividing the UnitPriceDiscount by the UnitPrice.

In summary, measure calculations are often easy to handle in SSIS. But when the situation requires more advanced computations, SSIS also provides the Aggregate transformation and the Script Component.

Managing Fact Table Changes

At times, a matching fact table record in the source may change, which, therefore, needs to propagate through to the fact table. To handle the change, a few different data warehousing approaches are common:

-

A simple UPDATE to the fact table to modify the existing record with the change

-

A newly generated record in the fact table with the new values, and a flag in the table to indicate the status of the record (current or expired)

-

An offset change record that calculates the difference between what was in the fact record and the new value

Each approach comes with its advantages and disadvantages, including the following:

-

UPDATE changes can be very expensive in large scenarios

-

Adding a second record can increase the fact size

-

Change records are not possible with non-additive measures

The right approach is situational. Since the goal of this book is applying SSIS to ETL, the right fact table design choice is left for other forums. However, to enable you to deal with the design, this discussion will focus on the common aspect of identifying changes, and, also, how to best perform fact table updates when required in the ETL.

Approaches to Identifying Changed Fact Records

If you are lucky, the source feed will include a way to identify whether the record requires a change or a new fact row. For example, a source column may provide the Data Manipulation Language (DML) type. DML is the cause for the row being sent to the ETL, commonly a value of I (insert), U (update), or D (delete). Alternatively, the source feed may include both a create date and a modified date. Comparing these dates together in conjunction with the last incremental change identifier (as discussed in Chapter 3) will allow the identification of the row as a new row or a modified row.

| Important | A new row would be when the creation date is greater than the last incremental change identifier date. A modified row would be when the create date is less than the incremental extraction date, and the modified date is greater than the last incremental extraction date. |

In both of these cases, the source records do not need to match the source rows to existing records in the fact table. Unfortunately, when a fact source goes through changes, most cases will not provide this capability, and the source row will need to be matched with the fact row to identify the DML type of the record.

Just like the other aspects of dimension and fact ETL, two general categories exist to associate source rows with fact rows in SSIS:

-

Database joins between the source data and the fact table- These enable the comparison of the source to the fact table. This approach comes with several drawbacks. For starters, it requires the source data to be in a table in the same database engine and on the same server as the fact table in order to perform the join efficiently. If the source data is already on the same server, then the join can be performed. However, it will impact the source database and create potential locking issues when the comparison is run (and the fact table will also be impacted during the expensive join operation). If the source data is on a different system or is in a flat-file form, then the data needs to be staged, which generates more disk IO and creates a multi-step process. On the other hand, fact tables often contain millions of rows and would consume tremendous memory consumption if brought completely into the SSIS pipeline buffers. When dealing with large volumes, an RDBMS can provide invaluable help in scaling an ETL solution. See the section, “Handling the Fact Updates, Inserts, and Deletes,” later in this chapter for more on leveraging the RDBMS to handle comparisons.

-

Data correlation with the data flow- As mentioned before, two primary data transformations exist that assist in associating sources together: the Lookup transformation and the Merge Join transformation. Using these, the source feed can be correlated with the fact table, which is the first step in processing changes. The general benefit of these transformations is that the source feed can come from any source system or files, and still be joined to the fact table. The Lookup will have more memory overhead when the fact table is fully cached. However, the Merge Join requires the inputs to be sorted, which would use just as much memory as the Lookup if the Sort transformation is used (because of the Sort’s data blocking nature). However, sources can be marked as pre-sorted, which gives the Merge Join an advantage when the source can be sorted efficiently.

Here is an example: The sales quota fact source doesn’t have the capability of identifying whether a source record is a change or a new fact record. Furthermore, the source does not provide a modified date column to filter the extraction. Therefore, the source feed will include non-changed rows along with changed, new records, and deletes.

To handle this example, two data flow methods are presented:

-

The Lookup transformation

-

The Merge Join transformation

Using the Lookup to Identify Fact Change Records

Using the Lookup approach, Figure 5-19 shows the sales quota fact data flow, which includes the dimension key lookups and a new Lookup transformation for the fact table. A Conditional Split transformation immediately follows the Lookup transformation.

Figure 5-19: Sales quota fact data flow

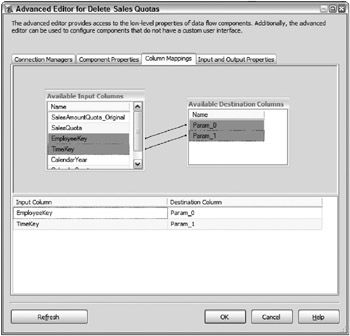

The Lookup transformation is configured with the fact table as the reference table, and the dimension keys that make up a unique record (the EmployeeKey and TimeKey) are joined with the corresponding fact table keys. Figure 5-20 shows the Columns tab of the fact table Lookup transformation.

Figure 5-20: Columns tab of the fact table Lookup transformation

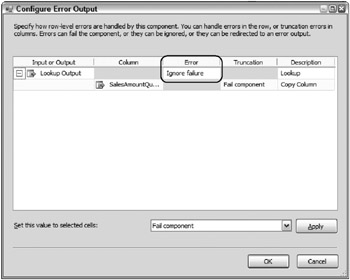

The Lookup is configured to pull back the SalesAmountQuota measures, aliased as SalesAmountQuota_Original to indicate that it came from the fact table. This measure will be used in the next step. The Lookup is also configured to ignore failure in the event of an error. Figure 5-21 shows the Configure Error Output editor of the Lookup transformation.

Figure 5-21: Configure Error Output editor of the Lookup transformation

Ignore failure means that, when the Lookup does not find a match, the record will still be sent down the Lookup output path, but the returned column(s) will be NULL. In this case, the SalesAmount Quota_Original will be marked as NULL when a match from the fact table does not exist.

The Conditional Split will now provide the DML identification of the records. Figure 5-22 shows the Conditional Split editor, which has three outputs defined.

Figure 5-22: Conditional Split editor with three outputs defined

The Conditional Split is like a CASE statement in that the criteria are checked in order until the first TRUE is reached in the WHEN condition. The first condition evaluated, called Sales Quota New, determines whether a match has been found in the fact table. The condition, ISNULL(SalesAmountQuota_Original) evaluates the returned column in the fact lookup to see if the value is NULL. In this case, a NULL indicates that the Lookup did not find a match and, therefore, the record must be inserted into the fact table. The SalesAmountQuota column in the fact table is defined as NOT NULL, thereby ensuring that a NULL in the SalesAmountQuota_Original indicates a non-match.

The second condition, called Sales Quota Change, checks the value of the measures between the source and the fact table. The output of this Boolean expression, SalesQuota != SalesAmountQuota_Original, will evaluate to TRUE if the measures do not match. When this is the case, the value has changed and needs updating in the fact table (or a change record needs to be generated).

The final condition is merely the default output, called Sales Quota No Change. With the Lookup approach, if the first two criteria do not apply, then by default there is no change. This is because the sales quota example pulls all the records from the source and, in many cases, a change hasn’t happened.

Following are a couple of drawbacks that inhibit using the Lookup for many cases:

-

Since the Lookup cache has a practical memory limitation, the fact volume may not fit in the private Lookup cache. This is accentuated by the nature of fact tables, which are commonly large.

-

The solution does not handle deletes. Deletes are rarer with fact processes, so this limitation may not apply to your situation. The lookup rows in cache are only used if the input to the Lookup contains a match to the reference table. Therefore, there is no way to know which fact row in the Lookup cache was not used and should be deleted.

Using the Merge Join to Identify Fact Changes

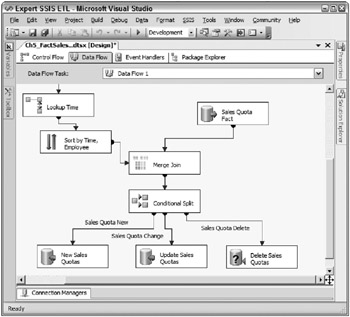

Before discussing the update and insert process, an alternate approach for identifying fact changes involves the Merge Join transformation. Figure 5-23 shows the identical fact ETL process, sales quota fact, only with a Merge Join used to correlate the fact source rows to the fact rows.

Figure 5-23: Sales quota fact with a Merge Join

This data flow uses a combination of the Merge Join, the Sort, and the Conditional Split transformations. The Merge Join requires two sorted inputs, and, in this case, the source rows are sent through the Sort transformation (sorted by TimeKey and EmployeeKey) as the first input. The second input brings the fact rows into the Merge Join, only the OLE DB source is pre-sorted with an ORDER BY statement and configured as pre-sorted.

Figure 5-24 shows the Merge Join editor configured as a Full outer join type to bring across both matching and non-matching rows from both inputs.

Figure 5-24: Merge Join editor configured as a Full outer join type

The keys and measures are brought across from the source input, along with the SalesAmountQuota from the fact table, aliased as SalesAmountQuota_Original to distinguish it from the source (just like the Lookup approach). The next component, a Conditional Split, identifies the row DML type: no-change, new, deleted, changed. Figure 5-25 shows the Conditional Split editor.

Figure 5-25: Conditional Split editor

The only difference from the previous Conditional Split reviewed with the Lookup approach is that a new condition has been added as condition 2. When the condition ISNULL(EmployeeKey) evaluates to TRUE, then the row exists in the fact table, but was deleted in the source. This Employee Key comes from the employee Lookup transformation and is used to join the source rows to the fact table. Therefore, a NULL indicates the row must be deleted in the fact table.

Overall, this approach allows identifying all types of row changes-new, changed, no change, and deleted. Because source adapters can be pre-sorted, this solution will scale with larger fact volumes. Typically, an index will exist on the columns used in the join pattern of the Merge Join. Be sure to evaluate the query plan, and, if possible, tweak the index to avoid table scans with the ORDER BY added to the fact query.

| Important | Don’t forget about historical dimension changes. If you had a historical dimension change, you can’t always compare across surrogate keys, because the surrogate key might have gone through a change. Otherwise, you may have duplicated data in the fact table. |

Handling the Fact Updates, Inserts, and Deletes

The final step in the fact ETL is to load new records and handle updates, if necessary. Data inserts are relatively straightforward and will be handled with a destination adapter configured to load the rows into the fact table. Chapter 12 discusses scaling data loads and how to choose and configure the destination adapters.

Updates and deletes present a more difficult challenge. With SSIS, two primary options exist for updating and deleting rows in a table from data in the data flow:

-

Using an OLE DB Command transformation, rows flowing through the pipeline can be mapped to RDBMS statements. In other words, for every row flowing through, the column values can be used to call a statement such as an UPDATE or DELETE statement or a stored procedure.

-

Pipeline data can be staged to a table in the same RDBMS, which can be used to perform a set-based UPDATE or DELETE.

Figure 5-23, highlighted earlier, shows the sales quota fact data flow with components added to handle inserts, updates, and deletes.

For the sales quota source records that are new records, an OLE DB Destination has been chosen using the SQL Native Client. The destination adapter has been named New Sales Quotas. The output from the Conditional Split to handle deletes is called Delete Sales Quotas. Because these deletes only account for a small percentage of rows in this example, an OLE DB Command has been chosen. In other words, not many rows are coming through the Delete Sales Quotas output; therefore, the row-by-row deletes will be handled fine without any major performance penalty by using an OLE DB Command. The SQLCommand property of the OLE DB Command contains the DELETE statement, as shown here:

DELETE FROM dbo.FactSalesQuota WHERE EmployeeKey = ? AND TimeKey = ? Remember, the OLE DB Command uses a parameterized OLE DB statement. The question marks are parameters in the statement mapped to columns and rows in the pipeline. Figure 5-26 shows the Column Mappings tab of the OLE DB Command editor.

Figure 5-26: Column Mappings tab of the OLE DB Command editor

The two question marks in the SQL statement are mapped to EmployeeKey and TimeKey in the DELETE output.

| Important | Performing an update or delete with the OLE DB Command transformation and, at the same time, inserting into the same table in the same data flow may cause locking issues. Be sure to turn off the table lock in the data flow destination used. If locking still becomes an issue, fast load may need to be turned off, in which case, it may be better to use a set-based approach for the OLE DB Command operation, such as the update output will demonstrate next. |

The final Conditional Split output requires rows to be updated in the fact table. Updates are more common in this scenario, so rather than using the OLE DB Command transformation, the rows are staged to a table called stgFactSalesQuota_Updates through a second OLE DB Destination adapter named Update Sales Quotas.

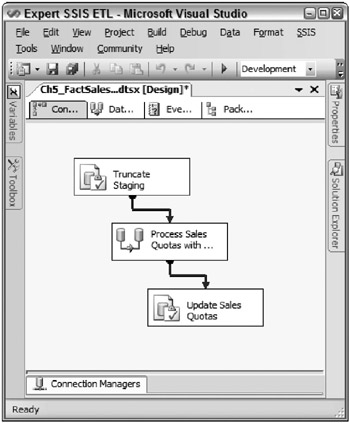

Two additional control flow steps are needed to complete the set-based update. Figure 5-27 shows the completed control flow tasks.

Figure 5-27: Completed control flow tasks

The first Execute SQL Task, which runs before the data flow, clears out the rows in the staging table with a TRUNCATE TABLE statement. Rows are typically preserved in the staging table until the next ETL run to allow auditing and troubleshooting. This is why the TRUNCATE statement happens before the data flow, as opposed to after the UPDATE statement.

The UPDATE statement happens after the data flow since the new staged records are added to the staging table in the data flow, as already shown. The Execute SQL Task used for the update performs a SQL UPDATE statement with the staging table joined to the fact table with the new measure value being handled in the update, as the following code shows:

UPDATE dbo.FactSalesQuota SET SalesAmountQuota = STG.SalesQuota FROM dbo.FactSalesQuota INNER JOIN SSISOps.dbo.stgFactSalesQuota_Updates STG ON FactSalesQuota.EmployeeKey = STG.EmployeeKey AND FactSalesQuota.TimeKey = STG.TimeKey Essentially, this is a very similar process used to scale dimension updates as discussed in Chapter 4.

EAN: 2147483647

Pages: 111