Creating a Custom Slowly Changing Package

After working with a couple of clients, it became obvious that the built-in SCD transformation would handle most, but not all, dimension processing situations, and its limitations have been pointed out earlier.

The question becomes: “How can we build a dimension ETL package that mimics the functionality of the built-in SCD support, but scales to handle high volumes?”

If you are now comfortable with the SSIS data flow features and the SCD concepts covered thus far, then the answer will be surprisingly easily. Before laying out a couple of variations, consider the different aspects of the dimension process:

-

Data correlation between the source records and the dimension table

-

Comparisons between the column values from the source and the dimension tables

-

Updates to handle Type 1 changing records and inferred members

-

Inserts to handle Type 2 historical changes and new dimension members

When considering the built-in SCD support, the SCD transformation (not including the downstream transformations) handles the first two aspects listed here. So, our objective first is to re-create this process by using out-of-the-box features.

Joining Source Data to Dimension Data

The first thing you want to do is correlate the data, comparing the source to the dimension. Within SSIS, there are two primary transformations that can help you correlate data: the Lookup transformation and the Merge Join transformation.

In both cases, you must use the business key in the source and associate it with the current record in the dimension. When you join those records together, you can then compare the different attributes to see if there are changes.

-

Using a Lookup transformation, the dimension table can be fully cached, which will limit the RDBMS impact as opposed to the row-by-row lookup approach of the SCD transformation. However, on a 32-bit server with 4GB of memory, the Lookup cache will max out in the neighborhood of 2 to 6 million dimension members in the cache, depending on the availability of system memory, the data type of the surrogate key and business key, and the number of columns needed to be returned by the lookup for comparison.

-

A Merge Join transformation will allow the dimension members to be joined with source records. When the source or dimension can be pre-sorted, this approach will allow greater memory scalability. Instead of keeping everything in cache, once a key match occurs, the row is sent downstream and no longer held up by the Merge Join. This approach requires a Left outer join, taking rows from the source on the left side, and the dimension on the right side, and merging across the business key.

Step B involves comparing the columns from the source to the dimension, and could be handled with a Conditional Split transformation.

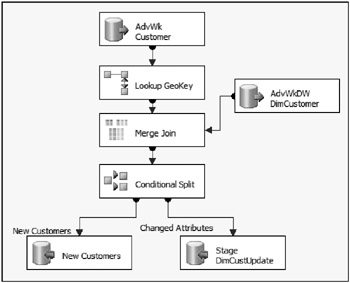

Figure 4-41 shows the sample data flow for the customer dimension.

Figure 4-41: Data flow for the customer dimension

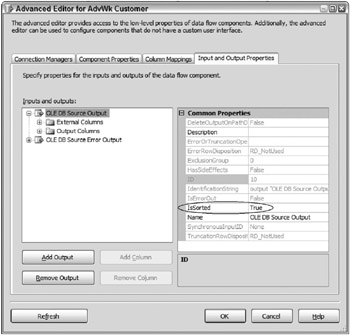

On the left, the customer source records are extracted (along with a Lookup to acquire the geography surrogate key), and on the right, the customer dimension records are pulled. Both of these sources are joined together with a Merge Join. As you recall, the Merge Join requires that the data sources are sorted. However, neither input is sorted using the Sort transformation. Rather, these sources use a SQL ORDER BY statement in the source adapter, sorting by the business key. To accomplish this, the Advanced Editor allows the sources to be flagged as pre-sorted. Figure 4-42 shows that the IsSorted property is set to True on the OLE DB Source Output of the dimension table source.

Figure 4-42: IsSorted property is set to True on the OLE DB Source Output of the dimension table source

To be sure, the IsSorted property is only a flag. It does not perform any actual sorting itself. It is merely a contract by the developer to the component to state that it should “trust this source is already sorted.”

The second required step to mark a source to be pre-sorted is to drill into the Output Columns folder in the same window, and change the SortKeyPosition (not shown) of the appropriate sorted column to an integer that delineates which columns are sorted in which order (negative indicates descending).

As a reminder, both inputs into a Merge Join need to be sorted on the join column in order for the Merge Join to validate. Since both sources have been marked as pre-sorted, the Merge Join will recognize the sort columns. Figure 4-43 shows the Editor window of the Merge Join transformation.

Figure 4-43: Editor window of the Merge Join transformation

The Left outer join Join type will allow the new source records to be identified. Notice also that the attribute columns are selected on both inputs. This is to allow the comparison of the columns in the next step. Because many of the column names are repeated, the columns from the right input (the Dimension table) are prefixed with a DW_.

Determining Data Correlation Approach

Before continuing with the review of this custom SCD package, here are a few considerations when determining what SSIS approach should be used to correlate data in the data flow. These approaches employ different uses of the Merge Join and Lookup transformation for bringing source and dimension data together.

-

Merge Join with Sort- The Merge Join requires the sources be sorted on the joining keys. You could use a Sort transformation in each source before the Merge Join. However, this approach requires a lot of memory because the Sort must encapsulate all of the records and then sort them before sending the rows downstream.

-

Merge Join with Presorted Input- Relational engines do a great job of sorting data because, in many cases, the join keys in the source are already indexed. If this is the case, use a simple ORDER BY statement in the source, and then tell the source adapter that the input is sorted (the approach identified in the last section).

-

Fully cached Lookup- The Lookup transformation allows full cache, partial cache, and no cache. Partial and no cache don’t scale well since database calls are expensive. However, if your dimension table size is manageable (less than a few hundred thousand members), then caching the dimension table in memory will provide great performance without the data sources having to be sorted.

-

Filtered Cache Lookup- If you have millions of rows in your dimension table and are able to target only new and changed records during the dimension source extract, one option is to land the business keys from those dimension tables in a staging table, then use that staging table to filter the lookup itself. This will allow all the needed records to be loaded into the Lookup cache, but not the entire dimension table.

On a 64-bit server, the fully cached Lookup scales very well. The benefits of 64-bit are in both the size of the cache (millions of records) and the ability to load the records into cache much faster than a 32-bit environment.

Determining Dimension Changes

Now that the dimension source has been joined with the dimension table, the records now need to be routed to the appropriate output for the dimension change handling. As Figure 4-41 shows, the next step after the Merge Join is a Conditional Split, which will help determine where the records should go.

The Customer dimension in AdventureWorks contains only Type 1 changing attributes, which simplifies this example. Therefore, only new members and changes need to be accommodated. The comparison is handled through the Conditional Split transformation, the editor shown in Figure 4-44.

Figure 4-44: Conditional Split transformation editor

As you can see, the first criterion evaluated is whether the business key is NULL in the dimension table, ISNULL(DW_CustomerAlternateKey). If it is NULL, then this indicates the dimension record does not exist, and, therefore, the source record is New Customers as the output name indicates.

The second criterion is to determine whether a change has happened in any of the attribute columns, using an SSIS expression, as shown in the following code:

(ISNULL(GeographyKey) ? 1 : GeographyKey) != (ISNULL(DW_GeographyKey) ? "" : DW_GeographyKey) || (ISNULL(Title) ? "" : Title) != (ISNULL(DW_Title) ? "" : DW_Title) || FirstName != DW_FirstName || (ISNULL(MiddleName) ? "" : MiddleName) != (ISNULL(DW_MiddleName) ? "" : DW_MiddleName) || LastName != DW_LastName || BirthDate != DW_BirthDate || MaritalStatus != DW_MaritalStatus || (ISNULL(Suffix) ? "" : Suffix) != (ISNULL(DW_Suffix) ? "" : DW_Suffix) || Gender != DW_Gender || EmailAddress != DW_EmailAddress || TotalChildren != DW_TotalChildren || NumberChildrenAtHome != DW_NumberChildrenAtHome || HouseOwnerFlag != DW_HouseOwnerFlag || NumberCarsOwned != DW_NumberCarsOwned || AddressLine1 != DW_AddressLine1 || (ISNULL(AddressLine2) ? "" : AddressLine2) != (ISNULL(DW_AddressLine2) ? "" : DW_AddressLine2) || Phone != DW_Phone || DateFirstPurchase != DW_DateFirstPurchase || CommuteDistance != DW_CommuteDistance The Logical OR operator (||) allows each column to be evaluated for changes. The ISNULL function helps to perform the comparison in the case that both of the values are NULL, which evaluates as not equal. In other words, if a change happens in any column, then the entire expression evaluates as TRUE, and the record is identified as meeting the Changed Attribute output.

Finally, if a row does not meet either of these criteria, then it is consider as not having changed, and therefore the Default Output is called No Changes.

You can also use the script component to do the conditional comparison, as it might be easier when dealing with a large number of columns or more complicated logic. The Conditional Split expression box does not allow a secondary editor window to generate more complicated expressions. Therefore, a Script Component would be potentially cleaner.

Handling Dimension Inserts and Updates

If a change exists or a new record needs to be created, the final step in the dimension table ETL is to handle inserts and updates.

The SCD Wizard approach also handles inserts and updates by using a series of OLE DB Command transformations and OLE DB Destinations. When considering how to deal with changes in a custom dimension process, the same options are available:

-

Dimension Updates- Updates for Type 1 changing attributes and inferred members can be handled by the OLE DB Command transformation, or a set-based update approach, which would leverage a staging table and an Execute SQL Task, as described earlier in this chapter.

-

Dimension Inserts- Inserts should use an OLE DB Destination adapter or other destination that optimizes the inserts. If updates are handled through a staging table approach, the OLE DB Destination can be configured with fast load support. Otherwise, database table locking contentions can happen if the updates are happening at the same time as the inserts.

Continuing with the previous example, a set-based approach is taken to handle the updates. Therefore, the output of the Conditional Split requires two OLE DB Destination adapters, as Figure 4-41 earlier demonstrated: one for the dimension inserts and a second for the staging table inserts.

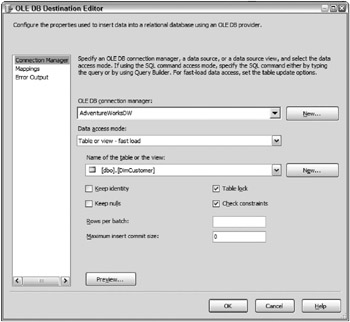

Since the ETL process controls the data in the dimension table and the updates will not be happening at the same time, a Fast Load with a Table Lock is used to handle the inserts. Figure 4-45 shows the OLE DB Destination adapter editor for the dimension inserts.

Figure 4-45: OLE DB Destination adapter editor

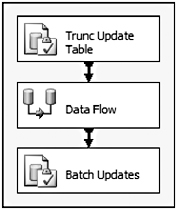

When it comes to the dimension updates, after the rows requiring an update are loaded to the staging table, the set-based update can be performed. Figure 4-46 shows the control flow of the Customer package, which ends in an Execute SQL Task to perform the update, called Batch Updates. This control flow also begins with a truncation statement to clear out the same staging table used for the updates.

Figure 4-46: Control flow of the Customer package

The update itself is formed by joining the dimension table with the staging table, and replacing the values in the staging table with the current values in the dimension table.

UPDATE AdventureWorksDW.dbo.DimCustomer SET AddressLine1 = stgDimCustomerUpdates.AddressLine1 , AddressLine2 = stgDimCustomerUpdates.AddressLine2 , BirthDate = stgDimCustomerUpdates.BirthDate , CommuteDistance = stgDimCustomerUpdates.CommuteDistance , DateFirstPurchase = stgDimCustomerUpdates.DateFirstPurchase , EmailAddress = stgDimCustomerUpdates.EmailAddress , EnglishEducation = stgDimCustomerUpdates.EnglishEducation , EnglishOccupation = stgDimCustomerUpdates.EnglishOccupation , FirstName = stgDimCustomerUpdates.FirstName , Gender = stgDimCustomerUpdates.Gender , GeographyKey = stgDimCustomerUpdates.GeographyKey , HouseOwnerFlag = stgDimCustomerUpdates.HouseOwnerFlag , LastName = stgDimCustomerUpdates.LastName , MaritalStatus = stgDimCustomerUpdates.MaritalStatus , MiddleName = stgDimCustomerUpdates.MiddleName , NumberCarsOwned = stgDimCustomerUpdates.NumberCarsOwned , NumberChildrenAtHome = stgDimCustomerUpdates.NumberChildrenAtHome , Phone = stgDimCustomerUpdates.Phone , Suffix = stgDimCustomerUpdates.Suffix , Title = stgDimCustomerUpdates.Title , TotalChildren = stgDimCustomerUpdates.TotalChildren FROM AdventureWorksDW.dbo.DimCustomer DimCustomer INNER JOIN dbo.stgDimCustomerUpdates ON DimCustomer.CustomerAlternateKey = stgDimCustomerUpdates.CustomerAlternateKey In this case, since the Customer dimension only contains Type 1 changing attributes, every non-key attribute is updated. In the case where Type 2 historical attributes are involved, the UPDATE statement would only target the Type 1 changing attributes and leave any changes in the Type 2 historical attributes to an insert.

When this package is run with a large volume, the differences will be stark compared to the built-in SCD Wizard. A customer dimension with hundreds of thousands or millions of records will process in a fraction of the time that the SCD would take, given how the SCD works.

EAN: 2147483647

Pages: 111