Incremental Data Extraction

Incremental extraction is the process of targeting modified records and new records, and then tracking the last completed extraction. For example, as you process rows, you might track the last modified datetime value or last identifier for the rows that you’ve pulled. Then, the next time you extract from the same source system, you start where you left off, rather than extracting the entire process batch.

How you do incremental extraction depends on the source system design. In fact, certain source systems do not initially provide a mechanism to target changes and new rows. In these cases, sometimes you have the flexibility to make changes on the source system, sometimes you don’t. SSIS can handle both scenarios.

Here are a few of the many ways that you may be able to identify changed source records:

-

Use a modified date or created date column from a database source- These are called change identifier columns. In fact, many transactional systems already have change identifier columns that can be used for an incremental extraction. This is probably the most common approach to incremental extraction.

-

Use an auto-incrementing identifier- If the source system doesn’t have a modified date or created date, there may be an auto-incrementing column acting as a change identifier, which increases every time a row change happens. You can also use this approach to identify changed records.

-

Use an audit table- Some source systems already have or allow a trigger (or similar mechanism) to capture the changes to an audit table. An audit table may track keys for that source table or the details of the change. However, this approach involves overhead, because triggers are expensive.

-

Log-based auditing- Some database servers provide a log reader–based mechanism to automatically track changes in a similar way to the trigger-based mechanism, but with a much lower overhead. These log-based systems are often called Change Data Capture features, and in order to take advantage of this, you need a source system that supports these features.

Beyond these options, other scenarios may involve combinations or unique solutions. For example, a few years ago we worked with a client that allowed us to add a column (defaulted as NULL) to the source table, but we were not allowed to add a trigger to update that column on a transaction-by-transaction basis. However, we were allowed to batch-update this column during our extraction window. On a nightly basis, we would update the new column with a datetime value where the value had been NULL. Then we would extract the records that matched the new datetime value, and not worry about pulling the same record each extraction.

For the worst-case scenarios, if you do not have the ability to target changed rows, and the source system cannot be changed (or if your source is a flat file), then you will have to use SSIS to identify the new or changed records. This section reviews the case where the source can be filtered and where SSIS needs to handle the filter.

| Important | It’s important to understand the CRUD (Create, Read, Update, and Delete) operations for the source system. For example, an application might handle the Modified DateTime field. If other processes update rows (such as a batch system running at night), ensure that the source system is updating the Modified DateTime. If not, you may be missing rows that the source system needs to update. That can be a problem. In any warehouse design, the biggest risk is not having your end users trust the data because you have incorrect data. If users don’t trust it and find bugs, then they don’t use the system and the project fails. |

Incremental Extraction Using a Change Identifier Value

If your source tables contain a change identifier value (such as a last modified datetime), or you are able to make small changes to your source tables to allow this, then the process in SSIS will involve three steps:

-

Targeting the changed records by making the source query dynamic. This process involves package variables and two methods in the data flow adapters.

-

Retrieving the incremental extraction values and updating package variables.

-

Capturing the last or maximum value of the change identifier value, so that the value can be tracked and used for the next extraction.

In addition to reviewing some standard approaches to handling this common scenario, this discussion examines an alternate approach if your source system is SQL Server. This approach does involve adding a column to the source tables. However, it doesn’t require a table trigger or application logic to update the column value, and, therefore, can add some significant benefits without the overhead common to change identifiers. This approach involves using the SQL Server timestamp data type, and is discussed after the three aforementioned steps are examined.

Targeting Change Records Through Dynamic Source Queries

The core requirement when attempting to only pull a selected subset of data from a source is the ability to make the query change based on parameters. In the case of an incremental identifier value (such as last modified date), the goal would be to target the source table for the extraction by filtering on the last modified date. Using SSIS, a couple approaches are possible that use properties of the source adapter.

-

Using an OLE DB source adapter may allow a parameterized query, where the query can reference package variables to filter the query.

-

The OLE DB adapter also allows the source table name or query to be embedded into a package variable. The adapter can then point to the package variable and use its contents value as the source text of the query.

OLE DB Parameterized Source Query

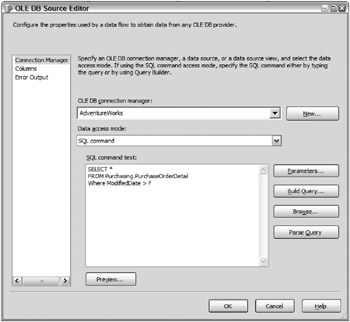

An OLE DB parameterized source query has been reviewed in the Professional SSIS book, and is the method that the Chapter 1 step-by-step refresher used to target records from a source. It simply involves setting the source adapter Data access mode properties to SQL command and then using the parameterized method of the adapter to define the parts of the statement that should be based on the parameters. Figure 3-15 shows the OLE DB Source Editor with a parameterized query.

Figure 3-15: OLE DB Source Editor with a parameterized query

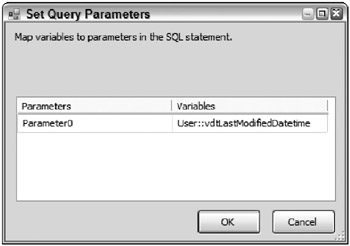

The second requirement of the parameterized query is to map the parameters to package variables, which is done using the Parameters button on the same screen. This example simply maps a package variable called vdtLastModifiedDatetime to the first parameter, as Figure 3-16 shows.

Figure 3-16: Mapping a vdtLastModifiedDatetime package variable to the first parameter

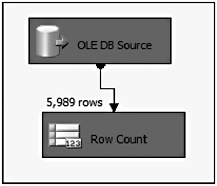

OLE DB parameters are 0 based, which means that the first ? in order is defined as the 0 parameter, the second ? in order is the 1 parameter, and so forth. Note that when you use parameterized queries, the statement cannot be parsed (using the Parse Query button) or previewed (using the Preview button). Therefore, to test the parameterization, the data flow must be executed. Figure 3-17 shows the executed data flow.

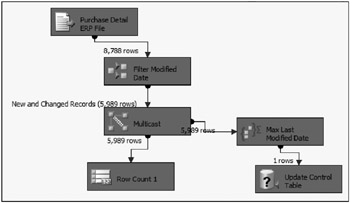

Figure 3-17: Executed data flow

A Row Count transformation is merely used as a development technique-a dummy destination to build and test the source adapter. The vdtLastModifiedDatetime variable has been defaulted to '1/1/2004', which caused 5,989 rows out of the 8,788 rows to be extracted. The next section discusses how to both update and retrieve the right variable value.

Variable Bound SQL Source

One major drawback to the OLE DB parameterized source query is that many of the non-SQL Server OLE DB source providers do not support parameterization. Therefore, an alternate approach will be needed that is also supported by the same source adapter.

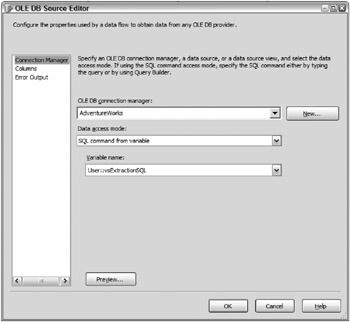

The OLE DB provider also allows a source query to be based on the contents of a package variable. This is different from the parameterized source query in that the entire SQL statement is entered into the variable, rather than just the filter values. To exemplify, a string variable has been added to a package called vsExtractionSQL, and it contains the following SQL code:

SELECT * FROM Purchasing.PurchaseOrderDetail WHERE ModifiedDate > '1/1/2004' The OLE DB source adapter is defined with the Data access mode property set to SQL command from variable, and the Variable name property is set to User::vsExtractionSQL, as shown in Figure 3-18.

Figure 3-18: Defining the OLE DB source adapter

Alternately, if the variable only contained the name of the table or view, you could select the Table name or view name variable option in the Data access mode property. However, by only selecting a view or a table, you would not be able to filter the rows on the source.

Unlike the OLE DB parameterized statement, using this variable-bound feature, the parsing and previewing feature in the editor works, and when this data flow is executed, the results are identical to Figure 3-17, shown previously. The next section reviews how to update the variables.

Retrieving Incremental Identifier Values and Updating Package Variables

The first step showed how to enable the data flow to be able to run a targeted extraction, and this section reviews how to update the package variables that drive the targeted extraction. Both the OLE DB parameterized approach and the variable-bound source approach need variable values updated in order to extract the correct batch of data.

As an example, let’s first update the vdtLastModifiedDatetime column, which is the only variable update that the OLE DB parameterized approach requires. Second, let’s look at a couple of methods to update the vsExtractionSQL variable with the right datetime value from the vdtLastModifiedDatetime variable.

The current incremental identifying column value needs to be stored so that each ETL run can use a new value for the extraction. This can be done in various ways (such as keeping it in a file), but the most common way is to store the value in a database control table that contains only the extraction information. For the Last Modified Date example, a table named cfgIncrementalExtraction has been created with two fields with one entry row, which looks like the following.

| SourceTable | LastModifiedDatetime |

|---|---|

| PurchaseOrderDetails | 1/1/2004 |

The SourceTable column simply defines the source object, in case multiple configuration entries are made. The LastModifiedDatetime column contains the value of the last successful extraction.

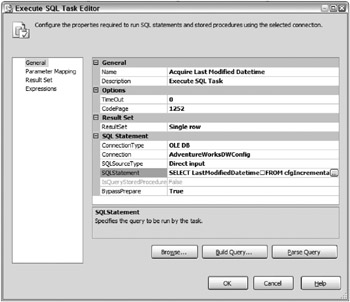

Therefore, the first step is to retrieve the last extraction value and update the vdtLastModifiedDatetime column variable. To do this, the easiest way is to use an Execute SQL Task. Figure 3-19 shows the editor of an Execute SQL Task.

Figure 3-19: Editor of an Execute SQL Task

The SQLStatement executes the following query against the table defined previously:

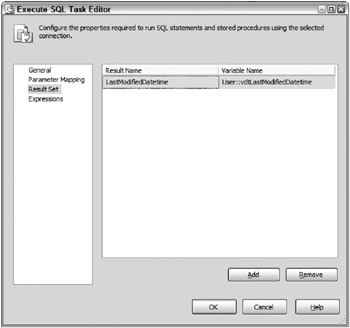

SELECT LastModifiedDatetime FROM cfgIncrementalExtraction WHERE SourceTable = 'PurchaseOrderDetail' The statement returns a single row where the resulting columns must update the variable. The ResultSet property of the task shown in Figure 3-19 has been set to Single row, and on the Result Set page of the editor, the LastModifiedDatetime column is mapped to the vdtLastModifiedDatetime variable, as Figure 3-20 highlights.

Figure 3-20: LastModifiedDatetime column mapped to the vdtLastModifiedDatetime variable

As an alternate approach, you could use the SQL logic to acquire the value that then could be embedded into a stored procedure and an output variable.

For the variable-bound source adapter method, a second step is required to update the vsExtractionSQL value for the variable-bound source adapter. In fact, two SSIS methods are available. The first is to have the variable updated by an expression, and the second is to use a Script Task to perform the update.

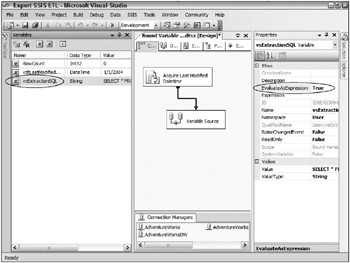

Variables have a little-known property called EvaluateAsExpression, which, by default, is set to false. When this is set to true, then an expression can be written that is evaluated any time the variable is read. Figure 3-21 shows that the EvaluateAsExpression property is viewable in the Properties window, when the variable is selected in the Variables window.

Figure 3-21: EvaluateAsExpression property viewable in the Properties window

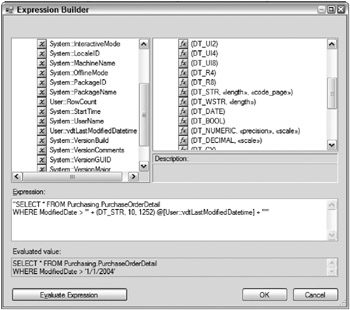

Since the release of SQL Server 2005 Service Pack 1, the expression can be entered into an expression editor, allowing the statement to be built with the SSIS expression functions. To invoke the Expression Builder dialog box, the Expression property, which is right below the EvaluateAsExpression property, should be selected. Figure 3-22 shows the editor with the expression that builds the SQL statement added.

Figure 3-22: Editor with the expression that builds the SQL statement

In this case, the expression updates the SQL statement by using the vdtLastModifiedDatetime value to update the string.

"SELECT * FROM Purchasing.PurchaseOrderDetail WHERE ModifiedDate > '" + (DT_STR, 10, 1252) @[User::vdtLastModifiedDatetime] + "'" The expression editor also allows the expression to be evaluated for accuracy and functionality.

One drawback of the EvaluateAsExpression feature of variables is that the property is difficult to find. Therefore, you may want to use a Script Task to manually update the value of the variable. To do this, be sure that the Script Task has the vdtLastModifiedDatetime entered in the ReadOnlyVariables list and the vsExtractionSQL in the ReadWriteVariables list. The script code that would be entered under the Public Sub Main() would look like this:

Dts.Variables("vsExtractionSQL").Value = _ "SELECT * FROM Purchasing.PurchaseOrderDetail WHERE ModifiedDate > '" _ + Dts.Variables("vdtLastModifiedDatetime").Value.ToString + "'" When put together, the control flow would include the Execute SQL Task, an optional Script Task, and a Data Flow Task, as the control flow in Figure 3-21 demonstrated earlier.

Capturing the Maximum Change Identifier Value

So far, when dealing with incremental extractions, you have seen how to dynamically update the source query based on the value of a variable, which was updated from the value stored in a control table. The final part of targeting database records is to capture the final change identifier value so that it can be used for the next extraction.

A couple choices exist to handle this. An Execute SQL Task can be used to run a SQL MAX operation on the destination table. This requires either a staging table or keeping the change identifier column in the dimension or fact tables. With large volumes, this approach may require some time and resource overhead if the number of rows required to scan is too large.

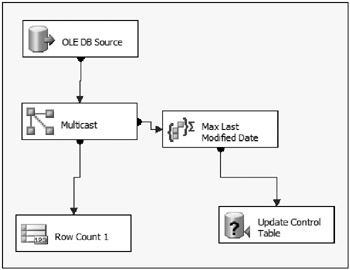

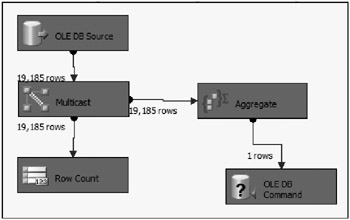

A better approach is to handle the operation right in the data flow. In other words, the Aggregate transformation can be used to capture the maximum value. But can the aggregate be done at the same time as the rest of the transformation process? Yes, with the use of a Multicast, the rows can be sent to an Aggregate, while at the same time, the rows are flowing through the rest of the data flow operations. Figure 3-23 shows a data flow with this configuration.

Figure 3-23: Handling the operation in the data flow

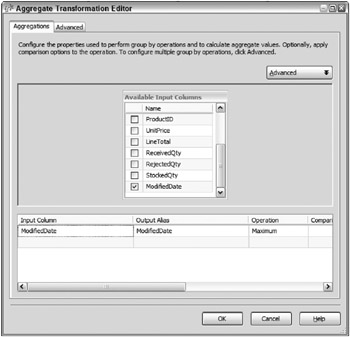

On the left side of the Multicast output, the rows are simply sent to a Row Count transformation. Once again, the Row Count transformation serves as a dummy destination for illustration purposes and, in reality, would be replaced by the actual data loading code. On the right side of the multicast, the rows are sent to an Aggregate transformation, configured to capture the maximum value of the ModifiedDate coming from the source. Figure 3-24 shows the Aggregate Transformation Editor.

Figure 3-24: Aggregate editor

Notice that there are no group-by columns in the Aggregate, which means that the maximum value of the ModifiedDate will be captured across the source data set. Because of this, only one row from the Aggregate will be sent downstream.

The next transformation used is an OLE DB Command transformation, which is updating the control table with the following statement:

UPDATE cfgIncrementalExtraction SET LastModifiedDatetime = ISNULL(?, LastModifiedDatetime) WHERE SourceTable = 'PurchaseOrderDetail' The parameter (?) is mapped to the single row Modified Date output of the aggregate. This update statement will only be executed when given the single-row Aggregate output. Also, it’s important to note that an ISNULL is used to trap for NULLs in the parameter. This is because the Aggregate will produce a single row NULL output even if there are no input rows from the source, since there are no group-by columns. This is expected, and without the ISNULL, the control table would be incorrectly updated with a NULL and affect the next run.

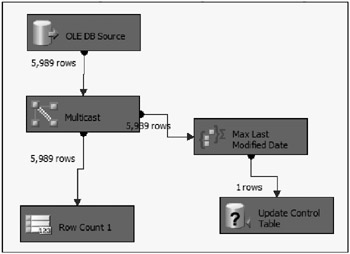

Figure 3-25 shows the data flow results when the extraction package is run in its entirety.

Figure 3-25: Data flow results

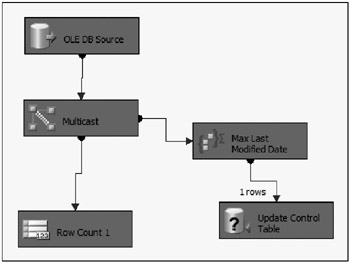

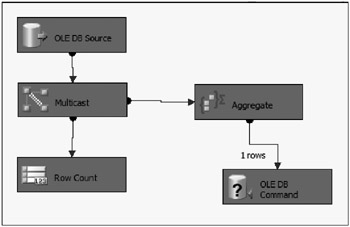

If the package is run again immediately, without any source rows being updated, then given the logic, the data flow results return what Figure 3-26 shows.

Figure 3-26: Data flow results after immediately running again

As expected, no rows were extracted, since the last run captured and tracked the maximum Modified Date, and there were, therefore, no rows left to pull.

Incremental Extraction from SQL Server Without a Trigger

Before looking at how to use SSIS to perform incremental extraction on text files and other sources (that is, sources that don’t contain a change identification value), one other option exists for table extractions from SQL Server.

If you’re using SQL Server as your source, then you may be able to perform an incremental extraction even though you don’t have an explicit column that helps identify changes. SQL Server provides a timestamp data type that is automatically updated when a row is added or updated, without requiring a trigger.

Although the timestamp data type sounds like it is related to a date time, it is actually a misnomer. In reality, it is a row version column, which creates an auto-incrementing binary value. And, any time a row is modified within the source (or added), that column value is updated automatically. In fact, this timestamp is used by SQL Server for internal row checking for things like replication. Each table can have only one timestamp column, and when you add it to the table, you are really just exposing it in the table for another use.

In a nutshell, the timestamp can be used in SSIS to perform incremental extraction. To leverage this, SSIS requires converting the binary column so that it is useable.

To demonstrate, the Sales.Customer AdventureWorks source table has been altered with a new timestamp column, using the following TSQL code:

USE AdventureWorks GO ALTER TABLE Sales.Customer ADD CustomerTimestamp timestamp NOT NULL In addition, a new row and column have been added to the control table. The new row contains the Sales.Customer source table column value, and a new varchar column has been added to capture the converted conversion number value of the binary timestamp value.

The difference between the datetime example reviewed earlier in this chapter and the timestamp being reviewed here is that the source query is also handling the conversion of the timestamp from binary to integer. Besides the normal columns being pulled, the SELECT statement includes the conversion and adjusted filter, with the following code:

SELECT *, CONVERT(BIGINT,CustomerTimestamp) as CustomerTimestamp_Int64 FROM sales.customer WHERE CONVERT(BIGINT,CustomerTimestamp) > ? Figure 3-27 shows the completed data flow for the first time that the process is run.

Figure 3-27: Completed data flow for the first time the process is run

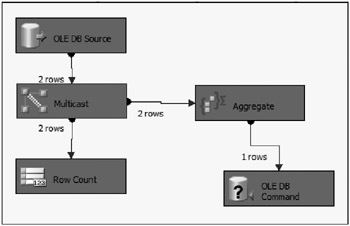

And, as expected, when this package is run immediately a second time, no rows are extracted, as Figure 3-28 shows.

Figure 3-28: No rows are extracted when the package is run a second time

Since a trigger already exists on the Sales.Customer table, to illustrate this timestamp use, the trigger should be dropped. Secondly, the following updates are next performed on the table:

USE AdventureWorks GO UPDATE Sales.Customer SET TerritoryID = 6 WHERE CustomerID = 13 UPDATE Sales.Customer SET CustomerType = 'I' WHERE CustomerID = 65 Then the package is run another time, with the data flow output shown in Figure 3-29.

Figure 3-29: Output of running the package again

The two updated rows have been sent out. As you can see, this gives you a nice way to handle incremental extraction from a SQL source without requiring application changes or a table trigger. Yes, it does require the addition of an 8-byte column in the source, but the incremental extraction benefits may outweigh the additional table width.

Using SSIS to Handle All Aspects of an Incremental Extraction

Ideally, every source would have a method to capture only the new and changed records (and even deletes). But unfortunately, not every source has this capability, especially when dealing with flat files as your source. Therefore, this section looks at how to handle flat file sources and database tables that do not have change identifier values.

Flat Files that Contain Change Identifier Values

It is very possible that you may have a source flat file that contains a LastModifiedDate or similar column in the file (and the file contains both changed and non-changed rows from the last extraction). However, because of the nature of flat files, the data flow source adapter cannot issue a query against the file.

This scenario can actually be handled very easily in SSIS, with the use of a Conditional Split transformation as the first step in the data flow. Since the vdtLastModifiedDate variable cannot be used in the source, what can be done instead is the Conditional Split can filter the rows immediately as they are pulled into the pipeline from the file. Figure 3-30 shows the modified data flow.

Figure 3-30: Modified data flow

The source adapter for the flat file is simply configured for the current structure of the delimited file. The Conditional Split checks the value of the Modified Date column for every row, and only allows rows through that are greater than the last incremental extraction value. Figure 3-31 shows the Conditional Split Transformation Editor.

Figure 3-31: Conditional Split editor

Rows that do not meet the criteria would usually go out the default output. But, as you can see in the data flow that Figure 3-31 shows, the default output (named non-changed) is not being used. Therefore, the non-changed rows are discarded by the pipeline.

Notice in the data flow that the next identifier value is captured using a Multicast and Aggregate just like the dynamic query examples in the previous section. Also, not shown is the control flow that uses the same approach as before with an Execute SQL Task to update the vdtLastModifiedDate variable.

Sources Without Change Identifier Values

Finally, you may have a situation where your source just doesn’t have a change identifier value at all. In these cases, you will either need to use the data flow to determine which records are changed, or land the entire source to a temporary table and rely on the database to compare the records.

Focusing on the data flow approach, to handle this scenario, the source needs to be correlated with the destination, and based on the comparison, records need to be identified as new or changed and, in some cases, deleted.

| Important | Even in prior examples where a change identifier column is available, it may not indicate whether a record is new or updated. Even in these cases, a comparison of source and destination is required. Chapter 5 delves into more detail on the compariso n between sources to identify changes. The review here is simply to identify whether the source record has a corresponding entry in the destination. |

A common theme throughout the book is how to do data correlation in the data flow to compare sources with destinations. The straightforward approaches are to use the Lookup or Merge Join transformations to perform the data association. The Lookup can identify matches with the output path and non-matches with the error row output. The Merge Join requires a Conditional Split to determine matches and non-matches.

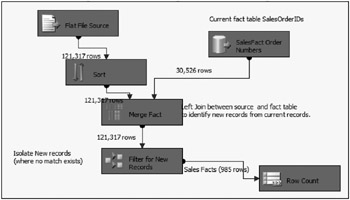

In the following example, a flat-file source contains sales data, but does not indicate whether the sale had previously been added to the destination table. To identify whether the sale had been added previously, the source data is merged with the destination table, which, in this case, is the sales fact table within AdventureWorksDW. Figure 3-32 shows the first several steps of the data flow that performs the data association and comparison.

Figure 3-32: Beginning the data flow that performs the data association and comparison

Before the Merge Join is used, the flat file source is sorted on the SalesOrderID, which is also contained in the fact table. On the right side of the Merge Join, an OLE DB source adapter is used, which pulls the existing SalesOrderID from the existing fact tables, pre-sorted with an Order By statement. The advanced editors of this adapter are configured to recognize the pre-sort. Details of how to accomplish this are discussed in Chapter 4. Figure 3-33 shows the Merge Join configured as a left outer join.

Figure 3-33: Merge Join editor

Since this example is attempting to identify new records to add to the destination, the left outer join allows both matches and non-matches from the source to the destination to flow through the transformation. The SalesOrderID from the destination is aliased as Fact_SalesOrderID to differentiate the value in the Conditional Split transformation. Figure 3-34 highlights the Conditional Split, which performs the filter of records by checking for NULLs in the Fact_SalesOrderID.

Figure 3-34: Conditional Split

If a NULL exists in the Fact_SalesOrderID, it means that the source record is new to the fact table, and, therefore, should be allowed through the transformation process to be added to the fact table. Otherwise, the record already exists, and, in this example, is ignored by the Conditional Split since the default output is not used.

This approach and similar approaches with the Lookup transformation allow incremental data extraction by using SSIS data flow components to filter out rows early in the process.

EAN: 2147483647

Pages: 111