| The RAID levels that use parity data utilize a very simple, elegant solution founded in the truth tables of our mathematical youth. You must remember truth tables? You had logic operators such as AND, OR, NOT, and so on. Just to jog your memory, Table 5-1 is the truth table for the AND operator:

Table 5-1. Truth Table for the AND Operator

| Data | Data | Result |
| --- | --- | --- |
| T | T | T |
| T | F | F |
| F | T | F |
| F | F | F |

The AND operator can be summarized by saying, "the result is True only when BOTH data elements are True". Compare this to the OR operator in Table 5-2:

Table 5-2. Truth Table for the OR Operator

| Data | Data | Result |
| --- | --- | --- |
| T | T | T |
| T | F | T |
| F | T | T |
| F | F | F |

The OR operator can be summarized as follows: "the result is True when ANY data element is True". This is only a precursor to the operator used in RAID 5. In RAID 5, we use the XOR (eXclusive OR) operator shown in Table 5-3:

Table 5-3. Truth Table for the XOR Operator

| Data | Data | Result |
| --- | --- | --- |
| T | T | F |
| T | F | T |
| F | T | T |
| F | F | F |

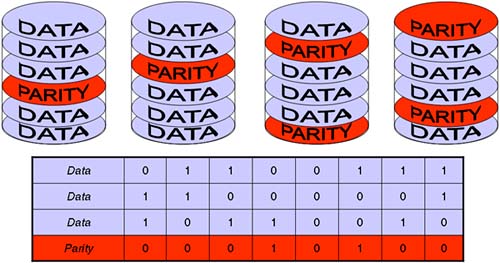
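As a quick check of Tables 5-1 to 5-3, the three truth tables can be generated rather than memorized. This is only a minimal sketch: the helper name `truth_table` is made up for illustration, and XOR on Booleans is expressed as "the two inputs differ".

```python
from itertools import product


def truth_table(op):
    """Return rows (a, b, op(a, b)) for every input pair, in T/T, T/F, F/T, F/F order."""
    return [(a, b, op(a, b)) for a, b in product([True, False], repeat=2)]


AND = truth_table(lambda a, b: a and b)
OR = truth_table(lambda a, b: a or b)
XOR = truth_table(lambda a, b: a != b)  # True when ONLY ONE input is True

for name, table in (("AND", AND), ("OR", OR), ("XOR", XOR)):
    print(name)
    for a, b, result in table:
        # Print each row using the same T/F notation as the tables
        print("  ", *("T" if value else "F" for value in (a, b, result)))
```

The rows come out in the same order as the tables, so the Result column can be compared line by line.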

The XOR operator can be summarized as follows: "the result is True when ONLY ONE of the data elements is True". This differs markedly from OR, where we can get a True result in two situations: when both data elements are True or when only one data element is True. This is not the case with XOR. With XOR we can get a True result from only one scenario: when ONLY ONE of the data elements is True. This simple mathematical wizardry is the basis of RAID 5.

Let's apply the XOR truth table to three data blocks (they're only 1 byte in size, but that is enough to demonstrate the idea). This equates to a RAID 5 set over four disks, i.e., 75 percent capacity utilization, with parity data spread over all four disks. I have included an Intermediate Result in order to show the workings of the XOR operation at each stage, although this is not technically necessary: in a multi-disk RAID 5 array, the truth table explanation of "True when ONLY ONE is True" can easily be expanded to say "True if AN ODD NUMBER of data elements are True", as shown in Table 5-4:

Table 5-4. RAID 5 Parity Calculation

| Block | | | | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Data | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 1 |
| Data | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |
| Intermediate Result | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0 |
| Data | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 0 |
| Parity | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 |
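The parity calculation in Table 5-4 can be reproduced in a few lines. This sketch takes the three data bytes as 01100111, 11000001, and 10110010; the function name `odd_ones_parity` is made up for illustration and simply spells out the "odd number of True elements" rule bit by bit.

```python
from functools import reduce
from operator import xor

# The three 1-byte data blocks used in the parity calculation table
data_blocks = [0b01100111, 0b11000001, 0b10110010]

# XOR of the first two blocks (the Intermediate Result row)
intermediate = data_blocks[0] ^ data_blocks[1]

# XOR of all three blocks (the Parity row)
parity = reduce(xor, data_blocks)


def odd_ones_parity(blocks, width=8):
    """Build parity bit by bit: a bit is 1 when an odd number of blocks have a 1 there."""
    result = 0
    for bit in range(width):
        ones = sum((block >> bit) & 1 for block in blocks)
        if ones % 2 == 1:
            result |= 1 << bit
    return result


print(f"intermediate = {intermediate:08b}")
print(f"parity       = {parity:08b}")
print(f"odd-ones     = {odd_ones_parity(data_blocks):08b}")
```

Chaining XOR pairwise and counting ones per bit position give the same parity byte, which is why the intermediate step is optional.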

This calculation is maintained for each block in the stripe set, with the parity data being distributed over all disks. The advantage of RAID 5 over RAID 3 or 4 is that whenever we hit the read-modify-write bottleneck, the parity IO is spread over all spindles, unlike RAID 3 or 4, where a single parity disk becomes the bottleneck. On disk, it would look something like Figure 5-1.

Figure 5-1. XOR Parity data in RAID 5.

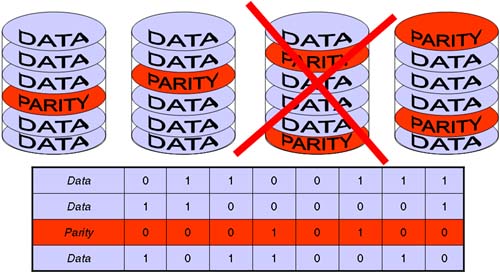
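To make the idea of distributed parity concrete, here is a rough sketch of one possible placement scheme. The rotation rule in `parity_disk` is an assumption chosen for illustration; real arrays use various layouts, and the one in Figure 5-1 may differ. The names `parity_disk` and `layout` are hypothetical.

```python
def parity_disk(stripe, num_disks):
    """Disk index holding the parity block for a stripe (assumed rotation rule)."""
    return (num_disks - 1 - stripe) % num_disks


def layout(num_stripes, num_disks):
    """Return one row per stripe; 'P' marks parity, 'Dn' marks data block n."""
    rows = []
    for stripe in range(num_stripes):
        p = parity_disk(stripe, num_disks)
        row = []
        block = 0  # position of the next data block within this stripe
        for disk in range(num_disks):
            if disk == p:
                row.append("P")
            else:
                row.append(f"D{stripe * (num_disks - 1) + block}")
                block += 1
        rows.append(row)
    return rows


# Four disks, four stripes: each disk ends up holding exactly one parity block
for row in layout(4, 4):
    print("  ".join(f"{cell:>3}" for cell in row))
```

Because the parity position moves from stripe to stripe, parity updates for different stripes land on different spindles instead of queueing on one dedicated parity disk.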

Should we lose a disk due to a failure, RAID 5 dictates that we can reconstitute the data from the existing data and the parity information. If we recall the beauty and elegance of the XOR operator, we recall "the result is True when ONLY ONE of the data elements is True". The net result is that we can simply reapply the XOR operator to the existing data and the parity information, which SHOULD reconstitute the original data block(s) (Figure 5-2).

Figure 5-2. Using Parity to rebuild data.
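The rebuild step can be sketched as follows, reusing three 1-byte data blocks (0x67, 0xC1, 0xB2) for a four-disk set. The helper `xor_blocks` is a made-up name; it XORs equal-length blocks byte by byte, so the same code works for blocks of any size.

```python
from functools import reduce
from operator import xor


def xor_blocks(blocks):
    """XOR equal-length byte strings together, byte by byte."""
    return bytes(reduce(xor, column) for column in zip(*blocks))


# Three data blocks of one stripe, and the parity block computed from them
stripe = [b"\x67", b"\xc1", b"\xb2"]
parity = xor_blocks(stripe)

# Simulate losing the disk that held the second data block
lost_index = 1
survivors = [block for i, block in enumerate(stripe) if i != lost_index]

# XOR of the surviving data blocks and the parity block gives back the lost block
rebuilt = xor_blocks(survivors + [parity])
print(rebuilt == stripe[lost_index])
```

This works because XORing a value with itself cancels out: every surviving block appears once in the parity and once in the rebuild, leaving only the missing block.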

As you can see, we can sustain the loss of a single disk without affecting access to the existing data. Normally a RAID array would have a hot-spare disk waiting for such a failure. The array would rebuild the data on the fly, and users can carry on using the data immediately because the array can easily rebuild a lost data block should the user require it. Modifications to lost data blocks are allowed, as all we need is the new data block and the existing data blocks in order to recalculate the new parity block.

These calculations need to happen in real time so as not to affect the overall performance of the disk subsystem. Consequently, it is desirable to perform parity-based RAID levels via a hardware solution. Software implementations of RAID 5 are available but, as with all software RAID solutions, they will be contending for system CPU cycles along with the IO subsystem. In most scenarios, a hardware solution will outperform a JBOD with software RAID.

Hardware solutions for RAID need to be well thought out, as problems like the read-modify-write performance penalty cannot be avoided completely. The biggest IO bottlenecks for RAID involve any solution that uses parity data. If the underlying write IO size does not line up with the full stripe width (the stripe unit size multiplied by the number of data disks), the read-modify-write performance penalty can be considerable. Most high-performance RAID arrays will implement large cache memory subsystems in order to hold frequently accessed data in RAM. Allied with high availability power supplies, redundant data paths, and battery backup, data access performance is maximized through the use of high-speed RAM, with the RAID array offloading real IO to disk when it sees fit.

Some RAID arrays use RAID 5DP (DP=Double Parity) to allow for up to two disk failures per RAID set. In some instances, they will use a slightly different algorithm for the second parity block, although there is nothing to stop them from using the same simple XOR operator. |
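The two parity updates mentioned above can be sketched with single-byte blocks. The function names `update_parity` and `degraded_write_parity` are hypothetical, and the byte values are arbitrary. The first function is the usual read-modify-write shortcut for a small write; the second recalculates parity when the block being written lives on the failed disk.

```python
from functools import reduce
from operator import xor


def update_parity(old_parity, old_data, new_data):
    """Read-modify-write: derive new parity from old parity, old data, and new data."""
    return old_parity ^ old_data ^ new_data


def degraded_write_parity(new_data, surviving_data):
    """Write to a lost block: new parity is the new data XORed with the surviving data."""
    return reduce(xor, surviving_data, new_data)


# One stripe of three data blocks on a four-disk set, plus its parity
data = [0x67, 0xC1, 0xB2]
parity = reduce(xor, data)

# Small write to the second block: read old data and old parity, write both back
new_block = 0x5A
rmw_parity = update_parity(parity, data[1], new_block)

# Same write, but assuming the disk holding the second block has failed
degraded_parity = degraded_write_parity(new_block, [data[0], data[2]])

data[1] = new_block
full_parity = reduce(xor, data)  # parity recalculated from the whole stripe
print(rmw_parity == full_parity, degraded_parity == full_parity)
```

The read-modify-write path costs two reads and two writes for a single logical write, which is where the performance penalty comes from; a write that covers the whole stripe can compute parity from the new data alone.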