Linux Kernel Architecture

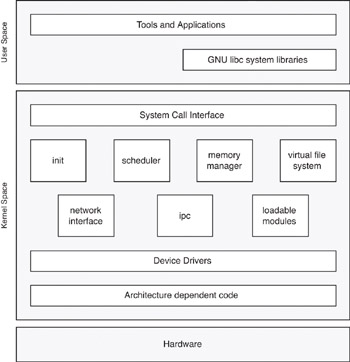

The GNU/Linux operating system has a layered architecture. The Linux kernel is monolithic and also layered, but with fewer restrictions (dependencies can exist between noncontiguous layers). Figure 2.2 provides one perspective of the GNU/Linux operating system with emphasis on the Linux kernel.

Figure 2.2: GNU/Linux operating system architecture.

Note here that the operating system has been split into two software sections. At the top is the user space (where we find the tools and applications as well as the GNU C library), and at the bottom is the kernel space, where we find the various kernel components. This division also represents address space differences that are important to note. Each process in user space has its own independent memory region that, by default, is not shared with other processes. The kernel operates in its own address space, but all elements of the kernel share that space. Therefore, if a component of the kernel makes a bad memory reference, the entire kernel can crash (also known as a kernel panic). Finally, the hardware element at the bottom operates in the physical address space (which is mapped to virtual addresses in the kernel).

Let's now look at each of the elements of the Linux kernel to identify what they do and what capabilities they provide to us as application developers.

| Note | GNU/Linux is primarily a monolithic operating system in that the kernel is a single entity. This differs from microkernel operating systems, which run a tiny kernel with separate processes (usually running outside of the kernel) providing capabilities such as networking, filesystems, and memory management. Many microkernel operating systems exist today, including CMU's Mach, Apple's Darwin, Minix, BeOS, NeXT, QNX/Neutrino, and many others. Which approach is better is hotly debated, but microkernel architectures have shown themselves to be dynamic and flexible. In fact, GNU/Linux has adopted some microkernel-like features with its loadable kernel module feature. |

GNU System Libraries (glibc)

The glibc is a portable library that implements the standard C library functions, including the top half of system calls. An application links with the GNU C library to access common functions as well as the services of the Linux kernel. The glibc implements a number of interfaces that are declared in header files. For example, the stdio.h header file declares many standard I/O functions (such as fopen and printf) and also the standard streams that all processes are given (stdin, stdout, and stderr).

When building applications, GCC automatically links against the GNU C library (where possible), and references to libc symbols are resolved at runtime by dynamically linking the libc shared object.

| Note | In embedded systems development, use of the standard C library can sometimes be problematic. GCC permits disabling the automatic linking against the standard C library through the -nostdlib option. This permits developers to replace the functions they used from the standard C library with their own versions. |

When a system call is made, a special set of actions occurs to transfer control between the user space (where the application runs) and the kernel space (where the system call is implemented).

System Call Interface

When an application calls a function like fopen, the C library ultimately invokes a privileged system call (in this case, open) that is implemented in the kernel. The standard C library (glibc) provides the hook to go from the user-space call to the kernel, where the function is provided. Since this is a useful element to know, let's dig into it further.

A typical system call is issued in user space through a small wrapper (historically, a macro). The arguments for the system call are loaded into registers, and a system trap (a software interrupt) is performed. This trap causes control to pass from user space to kernel space, where the actual system call is dispatched through a table called sys_call_table.

Once the call has been performed in the kernel, the return to user space is handled by a function called _ret_from_sys_call. The saved registers are restored so that the user-space stack frame is correct when the application resumes.

In cases where arguments are more than scalar values (such as pointers to buffers), the kernel copies the data between user space and kernel space rather than dereferencing user pointers directly (using helpers such as copy_from_user and copy_to_user).

| Note | The source code for many of the system calls can be found in the kernel source at ./linux/kernel/sys.c; others are implemented alongside the subsystems they serve. |

Kernel Components

The kernel mediates access to system resources (such as interfaces, the CPU, and so on). It also enforces the security of the system and protects users from one another. The kernel is made up of a number of major components, which we'll discuss here.

init

The init component runs when the Linux kernel boots. It provides the primary entry point for the kernel in a function called start_kernel. Much of this initialization is architecture dependent, because different processor architectures have different initialization requirements, so start_kernel calls into architecture-specific setup code. The init component also parses and acts upon any options that are passed to the kernel on its command line.

After performing hardware and kernel component initialization, the init component opens the initial console (/dev/console) and starts the init process. This process is the mother of all processes within GNU/Linux and has no parent (unlike all other processes, which have a parent process). Once init has started, control over the rest of system initialization passes to user space, outside of the kernel proper.

| Note | The kernel init component can be found in linux/init in the Linux kernel source distribution. |

Process Scheduler

The Linux kernel provides a preemptive scheduler to manage the processes running in a system. This means that the scheduler permits a process to execute for some duration (an epoch, or time slice), and if the process has not given up the CPU by then (for example, by making a blocking system call or calling a function that waits for some resource), the scheduler temporarily halts the process and schedules another one.

The scheduler can be controlled, for example by changing process priority or the scheduling policy (such as FIFO or Round-Robin scheduling). The time quantum (or epoch) assigned to processes for their execution can also be manipulated. Scheduling timeouts are measured in jiffies, counted by a kernel variable called jiffies. A jiffy is one tick of the kernel's periodic timer interrupt; its length is 1/HZ seconds, where HZ is a constant fixed when the kernel is configured (commonly 100, 250, or 1000).

| Note | The source for the scheduler (and other core kernel modules such as process control and kernel module support) can be found in linux/kernel in the Linux kernel source distribution. |

Memory Manager

The memory manager within Linux is one of the most important core parts of the kernel. It provides physical-to-virtual memory mapping functions (and vice versa) as well as paging and swapping to disk. Since the memory management aspects of Linux are processor dependent, the memory manager works with architecture-dependent code to access the machine's physical memory.

The kernel maintains its own virtual address space, and each process in user space has its own private virtual address space.

The memory manager also provides a swap daemon (kswapd) that supports demand paging by reclaiming pages according to an approximation of a least-recently-used (LRU) replacement policy.

| Note | The memory manager component can be found in linux/mm of the Linux kernel source distribution. |

Elements of user-space memory management are discussed in Chapter 17, Shared Memory Programming, and Chapter 18, Other Application Development Topics, of this book.

Virtual File System

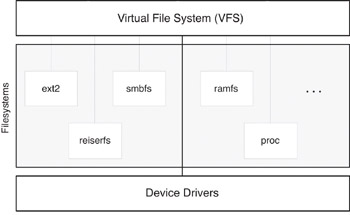

The Virtual File System (VFS) is an abstract layer within the Linux kernel that presents a common view of differing filesystems to upper-layer software. Linux supports a large number of individual filesystems, such as ext2, Minix, NFS, and Reiser. Rather than present each of these as a unique filesystem, Linux provides a layer into which filesystems plug their implementations of common functions (such as open, close, read, write, select, and so on). Therefore, if we needed to open a file on a Reiser journaling filesystem, we would use the same open function as on any other filesystem.

The VFS also interfaces with the device drivers to mediate how data is written to the media. This abstraction is also useful because it doesn't matter what kind of hard disk (or other media) is present; the VFS presents a common view and therefore simplifies the development of new filesystems. Figure 2.3 illustrates this concept. In fact, multiple filesystems can be present (mounted) simultaneously.

Figure 2.3: Abstraction provided by the Virtual File System.

| Note | The Virtual File System component can be found in linux/fs in the Linux kernel source distribution. Also present there are a number of subdirectories representing the individual filesystems. For example, linux/fs/ext3 provides the source for the third extended filesystem. |

GNU/Linux provides a variety of filesystems, each with characteristics that suit different scenarios. For example, XFS is very good for streaming very large files (such as audio and video), and Reiser is good at handling large numbers of very small files (<1KB). Filesystem characteristics influence performance, so it's important to select the filesystem that makes the most sense for your particular application.

The topic of file I/O is discussed in Chapter 10 of this book, File Handling in GNU/Linux.

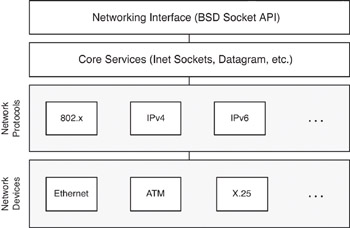

Network Interface

The Linux network interface offers a very similar architecture to what we saw with the VFS. The network interface component is made up of three layers that work to abstract the details of networking to higher layers, while presenting a common interface regardless of the underlying protocol or physical medium (see Figure 2.4).

Common interfaces are presented to network protocols and network devices so that the protocol and physical device can be interchanged based upon the actual configuration of the system. As with the VFS, flexibility was a key design goal.

The network component also provides packet scheduler services for quality of service requirements.

Figure 2.4: Network subsystem hierarchy.

| Note | The network interface component can be found in linux/net of the Linux kernel source distribution. |

The topic of network programming using the BSD Sockets API is discussed in Chapter 10 of this book.

Inter-Process Communication (IPC)

The IPC component provides the standard System V IPC facilities: semaphores, message queues, and shared memory. Like the VFS and the network component, the IPC elements all share a common interface.

| Note | The IPC component can be found in linux/ipc of the Linux kernel source distribution. |

The topic of IPC is detailed within this book. In Chapter 16, Synchronization with Semaphores, semaphores and the semaphore API are discussed. Chapter 15, IPC with Message Queues, discusses the message queue API, and Chapter 17 details the shared memory API.

Loadable Modules

Loadable kernel modules are an important element of GNU/Linux, as they provide the means to change the kernel dynamically. The footprint of the kernel can therefore be very small, with required modules loaded dynamically as needed. Beyond new drivers, the kernel module component can also be used to extend the Linux kernel with new functionality.

Linux kernel modules are specially compiled with functions for module initialization and cleanup. When a module is installed into the kernel (using the insmod tool), the necessary symbols are resolved at runtime in the kernel's address space and the module is connected to the running kernel. Modules can also be removed from the kernel using the rmmod tool (and listed using the lsmod tool).

| Note | The source for the kernel side of loadable modules is provided in linux/kernel in the Linux kernel source distribution. |

Device Drivers

The device drivers component provides the plethora of device drivers that are available. In fact, almost half of the Linux kernel source files are devoted to device drivers. This isn't surprising, given the large number of hardware devices out there, but it gives a good indication of how much hardware Linux supports.

| Note | The source code for the device drivers is provided in linux/drivers in the Linux kernel source distribution. |

Architecture-Dependent Code

At the lowest layer in the kernel stack is architecture-dependent code. Given the variety of hardware platforms that are supported by the Linux kernel, source for the architecture families and processors can be found here. Within the architecture family are common boot support files and other elements that are specific to the given processor family (for instance, hardware interfaces such as DMA, memory interfaces for MMU setup, interrupt handling, and so on). The architecture code also provides board-specific source for popular board vendors.

Hardware

While not part of the Linux kernel, the hardware element (shown at the bottom of our original high-level view of GNU/Linux, Figure 2.1) is important to discuss given the number of processors and processor families that are supported. Today we can find Linux kernels that run on single-address-space architectures (without an MMU) as well as on those that support an MMU. Supported processor families include ARM, PowerPC, Intel x86 (including AMD, Cyrix, and VIA variants), MIPS, Motorola 68K, SPARC, and many others. The Linux kernel can also be found on Microsoft's Xbox (Pentium III) and Sega's Dreamcast (Hitachi SuperH). The kernel source provides information on these supported platforms.

| Note | The source for the processor and vendor board variants is provided in linux/arch in the Linux kernel source distribution. |
