Adding Detail and Process Layers


The interview process is the most efficient method for reviewing, correcting, and enriching the test inventory and building the system-level process flows. The information gathered during the interviews is used to correct and refine the test inventory and to identify data dependencies and cross-project/intersystem dependencies.

The product of the interview process is a mature, prioritized test inventory that encompasses the entire system and includes the expert input of all the participants. The test inventory and its prioritized test items are used to build cost, sizing, and scheduling estimates during the planning phases. During the test effort, the test inventory becomes the test repository and test metrics database.

| Note | The number of possible combinations of hardware and software, users, and configurations often is larger than all other tests combined. |

I have included the interview section here because it really is an integral part of building consensus and the agreement to test, along with determining the scope of the test effort. I use it to open management's eyes as to the size and complexity of what they are undertaking, as well as to educate myself and my team about what the project is and is not. The interview process or some other form of review needs to be conducted on the inventory before the analysis phases, which are discussed in Chapters 11, 12, and 13. Otherwise, your analysis will likely be a waste of time.

In addition to the project's coding requirements, it might also be appropriate for you to establish the environments that the system will run in during the interview process. However, I don't advise doing so during the interview if the number of environmental dependencies is extremely large or a political issue. I don't want the interviewee to become distracted from the core questions about his or her project.

Let's discuss two ways to approach this interview process: the traditional face-to-face interview approach and the modern Web-based collaborative approach. The preparation and materials are almost the same for both approaches. The difference is in the way we gather the information.

The tried-and-true method using the interview process is described in the paragraphs that follow. The automated alternative is to prepare the materials electronically and place them in a high-function Web site. In this scenario, everyone is responsible for filling out the questionnaire as an electronic form, and you capture their responses in a database. I have tried for three years to use a Web site for this purpose and have only recently had any positive results that were actually preferable to the interview process. (I will talk about this project also.)

Whichever method you choose, get support from upper management early in the process. If you don't, you will be pushing a big snowball uphill all by yourself. At any time it could overwhelm and crush you. Be especially cautious if this interview process is something new in your culture.

If you opt for the interview approach, you will find instructions on how to perform the interviews in the following section, The Interviews: What and How to Prepare. Feel free to alter the process to fit your needs. Just keep your goals clearly stated and clearly in view.

The Interviews: What and How to Prepare

In the ideal case, I plan two levels of interviews for the system test planning effort: high-level interviews (duration: 15 to 30 minutes) and mid-level interviews (duration: 30 to 60 minutes). Interviewees are solicited from each of the project areas: IT, support, and system groups. Management may decide to go with just one set of interviews; I leave the choice to them. The trick is to do the best you can with what they give you.

Why Two Sets of Interviews?

The people I need to see are the SMEs. So why talk to the managers? The answer is, "because it is polite." I always offer management the choice and the opportunity to control the situation. That's what they are supposed to do, right?

Bona fide subject matter experts are few in number, expensive, and generally grossly overbooked. Development managers are especially protective of their wizards. In a bureaucratic shop, or worse, in a politically polarized situation, you will probably derail your effort if you try to go right to the SMEs. If you rely only on your personal charm, you will get only a subset of the SMEs into your interviews, and you could get into trouble. If you can't get management support, then by all means try the direct approach, but be careful.

One of the values that I add to a test project when I come in as a consultant is that I am "expendable." That's part of the reason that my consulting rates go so high. I am sacrificed on a fairly regular basis. This may sound odd, but let me explain.

In a big project, there are lots of politics. They usually have to do with budget and deadlines (usually impossible to meet). Or worse, they involve projects that have already gone bad and someone is trying to put a good face on the failure. I am often charged with detecting such situations and failures.

The normal tester who works for this company doesn't have the clout, the budget, or the perspective to get through the opposition that a development manager/director or even vice president can dish out. And the tester shouldn't even try. Hence, one of my best uses as a contractor is to "test the water."

When I walk into a planning meeting with development and lay out this "best-practice" methodology for testing the system, it is likely that not everyone is delighted about it. Typically, there are many reasons for the participants to disagree with what I have to say. These reasons are usually related to budget, but they can also be about impossible delivery dates or the ambition of employees who want to be recognized as "experts."

Asking regular employees to risk their pensions in an undertaking of this type is not fair or right. A shrewd manager or director (usually a vice president) knows a lot about these intrigues, but that person will always need to find out exactly what is going on "this time." So we engage in a scenario that is a lot like staking the virgin out in the wasteland and watching to see who comes to dinner.

I am normally sacrificed to an ambush in a routine meeting somewhere just before the actual code delivery date. And when the dust and righteous indignation settle, all the regular employees are still alive and undamaged. My vice president knows who his or her enemies are. And the developers have a false sense of security about the entire situation. Meanwhile, the now dead contractor (me) collects her pay and goes home to play with her horses and recuperate.

For those of you who are regular employees and testers, I hope this information helps you avoid a trap of this type. In any case, don't try these heroics yourself, unless you want to become a contractor/writer. But do keep these observations in mind, as they can be helpful.

Prepare an overview with time frame and goals statements, like the one listed under the Sample Memo to Describe the Interview Process heading in Appendix C, "Test Collateral Samples and Templates." Also prepare your SME questionnaire (see the Sample Project Inventory and Test Questionnaire for the Interviews in Appendix C), and send both to upper management. Get support from management as high up as you can. Depending on your position, your manager may need to push it up on your behalf. Just remind her or him that it is in the manager's best interest, too. In a good scenario, you will get upper management's support before you circulate it to the department heads and all the participants. In the ideal scenario, upper management will circulate it for you. This ensures that everyone understands that you have support and that they will cooperate. Here are my general outlines for the middleweight to heavyweight integration effort in the real-world example.

My Outline for High-Level Interviews

I plan for high-level interviews to take 15 to 30 minutes. (See the sample in Appendix C.) This is just a sample. Your own needs may differ greatly, but make sure you have a clear goal.

My outline for this type of interview is as follows:

GOALS

- Identify (for this expert's area):
  - The project deliverables
  - Owners of deliverables (mid-level interviewees)
  - Project dependencies and run requirements
    - Interproject
    - Cross-domain functions/environment
    - Database and shared files
    - Business partners' projects
  - System and environment requirements and dependencies
  - The location of, and access to, the most recent documentation
- Get management's opinion on the following:
  - Ranking priorities (at the project level)
  - Schedules
    - Delivery
    - Dependencies
  - Testing

This next section was added after the preliminary inventory was built. These items were added based on what we learned during the preliminary interviews and the meetings that followed. I talk about the day-in-the-life scenarios later in this chapter.

- Go through the day-in-the-life scenarios to understand and document, answering the following questions:
  - Where do the new projects fit? (If they don't fit, identify the missing scenarios.)
  - How do the systems fit together; how does the logic flow?
  - Which steps/systems have not changed and what dependencies exist?

My Outline for Mid-Level Interviews

I plan for mid-level interviews to take from 30 to 60 minutes. (See the sample in Appendix C and the section Example Questions from the Real-World Example, which follows.) Your own needs may differ greatly from what's shown in the sample, but make sure you have a clear goal. Be sure to ask the developer what type of presentation materials he or she prefers, and have them on hand. See my discussion of the "magic" questions in the Example Questions from the Real-World Example section, coming up.

My outline for this type of interview is as follows:

GOALS

- Find out everything possible about the project: what it does, how it is built, what it interoperates with, what it depends on, and so on. If appropriate, build and review the following:
  - The logic flows for the projects and systems
  - The test inventory
    - Enumerate and rank additional test items and test steps in the test inventory
    - Data requirements and dependencies
    - All systems touched by the project
- Get answers to the following questions (as they apply):
  - What will you or have you tested?
  - How long did it take?
  - How many testers did you need?
  - What do you think the test group needs to test?
- Identify additional test sequences.
- Identify requirements for test tools.
- Establish the environment inventory and dependencies, if appropriate.

Example Questions from the Real-World Example

From the real-world example, here are the questions that I asked in the interview questionnaire:

- Are these your PDRs? Are there any missing or extra from/on this list?
- Is the project description correct?
- Which test area is responsible for testing your code and modules before you turn them over to integration test?
- What is the priority of this project in the overall integration effort based on the risk of failure criteria?
- Who are the IT contacts for each project?
- What are your dependencies?
- In addition to integrating this code into the new system, what other types of testing are requested/appropriate:
  - Function
  - Load
  - Performance
  - Data validation
  - Other
  - No test
  - System test
- What databases are impacted by this project?
- What else do we testers need to know?
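If you capture the answers electronically, one record per project keeps the questionnaire and the inventory in step. What follows is only a minimal sketch of such a record, not the form used in the real-world example: the class names, field names, and the `PDR-101` identifier are all hypothetical, and the list of test types simply mirrors the choices in the question above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Set


class RequestedTest(Enum):
    """Test types offered as choices in the questionnaire."""
    FUNCTION = "Function"
    LOAD = "Load"
    PERFORMANCE = "Performance"
    DATA_VALIDATION = "Data validation"
    OTHER = "Other"
    NO_TEST = "No test"
    SYSTEM_TEST = "System test"


@dataclass
class Response:
    """One interviewee's answers for one project (hypothetical layout)."""
    pdr: str                                   # hypothetical PDR identifier
    description_correct: Optional[bool] = None
    priority: Optional[int] = None             # as stated by the interviewee
    it_contacts: List[str] = field(default_factory=list)
    dependencies: List[str] = field(default_factory=list)
    test_types: Set[RequestedTest] = field(default_factory=set)
    databases: List[str] = field(default_factory=list)
    notes: str = ""                            # "What else do we testers need to know?"

    def unanswered(self) -> List[str]:
        """Names of questions still blank, for a follow-up by email."""
        blanks = []
        if self.description_correct is None:
            blanks.append("description_correct")
        if self.priority is None:
            blanks.append("priority")
        if not self.it_contacts:
            blanks.append("it_contacts")
        if not self.test_types:
            blanks.append("test_types")
        return blanks


response = Response(pdr="PDR-101", priority=1,
                    test_types={RequestedTest.LOAD})
follow_up = response.unanswered()
```

Here `follow_up` names the two questions that still need an answer (`description_correct` and `it_contacts`), which is the kind of list you might send back to an interviewee after the meeting.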

Conducting the Interviews

Schedule your interviews as soon after the statement is circulated as possible; strike while the iron is hot. Leave plenty of time between the individual interviews. There are a couple of reasons for this. One is to make sure that you have time to debrief your team and record all your observations. Another is that you don't want to rush away from an expert who has more to tell you.

Prepare a brief but thorough overview of the purpose of the interview and what you hope to accomplish. I use it as a cover sheet for the questionnaire document. Send one to each attendee when you book the interview, and have plenty of printouts of it with you when you arrive at the interview so everybody gets one. Email attachments are wonderful for this purpose.

Be flexible, if you can, about how many people attend the interview. Some managers want all their SMEs at the initial meeting with them so introductions are made once and expectations are set for everyone at the same time. Other managers want to interview you before they decide if they want you talking to their SMEs. Every situation is different. The goal is to get information that will help everyone succeed. So, let the interviewees know that this process will make their jobs easier, too.

Keep careful records of who participates and who puts you off. If a management-level interviewee shunts you to someone else or refuses to participate at all, be sure to keep records of it. It has been my experience that the managers who send me directly to their developers are usually cutting right to the chase and trying to help jump-start my effort by maximizing my time with the SMEs. The ones that avoid me altogether are usually running scared and have a lot to hide. For now, just document it and move forward where you can.

How to Conduct the Interviews

Be sure that you arrive a little early for the interview and are ready to go so that they know you are serious. Hopefully, the interviewees have taken a look at the questionnaire before the meeting, but don't be insulted if they have not. In all my years of conducting this type of interview, I have only had it happen twice that I arrived to find the questionnaire already filled out. However, they usually do read the cover sheet with the purpose and goals statement. So make it worth their while. If you can capture their self-interest in the cover sheet, you have a much better chance of getting lots of good information from them. They will have had time to think about it, and you will need to do less talking, so you can do more listening.

Print out the entire inventory with all current information, sorted by priority/delivery and so on, and take it with you so you can look up other projects as well. Take a copy of the inventory that you have sorted so that the items relevant to the particular interview are at the top.

Give all attendees a copy of each of these materials, and have a dedicated scribe on hand, if possible.

Instructions for Interviewers

My short set of instructions to interviewers is as follows:

- Be quick. Ask your questions 1, 2, 3, with no chitchat. Set a time limit and be finished with your questions on time.

  Finish your part on time but be prepared to spend as much extra time as the interviewee wants. Go through each question in order and write down the answers.

- Listen for answers to questions that you didn't ask. Often, the developers have things that they want to tell you. Give them the chance. These things are usually very important, and you need to be open to hear them. I will give you some examples later in this section.

- Follow up afterward by sending each participant a completed inventory. Close the loop, and give them the chance to review what you wrote. Confirm that you did hear them and that what they told you was important. Sometimes they have something to add, and they need to have the opportunity to review and correct as well.

  Another important reason for doing this is that often developers look at what other developers said and find things that they didn't know. Many integration issues and code conflicts are detected in this way. It is much cheaper to fix these problems before the code moves from development to test.

- Let them hash out any discrepancies; don't propose solutions to their problems.

In the real-world example, interviews were scheduled with the directors of each development area. The directors were asked to name the developers from their areas who would participate in the second level of interviews. Some directors were pleased with the process; some were not. Only one of the seven directors refused to participate in the interview process. Two of the directors had their development leads participate in both sets of interviews. Operations, customer support, and several of the business partners also asked to take part in the interview process. Including these groups not only helped enrich the information and test requirements of the inventory, it also gained allies and extra SME testers for the integration test effort.

An interview was scheduled to take no longer than 20 minutes, unless development wanted to spend more time. We were able to hold to this time line. It turned out that several developers wanted more time and asked for a follow-up meeting. Follow-up interviews were to be scheduled with the development managers after the results of the preliminary interviews were analyzed.

Analyzing the Results: Lessons Learned

Refer back to Table 7.2, which, you'll recall, incorporates the notes from the interviews into the preliminary inventory from Table 7.1. This inventory is sorted first by contact and then by priority. Notice that under the Priority column, "P," several of the initial priorities have been changed radically. In this project it turned out to be a profound mistake for the testers to estimate the relative priority of the projects based on a project's documentation and budget. Not only were the estimates very far from the mark established by development, but the test group was soundly criticized by the developers for even attempting to assign priorities to their projects.

The lesson learned in this case was this: be prepared, be thorough, but don't guess. We learned that lesson when we guessed at the priorities, but learning it early saved us when we came to the next item. Notice the new column, TS (that is, Test Order). I will talk about it in detail in the next section.

The next thing to notice in the table is the number of dependencies. One of the unexpected things we learned was that when the dependencies were listed out in this way, it became clear that some of the SMEs were really overloaded between their advisory roles and their actual development projects.

And, finally, the columns with the X's are impacted systems. This is the environmental description for this project. Each column represents a separate system in the data center. The in-depth descriptions of each system were available in the operations data repository.
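If the inventory lives in a spreadsheet or database rather than on paper, the two observations above (the sort order and the overloaded SMEs) are easy to reproduce. The following is a minimal sketch under stated assumptions: the `InventoryItem` fields, the PDR identifiers, the contact names, and the way load is counted (one point per owned project plus one per project owned by someone else that depends on yours) are all hypothetical and are not taken from Table 7.2.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class InventoryItem:
    pdr: str                       # hypothetical PDR identifier
    contact: str                   # SME or IT contact for the project
    priority: int                  # 1 = highest, as supplied by development
    depends_on: List[str] = field(default_factory=list)
    impacted_systems: Set[str] = field(default_factory=set)  # the "X" columns


def sort_inventory(items):
    """Sort first by contact and then by priority."""
    return sorted(items, key=lambda item: (item.contact, item.priority))


def contact_load(items):
    """Count owned projects plus other people's projects that depend on them."""
    owner = {item.pdr: item.contact for item in items}
    load = Counter(item.contact for item in items)       # development work
    for item in items:
        for dep in item.depends_on:
            dep_owner = owner.get(dep)
            if dep_owner is not None and dep_owner != item.contact:
                load[dep_owner] += 1                      # advisory demand
    return load


inventory = [
    InventoryItem("PDR-103", "Lee", 2, ["PDR-101"], {"billing"}),
    InventoryItem("PDR-101", "Cho", 1, [], {"billing", "orders"}),
    InventoryItem("PDR-102", "Lee", 1, ["PDR-101"], {"orders"}),
    InventoryItem("PDR-104", "Lee", 3, ["PDR-102", "PDR-103"], {"web"}),
]

ordered = sort_inventory(inventory)
load = contact_load(inventory)
```

In this made-up data, Cho owns only one project but ends up with the same load as Lee, because two of Lee's projects depend on Cho's. That is the kind of hidden advisory burden the dependency listing exposed.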

When the full post-interview test inventory was published, the magnitude of the effort began to emerge. The inventory covered some 60 pages and was far larger and more complicated than the earlier estimates put forth by development.

When I go into one of these projects, I always learn things that I didn't expect, and that's great. I can give management this very valuable information, hopefully in time, so that they can use it to make good decisions. In this case, we discovered something that had a profound effect on the result of the integration effort, and the whole project. Just what we discovered and how we discovered it is discussed in the next section.

Being Ready to Act on What You Learn

As you learn from your interviews, don't be afraid to update your questionnaire with what you have learned. Send new questions back to people that you already interviewed, via email, if you can. Give them the chance to answer at a time that's convenient in a medium that you can copy and paste into your inventory. Every effort is different, and so your questions will be, too. Look for those magic questions that really make the difference to your effort. What do I mean by that? Here are a couple of examples.

What Was Learned in the Real-World Example

In the real-world example, the most important thing that we had to learn was very subtle. When I asked developers and directors what priority to put on various projects, their answer wasn't quite what I expected. It took me about four interviews before I realized that when I asked,

- "What priority do you put on this project?"

The question they were hearing and answering was actually

- "In what order do these projects need to be delivered, assembled, and tested?"

What emerged was the fact that the greatest concern of the developers had to do with the order in which items were integrated and tested, not the individual risk of failure of any module or system.

This realization was quite profound for my management. But it made perfect sense when we realized that the company had never undertaken such a large integration effort before and the individual silos lacked a vehicle for planning a companywide integration effort. In the past they had been able to coordinate joint efforts informally. But this time just talking to each other was not enough. Each silo was focused on their own efforts, each with their own string Gantt on a wall somewhere, but there was no split day integration planning organization in place, only the operations-led integration test effort. So there was no overall critical path or string Gantt for the entire effort.

I added a new column to the test inventory, "Test Order," shown as the sixth column in Table 7.2. But it became clear very quickly that no individual silo could know all the requirements and dependencies to assign the test order of their projects in the full integration effort. This realization raised a large concern in management.

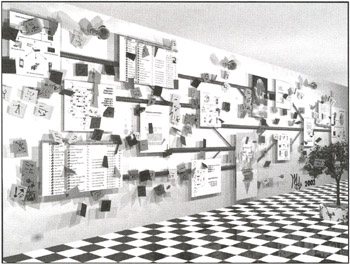

The solution began with inviting all interested parties to a required meeting in a large conference room with a big, clear, empty wall. Operations sponsored the meeting, and it was organized by the test group. We assigned a different color for each of the group silos. For example, accounting (which was the parent silo for billing) was assigned green, the color of money; documentation was gray; development was white; and so on. We printed each line from the preliminary inventory at the top of a piece of appropriately colored paper, complete with PDR, group description, IT owner, and dependencies columns.

In this real-world example, upper management had promised their regulating agency that the transition from two companies to one company would be undetectable to the public and all customers. In this light it seemed that the best approach was to go to bed as two companies and wake up as one company. This approach is called the "big bang" method. It is intended to minimize outages and aftershocks. Critical transitions are often performed using this approach, for example, banking mergers, critical system switchovers, and coups in small countries.

In a big bang, good planning is not enough by itself. Success requires that everything relating to the event be carefully choreographed and rehearsed in advance. Rehearsal is an unfamiliar concept to most developers, and so success usually depends on a specialized integration team with far-reaching powers.

After the prioritization fiasco, I had learned not to "guess." So rather than waste my time doing a preliminary layout on the wall that would let them focus on criticizing my group instead of focusing on their integration issues, I gave each director the stack of papers containing his or her PDRs as they arrived. We also bought a large quantity of 2-by-2-inch sticky pads in the silos' colors and gave each member of a silo a pad of stickies in their color.

We explained that the purpose of the meeting was to clarify the order in which the projects should be integrated and tested. And we invited the developers to stick their PDR project sheets on the wall in the correct order for delivery, integration, and testing. We also asked them to write down on the paper all the integration-related issues as they became aware of them during the meeting. Directors and managers were invited to claim the resulting issues by writing them on a sticky along with their name and putting the sticky where the issue occurred.

I started the meeting off with one PDR in particular that seemed to have dependencies in almost all the silos. I asked the director who owned it to tape it to the wall and lead the discussion. The results were interesting, to say the least. The meeting was scheduled to take an hour; it went on for much longer than that. In fact, the string Gantt continued to evolve for some days after (see Figure 7.3). We testers stayed quiet, took notes, and handed out tape. The exercise turned up over a hundred new dependencies, as well as several unanticipated bottlenecks and other logistical issues.

Figure 7.3: A string Gantt on the wall.

| Fact: | Integration is a matter of timing. |

The "sticky meeting," as it came to be called, was very valuable in establishing where at least some of the potential integration problems were. One look at the multicolored mosaic of small paper notes clustered on and around the project papers, along with the handwritten pages tacked onto some of the major projects, was more than enough to convince upper management that integration was going to require more than just "testing."
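Once the dependencies from every silo are pooled in one place, a first draft of the test order can be derived mechanically. The sketch below is not what we did on the wall; it is a minimal illustration, assuming the pooled dependencies are available as a mapping from each PDR to the PDRs it depends on. The PDR identifiers and the `integration_waves` helper are hypothetical. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to group projects into waves, where each wave depends only on earlier waves.

```python
from graphlib import TopologicalSorter

# Hypothetical PDRs, each mapped to the set of PDRs it depends on.
dependencies = {
    "PDR-101": set(),
    "PDR-102": {"PDR-101"},
    "PDR-103": {"PDR-101"},
    "PDR-104": {"PDR-102", "PDR-103"},
}


def integration_waves(deps):
    """Group PDRs into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()          # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())   # everything whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = integration_waves(dependencies)
```

With this made-up data the result is three waves: `PDR-101` first, then `PDR-102` and `PDR-103` together, then `PDR-104`. A circular dependency makes `prepare()` raise an error, which is itself a useful finding to take back to the owners. A calculation like this only orders what has already been written down; it does not replace the meeting that surfaces the dependencies in the first place.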

The experience of the sticky meeting sparked one of the high-ranking split day business partners to invent and champion the creation of the day-in-the-life scenarios, affectionately called "dilos." The idea behind the day-in-the-life scenarios was to plot, in order, all the activities and processes that a business entity would experience in a day. For example, we could plot the day in the life of a customer, a freight carrier, a customer service rep, and so on.

This champion brought in a team of professional enablers (disposable referees) to provide the problem-solving structure that would take all the participants through the arduous process of identifying each step in the dilos, mapping the steps to the PDRs and the system itself, recording the results, and producing the documents that were generated as a result of these meetings.

The dilos became the source of test requirements for virtually all system integration testing. They were used to plot process interaction and timing requirements. They also served as the foundation for the most important system test scenarios.
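To make the idea concrete, a dilo can be recorded as an ordered list of steps, each tagged with the PDRs it touches. This is a minimal sketch, assuming a structure of my own invention for illustration: the `Step` fields, the sample activities, and the PDR identifiers are hypothetical. It shows two uses mentioned in this chapter: reading off a test scenario in the order the business entity experiences it, and finding projects that do not fit any scenario yet.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Step:
    order: int                 # position in the entity's day
    activity: str
    pdrs: FrozenSet[str]       # hypothetical PDR ids touched by this step


# A hypothetical day in the life of a customer service rep.
dilo_rep = [
    Step(2, "Look up customer account", frozenset({"PDR-102"})),
    Step(1, "Log in to the support console", frozenset({"PDR-101"})),
    Step(3, "Issue a billing adjustment", frozenset({"PDR-102", "PDR-103"})),
]

all_pdrs = {"PDR-101", "PDR-102", "PDR-103", "PDR-104"}


def scenario(dilo):
    """List the activities in the order the entity experiences them."""
    return [step.activity for step in sorted(dilo, key=lambda s: s.order)]


def uncovered(pdrs, dilos):
    """PDRs that no dilo step touches, so a scenario may be missing."""
    touched = set()
    for dilo in dilos:
        for step in dilo:
            touched |= step.pdrs
    return pdrs - touched


steps = scenario(dilo_rep)
missing = uncovered(all_pdrs, [dilo_rep])
```

In this example `missing` contains only `PDR-104`, which signals either a project that does not belong in the integration effort or, more likely, a day-in-the-life scenario that nobody has written yet.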

What Was Learned in the Lightweight Project

During the last set of interviews I did at Microsoft, the magic question was "What do I need to know about your project?" The minute I asked that question, the SME went to the white board, or plugged her PC into the conference room projector and started a PowerPoint presentation, or pulled up several existing presentations that she emailed to me, usually during the meeting, so that they were waiting for me when I got back to my office. I got smarter and put a wireless 802.11 card in my notebook so I could receive the presentation in situ, and I worked with the presentation as we conducted the interview. The big improvement was adding a second scribe to help record the information that was coming at us like water out of a fire hose.

The big lesson here is this: If you can use automation tools to build your inventory, do it. The wireless LAN card cost $150, but it paid for itself before the end of the second interview. The developer emailed her presentation to me in the meeting room. Because of the wireless LAN card, I got it immediately. For the rest of the interview, I took my notes into the presentation, cutting and pasting from the developer's slides into my questionnaire as needed. And I was able to publish my new interview results directly to the team Web site before I left the meeting room. After the meeting, I was finished. I didn't have to go back to my office and find an hour to finish my notes and publish them.

We have discussed automation techniques for documentation in a general way in this chapter. For specific details and picture examples, see Chapter 8, "Tools to Automate the Test Inventory."
