The Steps for the MITs Method


I have already introduced the spectrum of development methodologies in use today. Now I want to discuss the differences in approach and how testing in general and MITs in particular complement the differing priorities of these approaches.

Complementing Your Development Methodologies

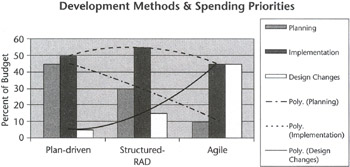

Agile and plan-driven teams use different approaches to design and implement software (see Figure 4.1). Proponents of the plan-driven methodology believe in spending up front to acquire information that will be used to formulate the design, so that they are as well informed as possible before they commit to a plan. This is called money for information (MFI).

Figure 4.1: The spending priorities of different development methods.

Users of the Agile method choose the design early and modify it as they go. So they reserve budget to help them adjust and absorb the changes and surprises that will certainly come. This is called money for flexibility (MFF).

MITs contributes to both approaches, first by providing the tools to gather information and predict what will be needed, and then by giving you the tools to know whether you are on schedule at any time during the process so that you can take appropriate action. However, the order and priority of the steps will necessarily be different for each of the development methods, and for everything in between.

The method I call structured RAD is based on my real-world experiences. It combines the flexibility of the Agile approach with the more intense up-front planning and research of the plan-driven approach. My experiences show that typically there is far less risk of failure in this structured RAD approach than in either of the other two. Notice from the figure that the structured RAD approach typically spends more on implementation than either of the others. Consider that the test budget is included in implementation and that the RAD project typically spins through many incremental evolving development iterations in the implementation phase.

Management will consider various trade-offs regardless of the method they are following. For example, managers will spend MFI when the outcome is uncertain, as with new technologies or systems. MFI is used for unpredictable but resolvable or "must-have" scenarios, like establishing system limits or actual usage patterns. Usually these are things that can be estimated early using simulation.

If the issue is unresolvable, such as a complete human factors failure where the customer rejects the product, or if a technology or component is overwhelmed and simply cannot do the job in the real environment, there must be funding to provide alternatives. This is taken care of by MFF.

Remember, whether something is resolvable is in the eye of the beholder. If the unresolvable problem is discovered late in the development cycle, the entire investment is at risk. This leads to a situation where almost no price is too high for a resolution. This can be a showstopper for the plan-driven effort, which should have discovered the unresolvable problem during the planning phase. Because of its MFF focus, the Agile effort may well have funds left to find a resolution. There is an example of this situation in the Agile project in the next section.

The Software Capability Maturity Model (SW-CMM) [2] tells us what to do in general terms. It does not say how you should do it. The Agile methods, and any other formal methods that provide a set of best practices that specify how to implement the project, can be used with CMM practices. So, maturity can occur any time, any place; it is not dependent on the methods being used to develop or test the project.

Granted, the CMM is currently skewed toward inefficient paperwork and is overburdened by policies, practices, and procedures. But that is simply the tradition and legacy of its past. There is no reason that efficient automated alternatives cannot be used to replace the legacy baggage.

Consider the following sets of steps. They all use the MITs methods, but the prioritization has been tailored to the development method in use.

MITs for a Plan-Driven Test Effort

This is the original MITs process; the first four steps are devoted to planning. The fifth step sets the limits and the agreement, and the last three steps are devoted to actually testing.

1. State your assumptions.
2. Build the test inventory.
3. Perform MITs analysis.
4. Estimate the test effort.
5. Negotiate for the resources to conduct the test effort.
6. Build the test scripts.[3]
7. Conduct testing and track test progress.
8. Measure test performance.

The rest of the chapters in this book follow this outline. Chapter 5 covers most of the metrics used in the method. Chapters 6, 7, and 8 cover the foundation and implementation of the test inventory and all its parts. Chapters 9 and 10 cover the MITs risk analysis techniques used to complete the test sizing worksheet. Chapters 11 through 13 cover the path and data analysis techniques used to identify tests for the inventory. Chapter 14 completes the test estimate and discusses in-process negotiation. (By the way, this information is useful even to an Agile tester because management always wants to know how much testing remains to be done and how long it is going to take.)

This process offers very good tools for planning up front and fits the traditional "best practice" plan-driven approach. It works well with traditional quality assurance policies and change management practices. It offers testers a lot of protection and latitude in the testing phase as well, since they will have negotiated test priorities, test coverage, and bug fix rates in advance. The superior tracking tools also give testers the edge in predicting trouble spots and reacting to trends early. This is a good approach to testing in a business-critical environment.
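As a rough illustration, the eight plan-driven steps and the grouping described above (four planning steps, one step that sets the limits and the agreement, three testing steps) can be written down as data. This is only a sketch: the `Step` type, the phase labels, and the helper function are illustrative names, not part of the MITs method itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    number: int
    name: str
    phase: str  # illustrative label: "planning", "agreement", or "testing"


# Step names come from the list above; the phase labels are an assumption
# that mirrors the "first four / fifth / last three" grouping in the text.
PLAN_DRIVEN_STEPS = [
    Step(1, "State your assumptions", "planning"),
    Step(2, "Build the test inventory", "planning"),
    Step(3, "Perform MITs analysis", "planning"),
    Step(4, "Estimate the test effort", "planning"),
    Step(5, "Negotiate for the resources to conduct the test effort", "agreement"),
    Step(6, "Build the test scripts", "testing"),
    Step(7, "Conduct testing and track test progress", "testing"),
    Step(8, "Measure test performance", "testing"),
]


def steps_in_phase(phase):
    """Return the step names that belong to one phase, in order."""
    return [step.name for step in PLAN_DRIVEN_STEPS if step.phase == phase]


if __name__ == "__main__":
    for phase in ("planning", "agreement", "testing"):
        print(phase, steps_in_phase(phase))
```

Keeping the order explicit makes it easier to see what changes when the steps are rearranged for a different development method.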

The following is the Agile Software Development Manifesto:

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

- Individuals and interactions over processes and tools.
- Working software over comprehensive documentation.
- Customer collaboration over contract negotiation.
- Responding to change over following a plan.

That is, while there is value in the items on the right, we value the items on the left more.

Source: "The Agile Software Development Manifesto," by the AgileAlliance, February 2001, at www.agilemanifesto.org.

MITs for a Structured RAD/Agile Test Effort

This type of effort is characterized by well-trained people and a high level of professional maturity. On the CMM scale, they are at Level 2 and Level 3. Notice how the estimation phase is shortened and the actual test inventory is created using collaboration techniques. Extra importance is placed on the measure, track, and report steps because management has a keen (you could say, life-and-death) interest in the progress of the effort.

Because a RAD/Agile effort is a "code a little, test a little" process that repeats until done, the best source of status information is the tester. Because of proximity, developer and tester are nearly in sync, and the tester is actually exploring the code and measuring the response. Contrary to the popular myth that testers are not required in an Agile effort and that the customer is the best source of information, RAD/Agile recognizes that the customer is biased and not usually trained in the use of test metrics. Also contrary to some of the popular trends, the tester is necessary in an Agile effort to prevent requirements creep, bloat, and spin.

Agile management is likely to demand the highest quality of reporting because that is their best defense against the unknowns that are most certainly lurking out there in the development time line. Remember, Agile efforts don't spend MFI up front, so they usually haven't pretested all the new technologies that they are counting on at the back. Their best defense is early detection of an unrecoverable error so that they can devise an alternative.

In a plan-driven environment, the developers "code a lot, then the testers test a lot." Meanwhile, the developers are moving on to something else and coding something new. So the question "Are we on schedule?" has two answers, one from the developers and one from the testers, and management is likely to find them very different. Also, in the Agile effort, bug turnaround can be nearly instant. In the plan-driven method, developers must stop what they are doing (new) and refocus on what they did (old). This refocusing causes delays and difficulties.

The following list of steps shows the type of modifications necessary to tailor MITs to fit the needs of a RAD/Agile effort.

Steps:

1. Prepare a thumbnail inventory and estimate the test effort (best-guess method, covered in Chapter 7, "How to Build a Test Inventory").
2. Negotiate for the resources to conduct the test effort; budget for head count, test environments (hardware and software), support, and time lines/schedule.
3. Build the test inventory, schedule, and plan of action including targets; include your assumptions.
4. Conduct interviews and reviews on the inventory, schedule, and plan of action; adjust each accordingly. Perform MITs risk analysis (try to renegotiate budget if necessary).
5. Generate the tests, conduct testing, and record the results.
6. Measure, track, and report the following:
   - Test progress using S-curves
   - Test coverage metrics
   - Bug metrics
7. When the product is shipped, write your summary report and your recommendations.
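Test progress on an S-curve is, in essence, a cumulative count plotted over time and compared against the plan. The sketch below is a minimal illustration under that assumption; the weekly figures, the function names, and the 10 percent tolerance are hypothetical and are not taken from the MITs method.

```python
from itertools import accumulate


def cumulative(counts):
    """Running total of per-period counts: the points of an S-curve."""
    return list(accumulate(counts))


def schedule_gap(planned_per_period, actual_per_period):
    """Planned minus actual cumulative tests at the latest period with actuals."""
    if len(actual_per_period) > len(planned_per_period):
        raise ValueError("more actual periods than planned periods")
    if not actual_per_period:
        return 0
    latest = len(actual_per_period) - 1
    planned = cumulative(planned_per_period)
    actual = cumulative(actual_per_period)
    return planned[latest] - actual[latest]


def on_schedule(planned_per_period, actual_per_period, tolerance=0.10):
    """True if the shortfall is within `tolerance` of the plan to date.

    The tolerance is a hypothetical reporting threshold, not a MITs rule.
    """
    periods = len(actual_per_period)
    if periods == 0:
        return True
    planned_to_date = cumulative(planned_per_period)[periods - 1]
    gap = schedule_gap(planned_per_period, actual_per_period)
    return gap <= tolerance * planned_to_date


if __name__ == "__main__":
    planned = [5, 15, 30, 30, 15, 5]  # hypothetical tests planned per week
    actual = [4, 12, 25]              # hypothetical tests completed so far
    print("planned curve:", cumulative(planned))
    print("actual curve: ", cumulative(actual))
    print("gap:", schedule_gap(planned, actual))
    print("on schedule:", on_schedule(planned, actual))
```

Reporting the gap alongside the two curves gives management a single answer to "Are we on schedule?" at any point in the effort.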

MITs for a Free-for-All RAD/Agile Test Effort

This type of project is a typical entrepreneurial effort in a new technology, characterized by lots of unknowns in the feasibility of the system and often plagued by floating goals. It assumes a low maturity level on the part of the sales, management, and development communities.

This situation is best addressed using good measurements and very graphic records, ones that tell the story in clear pictures. Regular reporting of status is very important so that testers keep a continual line of information and feedback going to the decision makers.

The focus of this set of steps is on tester survival. The product will probably succeed or fail based on marketing and sales, not on its own merits.

Steps:

1. Conduct interviews and construct the inventory (ramp up), schedule, and plan of action; perform MITs analysis. Estimate the test effort (best-guess method, covered in Chapter 7, "How to Build a Test Inventory").
2. Prepare a contract to test. Establish and agree to targets for coverage, acceptable bug find and fix rates, code turnover schedules, and so on. Negotiate for the resources to conduct the test effort; budget for head count, test environments (hardware and software), support, and time lines/schedule. Be conservative.
3. Start testing, and record the results.
4. Measure, track, and report:
   - Test progress using S-curves
   - Test coverage metrics
   - Bug metrics
5. When the product is shipped or the contract is up, write your summary report and your recommendations.
6. Don't work without an open PO.
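The targets agreed to in the contract to test (the second step above) are easier to report against when they are recorded explicitly. The sketch below assumes two such targets, test coverage and bug fix rate; the field names and all of the numbers are hypothetical examples, not values recommended by the method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Targets:
    min_coverage: float  # fraction of inventory tests run, 0.0 to 1.0
    min_fix_rate: float  # fraction of found bugs that have been fixed


def status_report(targets, tests_run, tests_in_inventory, bugs_found, bugs_fixed):
    """Compare measured coverage and fix rate with the agreed targets."""
    coverage = tests_run / tests_in_inventory if tests_in_inventory else 0.0
    # With no bugs found yet there is nothing outstanding to fix.
    fix_rate = bugs_fixed / bugs_found if bugs_found else 1.0
    return {
        "coverage": coverage,
        "coverage_met": coverage >= targets.min_coverage,
        "fix_rate": fix_rate,
        "fix_rate_met": fix_rate >= targets.min_fix_rate,
    }


if __name__ == "__main__":
    agreed = Targets(min_coverage=0.80, min_fix_rate=0.75)  # hypothetical contract
    report = status_report(
        agreed,
        tests_run=120,
        tests_in_inventory=200,
        bugs_found=40,
        bugs_fixed=32,
    )
    for key, value in report.items():
        print(key, value)
```

A record like this, updated at each reporting interval, gives the tester a factual basis for the summary report when the product ships or the contract is up.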

Note: Crossing over. Sometimes I will throw in a chart or worksheet from a different kind of effort. Don't be afraid to invent a new way of explaining the situation.

Integrating Projects with Multiple Development Methodologies

I have worked on several large projects where different systems were being developed using different methodologies. MITs gives me all the tools I need to test any of these projects. But again, the order of the steps and activities will be changed to suit the needs of the project.

Each development effort can be tested using an appropriate approach that is tailored to suit its needs. The interesting part comes when you integrate all of these systems. It turns out that good integration is not related to size or development methodology; it's a matter of timing. I use the same tools to integrate a large project as I do a small one. (More about timing issues in Chapter 7, "How to Build a Test Inventory.") How rigorous the approach is will be driven by the goals of upper management and the criticality of the final integrated system. Usually if it is a big project, it will be critical, and so even though many parts of the project may have been developed using Agile methods, the integration of the system will likely be conducted using a plan-driven approach. In any case, timing issues require a good deal of coordination, which requires good communications and planning.

[2] See the Glossary at the back of this book.

[3] This book covers the first five steps of the method in detail. Steps 6 through 8 are left to a future work.
