Solution: Improving the Quality Process


To improve a quality process, you need to examine your technology environment (hardware, networks, protocols, standards) and your market, and develop definitions for quality that suit them. First of all, quality is only achieved when you have balance, that is, the right proportions of the correct ingredients.

| Note | Quality is getting the right balance between timeliness, price, features, reliability, and support to achieve customer satisfaction. |

Picking the Correct Components for Quality in Your Environment

The following are my definitions of the fundamental components that should be the goals of quality assurance.

- The definition of quality is customer satisfaction.
- The system for achieving quality is constant refinement.
- The measure of quality is the profit.
- The target goal of the quality process is a hit every time.

| Note | Quality can be quantified most effectively by measuring customer satisfaction. |

My formula for achieving these goals is:

- Be first to market with the product.
- Ask the right price.
- Get the right features in it: the required stuff and some flashy stuff that will really please the users.
- Keep the unacceptable bugs to an absolute minimum. Corollary: Make sure your bugs are less expensive and less irritating than your competitor's bugs.

As far as I am concerned, this is a formula for creating an excellent product.

A Change in the Balance

Historically, as a market matures, the importance of being first to market diminishes and reliability becomes more important. There are indications that this is already happening in some cutting-edge industries. For example, I just completed a study of 3G communications devices in the Pacific Rim. Apparently, the first-to-market service provider captures the early adopters: in this case, young people, students, and professionals. However, the higher-volume sales of hardware and services go to the provider who can capture the second wave of buyers. This second wave is made up of members of the general public who need time to evaluate the new offerings. The early adopters serve to educate the general public. Further, the second wave of buyers is non-technical and is not as tolerant of bugs in the system as the early adopters.

Elimination by Market Forces of Competitors Who Fail to Provide Sufficient Quality

The developers and testers who are suffering the most from the problems of outdated and inefficient quality assurance practices are the software makers who must provide high reliability. A market-driven RAD shop is likely to use only the quality assurance practices that suit their in-house process and ignore the rest. This is true because a 5 percent increase in reliability is not worth a missed delivery date. Even though the makers of firmware, safety-critical software, and other high-reliability systems are feeling the same pressures to get their product to market, they cannot afford to abandon quality assurance practices.

A student said to me recently, "We make avionics. We test everything, every time. When we find a bug, we fix it, every time, no matter how long it takes." The only way these makers can remain competitive without compromising reliability is by improving, optimizing, and automating their development processes and their quality assurance and control processes.

Clearly, we must control quality. We must encourage and reward invention, and we must be quick to incorporate improvements. What we are looking for is a set of efficient methods, metrics, and tools that strike a balance between controlling process and creating product.

Picking the Correct Quality Control Tools for Your Environment

Earlier I mentioned the traditional tools used by quality assurance to ensure that quality is achieved in the product: records, documents, and activities (such as testing). Improving these tools is a good place to start if you are going to try to improve your quality process. We need all of these tools; it is just a matter of making them efficient and doable.

I also talked about the failings and challenges associated with using paper in our development and quality processes. And, in Chapter 1, I talked about the fact that trained testers using solid methods and metrics are in short supply. In this section, I want to talk about some of the techniques I have used to improve these three critical quality assurance tools.

Automating Record Keeping

We certainly need to keep records, and we need to write down our plans, but we can't spend time doing it. The records must be generated automatically as a part of our development and quality process. The records that tell us who, what, where, when, and how should not require special effort to create, and they should be maintained automatically every time something is changed.
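The idea of records that maintain themselves can be shown with a small sketch. Assuming a hypothetical `TrackedDocument` class (not any particular product's API), every call to `update` writes a who/what/where/when/how record as a side effect, so no one has to fill in a change log by hand.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ChangeRecord:
    """One automatically generated record: who, what, where, when, and how."""
    who: str
    what: str
    where: str
    when: datetime
    how: str


@dataclass
class TrackedDocument:
    """Hypothetical document store that logs every change automatically."""
    name: str
    content: str = ""
    history: list[ChangeRecord] = field(default_factory=list)

    def update(self, user: str, new_content: str, reason: str) -> None:
        # The record is produced by the change itself, not by a separate step.
        self.history.append(
            ChangeRecord(
                who=user,
                what=f"content changed ({len(self.content)} -> {len(new_content)} chars)",
                where=self.name,
                when=datetime.now(timezone.utc),
                how=reason,
            )
        )
        self.content = new_content


# Usage: two edits produce two records with no extra effort from the editors.
plan = TrackedDocument("test-plan.doc")
plan.update("alice", "Draft 1", "initial draft")
plan.update("bob", "Draft 2 with risks", "added risk section")
```

The design choice is simply that there is no code path that changes the content without also appending a record.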

The most important (read: cost-effective) test automation I have developed in the last four years has not been the preparation of automated test scripts. It has been the automation of the documentation process, via the inventory, and of test management, by instituting online forms and a single-source repository for all test documentation and process tracking.

I built my first test project Web site in 1996. It proved to be such a useful tool that the company kept it in service for years after the product was shipped. It was used by the support groups to manage customer issues, internal training, and upgrades to the product for several years.

This automation of online document repositories for test plans, scripts, scheduling, bug reporting, shared information, task lists, and discussions has been so successful that it has taken on a life of its own as a set of project management tools that enable instant collaboration amongst team members, no matter where they are located.

Today, collaboration is becoming a common theme as more and more development efforts begin to use the Agile methodologies. [1] I will talk more about this methodology in Chapter 3, "Approaches to Managing Software Testing." Collaborative Web sites are beginning to be used in project management and intranet sites to support this type of effort.

I have built many collaborative Web sites over the years; some were more useful than others, but they eliminated whole classes of errors, because there was a single source for all documentation, schedules, and tasks that was accessible to the entire team.

Web sites of this type can do a lot to automate our records, improve communications, and speed the processing of updates. This objective is accomplished through the use of team user profiles, role-based security, dynamic forms and proactive notification, and messaging features. Task lists, online discussions, announcements, and subscription-based messaging can automatically send emails to subscribers when events occur.
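Subscription-based messaging is essentially a publish/subscribe arrangement. The sketch below is a minimal illustration of that pattern, not how SharePoint or any other product implements it; the `EventHub` class, the event names, and the addresses are hypothetical, and the email sender is replaced by a function that appends to a list.

```python
from collections import defaultdict
from typing import Callable

# Stand-in for an email sender: receives (address, message).
Notifier = Callable[[str, str], None]


class EventHub:
    """Minimal publish/subscribe hub: subscribers register for an event type
    and are notified automatically whenever that event is published."""

    def __init__(self, notify: Notifier) -> None:
        self._notify = notify
        self._subscribers: dict[str, set[str]] = defaultdict(set)

    def subscribe(self, event_type: str, address: str) -> None:
        self._subscribers[event_type].add(address)

    def publish(self, event_type: str, message: str) -> int:
        # Sorted for a deterministic delivery order; returns the recipient count.
        recipients = sorted(self._subscribers.get(event_type, set()))
        for address in recipients:
            self._notify(address, f"[{event_type}] {message}")
        return len(recipients)


# Usage: only the subscribers to "bug-filed" hear about the new bug.
outbox: list[tuple[str, str]] = []
hub = EventHub(lambda addr, msg: outbox.append((addr, msg)))
hub.subscribe("bug-filed", "dev-lead@example.com")
hub.subscribe("bug-filed", "test-lead@example.com")
hub.subscribe("task-assigned", "tester@example.com")
sent = hub.publish("bug-filed", "Bug 101: order total rounds incorrectly")
```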

Several vendors are offering Web sites and application software that perform these functions. I use Microsoft SharePoint Team Services to perform these tasks today. It comes free with Microsoft Office XP, so it is available to most corporate testing projects. There are many names for a Web site that performs these types of tasks; I prefer to call such a Web site a collaborative site.

Improving Documentation Techniques

Documentation techniques can be improved in two ways. First, you can improve the way documents are created and maintained by automating the handling of changes in a single-source environment. Second, you can improve the way the design is created and maintained by using visualization and graphics instead of verbiage to describe systems and features. A graphical visualization of a product or system can be much easier to understand, review, update, and maintain than a written description of the system.

Improving the Way Documents Are Created, Reviewed, and Maintained

We can greatly improve the creation, review, and approval process for documents if they are (1) kept in a single-source repository and (2) reviewed by the entire team with all comments being collected and merged automatically into a single document. Thousands of hours of quality assurance process time can be saved by using a collaborative environment with these capabilities.

For example, in one project that I managed in 2001, the first phase of the project used a traditional process of distributing the design documents via email and paper, collecting comments and then rolling all the comments back into a single new version. Thirty people reviewed the documents. Then, it took a team of five documentation specialists 1,000 hours to roll all the comments into the new version of the documentation.

At this point I instituted a Microsoft SharePoint Team Services collaborative Web site, which took 2 hours to create and 16 hours to write the instructions to the reviewers and train the team to use the site. One document specialist was assigned as the permanent support role on the site to answer questions and reset passwords. The next revision of the documents included twice as many reviewers, and only 200 hours were spent rolling the comments into the next version of the documentation. Total time savings for processing the reviewers' comments was about 700 hours.
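The figures in this example can be checked with a little arithmetic. The sketch uses only the numbers quoted above; the document specialist's ongoing support hours are not given, so the net figure is computed before that cost is subtracted and should be read as an upper bound.

```python
# Figures quoted in the text for the two review cycles.
traditional_reviewers = 30
traditional_merge_hours = 1000

collab_reviewers = 60            # "twice as many reviewers"
collab_merge_hours = 200
setup_hours = 2 + 16             # create the site + instructions and training

gross_savings = traditional_merge_hours - collab_merge_hours   # 800 hours
net_before_support = gross_savings - setup_hours               # 782 hours

# Merge effort per reviewer's input, before and after.
hours_per_reviewer_before = traditional_merge_hours / traditional_reviewers  # about 33.3
hours_per_reviewer_after = collab_merge_hours / collab_reviewers             # about 3.3
reduction_factor = hours_per_reviewer_before / hours_per_reviewer_after       # 10
```

Measured per reviewer, the merge effort fell by a factor of about ten, even though the team doubled the number of reviewers.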

The whole concept of having to call in old documents and redistribute new copies in a project of any size is so wasteful that an automated Web-based system can usually pay for itself in the first revision cycle.
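The mechanical part of "rolling the comments in" is what a single-source system takes off the documentation specialists' hands. As a sketch only (the `Comment` type, `merge_comments` function, and section names are hypothetical, not a SharePoint feature), comments from any number of reviewers can be grouped under the section they address, in document order, with comments on missing sections kept rather than lost.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Comment:
    reviewer: str
    section: str   # hypothetical section identifiers such as "2 Scope"
    text: str


def merge_comments(sections: list[str], comments: list[Comment]) -> str:
    """Roll every reviewer's comments into one consolidated document."""
    by_section: dict[str, list[Comment]] = defaultdict(list)
    for c in comments:
        by_section[c.section].append(c)

    lines: list[str] = []
    for section in sections:
        lines.append(section)
        for c in by_section.get(section, []):
            lines.append(f"  [{c.reviewer}] {c.text}")

    # Comments on sections that no longer exist are reported, not dropped.
    orphans = [c for c in comments if c.section not in sections]
    if orphans:
        lines.append("Unmatched comments")
        for c in orphans:
            lines.append(f"  [{c.reviewer}] ({c.section}) {c.text}")
    return "\n".join(lines)


# Usage: three reviewers, one commenting on a section that was removed.
doc = merge_comments(
    ["1 Overview", "2 Scope"],
    [
        Comment("ann", "2 Scope", "Add the batch interface."),
        Comment("raj", "1 Overview", "Define 'order'."),
        Comment("lee", "3 Risks", "Missing risk table."),
    ],
)
```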

Improving the Way Systems Are Described: Replacing Words with Pictures

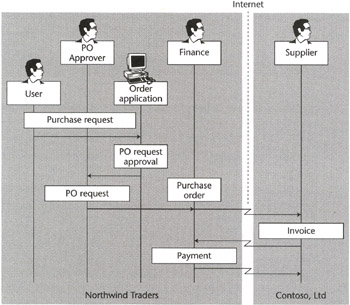

Modeling tools are being used to replace descriptive commentary documentation in several industries. For example, BizTalk is an international standard that defines an environment for interorganizational workflows that support electronic commerce and supply-chain integration. As an example of document simplification, consider the illustration in Figure 2.1. It shows the interaction of a business-to-business automated procurement system implemented by the two companies. Arrows denote the flow of data among roles and entities.

Figure 2.1: Business-to-business automated procurement system between two companies.

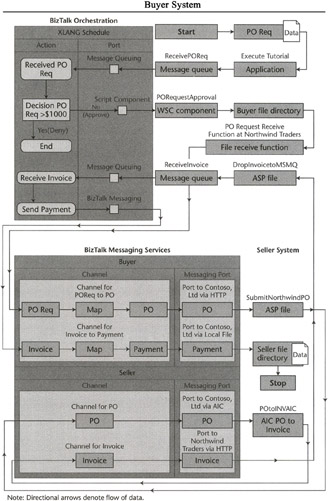

Microsoft's BizTalk Server allows business analysts and programmers to design the flow of B2B data using the graphical user interface of Visio (a drawing program). They actually draw the process flow for the data and workflows. This drawing represents a business process. In BizTalk Orchestration Designer, once a drawing is complete, it can be compiled and run as an XLANG schedule. XLANG is part of the BizTalk standard. This process of drawing the system is called orchestration. Once the analyst has created this visual design document, the programmer simply wires up the application logic by attaching programs to the various nodes in the graphical design image.

Figure 2.2 shows the movement of the documents through the buyer and seller systems as created in the orchestration process. It also shows the interaction between the XLANG schedule, BizTalk Messaging Services, and the auxiliary components.

Figure 2.2: The movement of documents through the system.
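The division of labor in orchestration (the analyst draws the flow, the programmer attaches a program to each node) can be mimicked in a few lines. This is a conceptual sketch only; it does not use XLANG or any BizTalk API, and the node names, handlers, and `run` function are hypothetical, loosely following the buyer/seller flow of Figure 2.1.

```python
from typing import Callable, Dict, List, Optional, Tuple

Handler = Callable[[dict], dict]

# The "drawing": each node names the node that follows it (None marks the end).
flow: Dict[str, Optional[str]] = {
    "CreatePO": "SendPO",
    "SendPO": "ReceiveInvoice",
    "ReceiveInvoice": "PayInvoice",
    "PayInvoice": None,
}

# The "wiring": a program attached to each node of the drawing.
handlers: Dict[str, Handler] = {
    "CreatePO": lambda d: {**d, "po": "PO-1001"},
    "SendPO": lambda d: {**d, "po_sent": True},
    "ReceiveInvoice": lambda d: {**d, "invoice": "INV-77"},
    "PayInvoice": lambda d: {**d, "paid": True},
}


def run(flow: Dict[str, Optional[str]], start: str,
        handlers: Dict[str, Handler], doc: dict) -> Tuple[dict, List[str]]:
    """Walk the drawn flow from `start`, passing the business document
    through the program attached to each node in turn."""
    step: Optional[str] = start
    trace: List[str] = []
    while step is not None:
        if step not in handlers:
            raise KeyError(f"no program attached to node {step!r}")
        doc = handlers[step](doc)
        trace.append(step)
        step = flow[step]
    return doc, trace


result, trace = run(flow, "CreatePO", handlers, {"buyer": "Company A"})
```

Changing the process means editing the `flow` table (the picture), not rewriting the handlers, which is the maintainability argument made in the text.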

| Fact: | One picture (like Figure 2.2) is better than thousands of words of commentary-style documentation and costs far less to create and maintain. |

It can take anywhere from 15 to 30 pages of commentary to adequately describe this process. Yet this graphic does it in one page. This type of flow mapping is far more efficient than describing the process in a commentary. It is also far more maintainable. And, as you will see when we discuss logic flow maps in Chapters 11 and 12, this type of flow can be used to generate the tests required to validate the system during the test effort.
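One common way to turn a flow map into tests is to enumerate every path from start to end and treat each path as a candidate test case. The sketch below shows that generic technique, assuming an acyclic map; it is not necessarily the logic-flow-map method described in later chapters, and the procurement nodes and branches are hypothetical.

```python
from typing import Dict, List

# Hypothetical procurement flow with two decision points.
flow_map: Dict[str, List[str]] = {
    "Start": ["CheckCredit"],
    "CheckCredit": ["Approve", "Reject"],
    "Approve": ["CheckStock"],
    "CheckStock": ["Ship", "Backorder"],
    "Backorder": ["Ship"],
    "Ship": ["End"],
    "Reject": ["End"],
    "End": [],
}


def all_paths(graph: Dict[str, List[str]], node: str, goal: str) -> List[List[str]]:
    """Depth-first enumeration of every node-to-goal path in an acyclic flow map."""
    if node == goal:
        return [[goal]]
    paths: List[List[str]] = []
    for nxt in graph[node]:
        for tail in all_paths(graph, nxt, goal):
            paths.append([node] + tail)
    return paths


# Each path through the map is one test case to run against the system.
test_cases = all_paths(flow_map, "Start", "End")
```

Flows that contain loops would need a bound on how many times each loop is taken; otherwise the enumeration does not terminate.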

Visualization techniques create systems that are self-documenting. I advocate using this type of visual approach to describing systems at every opportunity. As I said in Chapter 1, the most significant improvements in software quality have not been made through testing or quality assurance, but through the application of standards.

Trained Testers Using Methods and Metrics That Work

Finally, we have the quality assurance tool for measuring the quality of the software product: testing.

In many organizations, the testers are limited to providing information based on the results of verification and validation. This is a sad under-use of a valuable resource. This thinking is partly a holdover from the traditional manufacturing-based quality assurance problems. This traditional thinking assumes that the designers can produce what the users want the first time, all by themselves. The other part of the problem is the perception that testers don't produce anything, except possibly bugs.

It has been said that "you can't test the bugs out." This is true. Test means to verify: to compare an actuality to a standard. It says nothing about taking corrective action. However, enough of the right bugs must be removed during, or as a result of, the test effort, or the test effort will be judged a failure.

| Note | In reality, the tester's product is the delivered system, the code written by the developers minus the bugs (that the testers persuaded development to remove) plus the innovations and enhancements suggested through actual use (that testers persuaded developers to add). |

The test effort is the process by which testers produce their product.

The quality of the tools that testers use in the test effort directly affects the quality of the outcome of the process. A good method isn't going to help the bottom line if the tools needed to support it are not available.

The methods and metrics that the testers use during the test effort should be ones that add value to the final product. The testers should be allowed to choose the tools they need to support the methods and metrics that they are using.

Once the system is turned over to test, the testers should own it. After all, the act of turning the code over for testing states implicitly that the developers have put everything into it that they currently believe should be there, for that moment at least, and that it is ready to be reviewed.

As I observed in Chapter 1, there is a shortage of trained testers, and practicing software testers are not noted for using formal methods and metrics. Improving the tools used by untrained testers will not have as big a benefit as training the testers and giving them the tools that they need to succeed.

[1] The Agile Software Development Manifesto, by the AgileAlliance, February 2001, at www.agilemanifesto.org.
