3.1 Types of reviews

Reviews take on various aspects depending on their type. The two broad types of reviews are as follows:

- In-process (generally considered informal) review;
- Phase-end (generally considered formal) review.

3.1.1 In-process reviews

In-process reviews are informal reviews intended to be held during the conduct of each SDLC phase. Informal implies that there is little reporting to the customer on the results of the review. Further, they are often called peer reviews because they are usually conducted by the producer's peers. Peers may be coworkers or others who are involved at about the same level of product effort or detail.

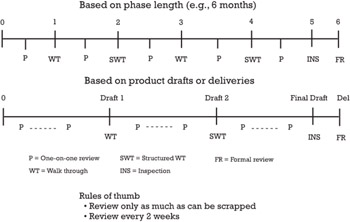

Scheduling of the reviews, while intentional and a part of the overall project plan, is rather flexible. This allows for reviews to be conducted when necessary: earlier if the product is ready, later if the product is late. One scheduling rule of thumb is to review no more than the producer is willing to throw away. Another rule of thumb is to have an in-process review every two weeks. Figure 3.2 offers suggestions on the application of these two rules of thumb. Each project will determine the appropriate rules for its own in-process reviews.

Figure 3.2: Scheduling rules of thumb.
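The two scheduling rules of thumb can be read as a simple check. The following is a minimal sketch, not a prescribed procedure: the names `WorkInProgress`, `throwaway_limit`, and `review_due` are hypothetical, and combining the two rules with a logical "or" is an assumption made for illustration. Each project would substitute its own rules and units of work.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class WorkInProgress:
    last_review: date       # date of the most recent in-process review
    unreviewed_units: int   # work produced since then (pages, modules, ...)
    throwaway_limit: int    # most unreviewed work the producer would accept redoing


def review_due(work: WorkInProgress, today: date,
               max_interval: timedelta = timedelta(weeks=2)) -> bool:
    """Return True when either rule of thumb says a review should be held.

    Assumption: the rules are combined with "or"; the text leaves the
    exact combination to each project.
    """
    # Rule 1: review no more than the producer is willing to throw away.
    too_much_at_risk = work.unreviewed_units >= work.throwaway_limit
    # Rule 2: hold an in-process review every two weeks.
    too_long_since_last = (today - work.last_review) >= max_interval
    return too_much_at_risk or too_long_since_last
```

Because the schedule is meant to be flexible, a check like this would more plausibly prompt a conversation than force a meeting.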

There is a spectrum of rigor across the range of in-process reviews. The least rigorous of these reviews is the peer review. It is followed, in increasing rigor, by walk-throughs of the in-process product, such as a requirements document or a design specification. The most rigorous is the inspection. These are discussed more completely next. Table 3.1 summarizes some of the characteristics of in-process reviews.

| Review Type | Records | CM Level | Participants | Stress Level |
|---|---|---|---|---|
| One-on-one | None | None | Coworker | Very low |
| Walk-through | Marked-up copy or defect reports | Probably none | Interested project members | Low to medium |
| Structured walk-through | Defect reports | Informal | Selected project members | Medium |
| Inspection | Defect report database | Formal | Specific role players | High |

One-on-one reviews

The one-on-one review is the least rigorous of the reviews and, thus, usually the least stressful. In this review, the producer asks a coworker to check the product to make sure that it is basically correct. Questions like, "Did I get the right information in the right place?" and "Did I use the right formula?" are the main concerns of the one-on-one review. The results of the review are often verbal, or at the most, a red mark or two on the draft of the product.

One-on-one reviews are most often used during the drafting of the product, or the correcting of a defect, and cover small parts at a time. Since there is virtually no distribution of records of the defects found or corrections suggested, the producer feels little stress or threat that he or she will be seen as having done a poor job.

Walk-throughs

As the producer of a particular product gets to convenient points in his or her work, a group of peers should be requested to review the work as the producer describes, or walks through, the product with them. In this way, defects can be found and corrected immediately, before the product is used as the basis for the next-phase activities. Since it is usually informal and conducted by the producer's peers, there is less tendency on the part of the producer to be defensive and protective, leading to a more open exchange with correspondingly better results.

Participants in the walk-through are usually chosen for their perceived ability to find defects. The leader must make sure that the presence of a lead or senior person does not inhibit participation by others.

In a typical walk-through, the producer distributes the material to be reviewed at the meeting. While earlier distribution of the material would probably enhance the value of the walk-through, most descriptions of walk-throughs do not require prior distribution. The producer then walks the reviewers through the material, describing his or her thought processes, intentions, approaches, assumptions, and perceived constraints as they apply to the product. The author should not try to justify or explain his or her work. If there is confusion about a paragraph or topic, the author may explain what he or she meant so that the review can continue. The need for that explanation, however, is itself a defect and will be recorded for the author to clarify in the correction process.

Reviewers are encouraged to comment on the producer's approach and results with the intent of exposing defects or shortcomings in the product. On the other hand, only deviations from the product's requirements are open to criticism. Comments, such as "I could do it faster or more elegantly," are out of order. Unless the product violates a requirement, standard, or constraint or produces the wrong result, improvements in style and so on are not to be offered in the walk-through meeting. Obviously, some suggestions are of value and should be followed through outside of the review.

It must be remembered that all reviews take resources and must be directed at the ultimate goal of reducing the number of defects delivered to the user or customer. Thus, it is not appropriate to try to correct identified defects during the review itself. Correction is the task of the author after the review.

Results of the walk-through should be recorded on software defect reports such as those discussed in Chapter 6. This makes the defects found a bit more public and can increase the stress and threat to the producer. It also helps ensure that all defects found in the walk-through are addressed and corrected.
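The scoping rule for walk-through comments (record deviations from requirements, standards, constraints, or correct results; leave stylistic suggestions for later) can be sketched as a small triage step. This is an illustrative sketch only: `Basis`, `Comment`, and `triage` are hypothetical names, and the category list is an assumption drawn from the paragraphs above, not the defect report format of Chapter 6.

```python
from dataclasses import dataclass
from enum import Enum


class Basis(Enum):
    REQUIREMENT = "violates a requirement"
    STANDARD = "violates a standard"
    CONSTRAINT = "violates a constraint"
    WRONG_RESULT = "produces the wrong result"
    UNCLEAR_TEXT = "needed the author's explanation"
    STYLE = "style or elegance suggestion"


# Assumption: everything except style suggestions is recorded as a defect.
IN_SCOPE = {
    Basis.REQUIREMENT,
    Basis.STANDARD,
    Basis.CONSTRAINT,
    Basis.WRONG_RESULT,
    Basis.UNCLEAR_TEXT,
}


@dataclass
class Comment:
    location: str   # where in the product the comment applies
    text: str       # what the reviewer said
    basis: Basis    # why the reviewer raised it


def triage(comments):
    """Split comments into defects to record and suggestions to defer."""
    defects = [c for c in comments if c.basis in IN_SCOPE]
    deferred = [c for c in comments if c.basis not in IN_SCOPE]
    return defects, deferred
```

The deferred list is kept rather than discarded, since some suggestions are of value and should be followed through outside of the review.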

Inspections

Another, more rigorous, in-process review is the inspection, as originally described by Fagan (see Additional reading). While its similarities with walk-throughs are great, the inspection requires a more specific cast of participants and more elaborate minutes and action item reporting. Unlike the walk-through, which may be documented only within the unit development folder (UDF), the inspection requires a written report of its results and strict recording of trouble reports.

Whether you use an inspection as specifically described by Fagan or some similar review, the best results are obtained when the formality and roles are retained. A reader who is familiar enough with the product to paraphrase the documentation, rather than simply read it aloud, is a necessity. The best candidate for reader is likely the person for whom the product being reviewed will be input. Who has more interest in having a quality product on which to base his or her efforts?

Fagan calls for a moderator who is charged with logistics preparation, inspection facilitation, and formal follow-up on implementing the inspection's findings. Fagan also defines the role of the recorder as the person who creates the defect reports, maintains the defect database for the inspection, and prepares the required reports. Other participants have various levels of personal interest in the quality of the product.

Typically, an inspection has two meetings. The first is a mini walk-through of the material by the producer. This is not on the scale of a regular walk-through but is intended to give an overview of the product and its place in the overall system. At this meeting, the moderator sees that the material to be inspected is distributed. Individual inspectors are then scheduled to review the material prior to the inspection itself. At the inspection, the reader leads the inspectors through the material and leads the search for defects. At the inspection's conclusion, the recorder prepares the required reports and the moderator oversees the follow-up on the corrections and modifications found to be needed by the inspectors.

Because it is more rigorous, the inspection tends to be more costly in time and resources than the walk-through and is generally used on projects with higher risk or complexity. However, the inspection is usually more successful at finding defects than the walk-through, and some companies use only the inspection as their in-process review.

It can also be seen that the inspection, with its regularized recording of defects, will be the most stressful and threatening of the in-process reviews. Skilled managers will remove the defect histories from their bases of performance evaluations. By doing so, and treating each discovered defect as one that didn't get to the customer, the manager can reduce the stress associated with the reviews and increase their effectiveness.

The software quality role with respect to inspections is also better defined. The software quality practitioner is a recommended member of the inspection team and may serve as the recorder. The resolution of action items is carefully monitored by the software quality practitioner, and the results are formally reported to project management.

In-process audits

Audits, too, can be informal as the SDLC progresses. Most software development methodologies impose documentation content and format requirements. Generally, informal audits are used to assess the product's compliance with those requirements for the various products.

While every document and interim development product is a candidate for an audit, one common informal audit is that applied to the UDF or software development notebook. The notebook or folder is the repository of the notes and other material that the producer has collected during the SDLC. Its required contents should be spelled out by a standard, along with its format or arrangement. Throughout the SDLC, the software quality practitioner should audit the UDFs to make sure that they are being maintained according to the standard.

3.1.2 Phase-end reviews

Phase-end reviews are formal reviews that usually occur at the end of the SDLC phase, or work period, and establish the baselines for work in succeeding phases. Unlike the in-process reviews, which deal with a single product, phase-end reviews include examination of all the work products that are to have been completed during that phase. A review of project management information, such as budget, schedule, and risk, is especially important.

For example, the software requirements review (SRR) is a formal examination of the requirements document and sets the baseline for the activities in the design phase to follow. It should also include a review of the system test plan, a draft of the user documentation, perhaps an interface requirements document, quality and CM plans, any safety and risk concerns or plans, and the actual status of the project with regard to budget and schedule plans. In general, anything expected to have been produced during that phase is to be reviewed. The participants include the producer and software quality practitioner as well as the user or customer. Phase-end reviews are a primary means of keeping the user or customer aware of the progress and direction of the project. A phase-end review is not considered finished until the action items have been closed, software quality has approved the results, and the user or customer has approved going ahead with the next phase. These reviews permit the user or customer to verify that the project is proceeding as intended or to give redirection as needed. They are also major reporting points for software quality to indicate to management how the project is adhering to its standards, requirements, and resource budgets.
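The three completion conditions for a phase-end review (action items closed, software quality approval, and user or customer approval to proceed) lend themselves to a simple status check. The sketch below assumes hypothetical names (`ActionItem`, `PhaseEndReview`, `is_finished`) and models each approval as a plain boolean, which is a simplification of any real sign-off process.

```python
from dataclasses import dataclass, field


@dataclass
class ActionItem:
    description: str
    closed: bool = False


@dataclass
class PhaseEndReview:
    name: str
    action_items: list = field(default_factory=list)
    quality_approved: bool = False    # software quality has approved the results
    customer_approved: bool = False   # user or customer approves the next phase

    def is_finished(self) -> bool:
        """True only when all three completion conditions hold."""
        all_closed = all(item.closed for item in self.action_items)
        return all_closed and self.quality_approved and self.customer_approved
```

A single open action item keeps the review open even when both approvals have been given, which mirrors the go-or-no-go character of these reviews.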

Phase-end reviews should be considered go-or-no-go decision points. At each phase-end review, there should be sufficient status information available to examine the project's risk, including its viability, the likelihood that it will be completed on or near budget and schedule, its continued need, and the likelihood that it will meet its original goals and objectives. History has documented countless projects that have ended in failure or delivered products that were unusable. One glaring example is that of a bank finally canceling a huge project. The bank publicly admitted to losing more than $150 million and laying off approximately 400 developers. The bank cited inadequate phase-end reviews as the primary reason for continuing the project as long as it did. Properly conducted phase-end reviews can identify projects in trouble and prompt the necessary action to recover. They can also identify projects that are no longer viable and should be cancelled.

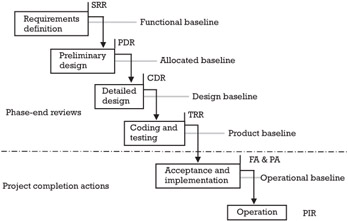

Figure 3.3 shows various phase-end reviews throughout the SDLC. Formal reviews, such as the software requirements review, preliminary design review (PDR), and the critical design review (CDR) are held at major milestone points within the SDLC and create the baselines for subsequent SDLC phases. The test readiness review (TRR) is completed prior to the onset of acceptance or user testing.

Figure 3.3: Typical phase-end reviews.

Also shown are project completion audits conducted to determine readiness for delivery and the postimplementation review, which assesses project success after a period of actual use.

Table 3.2 presents the typical subjects of each of the four major development phase-end reviews. Those documents listed as required are considered the minimum acceptable set of documents required for successful software development and maintenance.

| Review | Required | Others |
|---|---|---|
| Software requirements | Software requirements specification; Software test plan; Software development plan; Quality system plan; CM plan; Standards and procedures; Cost/schedule status report | Interface requirements specification |
| Preliminary design | Software top-level design; Software test description; Cost/schedule status report | Interface design; Database design |
| Critical design | Software detailed design; Cost/schedule status report | Interface design; Database design |
| Test readiness | Software product specification; Software test procedures; Cost/schedule status report | User's manual; Operator's manual |
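The required column of Table 3.2 can serve as a checklist before a phase-end review is convened. The following sketch encodes that column as a lookup and reports what is still missing; the names `REQUIRED_DOCUMENTS` and `missing_documents` are hypothetical, and matching documents by exact title is an assumption made to keep the example short.

```python
# Required documents per phase-end review, transcribed from Table 3.2.
REQUIRED_DOCUMENTS = {
    "Software requirements": {
        "Software requirements specification",
        "Software test plan",
        "Software development plan",
        "Quality system plan",
        "CM plan",
        "Standards and procedures",
        "Cost/schedule status report",
    },
    "Preliminary design": {
        "Software top-level design",
        "Software test description",
        "Cost/schedule status report",
    },
    "Critical design": {
        "Software detailed design",
        "Cost/schedule status report",
    },
    "Test readiness": {
        "Software product specification",
        "Software test procedures",
        "Cost/schedule status report",
    },
}


def missing_documents(review, submitted):
    """Return the required documents not yet submitted, in sorted order."""
    return sorted(REQUIRED_DOCUMENTS[review] - set(submitted))
```

For instance, calling `missing_documents("Critical design", ["Software detailed design"])` would report that the cost/schedule status report is still outstanding.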

3.1.3 Project completion analyses

Two formal audits, the functional audit (FA) and the physical audit (PA), are held as indicated in Figure 3.3. These two events, held at the end of the SDLC, are the final analyses of the software product to be delivered against its approved requirements and its current documentation, respectively.

The FA compares the software system being delivered against the currently approved requirements for the system. This is usually accomplished through an audit of the test records. The PA is intended to assure that the full set of deliverables is an internally consistent set (e.g., the user manual is the correct one for this particular version of the software). The PA relies on the CM records for the delivered products. The software quality practitioner frequently is charged with the responsibility of conducting these two audits. In any case, the software quality practitioner must be sure that the audits are conducted and must report the findings of the audits to management.
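The consistency check at the heart of the PA can be illustrated with a small comparison against CM records. This is a sketch under stated assumptions: the CM records are modeled as a hypothetical dictionary mapping each deliverable to the software version it describes, and `inconsistent_deliverables` is an invented helper, not part of any CM tool.

```python
def inconsistent_deliverables(release_version, cm_records):
    """Return deliverables whose recorded version differs from the release.

    cm_records: dict mapping deliverable name -> software version it
    applies to (an assumed, simplified view of the CM records).
    """
    return sorted(
        name
        for name, version in cm_records.items()
        if version != release_version
    )
```

An empty result suggests the deliverables form an internally consistent set for that release; any names returned would be findings to report to management.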

The postimplementation review (PIR) is held once the software system is in production. The PIR usually is conducted 6 to 9 months after implementation. Its purpose is to determine whether the software has, in fact, met the user's expectations for it in actual operation. Data from the PIR is intended for use by the software quality practitioner to help improve the software development process. Table 3.3 presents some of the characteristics of the PIR.

| Characteristic | Description |
|---|---|
| Timing | 3 to 6 months after software system implementation |
| Software system goals versus experience | Return on investment; Schedule results; User response; Defect history |
| Review results usage | Input to process analysis and improvement; Too often ignored |

The role of the software quality practitioner is often that of conducting the PIR. This review is best used as an examination of the results of the development process. Problems with the system as delivered can point to opportunities to improve the development process and verify the validity of previous process changes.
