Network Load Balancing Architecture

This section describes the architecture of Network Load Balancing in Windows Server 2003:

- How Network Load Balancing Works

- Managing Application State

- Detailed Architecture

- Distribution of Cluster Traffic

- Load Balancing Algorithm

- Convergence

- Remote Control

How Network Load Balancing Works

Network Load Balancing scales the performance of a server-based program, such as a Web server, by distributing its client requests across multiple servers within the cluster. With Network Load Balancing, each incoming IP packet is received by each host but accepted only by the intended recipient. The cluster hosts concurrently respond to different client requests, even multiple requests from the same client. For example, a Web browser might obtain the multiple images within a single Web page from different hosts in a Network Load Balancing cluster. This speeds up processing and shortens the response time to clients.

Each Network Load Balancing host can specify the load percentage that it will handle, or the load can be equally distributed across all of the hosts. Network Load Balancing servers use a distributed algorithm to statistically map workload among the hosts of the cluster according to the load percentages. This load balance dynamically changes when hosts enter or leave the cluster.

The load balance does not change in response to varying server loads (such as CPU or memory usage). For applications such as Web servers, which have numerous clients and relatively short-lived client requests, statistical partitioning of the workload efficiently provides excellent load balance and fast response to cluster changes.

Network Load Balancing cluster servers emit a heartbeat to other hosts in the cluster and listen for the heartbeat of other hosts. If a server in a cluster fails, the remaining hosts adjust and redistribute the workload while maintaining continuous service to their clients.

Existing connections to an offline host are lost, but the Internet services remain continuously available. In most cases (for example, with Web servers), client software automatically retries the failed connections, and the clients experience only a few seconds' delay in receiving a response.

Managing Application State

Application state refers to data maintained by a server application on behalf of its clients. If a server application (such as a Web server) maintains state information about a client session that spans multiple TCP connections, it's usually important that all TCP connections for this client be directed to the same cluster host. A shopping cart at an e-commerce site and Secure Sockets Layer (SSL) state information are examples of a client's session state. Network Load Balancing can be used to scale applications that manage session state that spans multiple connections.

When the Network Load Balancing client affinity parameter setting is enabled, Network Load Balancing directs all TCP connections from a given client to the same cluster host. This allows session state to be maintained in host memory. If a server or network failure occurs during a client session, a new logon may be required to reauthenticate the client and reestablish session state. Also, adding a new cluster host redirects some client traffic to the new host, which can affect sessions, although ongoing TCP connections are not disturbed.

Client/server applications that manage client state so that it can be retrieved from any cluster host (for example, by embedding state within cookies or pushing it to a back-end database) do not need to use Network Load Balancing client affinity.

To further assist in managing session state, Network Load Balancing provides an optional client affinity setting that directs all client requests from a TCP/IP Class C address range to a single cluster host. This feature enables clients that use multiple proxy servers to have their TCP connections directed to the same cluster host.

The use of multiple proxy servers at the client's site causes requests from a single client to appear to originate from different systems. Assuming that all of the client's proxy servers are located within the same 254-host Class C address range, Network Load Balancing ensures that client sessions are handled by the same host with minimum impact on load distribution among the cluster hosts. Some very large client sites can use multiple proxy servers that span Class C address spaces.

In addition to session state, server applications often maintain persistent server-based state information that's updated by client transactions, for example, merchandise inventory at an e-commerce site.

Network Load Balancing should not be used to directly scale applications that independently update interclient state, such as SQL Server (other than for read-only database access), because updates made on one cluster host will not be visible to other cluster hosts.

To benefit from Network Load Balancing, applications must be designed to permit multiple instances to simultaneously access a shared database server that synchronizes updates. For example, Web servers with Active Server Pages need to push client updates to a shared back-end database server.

Detailed Architecture

To maximize throughput and high availability, Network Load Balancing uses a fully distributed software architecture. An identical copy of the Network Load Balancing driver runs in parallel on each cluster host.

The drivers arrange for all cluster hosts on a single subnet to concurrently detect incoming network traffic for the cluster's primary IP address (and for additional IP addresses on multihomed hosts). On each cluster host, the driver acts as a filter between the network adapter's driver and the TCP/IP stack, allowing a portion of the incoming network traffic to be received by the host. By this means, incoming client requests are partitioned and the load balanced among the cluster hosts.

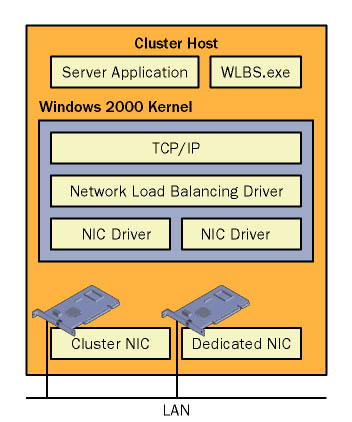

Network Load Balancing runs as a network driver logically beneath higher-level application protocols, such as HTTP and FTP. Figure 13-11 shows the implementation of Network Load Balancing as an intermediate driver in the Windows 2000 network stack. The implications of this architecture include the following:

- Maximizing throughput.

This architecture maximizes throughput by using the broadcast subnet to deliver incoming network traffic to all cluster hosts and by eliminating the need to route incoming packets to individual cluster hosts. Because filtering unwanted packets is faster than routing packets (which involves receiving, examining, rewriting, and resending), Network Load Balancing delivers higher network throughput than do dispatcher-based solutions.

As network and server speeds grow, the throughput of Network Load Balancing grows proportionally, thus eliminating any dependency on a particular hardware routing implementation. For example, Network Load Balancing has demonstrated 250 megabits per second (Mbps) throughput on gigabit networks.

- High availability.

Another key advantage of Network Load Balancing's fully distributed architecture is enhanced high availability resulting from (N-1)-way failover in a cluster with N hosts. In contrast, dispatcher-based solutions create an inherent single point of failure, which must be eliminated using a redundant dispatcher that provides only one-way failover. This offers a less robust failover solution than does a fully distributed architecture.

- Using hub and switch on the subnet.

The Network Load Balancing architecture takes advantage of the subnet's hub and switch architecture to simultaneously deliver incoming network traffic to all cluster hosts. However, this approach increases the burden on switches by occupying additional port bandwidth.

This is usually not a concern in most intended applications, such as Web services and streaming media, because the percentage of incoming traffic is a small fraction of total network traffic. However, if the client-side network connections to the switch are significantly faster than the server-side connections, incoming traffic can occupy a prohibitive fraction of the server-side port bandwidth. The same problem arises if multiple clusters are hosted on the same switch and measures are not taken to set up virtual LANs for individual clusters.

- NDIS driver.

During packet reception, Network Load Balancing's fully pipelined implementation overlaps the delivery of incoming packets to TCP/IP and the reception of other packets by the Network Driver Interface Specification (NDIS) driver. This speeds up overall processing and reduces latency because TCP/IP can process a packet while the NDIS driver receives a subsequent packet. It also reduces the overhead required for TCP/IP and the NDIS driver to coordinate their actions, and in many cases, it eliminates an extra memory copy of packet data.

During packet sending, Network Load Balancing also enhances throughput and reduces latency and overhead by increasing the number of packets that TCP/IP can send with one NDIS call. To achieve these performance enhancements, Network Load Balancing allocates and manages a pool of packet buffers and descriptors that it uses to overlap the actions of TCP/IP and the NDIS driver.

Figure 13-11. This diagram shows Network Load Balancing as an intermediate driver between TCP/IP and a NIC driver.

Distribution of Cluster Traffic

Network Load Balancing uses Layer 2 broadcast or multicast to simultaneously distribute incoming network traffic to all cluster hosts. In its default, unicast, mode of operation, Network Load Balancing reassigns the station address (MAC address) of the network adapter for which it is enabled (called the cluster adapter), and all cluster hosts are assigned the same MAC address. Incoming packets are thereby received by all cluster hosts and passed up to the Network Load Balancing driver for filtering.

To ensure uniqueness, the MAC address is derived from the cluster's primary IP address entered in the Network Load Balancing Properties dialog box. For a primary IP address of 1.2.3.4, the unicast MAC address is set to 02-BF-1-2-3-4. Network Load Balancing automatically modifies the cluster adapter's MAC address by setting a registry entry and then reloading the adapter's driver; the operating system does not have to be restarted.

If the cluster hosts are attached to a switch instead of a hub, the use of a common MAC address will create a conflict because Layer 2 switches expect to see unique source MAC addresses on all switch ports. To avoid this problem, Network Load Balancing uniquely modifies the source MAC address for outgoing packets; a cluster MAC address of 02-BF-1-2-3-4 is set to 02-h-1-2-3-4, where h is the host's priority within the cluster (set in the Network Load Balancing Properties dialog box). This technique prevents the switch from learning the cluster's actual MAC address, and as a result, incoming packets for the cluster are delivered to all switch ports. If the cluster hosts are connected directly to a hub instead of to a switch, Network Load Balancing's masking of the source MAC address in unicast mode can be disabled to avoid flooding upstream switches. This is accomplished by setting the Network Load Balancing registry parameter MaskSrcMAC to 0. The use of an upstream Layer 3 switch will also limit switch flooding.
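The address derivation described above can be sketched in a few lines of Python. This is only an illustration of the naming scheme in the text, not the driver's code: the function names are hypothetical, and the octets are printed as zero-padded hexadecimal (02-BF-01-02-03-04) rather than in the abbreviated form used in the prose.

```python
import ipaddress


def cluster_unicast_mac(primary_ip: str) -> str:
    """Unicast cluster MAC: prefix 02-BF followed by the four IPv4 octets."""
    octets = ipaddress.IPv4Address(primary_ip).packed
    return "-".join(f"{b:02X}" for b in (0x02, 0xBF, *octets))


def masked_source_mac(primary_ip: str, host_priority: int) -> str:
    """Source MAC on outgoing frames: the BF octet is replaced by the host priority."""
    if not 0 < host_priority <= 0xFF:
        raise ValueError("host priority must fit in one octet")
    octets = ipaddress.IPv4Address(primary_ip).packed
    return "-".join(f"{b:02X}" for b in (0x02, host_priority, *octets))


print(cluster_unicast_mac("1.2.3.4"))   # 02-BF-01-02-03-04
print(masked_source_mac("1.2.3.4", 3))  # 02-03-01-02-03-04
```

Because every host masks its source address with a different priority, the switch never sees the shared 02-BF address as a source and so keeps flooding frames addressed to it.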

Network Load Balancing's unicast mode has the side effect of disabling communication between cluster hosts using the cluster adapters. Because outgoing packets for another cluster host are sent to the same MAC address as the sender, these packets are looped back within the sender by the network stack and never reach the wire. This limitation can be avoided by adding a second network adapter card to each cluster host. In this configuration, Network Load Balancing is bound to the network adapter on the subnet that receives incoming client requests, and the other adapter is typically placed on a separate, local subnet for communication between cluster hosts and with back-end file and database servers. Network Load Balancing uses only the cluster adapter for its heartbeat and remote control traffic. Communication between cluster hosts and hosts outside the cluster is never affected by Network Load Balancing's unicast mode.

Network traffic for a host's dedicated IP address (on the cluster adapter) is received by all cluster hosts because they all use the same MAC address. Because Network Load Balancing never load-balances traffic for the dedicated IP address, Network Load Balancing immediately delivers this traffic to TCP/IP on the intended host. On other cluster hosts, Network Load Balancing treats this traffic as load balanced traffic (because the target IP address does not match another host's dedicated IP address), and it might deliver it to TCP/IP, which will discard it. Excessive incoming network traffic for dedicated IP addresses can impose a performance penalty when Network Load Balancing operates in unicast mode because of the need for TCP/IP to discard unwanted packets.

Network Load Balancing provides a second mode for distributing incoming network traffic to all cluster hosts. Called multicast mode, this mode assigns a Layer 2 multicast address to the cluster adapter instead of changing the adapter's station address. The multicast MAC address is set to 03-BF-1-2-3-4 for a cluster's primary IP address of 1.2.3.4. Because each cluster host retains a unique station address, this mode alleviates the need for a second network adapter for communication between cluster hosts, and it also removes any performance penalty from the use of dedicated IP addresses. Network Load Balancing's unicast mode induces switch flooding in order to simultaneously deliver incoming network traffic to all cluster hosts. Also, when Network Load Balancing uses multicast mode, switches often flood all ports by default to deliver multicast traffic. However, Network Load Balancing's multicast mode opens up the opportunity for the system administrator to limit switch flooding by configuring a virtual LAN within the switch for the ports corresponding to the cluster hosts. This can be accomplished by manually programming the switch or by using IGMP or GMRP protocols. The current version of Network Load Balancing does not provide automatic support for IGMP or GMRP.
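The difference between the 02-BF and 03-BF prefixes comes down to two bits in the first octet of an IEEE 802 MAC address: the lowest bit marks a group (multicast) address, and the next bit marks a locally administered address. The following sketch, with hypothetical helper names, checks both bits for the two cluster addresses mentioned in the text.

```python
def first_octet(mac: str) -> int:
    """Return the first octet of a dash-separated MAC address as an integer."""
    return int(mac.split("-")[0], 16)


def is_group_address(mac: str) -> bool:
    """True when the individual/group bit (lowest bit of the first octet) is set."""
    return bool(first_octet(mac) & 0x01)


def is_locally_administered(mac: str) -> bool:
    """True when the universal/local bit (second-lowest bit) is set."""
    return bool(first_octet(mac) & 0x02)


for mac in ("02-BF-01-02-03-04", "03-BF-01-02-03-04"):
    print(mac, is_group_address(mac), is_locally_administered(mac))
```

Both addresses are locally administered, but only the 03-BF address is a group address, which is why each host can keep its own station address in multicast mode.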

Network Load Balancing implements the necessary Address Resolution Protocol (ARP) functionality to ensure that the cluster's primary IP address and other virtual IP addresses resolve to the cluster's multicast MAC address. (The dedicated IP address continues to resolve to the cluster adapter's station address.) Experience has shown that Cisco routers currently do not accept an ARP response from the cluster that resolves unicast IP addresses to multicast MAC addresses. This problem can be overcome by adding a static ARP entry to the router for each virtual IP address, and the cluster's multicast MAC address can be obtained from the Network Load Balancing Properties dialog box or from the Wlbs.exe remote control program. The default unicast mode avoids this problem because the cluster's MAC address is a unicast MAC address.

Network Load Balancing does not manage any incoming IP traffic other than TCP, User Datagram Protocol (UDP), and Generic Routing Encapsulation (GRE, as part of PPTP) traffic for specified ports. It does not filter ICMP, IGMP, ARP (except as described earlier), or other IP protocols. All such traffic is passed unchanged to the TCP/IP protocol software on all of the hosts within the cluster. As a result, the cluster can generate duplicate responses from certain point-to-point TCP/IP programs (such as ping) when the cluster IP address is used. These programs can use the dedicated IP address for each host to avoid this behavior. Because of the robustness of TCP/IP and its ability to deal with replicated datagrams, other protocols behave correctly in the clustered environment.
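As a rough illustration of this rule, the sketch below classifies a packet by its IP protocol number. The protocol numbers are the standard IANA assignments; the function name and the two result strings are hypothetical, and port rules are left out for brevity.

```python
# IANA-assigned IP protocol numbers for the protocols named in the text.
LOAD_BALANCED = {6: "TCP", 17: "UDP", 47: "GRE"}
PASSED_THROUGH = {1: "ICMP", 2: "IGMP"}


def disposition(ip_protocol: int) -> str:
    """Return how a cluster host treats a packet of the given IP protocol."""
    if ip_protocol in LOAD_BALANCED:
        # Exactly one host accepts the packet; the others discard it.
        return "filter"
    # Everything else reaches TCP/IP on every host in the cluster.
    return "pass-to-all-hosts"


print(disposition(6))  # filter
print(disposition(1))  # pass-to-all-hosts
```

An ICMP echo request sent to the cluster IP address falls into the second case, so every host answers it, which is the source of the duplicate ping replies.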

Load Balancing Algorithm

Network Load Balancing employs a fully distributed filtering algorithm to map incoming clients to the cluster hosts. This algorithm was chosen to enable cluster hosts to independently and quickly make a load balancing decision for each incoming packet. It was optimized to deliver statistically even load balance for a large client population making numerous relatively small requests, such as those typically made to Web servers.

When the client population is small or the client connections produce widely varying loads on the server, Network Load Balancing's load balancing algorithm is less effective. However, the simplicity and speed of Network Load Balancing's algorithm allow it to deliver very high performance, including both high throughput and low response time, in a wide range of useful client/server applications.

Network Load Balancing load-balances incoming client requests so as to direct a selected percentage of new requests to each cluster host; the load percentage is set in the Network Load Balancing Properties dialog box for each port range to be load-balanced. The algorithm does not respond to changes in the load on each cluster host (such as the CPU load or memory usage). However, the mapping is modified when the cluster membership changes, and load percentages are renormalized accordingly.
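Renormalization can be pictured as rescaling the configured percentages of the surviving hosts so that they again sum to 100. The sketch below assumes simple proportional rescaling, which the text implies but does not spell out; the function and host names are illustrative.

```python
from typing import Dict


def renormalize(load_percentages: Dict[str, float]) -> Dict[str, float]:
    """Rescale the configured percentages of the current members to sum to 100."""
    total = sum(load_percentages.values())
    if total <= 0:
        raise ValueError("at least one host must carry a positive load")
    return {host: 100.0 * pct / total for host, pct in load_percentages.items()}


configured = {"A": 50.0, "B": 30.0, "C": 20.0}
# Host C leaves the cluster; A and B absorb its share in proportion.
survivors = {h: p for h, p in configured.items() if h != "C"}
print(renormalize(survivors))  # {'A': 62.5, 'B': 37.5}
```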

When inspecting an arriving packet, all hosts simultaneously perform a statistical mapping to quickly determine which host should handle the packet. The mapping uses a randomization function that generates a host priority based on the IP address, port, and other information. The corresponding host forwards the packet up the network stack to TCP/IP, and the other cluster hosts discard it. The mapping remains invariant unless the membership of cluster hosts changes, ensuring that a given client's IP address and port will always map to the same cluster host. However, the particular cluster host to which the client's IP address and port map cannot be predetermined because the randomization function takes into account the cluster's current and past membership to minimize remappings.

The load balancing algorithm assumes that client IP addresses and port numbers (when client affinity is not enabled) are statistically independent. This assumption can break down if a server-side firewall is used that proxies client addresses with one IP address and client affinity is enabled. In this case, all client requests will be handled by one cluster host, and load balancing is defeated. However, if client affinity is not enabled, the distribution of client ports within the firewall usually provides good load balance.
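The key property of this scheme is that every host runs the same deterministic function on the same packet fields, so exactly one host accepts each packet without any communication between hosts. The sketch below illustrates that property only. The actual randomization function is not described here, so a SHA-256 digest reduced modulo the host count stands in for it; this stand-in ignores load percentages and does not minimize remappings when membership changes.

```python
import hashlib


def owner_index(client_ip: str, client_port: int, host_count: int) -> int:
    """Map a client address and port to a host index (illustrative stand-in)."""
    # A stable digest is used because Python's built-in hash() of strings
    # varies between processes, and every host must compute the same value.
    key = f"{client_ip}:{client_port}".encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % host_count


def accepts(my_index: int, client_ip: str, client_port: int, host_count: int) -> bool:
    """Filtering decision made independently on each host for one packet."""
    return owner_index(client_ip, client_port, host_count) == my_index


# Every host evaluates the same packet; exactly one of them accepts it.
decisions = [accepts(i, "10.0.0.7", 51234, 4) for i in range(4)]
print(decisions.count(True))  # 1
```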

In general, the quality of load balance is statistically determined by the number of clients making requests. This behavior is analogous to dice throws, where the number of cluster hosts determines the number of sides of a die, and the number of client requests corresponds to the number of throws. The load distribution improves with the number of client requests just as the fraction of throws of an n-sided die resulting in a given face approaches 1/n with an increasing number of throws. As a rule of thumb, with client affinity set, there must be many more clients than cluster hosts to begin to observe even load balance. As the statistical nature of the client population fluctuates, the evenness of load balance can be observed to vary slightly over time.

Note that achieving precisely identical load balance on each cluster host imposes a performance penalty (throughput and response time) because of the overhead required to measure and react to load changes. This performance penalty must be weighed against the benefit of maximizing the use of cluster resources (principally CPU and memory). In any case, excess cluster resources must be maintained to absorb the client load in case of failover. Network Load Balancing takes the approach of using a very simple and robust load balancing algorithm that delivers the highest possible performance and availability.
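The dice analogy is easy to try out. The following sketch throws an n-sided die a given number of times and reports how far the most over- or under-used face strays from the ideal share of 1/n. It models independent, uniformly random requests, which is the assumption behind the analogy, not a measurement of a real cluster.

```python
import random


def max_deviation(hosts: int, requests: int, seed: int = 0) -> float:
    """Largest gap between any host's observed share of requests and 1/hosts."""
    rng = random.Random(seed)  # seeded so repeated runs give the same numbers
    counts = [0] * hosts
    for _ in range(requests):
        counts[rng.randrange(hosts)] += 1
    return max(abs(c / requests - 1 / hosts) for c in counts)


for n_requests in (10, 1_000, 100_000):
    print(n_requests, round(max_deviation(4, n_requests), 4))
```

With only 10 requests across 4 hosts, no host can receive exactly a quarter of the load, so the deviation is at least 5 percentage points; with 100,000 requests it shrinks to a small fraction of a point.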

The Network Load Balancing client affinity settings are implemented by modifying the statistical mapping algorithm's input data. When client affinity is selected in the Network Load Balancing Properties dialog box, the client's port information is not used as part of the mapping. Hence, all requests from the same client always map to the same host within the cluster. This constraint has no timeout value (as is often used in dispatcher-based implementations) and persists until there is a change in cluster membership. When single affinity is selected, the mapping algorithm uses the client's full IP address. However, when Class C affinity is selected, the algorithm uses only the Class C portion (upper 24 bits) of the client's IP address. This ensures that all clients within the same Class C address space map to the same cluster host.
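The three settings differ only in which client fields feed the mapping. A minimal sketch of that input selection follows; the function name and the mode strings "none", "single", and "class_c" are labels chosen for this example, not identifiers from the product.

```python
import ipaddress
from typing import Tuple


def mapping_key(client_ip: str, client_port: int, affinity: str) -> Tuple[int, int]:
    """Select the client fields that the mapping function would see."""
    ip = int(ipaddress.IPv4Address(client_ip))
    if affinity == "none":
        return (ip, client_port)      # full address and port
    if affinity == "single":
        return (ip, 0)                # port ignored
    if affinity == "class_c":
        return (ip & 0xFFFFFF00, 0)   # upper 24 bits only, port ignored
    raise ValueError(f"unknown affinity mode: {affinity}")


# Two proxies in the same Class C range produce the same key.
print(mapping_key("192.168.5.10", 1000, "class_c")
      == mapping_key("192.168.5.200", 2000, "class_c"))  # True
```

Since identical keys always map to the same host, clients behind several proxies in one Class C range stay on a single cluster host until the cluster membership changes.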

In mapping clients to hosts, Network Load Balancing cannot directly track the boundaries of sessions (such as SSL sessions) because it makes its load balancing decisions when TCP connections are established and prior to the arrival of application data within the packets. Also, it cannot track the boundaries of UDP streams because the logical session boundaries are defined by particular applications. Instead, the Network Load Balancing affinity settings are used to assist in preserving client sessions. When a cluster host fails or leaves the cluster, its client connections are always dropped. After a new cluster membership is determined by convergence (described shortly), clients that previously mapped to the failed host are remapped across the surviving hosts. All other client sessions are unaffected by the failure and continue to receive uninterrupted service from the cluster. In this manner, the Network Load Balancing load balancing algorithm minimizes disruption to clients when a failure occurs.

When a new host joins the cluster, it induces convergence, and a new cluster membership is computed. When convergence completes, a minimal portion of the clients will be remapped to the new host. Network Load Balancing tracks TCP connections on each host, and, after their current TCP connection completes, the next connection from the affected clients will be handled by the new cluster host; UDP streams are immediately handled by the new cluster host. This can potentially break some client sessions that span multiple connections or comprise UDP streams. Hence, hosts should be added to the cluster at times that minimize disruption of sessions. To completely avoid this problem, session state must be managed by the server application so that it can be reconstructed or retrieved from any cluster host. For example, session state can be pushed to a back-end database server or kept in client cookies. SSL session state is automatically re-created by reauthenticating the client.

The GRE stream within the Point-to-Point Tunneling Protocol (PPTP) is a special case of a session that is unaffected by adding a cluster host. Because the GRE stream is temporally contained within the duration of its TCP control connection, Network Load Balancing tracks this GRE stream along with its corresponding control connection. This prevents the addition of a cluster host from disrupting the PPTP tunnel.

Convergence

Network Load Balancing hosts periodically exchange multicast or broadcast heartbeat messages within the cluster. This allows them to monitor the status of the cluster. When the state of the cluster changes (such as when hosts fail, leave, or join the cluster), Network Load Balancing invokes a process known as convergence, in which the hosts exchange heartbeat messages to determine a new, consistent state of the cluster and to elect the host with the highest host priority as the new default host. Once all cluster hosts have reached consensus on the correct new state of the cluster, convergence completes and the hosts record the change in cluster membership in the Windows event log.

During convergence, the hosts continue to handle incoming network traffic as usual except that traffic for a failed host does not receive service. Client requests to surviving hosts are unaffected. Convergence terminates when all cluster hosts report a consistent view of the cluster membership for several heartbeat periods. If a host attempts to join the cluster with inconsistent port rules or an overlapping host priority, completion of convergence is inhibited. This prevents an improperly configured host from handling cluster traffic. At the completion of convergence, client traffic for a failed host is redistributed to the remaining hosts. If a host is added to the cluster, convergence allows this host to receive its share of load balanced traffic. Expansion of the cluster does not affect ongoing cluster operations and is achieved transparently to both Internet clients and to server programs. However, it might affect client sessions because clients might be remapped to different cluster hosts between connections, as described in the preceding section.
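The termination rule, a consistent membership view from every host for several consecutive heartbeat periods, can be sketched as follows. The data layout is hypothetical: each period is a dictionary from a host's priority to the set of members that host reports. The text says only "several" periods, so the default of 3 is an arbitrary assumption.

```python
from typing import Dict, FrozenSet, List

View = FrozenSet[int]  # the set of host priorities one host believes are members


def convergence_complete(history: List[Dict[int, View]],
                         required_periods: int = 3) -> bool:
    """True when the last few periods all show one agreed membership."""
    recent = history[-required_periods:]
    if len(recent) < required_periods:
        return False
    expected = frozenset(recent[0])  # hosts heard from in the oldest recent period
    return all(
        frozenset(views) == expected
        and all(view == expected for view in views.values())
        for views in recent
    )


agreed = {1: frozenset({1, 2}), 2: frozenset({1, 2})}
split = {1: frozenset({1, 2}), 2: frozenset({1, 2, 3})}
print(convergence_complete([split, agreed, agreed, agreed]))  # True
print(convergence_complete([agreed, agreed, split]))          # False
```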

In unicast mode, each cluster host periodically broadcasts heartbeat messages, and in multicast mode, it multicasts these messages. Each heartbeat message occupies one Ethernet frame and is tagged with the cluster's primary IP address so that multiple clusters can reside on the same subnet. Network Load Balancing heartbeat messages are assigned an ether type value of hexadecimal 886F. The default period between sending heartbeats is 1 second, and this value can be adjusted with the MsgAlivePeriod registry parameter. During convergence, the exchange period is reduced by half to expedite completion. Even for large clusters, the bandwidth required for heartbeat messages is very low (for example, 24 KB/second for a 16-way cluster). Network Load Balancing assumes that a host is functioning properly within the cluster as long as it participates in the normal heartbeat exchange among the cluster hosts. If other hosts do not receive a heartbeat message from any member for several periods of message exchange, they initiate convergence. The number of missed heartbeat messages required to initiate convergence is set to 5 by default and can be adjusted using the NumAliveMsgs registry parameter.
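The figures in this paragraph can be checked with simple arithmetic. The sketch below assumes roughly 1,500 bytes per heartbeat frame; that size is not stated in the text and is chosen here because it reproduces the quoted 24 KB/second for a 16-way cluster. The function names are illustrative.

```python
def failure_detection_seconds(period_s: float = 1.0, missed_messages: int = 5) -> float:
    """Time without heartbeats from a host before the others start convergence."""
    return period_s * missed_messages


def heartbeat_bytes_per_second(hosts: int, frame_bytes: int = 1500,
                               period_s: float = 1.0) -> float:
    """Aggregate heartbeat traffic: one frame per host per period.

    frame_bytes is an assumed value, not a figure taken from the text.
    """
    return hosts * frame_bytes / period_s


print(failure_detection_seconds())     # 5.0 seconds with the default settings
print(heartbeat_bytes_per_second(16))  # 24000.0 bytes/second, about 24 KB/second
```

Halving the period, as happens during convergence, doubles the heartbeat traffic and halves the time needed to notice a missing host.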

A cluster host will immediately initiate convergence if it receives a heartbeat message from a new host or if it receives an inconsistent heartbeat message that indicates a problem in the load distribution. When receiving a heartbeat message from a new host, the host determines whether the other host has been handling traffic from the same clients. This problem can arise if the cluster subnet was rejoined after having been partitioned. More likely, the new host was already converged alone in a disjoint subnet and has received no client traffic. This can occur if the switch introduces a lengthy delay in connecting the host to the subnet. If a cluster host detects this problem and the other host has received more client connections since the last convergence, it immediately stops handling client traffic in the affected port range. Because both hosts are exchanging heartbeats, the host that has received more connections continues to handle traffic, while the other host waits for the end of convergence to begin handling its portion of the load. This heuristic algorithm eliminates potential conflicts in load handling when a previously partitioned cluster subnet is rejoined; this event is logged in the event log.

Remote Control

The Network Load Balancing remote control mechanism uses the UDP protocol and is assigned port 2504. Remote control datagrams are sent to the cluster's primary IP address. Because they are handled by the Network Load Balancing driver on each cluster host, these datagrams must be routed to the cluster subnet (instead of to a back-end subnet to which the cluster is attached). When remote control commands are issued from within the cluster, they are broadcast on the local subnet. This ensures that they are received by all cluster hosts, even if the cluster runs in unicast mode.
