Models of E-Business Computing


WebSphere is a J2EE-compliant application server supporting the entire breadth of the J2EE specification. WebSphere version 5.0 is certified at the J2EE 1.3 level, and as such, J2EE is at the heart of the programming model. Given the platform portability premise of Java (and J2EE itself), there is a good chance that you will be able to port your conforming applications to WebSphere with little effort.

However, that only tells a part of the WebSphere story. As programmers, our needs for information computing are varied, dynamic, and growing. Most computing scenarios today have to address a variety of issues: end user delivery channels, development methodologies, business-enablement approaches, legacy protection, and fulfillment goals. WebSphere has excellent capabilities for supporting many of these requirements. To understand these, it would be best to start with a basic understanding of the key models of computing and how WebSphere addresses each of these. Then, you can combine this knowledge to form a solution tailored to your specific situation.

WebSphere provides support for four basic models of e-business computing:

- Multi-tier distributed computing
- Web-based computing
- Integrated enterprise computing
- Services-oriented computing

The fundamental structures of application design enable distributed computing for different business and organizational scenarios. Most applications will eventually exploit a combination of these models to solve the needs of the environment in which they will be used. The WebSphere programming model covers the full span of each of these models.

If you are already familiar with the fundamentals of distributed computing, component programming, shared and reusable parts, business process management, and service-oriented architecture then you might like to skip ahead a bit. More so, if you're already familiar with J2EE and the core premise for WebSphere, then skip this section – move on to The WebSphere Development Model section. If, on the other hand, you're not sure about these topics or a brief refresher might cement the issues in your mind then read on. Our view is that to best understand WebSphere you need to understand the types of computing models it is designed for.

Multi-Tier Distributed Computing

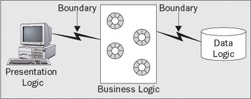

Multi-tier distributed computing is what motivated the development of many of the core server technologies within WebSphere. The idea of component-based programming is to define reusable and shared business logic in a middle-tier of a three-tier distributed application:

The value of three-tiered distributed computing comes from first structuring the application with a clean separation between the logic elements (presentation, business, and data) and then leveraging the boundaries between these elements as potential distribution points in the application. This allows, for example, the presentation logic to be hosted on a client desktop, the business logic in a middle-tier, and the data logic in a traditional data center.

Placing the presentation logic on the user's desktop has the benefit of enabling a rich interaction model with the end user. Placing the data logic in the traditional data center allows tight, centralized control over the data and information assets of the enterprise. Placing the business logic in a middle-tier allows for the exploitation of a variety of computing systems and better reuse and sharing of common computing facilities to reduce the cost of ownership that is commonly associated with expensive thick clients. It also means that you don't have to manage large quantities of application logic in the desktop environment.

The boundary between each tier represents a potential distribution point in the application, allowing each of the three parts to be hosted in different processes or on different computers. The J2EE programming model provides location transparency for the business logic, allowing you to write the same code irrespective of whether the business logic is hosted over the network or is in the same process. However, you shouldn't let this trick you into treating your business logic as though it is co-located in the same process – notwithstanding the tremendous improvements we've seen in networking technologies, communication latency will affect the cost of invoking your business logic and you should design your application keeping in mind that such boundaries can carry this expense.
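The cost of crossing a tier boundary can be made concrete with a small sketch. The Java below is not WebSphere or EJB code; `RoundTripSketch`, `CountingStub`, and the account interfaces are hypothetical names invented for illustration. It treats every method call on a stand-in stub as one network round trip and compares a fine-grained interface with a coarse-grained one.

```java
/** Hypothetical sketch: counts simulated round trips for two interface styles. */
final class RoundTripSketch {

    /** Fine-grained ("chatty") view of an account: one call per attribute. */
    interface ChattyAccount {
        String getOwner();
        String getCurrency();
        long getBalanceInCents();
    }

    /** Immutable snapshot returned by the coarse-grained call. */
    static final class AccountSummary {
        final String owner;
        final String currency;
        final long balanceInCents;

        AccountSummary(String owner, String currency, long balanceInCents) {
            this.owner = owner;
            this.currency = currency;
            this.balanceInCents = balanceInCents;
        }
    }

    /** Coarse-grained view: one call returns everything the client needs. */
    interface CoarseAccount {
        AccountSummary getSummary();
    }

    /** Stand-in for a remote stub; each method call counts as one round trip. */
    static final class CountingStub implements ChattyAccount, CoarseAccount {
        private int roundTrips;

        public String getOwner() { roundTrips++; return "A. Customer"; }
        public String getCurrency() { roundTrips++; return "USD"; }
        public long getBalanceInCents() { roundTrips++; return 12_500L; }

        public AccountSummary getSummary() {
            roundTrips++;
            return new AccountSummary("A. Customer", "USD", 12_500L);
        }

        int roundTrips() { return roundTrips; }
    }

    /** Reads three attributes one at a time and reports the round trips used. */
    static int chattyRoundTrips() {
        CountingStub stub = new CountingStub();
        ChattyAccount account = stub;
        account.getOwner();
        account.getCurrency();
        account.getBalanceInCents();
        return stub.roundTrips();
    }

    /** Reads the same three attributes in a single call. */
    static int coarseRoundTrips() {
        CountingStub stub = new CountingStub();
        CoarseAccount account = stub;
        account.getSummary();
        return stub.roundTrips();
    }

    public static void main(String[] args) {
        System.out.println("chatty round trips: " + chattyRoundTrips());
        System.out.println("coarse round trips: " + coarseRoundTrips());
    }
}
```

The client code looks much the same in both cases, which is the point of location transparency. If the stub really were remote, though, the chatty style would pay the network latency three times instead of once.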

Even if you don't distribute your application components across a network, you can still benefit from the boundary between the tiers. Every boundary that you are able to design into your application becomes an opportunity to 'plug-replace' other components. Thus, even nominal distribution of these components can improve the ease of maintenance of your application. It also becomes an opportunity for the runtime to spread workload over more computing resources (we'll discuss this in detail in Chapter 12).

Through J2EE, WebSphere provides a formalized component architecture for business logic. This component technology has several key benefits to application development. Foremost, the component model provides a contract between the business logic and the underlying runtime. The runtime is able to manage the component. This ensures the optimal organization of component instances in memory, controlling the lifecycle and caching of state to achieve the highest levels of efficiency and integrity, protecting access to the components, and handling the complexities of communication, distribution, and addressing:

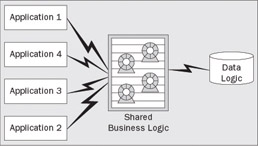

Secondly, the component architecture allows the client programming model to conform to well-established rules, which implies that distributed components are shared components – that is, the same component can be used by many different applications simultaneously:

Finally, because the J2EE component model is designed around object-oriented principles, you are able to do a better job of modeling your business in the application design. This will help improve communication between you and your business end users – you can cast your application artifacts in terminology that they recognize, with behavior that is consistent with the conceptual model of the business they are trying to automate. We recommend that you exploit UML or some other standard modeling notation to define your basic business model design, and then use that model to generate the base J2EE artifacts in which you will implement that model. Rational Rose, for example, is one of several products that allow you to build a UML model, and then export that to a J2EE component implementation skeleton.

So, having a single, shared implementation and instantiation of business components, in an environment where the developer doesn't have to deal with concurrency issues, not only reduces the duplication of development effort but also allows you to concentrate more on ensuring the correctness and completeness of your component implementation. It gives you more control over your business processes by ensuring that business entities adhere to your business policies and practices – you don't have to worry that someone has an outdated or alternative implementation of your business model sitting on their desktop. We have all heard stories about loan officers giving out loans at the wrong interest rate with inadequate collateral because someone had out-of-date rate tables loaded in a spreadsheet. Shared business logic implementations help avoid these costly problems by encouraging fewer implementations of the business logic and therefore fewer opportunities for the business logic to get out of sync.

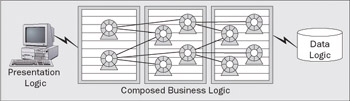

With the introduction of a component technology for business logic in a three-tiered distributed computing model, we immediately began to see design patterns for the composition of business objects. That is, businesses want to be able to form higher-level business concepts that aggregate previously independent elements of their business. Instead of a customer having multiple accounts, and therefore multiple relationships with the business, the enterprise could bring together all the individual accounts for a given customer under a single customer object and thus form a single, richer relationship with that customer. The business logic could thus be structured in multiple tiers, ranging from general to more specific, all formulated under the same underlying component architecture.

From this was born the idea of multi-tier distributed computing, where the middle-tier of business logic could be composed of an arbitrary number of intermediate tiers all running on a common WebSphere J2EE platform architecture and thus ensuring consistency of programming, deployment, and administration:

We should reiterate here that the multi-tiered distributed computing nature of component-based programming for business logic enables the integration of distribution points within your application design. It also defines a contractual boundary to your business components that can be leveraged to share your business logic components within many different applications, which in turn may be distributed. However, you don't have to distribute the components of your application to benefit from component-based programming. In fact, distributing your components introduces coupling issues that are not normally found in centralized application designs.

In cases where you know the latency of distributed communication between two components cannot be tolerated in your application design, you can instrument your component with local interfaces that will defeat distribution, but still retain the benefits of component-based programming.
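One practical consequence is worth illustrating. Under the EJB specification, calls through a remote interface pass arguments by value, while calls through a local interface pass them by reference. The sketch below is plain Java, not EJB code. `CallSemanticsSketch` and its methods are hypothetical names, and Java serialization is used only to imitate the copy a remote call would make.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;

/** Hypothetical sketch contrasting local (by-reference) and remote-style (by-value) calls. */
final class CallSemanticsSketch {

    /** Imitates remote argument passing: the callee receives a serialized copy. */
    @SuppressWarnings("unchecked")
    static <T extends Serializable> T copyViaSerialization(T value) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(value);
            }
            try (ObjectInputStream in = new ObjectInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                return (T) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("copy failed", e);
        }
    }

    /** Stand-in business method that modifies the argument it is given. */
    static void addLineItem(ArrayList<String> order) {
        order.add("extra item");
    }

    /** Local-style call: the callee works on the caller's own object. */
    static int sizeAfterLocalCall() {
        ArrayList<String> order = new ArrayList<>();
        order.add("widget");
        addLineItem(order);
        return order.size(); // 2: the caller sees the change
    }

    /** Remote-style call: the callee works on a copy of the argument. */
    static int sizeAfterRemoteStyleCall() {
        ArrayList<String> order = new ArrayList<>();
        order.add("widget");
        addLineItem(copyViaSerialization(order));
        return order.size(); // 1: the caller's object is untouched
    }

    public static void main(String[] args) {
        System.out.println("local call leaves size " + sizeAfterLocalCall());
        System.out.println("remote-style call leaves size " + sizeAfterRemoteStyleCall());
    }
}
```

Code written against one style can therefore behave differently under the other, so the choice between local and remote interfaces is best made deliberately at design time.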

Many of the benefits of component-based programming come from the separation of application logic from concerns of information technology. In particular, the J2EE component model enables a single-level-store programming model, whereby the issues of when and how to load the persistent state of a component are removed from the client.

The component model allows the runtime to manage the component in the information system. This same principle of management applies to object identity, transaction and session management, security, versioning, clustering, workload balancing and failover, caching, and so on. In many cases, the runtime has a much better understanding of what is going on in the shared system than any one application can ever have, and thus can do a better job of managing the component and getting better performance and throughput in the information system. Since WebSphere is a commercially available product, you can acquire these benefits for much less than it would cost you to create the same capability on your own.
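The single-level-store idea can be sketched in a few lines. This is a simplified illustration, not how WebSphere is implemented; `SingleLevelStoreSketch`, `BackingStore`, `Container`, and `Customer` are hypothetical names. The client asks the container for a component and never decides when its persistent state is loaded or cached.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch of a runtime that owns loading and caching of component state. */
final class SingleLevelStoreSketch {

    /** Stand-in for a database table keyed by customer id. */
    static final class BackingStore {
        private final Map<String, String> rows = new HashMap<>();
        private int loads;

        void put(String id, String name) { rows.put(id, name); }

        String load(String id) {
            loads++; // counts how often the "database" is actually read
            return rows.get(id);
        }

        int loads() { return loads; }
    }

    /** Business component: holds business behavior, no persistence logic. */
    static final class Customer {
        private final String name;

        Customer(String name) { this.name = name; }

        String greeting() { return "Hello, " + name; }
    }

    /** Stand-in container: decides when to load state and when to reuse it. */
    static final class Container {
        private final BackingStore store;
        private final Map<String, Customer> cache = new HashMap<>();

        Container(BackingStore store) { this.store = store; }

        Customer find(String id) {
            Customer customer = cache.get(id);
            if (customer == null) {
                customer = new Customer(store.load(id));
                cache.put(id, customer);
            }
            return customer;
        }
    }

    public static void main(String[] args) {
        BackingStore store = new BackingStore();
        store.put("c-1", "Ada");
        Container container = new Container(store);

        // The client just uses the component, with no load or save calls.
        System.out.println(container.find("c-1").greeting());
        System.out.println(container.find("c-1").greeting());
        System.out.println("store reads: " + store.loads());
    }
}
```

Because the loading policy lives in the container, it can be changed (for example, to evict or refresh cached state) without touching either the client or the business component.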

Web-Based Computing

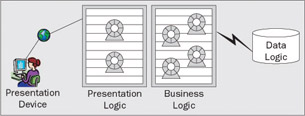

Web-based computing is, in some sense, a natural extension of the multi-tier distributed computing model whereby the presentation logic has been re-located in the middle-tier of the distributed computing topology, and drives the interaction with the end user through fixed-function devices in the user's presence. We refer to an in-presence, fixed-function device as a Tier-0 in the multi-tier structure of the application. The most common form of the Tier-0 device is the web browser on your desktop. Pervasive computing devices are emerging in the market in other forms as well – from PDAs and mobile phones, to intelligent refrigerators and cars:

Web applications exploit the natural benefits of component-based programming to enable the construction of web presentation logic that can be hosted on a server platform, and to achieve richer styles of interaction than can be achieved with plain static content servers. The web application server was originally conceived to extend traditional web servers with dynamic content. However, in the course of developing these web application servers, we realized that the issues of serving presentation logic are essentially the same as the issues of serving business logic. As with business logic, the purpose of the presentation logic server is that many clients (in this case, Tier-0 clients) share a common implementation.

When creating WebSphere, IBM found significant synergies in the marriage of web-based application serving and business logic serving. WebSphere uses the same underlying server technology for serving both EJB-based components for business logic and servlets for presentation logic. JSP pages are served as an extension of servlets. HTTP session persistence is implemented with some of the same underlying technologies as EJB essential-state persistence.

An emerging trend in the industry is to further extend the benefits of component-based programming of presentation logic out into the network. This trend is motivated by the need to further reduce the effects of latency on end-user response time. This idea leverages the fundamental nature of how workload is distributed over the presentation logic.

Presentation components that manage simple catalog-browsing logic can be pushed out in the network, closer to the end user, without putting the business at risk. Where the component is hosted in the network, whether at a central data center or out in a network hub, becomes primarily a matter of how the component is deployed and whether the hosting environment can protect the component's integrity to the level required by the component's implementation and value. The result can be a substantially better end-user performance with a moderate increase in the cost of managing the application and almost no increase in the complexity of the application design itself. Hosting parts of your application out in the network is referred to as edge-computing – computing is moved to the edge of your enterprise or the edge of your network.

Introducing component-based presentation logic in your application design does not limit you to simply delivering HTML to a browser. Servlets and JSP pages are capable of generating a wide variety of markup languages for a variety of device types. Information about the target device can be retrieved from the HTTP flow, and can be used to customize the output of the presentation logic. Even within the domain of browser-based devices, you have a variety of options like returning JavaScript or even a proprietary XML exchange to an Applet, in addition to HTML.
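As a rough sketch of device-specific output, the Java below chooses a markup language from the value of an HTTP `Accept` header and renders the same content either as HTML or as WML (the markup used by WAP phones). It is plain Java rather than servlet code, and `MarkupSelectionSketch`, `chooseMarkup`, and `render` are hypothetical names. In a real servlet the header value would come from the request object.

```java
import java.util.Locale;

/** Hypothetical sketch: choose output markup from information in the HTTP request. */
final class MarkupSelectionSketch {

    enum Markup { HTML, WML }

    /** Picks WML when the client advertises the WML MIME type, HTML otherwise. */
    static Markup chooseMarkup(String acceptHeader) {
        if (acceptHeader != null
                && acceptHeader.toLowerCase(Locale.ROOT).contains("text/vnd.wap.wml")) {
            return Markup.WML;
        }
        return Markup.HTML;
    }

    /**
     * Renders one product name in the chosen markup.
     * Assumption: productName is trusted text; a real page would escape it.
     */
    static String render(Markup markup, String productName) {
        switch (markup) {
            case WML:
                return "<wml><card><p>" + productName + "</p></card></wml>";
            default:
                return "<html><body><h1>" + productName + "</h1></body></html>";
        }
    }

    public static void main(String[] args) {
        String phone = "text/vnd.wap.wml, text/plain";
        String browser = "text/html, */*";
        System.out.println(render(chooseMarkup(phone), "Widget"));
        System.out.println(render(chooseMarkup(browser), "Widget"));
    }
}
```

The content comes from one place and only the final rendering step varies by device, which keeps the device-specific logic small.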

The servlet and JSP programming models are discussed in more detail in Chapter 4. The role of edge serving in improving the performance of serving the presentation layer to the end-user interaction model is discussed further in Chapter 12.

Integrated Enterprise Computing

Integrated enterprise computing is critical to retaining value in your past investments. Few new applications can be introduced into an established enterprise without some regard to how that will fit with existing applications and, by extension, the technology and platform assumptions on which those applications have been built. If you look into your enterprise you will find a variety of applications built on a variety of underlying technology assumptions. You may have applications built on SAP, CICS, Oracle, IMS, Windows, DB2, Tibco, PeopleSoft, Domino, MQSeries, and other proprietary technology bases. In many cases, you will be asked to integrate these applications into your application implementation.

In some cases you will be asked to create a new application that is intended to essentially replace an existing application – perhaps to exploit the productivity benefits of the J2EE-compliant, object-oriented, componentized or web-based capabilities provided by the WebSphere Application Server platform. It has become common for enterprises to initiate projects to re-engineer their core business processes. This has been largely motivated by the need for businesses to respond to rapidly changing market conditions that require the introduction of new products – business process re-engineering.

In our experience, projects that propose massive re-engineering of existing information technologies to enable business process changes run a high risk of failure. This is due, in part, to the complexity of such projects: it is hard to keep their scope constrained to isolated portions of the overall business process, and feature and scope creep push the project towards higher levels of complexity.

Another major risk factor in the case of such projects is the extent to which the re-engineering can disrupt existing business activities. This requires re-education of end users, not just in the new business processes, but also in the underlying interaction models introduced by the new technology. It means fundamental changes to the administration processes and significant changes to the workload of the computing systems, and thus alters our basic understanding of resource utilization and, further, our ability to predict capacity requirements, not to mention the potential to take existing processes off-line during the transition to the new technology bases. While business process re-engineering is essential for enterprise growth and sustainability in our modern global economy, it can also represent a serious business risk.

A more prudent approach to business process re-engineering is a more incremental approach – one that puts together an application framework representing the re-engineered process on the new technology foundation, but delegates the majority of its business function implementations to the business application legacy already being used in the enterprise. Over time, as the foundation and application framework mature and investment opportunity warrants, legacy business implementations can be re-written as first-order implementation artifacts in the new technology base, and the legacy can then be withdrawn. We refer to this as incremental business process re-engineering.

Two things are key to enabling incremental business process re-engineering. The first is a good design for the new business application that will serve as a foundation for your business process objectives. This has to incorporate flexibility towards rapid or customized changes in the business processes, including the introduction of new processes, and yet account for the use of existing application artifacts. The second ingredient is the availability of basic technologies that enable communication of data and control-flow between the application framework and the legacy application elements that you want to exploit.

The methodologies for creating a good framework design are a topic in itself. We won't go into that very deeply here. However, the principles of object-oriented design – encapsulation, type-inheritance, polymorphism, instantiation, and identity – are generally critical to such frameworks. To that end, Java, J2EE component programming, and a good understanding of object-oriented programming are vital.

As usual, the issues of cross-technology integration are complicated – in mission-critical environments, you must address concerns about data integrity, security, traceability, configuration, and a host of other administrative issues for deployment and management. However, to support the productivity requirements of your developers, these complexities should be hidden – as they are in the WebSphere programming model.

The key programming model elements provided by WebSphere for enterprise integration are offered in the form of Java 2 Connectors (the J2EE Connector Architecture) and the Java Message Service (JMS), both of which are part of the J2EE specification. In addition, WebSphere offers programming model extensions that effectively incorporate these programming technologies under the covers of a higher-level abstraction – for the most part presented in the guise of standard J2EE component model programming. For example, in Chapter 7, we introduce the WebSphere adapter to J2EE Connectors that uses a stateless session bean programming interface.

Messaging-based approaches to application integration are very effective and introduce a great deal of flexibility. The asynchronous nature of a message-oriented programming model allows the applications to be decoupled (or loosely coupled if they weren't coupled to begin with). One popular model is to instrument one application to simply state its functional results as a matter of fact in an asynchronous message. The message can then be routed by the messaging system to other applications that may be interested in that outcome and can operate on it further. In particular, the message may be routed to multiple interested applications, thus enabling parallel processing.

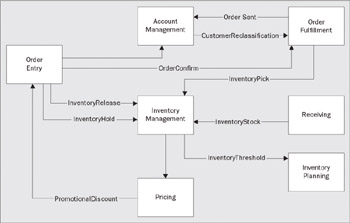

Series of applications designed in this manner can be strung together in various patterns to create a business system. The system can be re-wired in many different patterns to create different effects. No component ever has to know what other components it is wired to and so re-wiring can be accomplished without affecting the component implementations. For example:

In this sample enterprise information system (composed of several otherwise independent applications), the inventory hold message is sent to the inventory management system to put a hold on the stock for a pending order. Likewise, if the customer cancels the order, an inventory release message is sent to free that stock for another order. An order configuration message is sent to the order fulfillment system to ship the requested items to the customer. That same message is also sent to the account management system to be used to calculate the customer's loyalty to the store. If a customer is reclassified (for example, they've ordered more than a certain amount in the last 12 months) then a message is sent to the order fulfillment system – higher-classified customers receive preference over other customers. If inventory (plus holds) drops below a certain threshold, then an inventory-threshold message is sent to the order fulfillment system (orders may be held to allow higher-classified customers to get preferential treatment). The inventory-threshold message is also sent to the inventory planning system (to order more stock from the supplier), and to the pricing system (which may adjust prices if demand continues to exceed supply).

The point is that all of these systems are loosely coupled – each responding to the messages it receives based on its own encoding and considered policies. The system continues to work (albeit differently) if message-connections are dropped or added between the parts. For example, if the connection between the order entry system and account management system is dropped, the rest continues to work – you just don't get any assessment of customer loyalty. Likewise, adding a connection to pass the inventory-threshold message between the inventory management system and the order fulfillment system could be used to slow down the fulfillment of orders containing items with a low inventory (allowing more higher-priority orders to be fulfilled).
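The re-wiring property can be sketched with a toy message bus. This is not JMS or WebSphere code. `MessageWiringSketch` and `Bus` are hypothetical names, and delivery is synchronous only to keep the example short, whereas a real messaging system would deliver asynchronously. The sender names a message type and never refers to any receiver.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical sketch: applications wired together only by message type. */
final class MessageWiringSketch {

    /** Toy bus: routes each message type to whichever receivers are connected. */
    static final class Bus {
        private final Map<String, List<Consumer<String>>> routes = new HashMap<>();

        void connect(String messageType, Consumer<String> receiver) {
            routes.computeIfAbsent(messageType, key -> new ArrayList<>()).add(receiver);
        }

        void disconnect(String messageType, Consumer<String> receiver) {
            List<Consumer<String>> receivers = routes.get(messageType);
            if (receivers != null) {
                receivers.remove(receiver);
            }
        }

        /** The sender states a fact; it does not know who, if anyone, is listening. */
        void send(String messageType, String body) {
            List<Consumer<String>> receivers = routes.get(messageType);
            if (receivers == null) {
                return;
            }
            for (Consumer<String> receiver : receivers) {
                receiver.accept(body);
            }
        }
    }

    public static void main(String[] args) {
        Bus bus = new Bus();
        List<String> fulfillmentInbox = new ArrayList<>();
        List<String> accountInbox = new ArrayList<>();
        Consumer<String> accountManagement = accountInbox::add;

        bus.connect("order", fulfillmentInbox::add);
        bus.connect("order", accountManagement);
        bus.send("order", "order-1");

        // Drop one connection: order entry and fulfillment are unaffected.
        bus.disconnect("order", accountManagement);
        bus.send("order", "order-2");

        System.out.println("fulfillment received " + fulfillmentInbox);
        System.out.println("account management received " + accountInbox);
    }
}
```

After the disconnect, fulfillment still receives every order while account management simply stops seeing new ones, mirroring the dropped-connection case described above.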

Variations on message-oriented computing include: point-to-point asynchronous messaging, request-response messaging, and publish-subscribe messaging.

Looking at the problem of application integration (or perhaps, more appropriately, lines-of-business integration) from the top, it is important to model your business process flows in a way that allows you to adapt those flows rapidly to new procedures and opportunities – to be able to model and then rapidly modify the order entry process to perform credit checks or to process partial orders, and other similar processes without having to re-write either your order entry or your inventory systems.

Business process management is discussed in greater depth in Chapter 10.

Services-Oriented Computing

Services-oriented computing started out as the definition for the next level of coarse-grained functional composition – the idea being to aggregate interdependencies that you did not want to expose across a network boundary: the next higher aggregation beyond components in a distributed system. Services-oriented architectures (SOA) leverage the relative cohesiveness of a given business service as the primary point of interchange between parties in the network.

SOA has a lot of affinity with business-to-business integration. More recently, SOA has re-emerged with a renewed focus on leveraging Internet technologies, and has been re-branded as web services. The idea is to use XML as the basic message-encoding architecture, and HTTP as a communication transport, to provide a very low barrier to driving the programmatic interaction of automated processes between companies. Since both these technologies are pervasive in the web-based computing environment – nearly everyone now has support for HTTP and XML (or at least HTML) in their computing infrastructures – the problem of agreeing to a common technology base as a requisite to inter-enterprise communication could be quickly overcome.

We've come to realize that the fundamentals of services-oriented computing could be exploited to overcome similar barriers between lines-of-business within an organization as well. In fact, the exploitation of web services may, in the end, be more pervasive for intra-enterprise integration than for inter-enterprise integration. When combined with more classic approaches to enterprise integration and gateways, the principles of incremental business process re-engineering can be applied to integrate legacy systems into the services-oriented computing model.

Advances in the field of services-oriented computing are heavily focused on web services now – especially on Web Services Description Language (WSDL), and to a lesser extent on the Simple Object Access Protocol (SOAP), and Universal Description, Discovery and Integration (UDDI). These technologies combine to introduce business services that can be easily composed with other business services to form new business applications. At some level, web services are just a new generation of technology for achieving distributed computing, and in that sense, they have a lot in common with many of the other distributed computing technologies like OSF/DCE, CORBA, and J2EE's RMI/IIOP that came before them. However, web services differ from their predecessors in the degree to which they deliberately address a 'loose coupling' model.

We measure the coupling of distributed application components in at least three ways:

- Temporal affinity
- Organizational affinity
- Technology affinity

Temporal Affinity

Temporal affinity is a measure of how the information system is affected by time constraints in the relationship between two components. If an application holds a lock on data for the duration of a request to another business service, there are expectations that the requested operation will complete in a certain amount of time – data locks and other similar semaphores tend to prevent other work from executing concurrently. Tightly-coupled systems tend to have a low tolerance for latency. On the other hand, loosely-coupled systems are designed to avoid temporal constraints – the application and the underlying runtime are able to execute correctly and without creating unreasonable contention on resources even if the service requests take a long time to complete.

Organizational Affinity

Organizational affinity is how changes in the system affect other parts of the system. A classic example of this is in the versioning of inter-dependent components. If the interface of a component changes, it cannot be used until the dependent components are changed to use that new interface. In tightly-coupled systems, the change has to be coordinated between the organization introducing the new interface and the organization responsible for using that interface. This often requires direct and detailed communication between the organizations. On the other hand, there is a high degree of tolerance for mismatches between components in loosely-coupled systems.

Another dimension of organizational affinity is the degree to which the system has to be managed from a single set of administrative policies. Tightly-coupled systems tend to require a common set of administrative policies, most commonly handled with a centralized administration facility to ensure consistency of policy. The administration of loosely-coupled systems tends to be highly federated – allowing each party to apply its own administration policies and expressing the effects of those policies only as 'qualities of service' at the boundaries between the organizations. Generally, the invoker of a service in a loosely-coupled system can make choices based on the trade offs of costs, benefits, and risks of using an available service. Different providers with different quality of service characteristics can supply the same service, thus enabling capitalistic marketplace economics to drive a services community.

Technology Affinity

Technology affinity addresses the degree to which both parties have to agree to a common technology base, to enable integration between them. Tightly-coupled systems have a higher dependence on a broad technology stack. Conversely, loosely-coupled systems make relatively few assumptions about the underlying technology needed to enable integration.

Our national road systems represent a very good example of a loosely-coupled system – imposing few temporal or organizational constraints or technology requirements. Transactions of very different duration can travel over roads – from riding your bike next door to visit a neighbor, to driving across town to pick up dinner, to transporting your products to new markets across the country. On-ramps come in a variety of shapes, and can be changed significantly without preventing you from getting onto the highway. While the country may impose some basic regulatory guidelines, states and municipalities can have a great deal of latitude to impose their own regulations and to pursue their own administrative goals for the part of the system they control. The technology requirements are relatively low – enabling a huge variety of vehicle shapes, sizes, capacities, and utility. Many of these same characteristics can be attributed to the Internet as well.

However, loose coupling also introduces a degree of inefficiency – sub-optimization, based on the desire to maintain the freedom and flexibility inherent in loosely-coupled systems. To maintain the analogy, railroads and airplanes represent more tightly-coupled systems, but exist because of the additional efficiencies they can provide. They are optimized to a set of specific objectives – either to achieve optimal fuel efficiency, or time efficiency, respectively.

| Note | Messaging systems typically enable a degree of loose coupling. However, the majority of traditional messaging products typically introduce a higher degree of organizational affinity than web services (messages often carry a high degree of structural rigidity), and a higher degree of technology affinity (requiring both parties to adopt the same proprietary software stack at both ends of the network – or suffer intermediate gateways). Web services, on the other hand, emphasize the use of XML-based self-describing messages, and fairly ubiquitous technology requirements. |

WebSphere takes the idea of web services to a new level – centering the elements of web services first on WSDL as a way of describing a business service. One of the things that you will encounter as you enter into the web services world is the realization that web services and J2EE are not competing technologies – in fact they are very complementary. Web services are about how to access a business service; J2EE is about how to implement that business service. The next thing you should realize is that you will have a variety of access needs.

The industry tends to talk first, if not exclusively, about accessing web services with SOAP over HTTP. While SOAP/HTTP is critical to standard interoperation over the Internet of loosely-coupled systems, it also introduces certain inefficiencies – namely, it requires that the operation request be encoded in XML and that the request flow over a relatively unreliable communication protocol, HTTP. In more tightly-coupled circumstances you will want more efficient mechanisms that can be optimized to the tightly-coupled capabilities of the system without losing the benefit of organizing your application integration needs around the idea of a web service.

Web Services Description Language (WSDL) is an XML-based description of a web service containing interface (messages, port-types, and service-types) and binding (encoding and address) information – essentially how to use the web service and how to access it. WSDL is described in more detail in Chapter 8. We can leverage the separation of interface from binding to maintain a corresponding separation of concerns between development and deployment. Programmers can use the port-type and message definitions to encode the use of a web service, whereas deployers can use the binding and port definition to construct an access path to the web service. The access path can be loose or tight, depending on where the requesting application and the deployed service are, relative to each other.
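The split between what the programmer codes against and how the deployer wires up access can be sketched in plain Java. This is only an illustration under stated assumptions: it does not use WSDL tooling or any WebSphere API, and every name in it (`ShippingQuote`, `InProcessBinding`, `XmlEncodedBinding`, the tariff, and the XML element names) is hypothetical. The interface plays the role of the port-type, and the two binding classes stand in for a tight and a loose access path.

```java
import java.util.function.Function;

// Illustrative only: every name here is hypothetical, not a WebSphere or WSDL API.
final class BindingSketch {

    // Plays the role of the port-type: the operations a programmer codes against.
    interface ShippingQuote {
        int quoteCents(int weightKg);
    }

    // The business service itself, unaware of how callers reach it.
    static final class ShippingQuoteImpl implements ShippingQuote {
        public int quoteCents(int weightKg) {
            return 500 + 120 * weightKg; // made-up tariff for the sketch
        }
    }

    // "Tight" access path: a direct in-process call with no encoding step.
    static final class InProcessBinding implements ShippingQuote {
        private final ShippingQuote target;
        InProcessBinding(ShippingQuote target) { this.target = target; }
        public int quoteCents(int weightKg) { return target.quoteCents(weightKg); }
    }

    // "Loose" access path: the request is encoded as XML text and handed to a
    // transport function, which stands in for SOAP over HTTP.
    static final class XmlEncodedBinding implements ShippingQuote {
        private final Function<String, String> transport;
        XmlEncodedBinding(Function<String, String> transport) { this.transport = transport; }

        public int quoteCents(int weightKg) {
            String request = "<quote><weightKg>" + weightKg + "</weightKg></quote>";
            String response = transport.apply(request);
            return Integer.parseInt(textOf(response, "cents"));
        }
    }

    // Service-side endpoint: decodes the XML request and calls the service.
    static Function<String, String> endpointFor(ShippingQuote service) {
        return request -> {
            int weightKg = Integer.parseInt(textOf(request, "weightKg"));
            return "<quoteResponse><cents>" + service.quoteCents(weightKg)
                    + "</cents></quoteResponse>";
        };
    }

    // Naive tag extraction, adequate only for this sketch.
    static String textOf(String xml, String tag) {
        int start = xml.indexOf("<" + tag + ">") + tag.length() + 2;
        int end = xml.indexOf("</" + tag + ">");
        return xml.substring(start, end);
    }

    public static void main(String[] args) {
        ShippingQuote service = new ShippingQuoteImpl();
        // The "deployer" picks the access path; the calling code is identical.
        ShippingQuote tight = new InProcessBinding(service);
        ShippingQuote loose = new XmlEncodedBinding(endpointFor(service));
        System.out.println("tight path: " + tight.quoteCents(3));
        System.out.println("loose path: " + loose.quoteCents(3));
    }
}
```

The calling code depends only on `ShippingQuote`, so switching between the two access paths is a wiring decision rather than a programming change, which is the separation of concerns the paragraph above describes.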

| Note | One flaw in the web services specification is that the distinction between Document-oriented and RPC-oriented web services is captured in the binding – even though this distinction has implications on the programming model used by both the web services client programmer and the web services implementation programmer. We hope this issue will be resolved in future versions of the WSDL specification. |

With this as a foundation, you can introduce all your business services as web services on a services bus. The services bus represents any of the business services that have been published as web services, in your organization or anyone else's, that you can see and that can be accessed with any of the access mechanisms available to your execution environment. Constructing distributed applications in this fashion is known as services-oriented computing, and WebSphere supports it.

The web services programming model is discussed further in Chapter 8.

A Comprehensive Application Serving Platform

The WebSphere Application Server supports the classic servlet- and EJB-component-based programming models defined by J2EE in support of both 'thick' clients and web-centered computing. In addition, it supports the following:

-

Control of multiple presentation device types – both traditional desktop browsers and other pervasive devices

-

Web services for loosely interconnecting intra- or inter-enterprise business services

-

Message-oriented programming between application components

-

Business process management for scripting the flow of process activities implemented as J2EE components

-

Legacy integration through Java 2 Connectors and higher level adapters

We will discuss all of these models of computing through the rest of this book.

Basic Patterns of Application and Component Design

Given the models of e-business computing supported by WebSphere, we can identify a set of general application and component design patterns that can be mapped on to those e-business computing models. You should treat each of the general design patterns as building blocks – composing them to construct a complete business application based on an application design pattern. We provide a brief overview of the main component patterns here. All of these patterns are discussed in more detail later in this book. References to further discussions are included in each overview description.

It is worth considering how these basic component design patterns can be composed to form different application design patterns. We won't spend time in this book discussing different application design patterns. The Plants-By-WebSphere example referred to extensively in this book uses a self-service pattern. The characteristics of this pattern are:

-

Fast time to market

-

Often used to grow from a web presence to self-service

-

Separation of presentation and business logic

This particular collection of characteristics has been classified as a Stand-Alone Single Channel (also known as User-to-Business Topology 1) application pattern in the book Patterns for e-Business: A Strategy for Reuse by Jonathan Adams, Srinivas Koushik, Guru Vasudeva, and Dr. George Galambos (ISBN 1-931182-02-7). A useful collection of online information related to e-business patterns can be found at http://www.ibm.com/developerworks/patterns. Following the pattern-selection steps for Plants-By-WebSphere will provide useful information about the design, implementation, and management of the application.

We recommend the use of this site in selecting a pattern for your application. This site does a good job of illustrating the rationale and implication for each pattern and will increase your chances of building a successful e-business application.

Thick Client Presentation Logic

Classic three-tiered computing design puts the presentation logic on the user's desktop – based on the principle that rich user interaction requires locality of execution, and personal computers afford the processor bandwidth needed for the I/O and computational intensity of a Graphical User Interface (GUI).

WebSphere supports this model with the J2EE Client Container. You can write Java-based client applications, including those that exploit the J2SE AWT and Swing libraries for presentation logic, and then connect these clients to EJBs hosted on the WebSphere Application Server either through RMI/IIOP directly, or more loosely through web services client invocations.

There is very little treatment of this model in this book, although Chapter 4 discusses it briefly. The programming aspects of calling an EJB through RMI/IIOP or through a web service from a client are no different from calling an EJB or web service from within a server-based component hosted on the application server; only the deployment and launching processes are slightly different.

Web Client Presentation Logic

The web-client design takes advantage of the four-tier computing model. This model concedes that a rich user interaction model is not critical to a broad spectrum of business applications, and compromises this for the benefit of localizing presentation logic on the application server and rendering the interaction through a web browser.

WebSphere supports this model with the use of JSP pages and servlets. This model is discussed in depth in Chapter 4.

Model-View-Controller

Model-view-controller (MVC) based designs are relevant to both thick client and web client-based application structures. The MVC pattern introduces a clean separation of concerns among how information is laid out on a screen (the View), how business artifacts are laid out (the Model), and the control flow needed to operate on the model in response to input from the end user (the Controller).

The MVC pattern maps on to the J2EE programming model supported by WebSphere – views are implemented with JSP pages, models are implemented with EJBs, and controllers are implemented with servlets. Further, WebSphere supports the Apache Struts framework – a pre-engineered MVC framework based on JSP pages, servlets and EJBs.
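The division of responsibilities can be sketched without any J2EE machinery. The following is a plain-Java illustration, not servlet, JSP, or Struts code: `CartModel`, `CartView`, and `CartController` are hypothetical names, and the comments note which J2EE artifact each one would correspond to in the mapping described above.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: plain Java stand-ins for the three MVC roles.
final class MvcSketch {

    // Model: business state. In the J2EE mapping this would be an EJB.
    static final class CartModel {
        private final List<String> items = new ArrayList<>();
        void add(String item) { items.add(item); }
        List<String> items() { return new ArrayList<>(items); } // defensive copy
    }

    // View: layout only, with no business decisions. In the J2EE mapping, a JSP page.
    static final class CartView {
        String render(List<String> items) {
            StringBuilder html = new StringBuilder("<ul>");
            for (String item : items) {
                html.append("<li>").append(item).append("</li>");
            }
            return html.append("</ul>").toString();
        }
    }

    // Controller: interprets user input, updates the model, selects the view.
    // In the J2EE mapping, a servlet.
    static final class CartController {
        private final CartModel model;
        private final CartView view;
        CartController(CartModel model, CartView view) {
            this.model = model;
            this.view = view;
        }
        String handle(String action, String argument) {
            if ("add".equals(action)) {
                model.add(argument);
            }
            return view.render(model.items());
        }
    }

    public static void main(String[] args) {
        CartController controller = new CartController(new CartModel(), new CartView());
        controller.handle("add", "Tulip");
        System.out.println(controller.handle("add", "Rose"));
    }
}
```

Because the view only receives a list of items, the layout can change without touching the model, and the model can be reused behind a different presentation.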

Componentized Business Logic

We generally classify business logic as either representing procedures (for example, account-transfer, compute-shipping-charges, etc.) or as representing things you use in your business (for example, ledgers, accounts, customers, schedules, etc.). These map to session beans and entity beans, respectively. Generally, you will implement all of your high-level procedures (verbs) as methods on session beans, and implement a set of primitive operations on your business artifacts (nouns) as entity beans. The operation primitives that you implement on the entity beans will represent the fundamental behavior of those entities, and enable (or constrain) the kinds of things that a procedure (session bean) can do with the entity.
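The verb and noun split can be sketched in plain Java. This is not EJB code (there are no home or remote interfaces, and no container), and the names `Account` and `TransferProcedure` are hypothetical. It only shows how the primitives on the entity constrain what a procedure can do with it.

```java
// Illustrative only: plain Java stand-ins for an entity bean and a session bean.
final class BusinessLogicSketch {

    // Noun: a business artifact with primitive operations (the entity bean role).
    static final class Account {
        private final String id;
        private long balanceCents;

        Account(String id, long openingBalanceCents) {
            this.id = id;
            this.balanceCents = openingBalanceCents;
        }

        String id() { return id; }
        long balanceCents() { return balanceCents; }

        void credit(long cents) {
            if (cents <= 0) throw new IllegalArgumentException("amount must be positive");
            balanceCents += cents;
        }

        // The entity's own rule: an account cannot be overdrawn.
        void debit(long cents) {
            if (cents <= 0) throw new IllegalArgumentException("amount must be positive");
            if (cents > balanceCents) throw new IllegalStateException("insufficient funds in " + id);
            balanceCents -= cents;
        }
    }

    // Verb: a high-level procedure composed from entity primitives (the session bean role).
    static final class TransferProcedure {
        void transfer(Account from, Account to, long cents) {
            from.debit(cents);  // fails first, so nothing is credited on an overdraft
            to.credit(cents);
        }
    }

    public static void main(String[] args) {
        Account checking = new Account("checking", 10_000);
        Account savings = new Account("savings", 0);
        new TransferProcedure().transfer(checking, savings, 3_000);
        System.out.println(checking.id() + ": " + checking.balanceCents());
        System.out.println(savings.id() + ": " + savings.balanceCents());
    }
}
```

The procedure has no way to overdraw an account, because the only operations available to it are the ones the entity chooses to expose.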

Encapsulation of Persistence and Data Logic

One of the significant benefits that comes from componentizing business logic – specifically of the modeling of business artifacts as entity beans – is that it encapsulates the persistence of the artifact's state and implementation. This encapsulation hides the specific schema of persistent state, but also allows that certain operations on the entity model be mapped down onto functions encoded in the data system that operate directly on the state of the entity in the data tier. For example, if you have a DB2 stored procedure in your database that computes order status based on the age of the order, then you can simply encode the getOrderStatus() method on your Order component to push that operation down on the stored procedure in your database.
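A minimal sketch of that push-down follows, in plain Java rather than entity bean or JDBC code. The `OrderDataTier` interface is a hypothetical stand-in for the stored procedure, and the in-memory implementation, the 30-day threshold, and the status strings are all assumptions made for the illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative only: the data tier here is an in-memory fake, not DB2.
final class PersistenceSketch {

    // Stands in for a stored procedure that computes status from the order's age.
    interface OrderDataTier {
        String orderStatus(int orderId);
    }

    // Fake data tier; the 30-day rule is invented for this sketch.
    static final class InMemoryDataTier implements OrderDataTier {
        private final Map<Integer, Integer> ageDaysByOrder = new HashMap<>();

        void store(int orderId, int ageDays) { ageDaysByOrder.put(orderId, ageDays); }

        public String orderStatus(int orderId) {
            Integer ageDays = ageDaysByOrder.get(orderId);
            if (ageDays == null) return "UNKNOWN";
            return ageDays > 30 ? "OVERDUE" : "ON_TRACK";
        }
    }

    // The business component: callers see getOrderStatus() and nothing about
    // the schema or where the computation actually runs.
    static final class Order {
        private final int id;
        private final OrderDataTier dataTier;

        Order(int id, OrderDataTier dataTier) {
            this.id = id;
            this.dataTier = dataTier;
        }

        String getOrderStatus() {
            return dataTier.orderStatus(id); // operation pushed down to the data tier
        }
    }

    public static void main(String[] args) {
        InMemoryDataTier dataTier = new InMemoryDataTier();
        dataTier.store(1, 45);
        System.out.println(new Order(1, dataTier).getOrderStatus());
    }
}
```

Clients of `Order` are unaffected if the status rule later moves between the component and the database, which is the point of the encapsulation.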

Encapsulation of persistence and data logic within the entity bean can be done either with bean-managed persistence (BMP), where you're entirely responsible for managing the relationship of the bean's state to the underlying data system, or with container-managed persistence (CMP), where the container takes responsibility for managing that relationship. We recommend that you use container-managed persistence whenever possible, as the container can generally achieve a higher level of throughput and better resource utilization across the system given its knowledge of everything else that is going on.

Encapsulation of persistence and data logic is discussed further in Chapter 5.

Adapter-based Integration

Business procedures (or even entities) represented in your application design model may actually be implemented elsewhere in your information system – based on completely unrelated information technologies. Abstract components can be introduced to your application to represent this function implemented elsewhere. These abstract components are adapters – bridging between WebSphere and your non-WebSphere execution environments. In WebSphere, adapters based on the Java 2 Connector Architecture are implemented as session beans and/or web services. Further, interactions within the adapter to the external application may be implemented with micro-flows (a specialization of workflow) that can be used to compose multiple calls to the external program to form the function represented by the abstract component.

Conceptually, the use of adapters does not have to be limited to just encapsulating business procedure functions – adapters can also be used to encapsulate the state of business artifacts. This is generally achieved by implementing entity beans that make use of session beans that in turn are implemented as adapters to the external system.
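The adapter idea, including a very small micro-flow, can be sketched in plain Java. Nothing here uses the connector APIs: `LegacyInventorySystem` and its text command protocol are invented stand-ins for an external system, and `InventoryService` is a hypothetical abstract component that the rest of the application would call.

```java
// Illustrative only: the "legacy system" and its command protocol are invented.
final class AdapterSketch {

    // Stand-in for an external, non-Java system reached through text commands
    // that answers with fixed-width, space-padded numbers.
    static final class LegacyInventorySystem {
        String send(String command) {
            if (command.startsWith("ONHAND ")) return "  0042";
            if (command.startsWith("RESERVED ")) return "  0007";
            return "ERR";
        }
    }

    // The abstract component the application programs against.
    interface InventoryService {
        int unitsAvailable(String sku);
    }

    // The adapter: bridges the business interface to the external system.
    static final class LegacyInventoryAdapter implements InventoryService {
        private final LegacyInventorySystem legacy;

        LegacyInventoryAdapter(LegacyInventorySystem legacy) { this.legacy = legacy; }

        // A tiny micro-flow: two calls to the external program are composed
        // into the single function the abstract component represents.
        public int unitsAvailable(String sku) {
            int onHand = Integer.parseInt(legacy.send("ONHAND " + sku).trim());
            int reserved = Integer.parseInt(legacy.send("RESERVED " + sku).trim());
            return onHand - reserved;
        }
    }

    public static void main(String[] args) {
        InventoryService inventory = new LegacyInventoryAdapter(new LegacyInventorySystem());
        System.out.println("available: " + inventory.unitsAvailable("TULIP-01"));
    }
}
```

Callers never see the command strings or the padded replies, so the external system can be replaced without changing the components that depend on `InventoryService`.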

Adapters and connectors are detailed in Chapter 7.

Request-Response Messaging

Request-response messaging can be used to form a remote-procedure-call (RPC)-like relationship between two components using messaging. Unlike RPC-based communication, the calls from a component to another component are not blocking. Instead, you issue a request message from one component to the other. Control is immediately handed back to your program, allowing you to perform some other work while you're waiting for the response to your request. In the meantime the second component will receive the request message, operate on it, and issue a response message back to your first component. The first component then needs to listen for response messages and correlate them to their corresponding request messages.
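The correlation step is the part that most often needs care, so here is a minimal sketch of it. It uses in-memory queues from the Java standard library rather than a real messaging provider, and delivery is driven by explicit calls instead of listener threads so that the behavior is easy to follow. The class names and the `req-` identifier format are assumptions made for the illustration.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Queue;
import java.util.concurrent.CompletableFuture;

// Illustrative only: in-memory queues stand in for a messaging provider.
final class RequestResponseSketch {

    static final class Message {
        final String correlationId;
        final String body;
        Message(String correlationId, String body) {
            this.correlationId = correlationId;
            this.body = body;
        }
    }

    // First component: sends requests without blocking and later matches
    // each response to its request by correlation ID.
    static final class Requester {
        private final Queue<Message> requestQueue;
        private final Map<String, CompletableFuture<String>> pending = new HashMap<>();
        private int nextId = 0;

        Requester(Queue<Message> requestQueue) { this.requestQueue = requestQueue; }

        CompletableFuture<String> request(String body) {
            String id = "req-" + (nextId++);
            CompletableFuture<String> reply = new CompletableFuture<>();
            pending.put(id, reply);
            requestQueue.add(new Message(id, body));
            return reply; // control returns immediately; the reply arrives later
        }

        // Called when a response message is delivered to this component.
        void onResponse(Message response) {
            CompletableFuture<String> reply = pending.remove(response.correlationId);
            if (reply != null) {
                reply.complete(response.body);
            }
        }
    }

    // Second component: consumes requests and issues responses that carry
    // the same correlation ID as the request they answer.
    static final class Responder {
        static void serveAll(Queue<Message> requests, Queue<Message> responses) {
            Message request;
            while ((request = requests.poll()) != null) {
                String result = request.body.toUpperCase(Locale.ROOT);
                responses.add(new Message(request.correlationId, result));
            }
        }
    }

    public static void main(String[] args) {
        Queue<Message> requests = new ArrayDeque<>();
        Queue<Message> responses = new ArrayDeque<>();
        Requester requester = new Requester(requests);

        CompletableFuture<String> reply = requester.request("alpha");
        System.out.println("reply ready before serving? " + reply.isDone());

        Responder.serveAll(requests, responses);
        Message response;
        while ((response = responses.poll()) != null) {
            requester.onResponse(response);
        }
        System.out.println("reply: " + reply.join());
    }
}
```

Because replies are matched by identifier rather than by arrival order, the requester still resolves each request correctly when responses come back out of sequence.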

Publish-Subscribe Messaging

Another design pattern for message-oriented components is based on publishing and subscribing to notifications. With the pub-sub model you can create neural-network-like designs in your application. A component subscribes to a message topic that it works on. As messages of that type are generated by other components, the component receives the message and operates on it. It may produce other messages – perhaps of different types put on different topic queues. By interconnecting components of this sort, you can compose an aggregate network of otherwise independent components to perform your business application function.
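A small in-memory sketch shows how independent components can be chained purely through topics. It is not written against a messaging API: `TopicBus` is a hypothetical class, delivery is synchronous for simplicity (a real provider would deliver asynchronously), and the topic names are invented.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Illustrative only: a synchronous in-memory bus, not a messaging provider.
final class PubSubSketch {

    static final class TopicBus {
        private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

        void subscribe(String topic, Consumer<String> handler) {
            subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
        }

        void publish(String topic, String message) {
            List<Consumer<String>> handlers = subscribers.get(topic);
            if (handlers == null) {
                return; // nobody is interested in this topic
            }
            // Iterate over a copy so handlers may subscribe while we deliver.
            for (Consumer<String> handler : new ArrayList<>(handlers)) {
                handler.accept(message);
            }
        }
    }

    // Wires three independent components together and returns what they did.
    static List<String> run() {
        TopicBus bus = new TopicBus();
        List<String> log = new ArrayList<>();

        // Pricing component: consumes placed orders, produces priced orders.
        bus.subscribe("order.placed", order -> bus.publish("order.priced", order + ":priced"));
        // Shipping and billing components both react to priced orders.
        bus.subscribe("order.priced", priced -> log.add("ship " + priced));
        bus.subscribe("order.priced", priced -> log.add("bill " + priced));

        bus.publish("order.placed", "order-1");
        return log;
    }

    public static void main(String[] args) {
        for (String entry : run()) {
            System.out.println(entry);
        }
    }
}
```

None of the three components refers to another directly. Adding a fourth, for example an auditing subscriber on `order.priced`, requires no change to the existing ones.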

Web Services

The web services design pattern encapsulates a business component with the formalisms defined by the web services standards, and in doing so enables more loosely-coupled access to that component. Web services do not define a component implementation model per se, but rather borrow from the existing component model for business procedures. The result, however, is to present clients of that procedure with an abstract component definition that elevates it to the idea of a business service.

The web services pattern is described in Chapter 8.

Workflow

Workflow is a way of composing a set of individual business services and procedures into an overall business process. The workflow pattern is a powerful mechanism for codifying your business. It allows you to visualize your business processes, reason about their correctness and appropriateness to your markets, and quickly adjust your business processes as business conditions change. The design pattern involves capturing your business process in a workflow definition. The business process definition describes the flow of control and information between business activities. In turn, activities are defined in terms of web services – each activity in the flow is a call to a web service that implements that activity. In this sense, a workflow is a composition of business services used to perform that business process.
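The idea of a process definition that is separate from the activities it invokes can be sketched in a few lines of plain Java. This is not a workflow engine and uses no WebSphere API: `Workflow` and `Activity` are hypothetical names, the flow is strictly sequential, and each activity is an ordinary Java callback standing in for a call to a web service.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustrative only: a sequential process definition, not a workflow engine.
final class WorkflowSketch {

    // One business activity; in the pattern above this would be a web service call.
    interface Activity {
        void execute(Map<String, Integer> context);
    }

    // The process definition: an ordered set of named activities that pass
    // information to each other through a shared context.
    static final class Workflow {
        private final Map<String, Activity> steps = new LinkedHashMap<>();

        Workflow then(String name, Activity activity) {
            steps.put(name, activity);
            return this;
        }

        // Runs the activities in definition order and returns the names executed.
        List<String> run(Map<String, Integer> context) {
            List<String> trace = new ArrayList<>();
            for (Map.Entry<String, Activity> step : steps.entrySet()) {
                step.getValue().execute(context);
                trace.add(step.getKey());
            }
            return trace;
        }
    }

    public static void main(String[] args) {
        Workflow orderProcess = new Workflow()
                .then("computeShipping", c -> c.put("shippingCents", 500))
                .then("computeTotal", c -> c.put("totalCents",
                        c.get("subtotalCents") + c.get("shippingCents")));

        Map<String, Integer> context = new HashMap<>();
        context.put("subtotalCents", 1000);
        System.out.println("steps run: " + orderProcess.run(context));
        System.out.println("total: " + context.get("totalCents"));
    }
}
```

Changing the business process means editing the definition (adding, removing, or reordering steps) while the activities themselves stay as they are.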

Workflows are discussed in more detail in Chapter 10.

Together, these models form relatively basic definitions of how to construct the components in your application. Your challenge, then, is to compose these components into an organization that fulfills the objectives for your application.

|
