Virtual Partitions (Peak Performance Virtualization)

| HP supports two different virtual partitioning options: Virtual Partitions and Integrity Virtual Machines. Each has its own benefits and tradeoffs, so we will describe both of them here and then discuss how to choose the technology that fits your needs later, in Chapter 3, Making the Most of the HP Partitioning Continuum. Let's first look at Virtual Partitions, or vPars.

Key Features

Virtual Partitions (vPars) lets you run multiple copies of HP-UX on a single set of hardware, which can be a system or an nPar. You allocate CPUs, memory, and I/O card slots to each of your OS images. Once that is done, the partitions boot as if they were separate systems.

Functionality

The key features of vPars include:

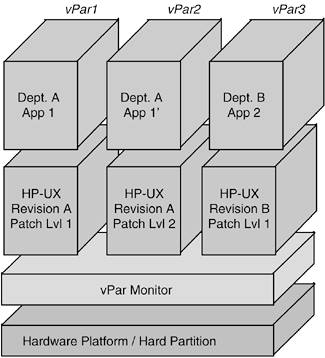

System and OS Support

The vPars product has been available since 2001. It supports the rp5405, rp5470, rp7400, and all of HP's cell-based servers. There are some limitations on the cards and systems supported, and these change regularly, so you should verify that the configuration you are considering is supported by the current vPars software.

Architecture

This section provides some insight into the architecture of the vPars product.

Overview

vPars runs on top of a lightweight kernel called the vPar Monitor. The high-level architecture is shown in Figure 2-11.

Figure 2-11. vPars Architecture Overview

Some interesting things to note in this figure include:

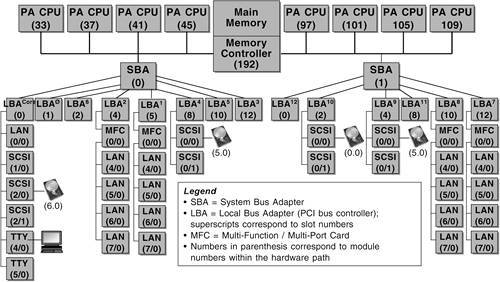

Partitionable Resources

To decide how to divide up a system, the first step is to run an ioscan to map out the resources that are available. The ioscan output provides all the information you need to identify which resources are available for assignment to vPars.

Note: It is important that you run the ioscan and save or print the output before configuring and booting your first vPar. Once the monitor is running, the command can only be run from a running vPar, and running ioscan from a vPar shows only the resources available in that vPar. This means that you won't be able to identify the resources that can be assigned to other vPars. You can still identify the existence of I/O cards using the 'vparstatus -A' command, but it gives no information about what types of cards they are.

Figure 2-12 shows the resources available on an rp7400 graphically.

Figure 2-12. Partitionable Resources on an rp7400

There are a few key things to understand about this diagram before you decide how to partition this system.

Partitioning CPUs

On older versions of vPars, there was the concept of bound vs. unbound processors. The distinction is this:

An important distinction with the bound processors is that the actual I/O activity could be handled by any of the processors; only the interrupts were handled by the bound processors. On these older versions, each vPar needed at least one bound processor, but it could benefit from more, depending on the amount of I/O interrupt handling required by the workloads running in the vPar. Version 4.1 of vPars resolved this issue. HP-UX 11i v2 now supports the movement of I/O interrupts between processors, so there is no longer any need for bound processors. You still need at least one processor if you want to keep the vPar running, of course. Other than that, all the CPUs can freely move between vPars.

Partitioning Memory

Memory is allocated to vPars in 64 MB ranges. It is possible to specify the blocks of memory you want to allocate to each of the vPars, but this isn't required. The memory controller (block 192 in Figure 2-12) is owned by the vPar monitor, which allocates the memory to the vPars when they boot. Changing the memory configuration of a vPar currently requires that the vPar be shut down, reconfigured, and then rebooted.

Partitioning I/O Devices

I/O devices are broken up into system bus adapters (SBAs) and local bus adapters (LBAs). The SBAs are owned by the vPar monitor. I/O devices are assigned to vPars at the LBA or SBA level. On HP systems, the LBA is equivalent to an I/O card slot. Each SBA or LBA can be assigned to a specific vPar. Whatever card is in that slot becomes owned by that vPar and will show up in an ioscan once that vPar is booted. Keep in mind that if the card is a multifunction or multiport card, then all the ports must be assigned to the same vPar. Changing the I/O slot configuration of a vPar requires that the vPar be shut down, reconfigured, and then rebooted. A couple of interesting points on this:
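
As a rough illustration of the partitioning rules described in this section, the following Python sketch models three of them: memory granted in 64 MB ranges, each I/O slot owned by exactly one vPar, and a vPar keeping at least one processor. This is not how the vPar monitor is implemented; the names `round_up_to_granule`, `SlotTable`, and `can_remove_cpu`, the slot path strings, and the choice to round a request up rather than reject it are all assumptions made for the example.

```python
from dataclasses import dataclass, field

GRANULE_MB = 64  # the text states that memory is allocated in 64 MB ranges


def round_up_to_granule(requested_mb: int) -> int:
    """Round a memory request up to the next multiple of 64 MB (assumed policy)."""
    if requested_mb <= 0:
        raise ValueError("memory request must be positive")
    # Ceiling division without floating point.
    return -(-requested_mb // GRANULE_MB) * GRANULE_MB


@dataclass
class SlotTable:
    """Hypothetical bookkeeping: maps an I/O slot path to the vPar that owns it."""
    owner: dict = field(default_factory=dict)

    def assign(self, slot: str, vpar: str) -> None:
        """Assign a slot to a vPar; a slot can belong to only one vPar."""
        current = self.owner.get(slot)
        if current is not None and current != vpar:
            raise ValueError(f"slot {slot} already belongs to {current}")
        self.owner[slot] = vpar


def can_remove_cpu(cpu_count: int) -> bool:
    """A vPar must keep at least one processor to stay running."""
    return cpu_count > 1
```

Under these assumptions, a request for 1000 MB would be granted as 1024 MB, and a second vPar asking for a slot that is already assigned would be refused.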

Security Considerations

To make it possible to administer vPars from any of the partitions, the early versions of the vPar commands were accessible from any of the partitions. These include the vparmodify, vparboot, and vparreset commands. On the positive side, a root user in any of the partitions can repair the vPar configuration database. The tradeoff was that a root user in one partition could shut down, reboot, or damage the configuration of another partition.

Newer versions of vPars will resolve this issue by allowing you to designate each vPar as a Primary or a Secondary administration domain. When these commands are run in a vPar that is a Primary administration domain, they will work as they did before. However, when they are run in a vPar that is a Secondary administration domain, they will only allow you to operate on the vPar the command is run in. In other words, you will be able to run a vparmodify command to alter the configuration of the local vPar, but if you attempt to modify the configuration of another vPar, the command will fail.

We do need to clear up one common misconception about this security concern, though. Each partition runs a completely independent copy of the operating system, including separate root logins. Therefore, if one of the vPars were compromised in a security attack, the attacker could not gain access to processes running inside another vPar except through the network, as with any other separate system.

Performance Characteristics

We describe this technology as peak performance virtualization because the physical resources of the system are assigned to partitions and, once they are assigned, the operating system in the partition accesses those resources directly. The tradeoff here is flexibility. For example, each I/O card slot can only be assigned to a single vPar. However, because the OS interfaces directly with the card, no translation layer is required. If I/O performance is critical for an application in your environment, you will want to ensure that the solution you are using supports this direct I/O capability. |
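
The Primary and Secondary administration domain rule described under Security Considerations can be sketched as a simple permission check. This is a minimal model of the behavior the text describes, not the vPars implementation; `AdminDomain` and `may_operate_on` are hypothetical names introduced for illustration.

```python
from enum import Enum


class AdminDomain(Enum):
    PRIMARY = "primary"
    SECONDARY = "secondary"


def may_operate_on(caller: str, caller_domain: AdminDomain, target: str) -> bool:
    """Decide whether a vPar command run in `caller` may act on `target`.

    Primary domains may act on any vPar, as the early commands did.
    Secondary domains may act only on the vPar the command is run in.
    """
    if caller_domain is AdminDomain.PRIMARY:
        return True
    return caller == target
```

In this model, a command run in a Secondary vPar named `vpar2` could modify `vpar2` itself, but an attempt to modify `vpar1` would be refused.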
