nPartitions (Electrically Isolated Hardware Partitions)

| nPartitions, or nPars, is HP's hardware partitioning solution. Because nPars is a hardware partitioning solution, it can be difficult to separate the features of the hardware from the features of nPars, so we will start by discussing the hardware features that affect partitioning and then discuss partitioning itself.

Key Features

Single-System High-Availability (HA) Features

nPartitions allows you to isolate hardware failures so that they affect only a portion of the system. A number of other single-system HA features are designed to reduce the number of failures in any of the partitions. These include n+1, hot-swappable components such as:

These features ensure that the infrastructure is robust enough to support multiple partitions. In addition, a number of error resiliency features are designed to ensure that all the partitions can keep running. These include:

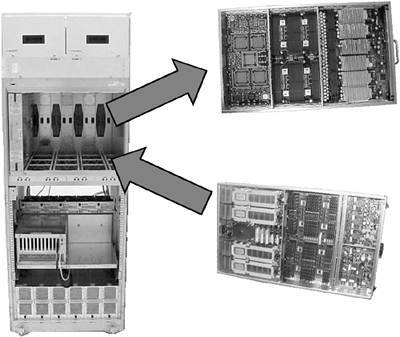

Finally, nPars provides hardware isolation to ensure that anything not corrected will impact only a portion of the system.

Investment Protection

HP's cell-based systems were designed from the beginning to provide industry-leading investment protection. In other words, the system can go through seven years of processor and cell upgrades inside the box. One example of how this is done is shown in Figure 2-3.

Figure 2-3. Superdome In-Box Cell Upgrade

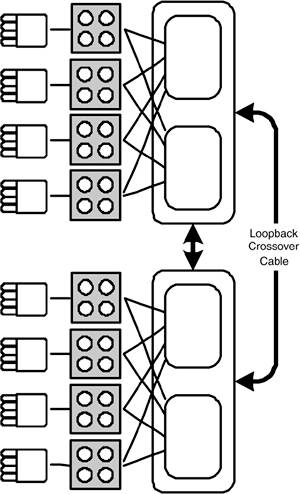

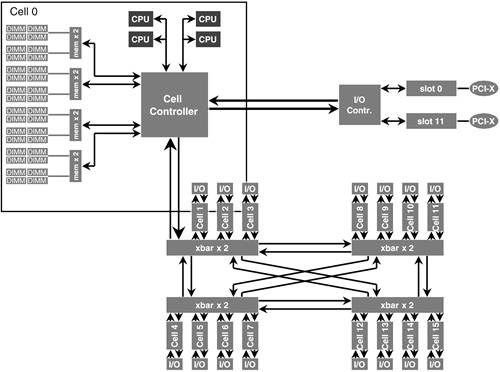

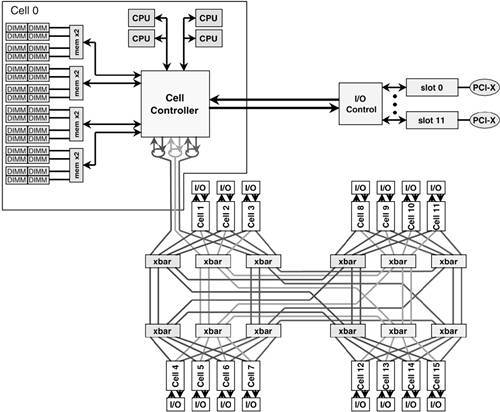

This picture shows the inside of a Superdome cabinet and two generations of cells that are currently supported in this cabinet. The cell on the top is for the PA-8600, PA-8700, and PA-8700+ processors. This provided three generations of processors with the same cabinet, cell, memory, and I/O. Moving to Itanium or PA-8800 requires a cell-board swap. However, the memory in the old cell can be moved to the new cell as part of the upgrade, so the only things changing are the processors and cell boards. In addition, the new cell board supports five generations of CPUs, including both PA (8800 and 8900) and Itanium (Madison, Madison 9M, and Montecito). So a user could start with the PA-8800 and upgrade to Itanium later using the same cell board. There are three more years' worth of processor and memory upgrades planned for this cell board.

Finally, another upgrade is available for this same cabinet. As was mentioned earlier, there is a new chipset, the sx2000, that increases the number and bandwidth of the crossbars on the backplane. This provides yet another in-box upgrade that supports the same CPUs and I/O.

The Anatomy of a Cell-Based System

Dual-Cabinet sx1000-Based Superdome Architecture

The architecture of the Superdome based on the sx1000 chipset is depicted in Figure 2-4. A fully loaded Superdome can support two cabinets, each holding eight cells and four I/O chassis. Each cell has four CPU sockets that can hold single- or dual-core processors. The chipset also supports either PA or Itanium processors. Each cell has 32 dual inline memory module (DIMM) slots that can currently support 64GB of memory, although this will increase over time. Four cells (called a quad) are connected to each of two crossbars inside each cabinet.

Figure 2-4. Fully Loaded Superdome Component Architecture Using the sx1000 Chipset

A few things to note in this diagram.

Single-Cabinet sx1000-Based Superdome Architecture

There is one significant difference between a dual-cabinet and a single-cabinet Superdome. The single-cabinet sx1000-based Superdome architecture is shown in Figure 2-5.

Figure 2-5. Single-Cabinet Superdome Component Architecture Based on the sx1000 Chipset

As you can see from this diagram, the crossover cable that connects the two cabinets in a dual-cabinet configuration can be looped back to double the number of crossbar links between the two crossbars in the single-cabinet configuration. This improves the performance and flexibility of the server.

The New sx2000 Chipset

In late 2005, HP introduced a new chipset for its cell-based systems called the sx2000. Figure 2-6 shows the architecture of a dual-cabinet Superdome with the new chipset.

Figure 2-6. Dual-Cabinet Superdome Component Architecture Based on the sx2000 Chipset

The key enhancements in this chipset are:

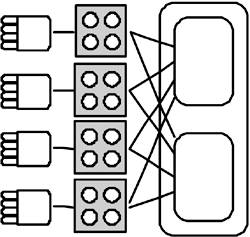

The end result of all of this is more resilience and more bandwidth. This means better performance and better availability.

Midrange System Architectures

Two other midrange systems use the same cell and crossbar architecture. The first is a four-cell system that was first introduced as the rp8400. The architecture of this system is shown in Figure 2-7. This same architecture is used in the rp8420 and rx8620 systems.

Figure 2-7. HP rp8400 Component Architecture

Since there are only four cells in this system, only one crossbar is required. The core architecture is effectively the same as half of that of a single-cabinet Superdome. There is approximately 60% technology reuse up and down the product line. Figure 2-8 shows a picture of the Superdome and an rp8400.

Figure 2-8. The Superdome and rp8400 Reuse Much of the Same Technology

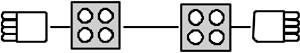

Much of this technology is also used in the rp7410, rp7420, and rx7620, although there is no need for a crossbar in these systems. Each of them has only two cells, so the two cell controllers are connected directly to each other rather than through a crossbar. This is shown in Figure 2-9.

Figure 2-9. HP rp7410 Component Architecture

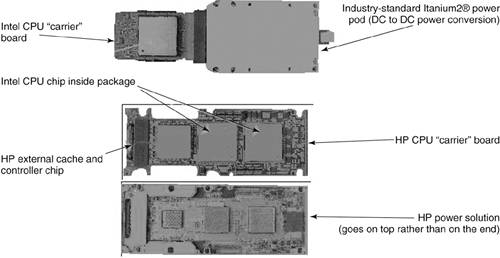
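The cell counts described in this section can be turned into a quick capacity calculation. The following is only a sketch, not an HP tool: the class and function names are hypothetical, and the crossbar rule (one crossbar per quad of four cells, none for a two-cell system) is our reading of the sx1000-based descriptions above, so it is not meant to describe the sx2000.

```python
from dataclasses import dataclass

SOCKETS_PER_CELL = 4    # from the text: four CPU sockets per cell
CELLS_PER_CROSSBAR = 4  # from the text: a quad of cells per sx1000 crossbar


@dataclass(frozen=True)
class CellSystem:
    """Hypothetical model of a cell-based server (illustration only)."""
    name: str
    cabinets: int
    cells_per_cabinet: int

    @property
    def cells(self) -> int:
        return self.cabinets * self.cells_per_cabinet

    @property
    def crossbars(self) -> int:
        # Two-cell systems connect the cell controllers directly.
        if self.cells <= 2:
            return 0
        # Ceiling division: one crossbar per group of up to four cells.
        return -(-self.cells // CELLS_PER_CROSSBAR)

    def max_cores(self, cores_per_socket: int = 2) -> int:
        return self.cells * SOCKETS_PER_CELL * cores_per_socket


SYSTEMS = [
    CellSystem("Superdome (dual cabinet)", cabinets=2, cells_per_cabinet=8),
    CellSystem("Superdome (single cabinet)", cabinets=1, cells_per_cabinet=8),
    CellSystem("rp8400", cabinets=1, cells_per_cabinet=4),
    CellSystem("rp7410", cabinets=1, cells_per_cabinet=2),
]


def summarize(systems=SYSTEMS):
    """Map each system name to (cells, crossbars, max dual-core CPUs)."""
    return {s.name: (s.cells, s.crossbars, s.max_cores()) for s in systems}


if __name__ == "__main__":
    for name, (cells, crossbars, cores) in summarize().items():
        print(f"{name}: {cells} cells, {crossbars} crossbars, {cores} cores")
```

With dual-core processors in every socket, the dual-cabinet entry works out to 16 cells x 4 sockets x 2 cores = 128 CPUs, which matches the Superdome maximum quoted in this section.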

The key advantage to reusing system components is cost. Reuse makes it possible for HP to provide more features at a lower overall cost.

Dual-Core Processors

In 2004, HP introduced support for dual-core processors in all of its servers that use the PA-8800 or the MX2 Itanium daughtercard. The MX2 is an interesting HP invention that warrants a brief description. HP was scheduled to release the PA-8800 processor in early 2004, which would allow the Superdome to go up to 128 CPUs. At that time Intel already had a dual-core Itanium processor (Montecito) on its roadmap, but this wasn't scheduled for release until 2005. HP didn't want to wait, so the HP chipset designers came up with a clever solution. They analyzed the form factor of the Itanium processor (top of Figure 2-10) and realized that it was possible to fit two processors in the same form factor.

Figure 2-10. Intel Itanium Processor Compared to the HP MX2

They then put together a daughtercard that carried two Itanium processors, a controller chip, and a 32MB level-4 cache, and fit all this into the same form factor, power requirements, and pin-out of a single Itanium chip. They did this by laying the power pod on top of the daughtercard rather than plugging it into the side. The result is that you now have two Itanium chips plus a 32MB cache in every socket in the system. For some workloads, the addition of the cache alone results in as much as a 30% performance improvement over a system with the same number of processors. However, in order to maintain the power and thermal envelope of a single Itanium processor, the MX2 doesn't support the fastest Itanium processors.

nPar Configuration Details

Much of what we have talked about so far has been features of the hardware in HP's cell-based servers. Although this is all very interesting, what does it have to do with the Virtual Server Environment (VSE)?
Since this section is about hardware partitioning, the discussion so far has focused on helping you understand the infrastructure that you use to set up nPartitions. Now let's talk about how this all leads to an nPartition configuration. Earlier in this section we showed you a couple of architecture diagrams of the Superdome, both the single-cabinet and dual-cabinet configurations. An extensive set of documents describes how to set up partitions that have peak performance and maximum resiliency and flexibility. We are not going to attempt to replace those documents here. However, we do want to give you some guidance on where to look.

Selection of Partition Cells

One nice feature of the Superdome program is that there is a team of people to help you determine how you want the system partitioned as part of the purchasing process. That way the system is delivered already partitioned the way you want. Customer data suggests that very few customers change that configuration later. That said, many customers have become much more comfortable with dynamic systems technologies, so we expect more reconfiguration in the future.

When you get to the point that you want to reconfigure your partitions, a key resource for determining how to lay out your partitions is the HP System Partitions Guide, particularly for Superdomes with the sx1000 chipset. Although any combination of cells will work, there are a number of recommendations on combinations that provide the best performance and resilience. A tremendous amount of effort went into those recommendations. If you can, you should stick with them. An additional reason to look at the recommendations in the manual is that the combinations in that document will be different for the sx2000 chipset. Because of the triple-redundant connections between all of the cells and crossbars in the sx2000, the recommendations are more open.
Memory Population

For many workloads, getting maximum memory performance is critical. Several key memory-loading concepts are helpful in making sure you get optimal performance from your system. The first is that there are dual memory buses in the system. To take full advantage of the architecture, you should always load your memory four to eight DIMMs at a time. This will ensure that you are using both memory buses.

The other important concept affecting memory loading is memory interleaving. This is also important to nPars, because to get optimal performance from an nPar, you need to ensure that the amount of memory on each cell in the partition is the same. This is because the memory addressing in the partition is interleaved, which means the memory is evenly spread out in small increments over all the cells in the partition. The major advantage to this is that large memory accesses can take advantage of many memory buses at the same time, increasing overall bandwidth and performance.

HP-UX 11i v2 introduced cell-local memory. With cell-local memory, allocation is done from memory on the same cell as the processor running the process that is allocating the memory. Interleaving is better when large blocks of memory are being accessed in short periods of time. Workloads that can take advantage of this include statistical analysis, data warehousing, and supply chain optimization. Cell-local memory is best for workloads that do lots of small memory accesses, such as online transaction processing and web applications.

In addition, you can assign both cell-local and interleaved memory in each of your cells and each of your nPars. There are several things to remember here. The first is that you want to make sure that you still have the same amount of interleaved memory on all of the cells within each nPar. The other is that most workloads can typically benefit from a combination.
Finding the right balance tends to be very workload dependent, so we recommend that you discuss your requirements with your HP Solutions Architect and then test a few combinations to determine the best balance.

More details on nPartitions and how to configure and manage them are provided in Chapter 5, "nPartition Servers." |
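The memory-population guidance in this section can be expressed as a couple of simple checks. The sketch below is illustrative only and does not use any HP tool or API: the names are hypothetical, treating the DIMM guidance as "a multiple of four per cell" is our simplifying assumption, and the 64-byte interleave granule is a made-up value, since the text says only that memory is spread over the cells "in small increments."

```python
from dataclasses import dataclass
from typing import List

DIMM_GROUP = 4  # assumption: model "four to eight at a time" as multiples of four


@dataclass
class CellMemory:
    """Hypothetical description of the memory installed on one cell."""
    dimms: int
    interleaved_gb: int
    cell_local_gb: int


def check_npar(cells: List[CellMemory]) -> List[str]:
    """Return a list of problems with an nPar's memory layout (empty if none)."""
    problems = []
    for i, cell in enumerate(cells):
        if cell.dimms % DIMM_GROUP != 0:
            problems.append(
                f"cell {i}: {cell.dimms} DIMMs is not a multiple of {DIMM_GROUP}"
            )
    # Every cell in the nPar should contribute the same interleaved amount.
    if len({cell.interleaved_gb for cell in cells}) > 1:
        problems.append("interleaved memory differs between cells")
    return problems


def interleaved_cell(address: int, n_cells: int, granule: int = 64) -> int:
    """Cell that holds `address` when memory is striped round-robin.

    `granule` is a hypothetical increment size in bytes.
    """
    return (address // granule) % n_cells


if __name__ == "__main__":
    npar = [CellMemory(8, 12, 4), CellMemory(8, 12, 4)]
    print(check_npar(npar))
    print([interleaved_cell(a, n_cells=4) for a in range(0, 512, 64)])
```

The second function shows why a large, contiguous access benefits from interleaving: consecutive granules land on different cells, so several memory buses are used at once. A process that touches only small amounts of memory gains little from that spreading, which is where cell-local memory helps.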
