QoS for WLANs

Wi-Fi effectively handles data services, but now it must show that it can support real-time traffic. This requires QoS functionality. To deliver QoS effectively via a wireless network, the network must be designed so that QoS can be maintained across the network ecosystem—wired and wireless—in an end-to-end manner (source to destination). A bottleneck at any juncture can nullify one or more of the QoS provisions.

When you consider QoS in a Wi-Fi network, you must realize that it has always been limited to the radio frequency (RF) link. Today, when a network manager provisions end-to-end QoS over a wireless network, a policy management server must be installed to aggregate network traffic. That may soon change.

The engineering community has decided to provide QoS via data packet prioritization so that important packets are more likely to make it through an end-to-end QoS implementation. This could be, for instance, from a Wi-Fi-based VoIP phone through a DSL connection to a telco termination to the PSTN, or a video signal from a cable modem over an 802.11a connection to a receiver.

| Note | A "flow" usually refers to a combination of source and destination addresses, source and destination socket numbers, and the session identifier, but the term can also be defined as any packet from a certain application or from an incoming interface, which is how the term is used in this chapter. |

This is where the Institute of Electrical and Electronics Engineers (IEEE) enters the picture. Its 802.11 Working Group realized the need for some QoS parameters for Wi-Fi to reach the comprehensive, converged communications usage that seems to be its destiny. That Working Group formed the 802.11e Task Group to draft a specification that would ensure that time-sensitive multimedia and voice applications could be sent over a Wi-Fi connection without jitter or interruptions. Thus Task Group 802.11e (also known as TGe) is an effort on the part of the IEEE 802 standards body to define and ratify new MAC functions, including QoS specifications for wireless communication protocols in the 802.11 family of standards. But at the same time, the Task Group is also ensuring that providing priority for one or more traffic flows does not make other flows fail.

Wi-Fi's QoS Contributions

Although many complain about Wi-Fi networks' lack of Quality of Service, there are some provisions for QoS in the 802.11 specifications. As Wi-Fi technology and its wireless networks gain acceptance in many different networking environments, some may forget that the main characteristic of all 802.11 networks (a, b, and g) is their distributed approach, which provides simplicity and robustness against failures. But, as you will learn, that distributed approach sometimes hinders QoS solutions.

Voice and video services require what's known as "isochronous data transfer" for high-quality operation. For example, transferring an analog voice signal across a digital network involves using an analog-to-digital converter to continuously sample the input voltage waveform to produce a series of digital numbers (perhaps of 8-bit precision). This series of samples is then transmitted across the network, where a digital-to-analog converter transforms the series of digital samples back into a voltage waveform.
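As a rough illustration of the sampling step just described, the sketch below digitizes a tone into 8-bit samples and converts them back. The 8 kHz sample rate, the 440 Hz tone, and the function names `adc`, `dac`, and `sample_tone` are assumptions chosen for illustration; the text only specifies 8-bit precision.

```python
import math

SAMPLE_RATE_HZ = 8000  # assumed telephony-style rate, not stated in the text
BITS = 8               # sample precision mentioned in the text
LEVELS = 2 ** BITS     # 256 quantization levels


def adc(voltage):
    """Map a voltage in [-1.0, 1.0] to an unsigned 8-bit sample."""
    clipped = max(-1.0, min(1.0, voltage))
    return round((clipped + 1.0) / 2.0 * (LEVELS - 1))


def dac(sample):
    """Map an unsigned 8-bit sample back to a voltage in [-1.0, 1.0]."""
    return sample / (LEVELS - 1) * 2.0 - 1.0


def sample_tone(freq_hz, n_samples):
    """Sample a sine tone at SAMPLE_RATE_HZ and digitize each sample."""
    return [adc(math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE_HZ))
            for n in range(n_samples)]


samples = sample_tone(440.0, 80)      # 10 ms of audio at the assumed rate
restored = [dac(s) for s in samples]  # receiver-side reconstruction
bit_rate = SAMPLE_RATE_HZ * BITS      # bits per second the network must carry
```

Under these assumptions the stream needs a steady 64,000 bits per second, and every sample must arrive in time to be played out, which is why delay matters more here than for a file transfer.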

For such a scheme to work well, the network transporting the voice data must not introduce significant amounts of delay in packet arrival times. Minimal delay is easy to achieve when there is little other competing traffic on the network. It's when the network is heavily loaded that it's difficult to deliver low levels of delay.

Not all information that needs to be transferred across a network is equally impacted by network latency. Packets containing pure data (as opposed to voice or video) can be delayed significantly, without seriously affecting the applications they support. For example, if a file transfer took an extra second because of network delay, it's doubtful that most users would even notice the extra transfer time. With voice and video, however, milliseconds count.

The general strategy for minimizing delay in voice and video packet transfer is to institute some sort of priority scheme, under which such latency-sensitive packets get sent ahead of the less time-critical traffic and receive "preferred treatment" by network equipment.

For example, 802.11b provides two methods of accessing the medium. One is called the distributed coordination function (DCF), and the other is called the point coordination function (PCF).

DCF could be defined as the "classical Ethernet-style network media access," i.e., individual stations contend for access. DCF is actually a type of slotted Aloha scheme that is based on a CSMA/CA rule. (CA stands for "Collision Avoidance," versus Ethernet's Collision Detection scheme.) Collision avoidance is needed because wireless devices do not "listen" to the medium at the same time they are sending, whereas wired devices do.

PCF relies on a single point in the network (most likely a wireless access point) that acts like a centralized "traffic cop," telling individual stations when they may place a packet on the network. In other words, PCF acts a lot like a token-based procedure. The wireless device requests the medium, the request is queued, and when the access point (AP) reaches that position in the queue, the device is given a token to talk for a period of time. Under this scheme, a wireless device can predict when it will get the medium and for how long. That's where QoS comes in: predictability lends itself to QoS.
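The predictability argument can be sketched in a few lines. The function below is a simplified model, not the 802.11 PCF procedure itself: it assumes every polled station gets one fixed-length slot, and the names `poll_schedule`, `slot_ms`, and `cfp_ms` are hypothetical.

```python
from collections import deque


def poll_schedule(polling_list, slot_ms, cfp_ms):
    """Return (schedule, deferred) for one contention-free period.

    Stations are polled in queue order and each gets one fixed slot.
    Stations that do not fit in this period stay queued for the next one.
    """
    queue = deque(polling_list)
    schedule = []
    now = 0.0
    while queue and now + slot_ms <= cfp_ms:
        station = queue.popleft()
        schedule.append((station, now))  # station knows its start time in advance
        now += slot_ms
    return schedule, list(queue)


schedule, deferred = poll_schedule(["phone-1", "phone-2", "camera-1"],
                                   slot_ms=2.0, cfp_ms=5.0)
```

Here `phone-1` and `phone-2` are served at 0 ms and 2 ms, and `camera-1` waits for the next period. The fixed slot is the optimistic part of the model: in practice the transmission time of a polled station is not fixed.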

However, since most Wi-Fi networks and devices use DCF, there is little or no experience with PCF usage in a Wi-Fi network for QoS. Furthermore, even though PCF could support QoS functions for time-sensitive multimedia applications, this mode interjects three other issues into the QoS paradigm—any or all of which could lead to poor QoS performance:

- Its inefficient and complex central polling scheme causes the performance of PCF to deteriorate when the traffic load increases.

- Incompatible cooperation between the contention and contention-free modes can lead to unpredictable beacon delays. At Target Beacon Transmission Time (TBTT), the Point Coordinator schedules the beacon as the next frame to be transmitted, and the beacon can be transmitted once the medium has been found idle for longer than a PCF interframe space interval. Depending on whether the wireless medium is idle or busy around the TBTT, the beacon frame may be delayed. The time by which the beacon frame is delayed, i.e., how long after the TBTT it is sent, defers the transmission of time-bounded MAC Service Data Units (MSDUs) that have to be delivered in the Contention Free Period. Because wireless computing devices start their transmissions even if the MSDU transmission cannot finish before the upcoming TBTT, unpredictable time delays are introduced in each Contention Free Period, and QoS performance can be severely reduced. In the worst case, maximum beacon frame delays of around 4.9 ms are possible in 802.11a.

- Transmission time of the polled computing devices is unknown. A device that has been polled by the Point Coordinator is allowed to send a frame that may be fragmented into a varying number of smaller frames. Furthermore, 802.11a specifies different modulation and coding schemes, so in 802.11a networks the transmission time of the MSDU can change and is not under the control of the Point Coordinator. These issues can prevent the Point Coordinator from providing QoS guarantees to other stations that are polled during the remaining Contention Free Period.

Another way to provide QoS is to place a network access point (a telecommunications term for the location where data enters the telecommunications network, not a wireless access point) somewhere past the wireless access point, use PPP (Point-to-Point Protocol) and/or a VPN (virtual private network) to reach it, and let it grant the various QoS parameters.

Wired is not Wireless

Several characteristics of wireless communication pose unique problems that do not exist in wired communication. These characteristics are:

- Mobility.

- Highly variable, location-dependent, and time-varying channel capacity.

- Burstiness.

- A tendency toward high error rates.

- Limitation in channel capacity, battery, and processing power of the wireless device.

To provide the Quality of Service needed in a wireless environment, there must be mechanisms in place that can account for and adapt to such wireless specific issues. For example:

- The proportion of usable bandwidth available to each host depends on the number of hosts. So, to support QoS, a limit must be placed on the number of users per cell.

- To deal with channel quality degradation, the geographical span of the cell must be limited.

- To support QoS, a limitation must be placed on the rate of traffic sources.
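The first point in the list above amounts to a simple admission check. The sketch below assumes an equal share of the cell's usable capacity per host and ignores contention overhead; the capacity and per-host figures, and the names `max_hosts` and `admit`, are hypothetical.

```python
def max_hosts(cell_capacity_kbps, per_host_kbps):
    """Largest number of hosts that can each still get per_host_kbps."""
    return int(cell_capacity_kbps // per_host_kbps)


def admit(current_hosts, cell_capacity_kbps, per_host_kbps):
    """Admit a new host only if the cell stays within its host limit."""
    return current_hosts + 1 <= max_hosts(cell_capacity_kbps, per_host_kbps)


# Assumed figures: about 5 Mbps of usable capacity, 128 kbps needed per host.
limit = max_hosts(5000, 128)
```

With these assumed figures the cell would stop admitting hosts at 39; a real cell would need a lower limit because collisions and protocol overhead consume part of the capacity.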

The root of the latency problems in WLANs is not only that all end-users share the same link, but that a collision can effectively prevent any party from accessing a channel. Furthermore, 802.11 currently has no method to decide which client to service and for how long (e.g. no idea of the flow rate or number of backlogged packets for arbitrary traffic distributions). The binary exponential back-off scheme leads to unbounded jitter.
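To see why the back-off scheme makes delay hard to bound, the sketch below computes how the contention window grows with each failed attempt. The constants are the 802.11b (DSSS) values, which are not quoted in the text; the function names are hypothetical, and the model ignores time spent waiting for a busy medium.

```python
import random

CW_MIN = 31        # 802.11b (DSSS) minimum contention window, in slots
CW_MAX = 1023      # 802.11b maximum contention window, in slots
SLOT_TIME_US = 20  # 802.11b slot time in microseconds


def contention_window(retries):
    """Contention window after `retries` failed attempts (doubles each time)."""
    return min((CW_MIN + 1) * (2 ** retries) - 1, CW_MAX)


def backoff_us(retries, rng):
    """Draw a random backoff delay, uniform over [0, CW] slots."""
    return rng.randint(0, contention_window(retries)) * SLOT_TIME_US


windows = [contention_window(r) for r in range(7)]
worst_case_us = [w * SLOT_TIME_US for w in windows]
```

The window runs 31, 63, 127, and so on up to 1023 slots, so the possible wait per attempt spreads from at most 0.62 ms to as much as about 20 ms. Because each attempt draws a fresh random value and the number of retries depends on load, a station cannot predict its access delay.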

Moreover, 802.11e only provides the hooks to implement QoS. It is up to the packet scheduler in the 802.11 access point not only to provide throughput, delay bound, and jitter bound, but also to utilize the channel efficiently. Within practical channel error rates, a scheduling scheme can deliver QoS ranging from a tighter form of service differentiation to explicit guarantees.

| Note | "Delay bound" refers to fixed and variable delay; "jitter bound" refers to variance in packet delay; and "delay-jitter bound" refers to a bound between the maximum and minimum delays that a flow's packets experience. |
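The three terms in the note can be made concrete by measuring them on a set of per-packet delays. This is a minimal sketch: the function name `delay_metrics`, the dictionary keys, and the sample delays are hypothetical.

```python
from statistics import pvariance


def delay_metrics(delays_ms):
    """Summarize one flow's per-packet delays (milliseconds)."""
    return {
        "max_delay": max(delays_ms),                      # checked against a delay bound
        "jitter_variance": pvariance(delays_ms),          # variance in packet delay
        "delay_jitter": max(delays_ms) - min(delays_ms),  # spread between extremes
    }


metrics = delay_metrics([20.0, 22.0, 24.0, 30.0])
```

A scheduler that promises bounds would compare each of these measured values against the limit agreed for the flow.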

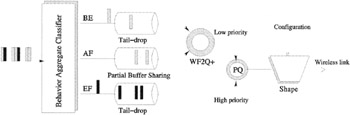

However, you can support QoS in a WLAN by placing a limitation on the rate of traffic sources, by using statistical differentiation such as DiffServ's priority queuing (PQ), weighted fair queuing (WFQ), and traffic shaping mechanisms. (See Fig. 16.2.) Let's look at what these differentiations mean.

Figure 16.2: DiffServ can limit the rate of the WLAN's traffic sources in order to support the network's QoS needs. It does this by dividing traffic into three service categories— BE (Best Effort), AF (Assured Forwarding), and EF (Expedited Forwarding). BE and AF traffic is forwarded using WFQ (Weighted Fair Queuing), and EF traffic is forwarded using PQ (Priority Queuing).
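The division described in the caption can be sketched as a lookup on the DiffServ code point (DSCP) carried in each IP header. The DSCP values below come from the DiffServ standards, not from this chapter, and the function names are hypothetical.

```python
EF_DSCP = 46                         # Expedited Forwarding code point
AF_DSCPS = {10, 12, 14, 18, 20, 22,  # AF11 through AF23
            26, 28, 30, 34, 36, 38}  # AF31 through AF43


def service_class(dscp):
    """Map a DSCP value to one of the three categories in Figure 16.2."""
    if dscp == EF_DSCP:
        return "EF"
    if dscp in AF_DSCPS:
        return "AF"
    return "BE"  # everything else is treated as Best Effort in this sketch


def scheduler_for(dscp):
    """Pick the forwarding mechanism the figure assigns to each category."""
    return "PQ" if service_class(dscp) == "EF" else "WFQ"
```

For instance, a voice packet marked with DSCP 46 would go to the priority queue, while unmarked traffic (DSCP 0) would be handled by WFQ.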

Priority queuing (PQ): This technique gives strict priority to important traffic by ensuring that prioritized traffic gets the fastest handling at each point where PQ is used. Priority queuing can flexibly prioritize according to network protocol (for example, IP, IPX, or AppleTalk), incoming interface, packet size, source/destination address, and so on. In PQ, each packet is placed in one of four queues (high, medium, normal, or low) based on an assigned priority; some systems use just three categories. Packets that are not classified by this priority list fall into the normal traffic queue. During transmission, the algorithm gives higher-priority queues absolute preferential treatment over low-priority queues. But although PQ is useful for making sure that mission-critical traffic traversing various wide area network (WAN) links gets priority treatment, most PQ techniques rely on static configurations and therefore do not automatically adapt to changing network requirements.
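A minimal sketch of the four-queue scheme follows. The class name, the `classify` callback, and the application labels are hypothetical; a real device would classify on header fields such as protocol or address.

```python
from collections import deque

PRIORITIES = ("high", "medium", "normal", "low")  # the four queues named in the text


class PriorityQueuing:
    def __init__(self, classify):
        self.classify = classify  # hypothetical callback: packet -> priority or None
        self.queues = {p: deque() for p in PRIORITIES}

    def enqueue(self, packet):
        priority = self.classify(packet)
        if priority not in self.queues:
            priority = "normal"   # unclassified traffic falls into the normal queue
        self.queues[priority].append(packet)

    def dequeue(self):
        # Strict priority: always serve the highest non-empty queue first.
        for p in PRIORITIES:
            if self.queues[p]:
                return self.queues[p].popleft()
        return None


rules = {"voip": "high", "video": "medium", "backup": "low"}  # static configuration
pq = PriorityQueuing(lambda pkt: rules.get(pkt["app"]))
for app in ["backup", "web", "voip", "video", "voip"]:
    pq.enqueue({"app": app})
order = [pq.dequeue()["app"] for _ in range(5)]
```

The packets leave as voip, voip, video, web, backup regardless of arrival order. The same strictness means a steady stream of high-priority traffic can starve the lower queues, and the static `rules` table illustrates why PQ does not adapt on its own.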

Weighted fair queuing (WFQ): For situations in which it is desirable to provide consistent response time to heavy and light network users alike without adding excessive bandwidth, the solution is flow-based WFQ, a queuing algorithm that creates bit-wise fairness by allowing each queue to be serviced fairly in terms of byte count. For example, if queue #1 has 100-byte packets and queue #2 has 50-byte packets, the WFQ algorithm will take two packets from queue #2 for every one packet from queue #1. This makes service fair for each queue: 100 bytes each time the queue is serviced. WFQ ensures that queues do not starve for bandwidth and that traffic gets predictable service. Low-volume traffic streams, which comprise the majority of the average network's traffic, receive increased service, transmitting the same number of bytes as high-volume streams. This behavior results in what appears to be preferential treatment for low-volume traffic, when in actuality it is creating fairness. WFQ is designed to minimize configuration effort, and it automatically adapts to changing network traffic conditions.
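The 100-byte versus 50-byte example can be reproduced with a byte-count scheduler. Note that the sketch below is a deficit-round-robin style approximation chosen for brevity, not a full WFQ implementation (which tracks per-packet finish times); the function name and the `quantum_bytes` parameter are hypothetical.

```python
from collections import deque


def byte_count_round_robin(queues, quantum_bytes, rounds):
    """Serve each queue up to `quantum_bytes` per round (deficit round robin).

    `queues` maps a queue name to a deque of packet sizes in bytes.
    Returns a list of (queue_name, packet_size) in transmission order.
    """
    deficit = {name: 0 for name in queues}
    sent = []
    for _ in range(rounds):
        for name, q in queues.items():
            if not q:
                deficit[name] = 0  # idle queues do not bank credit
                continue
            deficit[name] += quantum_bytes
            while q and q[0] <= deficit[name]:
                size = q.popleft()
                deficit[name] -= size
                sent.append((name, size))
    return sent


queues = {
    "queue1": deque([100] * 4),  # 100-byte packets
    "queue2": deque([50] * 8),   # 50-byte packets
}
order = byte_count_round_robin(queues, quantum_bytes=100, rounds=2)
```

Each round sends one packet from `queue1` and two from `queue2`, so both queues move 100 bytes per service turn, matching the example in the text.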

Traffic shaping: Refers to techniques that limit a traffic flow to less than the full bandwidth potential of the flow(s). Traffic shaping is used in many ways, for example, when a central site has a high-bandwidth link (e.g., a T-1 line) while remote sites have a low-bandwidth link (e.g., a DSL line that tops out at 384 Kbps). In such a case, it is possible for traffic from the central site to overflow the low-bandwidth link at the other end. With traffic shaping, however, you can pace traffic closer to 384 Kbps to avoid overflowing the remote link. Traffic above the configured rate is buffered for later transmission in order to maintain the configured rate.
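The pacing idea can be sketched by computing when each packet may leave the shaper. This is a simplified model that assumes an unlimited buffer; the function name `shape` and the 1,200-byte packet size are hypothetical, while the 384 Kbps rate comes from the example above.

```python
def shape(packets, rate_bps):
    """Compute departure times that hold a flow to `rate_bps`.

    `packets` is a list of (arrival_time_s, size_bytes), sorted by arrival.
    A packet leaves no earlier than it arrives and no earlier than the
    previous packet has finished at the shaped rate; otherwise it waits
    in the buffer.
    """
    departures = []
    link_free_at = 0.0
    for arrival, size in packets:
        start = max(arrival, link_free_at)
        link_free_at = start + size * 8 / rate_bps
        departures.append(start)
    return departures


# Burst of four 1,200-byte packets arriving back to back from the central site.
burst = [(0.0, 1200)] * 4
departures = shape(burst, rate_bps=384_000)
```

Each 1,200-byte packet takes 25 ms at 384 Kbps, so the burst is released at roughly 0, 25, 50, and 75 ms instead of all at once, which keeps the remote link from overflowing.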

The optimal solution would be to provide service differentiation that adapts itself to channel and traffic conditions while providing fair access to all wireless computing devices. This could be done by (1) QoS differentiation, (2) statistical QoS guarantees, and (3) absolute QoS guarantees.

To implement such a solution requires that there be a method to incorporate a distributed fair queuing technique into the QoS enabling mechanism. In distributed environments, like wireless networks, each wireless computing device operates independently of each other without a central control point where the complete knowledge of demand, available resources, and current channel characteristics could be known. Because of this distributed nature, the ideas of admission control and resource allocation need to evolve into a distributed methodology where each computing device operates independently and makes decisions cooperatively for admission, resource reservation, channel access, and data transmission.

| Note | A distributed environment distributes some of the MAC functionality onto a main processor system. This requires sufficient host processor and associated resources, such as memory, to be available to partition MAC functionality. A typical application would be Wi-Fi NICs, where the computing device's processor is available to run a driver. Thus in a distributed environment the MAC driver takes on more of the functionality of the MAC, including 802.11 MAC fragmentation/defragmentation, power management, encryption/decryption, and queue management. Also, the MAC driver requires more memory to support the power save queues, QoS queues, etc., and more MIPS (millions of instructions per second) from the system processor to perform tasks such as fragmentation/defragmentation and encryption/decryption. |

Next, adaptive protocols must be in place to assess, account, and adjust for the changes and fluctuation in wireless characteristics, mobility, error rate, and capacity. Also, energy efficiency in the MAC QoS protocol needs to be investigated. Finally, cross-layer QoS mapping should be investigated in order to accurately translate the user requirements into a suitable QoS parameter in the lower layers. And that is just for the wireless sector.
