DEPLOYING A WEB-MAS USING THE WEBPARSER

The WebParser has been implemented as a reusable Java software component. This allows us to:

- Modify the behavior of the parser flexibly, by changing only the rules.
- Integrate this component, as a new skill, into a specialized web agent.

We have built a Java application on top of the WebParser to test different rules against pages retrieved from the selected web sources. This allows the engineer to test the behavior of the parser before it is integrated as a new skill in the web agent.
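To make the idea of testing rules before integration concrete, the following Java sketch runs a candidate parser over several stored sample pages and reports the pages on which it extracts nothing. `RuleTestHarness`, `pagesWithoutResults`, and the stand-in parser in `main` are hypothetical illustrations, not part of the WebParser application.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical harness: checks a rule-configured parser against sample pages. */
final class RuleTestHarness {

    /** Returns the names of the sample pages for which the parser extracted no data. */
    static List<String> pagesWithoutResults(Function<String, List<String>> parser,
                                            Map<String, String> samplePages) {
        List<String> failures = new ArrayList<>();
        for (Map.Entry<String, String> page : samplePages.entrySet()) {
            List<String> extracted = parser.apply(page.getValue());
            if (extracted == null || extracted.isEmpty()) {
                failures.add(page.getKey());
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        // Stand-in parser: returns the text between each <b> and the following </b>.
        Function<String, List<String>> parser = html -> {
            List<String> out = new ArrayList<>();
            int from = 0;
            while (true) {
                int start = html.indexOf("<b>", from);
                if (start < 0) break;
                int end = html.indexOf("</b>", start);
                if (end < 0) break;
                out.add(html.substring(start + "<b>".length(), end));
                from = end + "</b>".length();
            }
            return out;
        };
        Map<String, String> pages = new LinkedHashMap<>(); // keeps insertion order
        pages.put("results.html", "<td><b>Headline</b></td>");
        pages.put("no-results.html", "<p>No news found</p>");
        System.out.println(pagesWithoutResults(parser, pages)); // [no-results.html]
    }
}
```

A page on which the rules extract nothing is not always an error (the query may simply have no answers), so the engineer still has to inspect the reported pages by hand.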

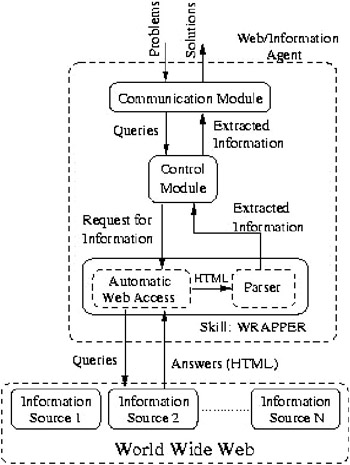

We used a simple model to design our web agents. This model allows us to migrate the designed agent to any predefined architecture that will ultimately be used to deploy the multi-agent web system. Figure 5 shows the model, which defines a basic web agent using the following modules:

- Communication module. This module defines the protocols and languages the agent uses to communicate with other agents in the system [e.g., KQML (Finin et al., 1994) or FIPA-ACL (FIPA.org, 1997)].
- Skill: wrapper. This basic skill must be implemented by every agent that retrieves information from the Web. This module must be able to perform the following tasks: access the web source automatically, retrieve the HTML answer, filter the information, and, finally, extract the knowledge.
- Control. This module coordinates and manages all the tasks in the agent.
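A minimal Java sketch of this three-module model follows, assuming one interface per module. `CommunicationModule`, `WrapperSkill`, `Control`, and the canned `FakeSkill` (which returns a fixed page instead of performing a network request) are hypothetical names; the four `WrapperSkill` methods mirror the four wrapper tasks listed above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of the basic web agent model (Figure 5). */
final class WebAgentModel {

    /** Communication module: would wrap KQML or FIPA-ACL messaging in a real agent. */
    interface CommunicationModule {
        void send(String receiver, String content);
    }

    /** Wrapper skill: the four tasks of an agent that retrieves information from the Web. */
    interface WrapperSkill {
        String access(String query);           // access the web source automatically
        String retrieve(String request);       // retrieve the HTML answer
        String filter(String html);            // filter the relevant information
        List<String> extract(String fragment); // extract the knowledge
    }

    /** Control module: coordinates the other modules while solving one query. */
    static final class Control {
        private final CommunicationModule comm;
        private final WrapperSkill skill;

        Control(CommunicationModule comm, WrapperSkill skill) {
            this.comm = comm;
            this.skill = skill;
        }

        List<String> handleQuery(String requester, String query) {
            String request = skill.access(query);
            String html = skill.retrieve(request);
            List<String> knowledge = skill.extract(skill.filter(html));
            comm.send(requester, String.join(";", knowledge));
            return knowledge;
        }
    }

    /** Canned wrapper for the example: no network access is performed. */
    static final class FakeSkill implements WrapperSkill {
        public String access(String query) {
            return "search?q=" + query;
        }

        public String retrieve(String request) {
            return "<html><body><ul><li>Headline A</li><li>Headline B</li></ul></body></html>";
        }

        public String filter(String html) {
            int start = html.indexOf("<ul>") + "<ul>".length();
            return html.substring(start, html.indexOf("</ul>"));
        }

        public List<String> extract(String fragment) {
            List<String> items = new ArrayList<>();
            for (String part : fragment.split("</li>")) {
                if (part.startsWith("<li>")) {
                    items.add(part.substring("<li>".length()));
                }
            }
            return items;
        }
    }

    public static void main(String[] args) {
        List<String> outbox = new ArrayList<>();
        Control control = new Control((to, msg) -> outbox.add(to + " <- " + msg), new FakeSkill());
        System.out.println(control.handleQuery("UserAgent", "bush")); // [Headline A, Headline B]
        System.out.println(outbox); // [UserAgent <- Headline A;Headline B]
    }
}
```

Because `Control` depends only on the `WrapperSkill` interface, the wrapper can be replaced without touching the communication or control code, which is the property a WebParser integration would rely on.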

Figure 5: Architecture for a Web Agent

To integrate the WebParser correctly into the web agent, or to replace the current wrapper skill if the agent is already deployed, the agent's functionality must be adapted to the behavior of the software component. To migrate successfully to the WebParser, the following must be changed or modified:

- The processes the agent uses to access the information source and to extract the knowledge (the Automatic Web Access and Parser modules, respectively).
- The answers retrieved by the agent (HTML pages) must be provided as input to the parser, together with the rules defined by the engineer.

Once the web agent is correctly designed, integrating the WebParser only requires defining the two sets of rules analyzed in the previous section and then using the API provided with the WebParser to execute this software module.
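The two rule sets are kept in separate files. The sketch below assumes a hypothetical `key=value` properties format for those files (the WebParser's own rule syntax is the one shown in Figure 7) and loads each file into a small Java record. `RuleLoader`, the record names, and keys such as `beginMark` are illustrative assumptions, not the WebParser API.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical loader for the HTML rule file and the DataOutput rule file. */
final class RuleLoader {

    /** Which HTML element holds the data (e.g. the first table). */
    record HtmlRule(String type, int position) {}

    /** Where the data sits inside that element and how it is delimited. */
    record DataOutputRule(String dataLevel, String beginMark, String endMark) {}

    static HtmlRule loadHtmlRule(Reader source) {
        Properties p = read(source);
        return new HtmlRule(required(p, "type"), Integer.parseInt(required(p, "position")));
    }

    static DataOutputRule loadDataOutputRule(Reader source) {
        Properties p = read(source);
        return new DataOutputRule(required(p, "dataLevel"),
                required(p, "beginMark"), required(p, "endMark"));
    }

    private static Properties read(Reader source) {
        Properties p = new Properties();
        try {
            p.load(source);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    /** Fails early, with a clear message, if a rule file omits a key. */
    private static String required(Properties p, String key) {
        String value = p.getProperty(key);
        if (value == null) {
            throw new IllegalArgumentException("missing rule key: " + key);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        // In an agent these Readers would be FileReaders over the two rule files.
        HtmlRule html = loadHtmlRule(new StringReader("type=table\nposition=1\n"));
        DataOutputRule data = loadDataOutputRule(
                new StringReader("dataLevel=1.2\nbeginMark=<td><b>\nendMark=</b></td>\n"));
        System.out.println(html); // HtmlRule[type=table, position=1]
        System.out.println(data); // DataOutputRule[dataLevel=1.2, beginMark=<td><b>, endMark=</b></td>]
    }
}
```

Keeping the rules outside the Java code is what lets the engineer adapt the agent to a changed web page by editing the rule files only.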

SimpleNews: A MetaSearch System for Electronic News

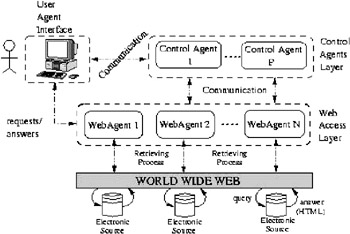

SimpleNews (Camacho et al., 2002a) is a meta-search engine that, by means of several specialized and cooperative agents, searches for news in a set of electronic newspapers. SimpleNews uses a very simple topology (as Figure 6 shows), in which all of the web agents solve the queries sent by the UserAgent. The motivation for designing and implementing SimpleNews was to obtain a web system that could be used to empirically evaluate and compare different multi-agent frameworks in the same domain. Currently, SimpleNews has been implemented using the Jade, JATLite, SkeletonAgent (Camacho et al., 2002b), and ZEUS frameworks.

Figure 6: SimpleNews Architecture

The SimpleNews engine uses a set of agents, each specialized in retrieving information from a particular electronic newspaper. SimpleNews can retrieve information from the selected electronic sources, filter the different answers from the specialized agents, and show them to the user. As Figure 6 shows, the architecture of SimpleNews can be structured in several interconnected layers:

- UserAgent Interface. This agent provides a simple graphical user interface that allows users to request news from the selected electronic newspapers, specifying the query, the number of solutions requested, and the agents to be consulted. The interface also lets the user see the current state of the agents (active, suspended, searching, or finished) and the messages and contents exchanged between them. Finally, all the answers retrieved by the agents are analyzed (only distinct answers are kept), and the UserAgent builds an HTML file, which is then displayed to the user.
- Control Access Layer. Jade, JATLite, or any other multi-agent architecture needs specific agents to manage, run, or control the whole system (AMS, ACC, and DF in Jade, or AMR in JATLite). This level represents the set of agents that the chosen architecture requires for SimpleNews to work correctly. It is the layer in which the two implemented versions of SimpleNews (built with the Jade and JATLite frameworks) differ.
- Web Access Layer. Finally, this layer represents the specialized web agents that retrieve information from specific electronic sources on the Web.
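The last step of the UserAgent, keeping only distinct answers and building the HTML page, can be sketched as follows. The sketch assumes that answers are plain strings and that two answers are duplicates when they are equal after trimming whitespace; `UserAgentReport` and its methods are hypothetical.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical sketch: merge agent answers, drop duplicates, render HTML. */
final class UserAgentReport {

    /** Merges the answers of all agents, keeping each distinct answer once. */
    static Set<String> distinctAnswers(List<List<String>> answersPerAgent) {
        Set<String> distinct = new LinkedHashSet<>(); // keeps first-seen order
        for (List<String> answers : answersPerAgent) {
            for (String answer : answers) {
                distinct.add(answer.trim());
            }
        }
        return distinct;
    }

    /** Builds the HTML page displayed to the user. */
    static String toHtml(Set<String> answers) {
        StringBuilder html = new StringBuilder("<html><body><ul>");
        for (String answer : answers) {
            html.append("<li>").append(escape(answer)).append("</li>");
        }
        return html.append("</ul></body></html>").toString();
    }

    /** Minimal HTML escaping so that a headline cannot break the generated page. */
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }

    public static void main(String[] args) {
        List<List<String>> answers = List.of(
                List.of("Headline A", "Headline B"),
                List.of("Headline B", "Headline C"));
        System.out.println(toHtml(distinctAnswers(answers)));
    }
}
```

A `LinkedHashSet` is used rather than a `HashSet` so that the page lists the answers in the order in which they arrived.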

The meta-search engine includes a UserAgent and six specialized web agents. The specialized web agents can be classified into the following categories:

- Financial information. Two web agents specialize in financial newspapers: Expansion (http://www.expansion.es) and CincoDias (http://www.cincodias.es).
- Sports information. Two other web agents specialize in sports newspapers: Marca (http://www.marca.es) and Futvol.com (http://www.futvol.com).
- General information. Finally, two more web agents retrieve information from general newspapers: El Pais (http://www.elpais.es) and El Mundo (http://www.elmundo.es).
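For illustration, the six agents and their categories can be captured in a Java enum, for example so that the UserAgent can select the agents to consult by category. The enum, its constant names, and `byCategory` are hypothetical; only the URLs and categories come from the list above.

```java
import java.util.EnumSet;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical registry of the six specialized web agents of SimpleNews. */
enum NewsAgent {
    EXPANSION("http://www.expansion.es", Category.FINANCIAL),
    CINCO_DIAS("http://www.cincodias.es", Category.FINANCIAL),
    MARCA("http://www.marca.es", Category.SPORTS),
    FUTVOL("http://www.futvol.com", Category.SPORTS),
    EL_PAIS("http://www.elpais.es", Category.GENERAL),
    EL_MUNDO("http://www.elmundo.es", Category.GENERAL);

    enum Category { FINANCIAL, SPORTS, GENERAL }

    final String url;
    final Category category;

    NewsAgent(String url, Category category) {
        this.url = url;
        this.category = category;
    }

    /** Agents to consult for a given category, in declaration order. */
    static List<NewsAgent> byCategory(Category category) {
        return EnumSet.allOf(NewsAgent.class).stream()
                .filter(agent -> agent.category == category)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(byCategory(Category.SPORTS)); // [MARCA, FUTVOL]
    }
}
```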

The selected electronic sources are Spanish to allow a better evaluation of the retrieval process, because it is difficult to evaluate the performance of a web agent when the queries are posed in a different language. Another reason is that most of these sources are widely used in Spain, so the information they contain should be sufficient to test an MAS built with these web agents.

Of all the available versions of SimpleNews, we selected the Jade 2.4 version for several reasons:

- This framework provides an excellent API, and it makes it easy to change or modify the agents' Java modules.
- Our performance evaluation showed that the system has good fault tolerance.
- It is a multi-agent framework widely used in this research field, so more researchers can analyze the possible advantages of integrating this module into their agent-based or web-based applications.

WebParser Integration into SimpleNews

This section provides a practical example of how the WebParser can be integrated into several web agents that belong to a deployed MAS (SimpleNews). The engineer must take the following steps to replace the current skill (wrapper) in the selected agent:

- Analyze the current wrapper used by the web agent, and identify which modules (or classes) are responsible for extracting the information.
- Analyze the web source and generate the set of rules needed to extract the information and output it in the appropriate format. The WebParser provides a simple Java object for storing the extracted information. If a more sophisticated structure is necessary, the engineer may need to program a method that translates these objects into the agent's internal representation.
- Replace the current wrapper module with the WebParser, and use the tested rules as input to the parser.
- Test the web agent. If the integration is successful, the agent's behavior does not change in any of the situations it handles (information not found, server down, etc.).
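The translation method mentioned in the second step might look like the sketch below. It assumes that the parser returns an ordered list of strings (`ParsedData`, a hypothetical stand-in for the WebParser's simple result object) and converts it into a hypothetical `NewsItem` record standing in for the agent's internal representation.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical translator from the parser's result object to the agent's own types. */
final class ResultTranslator {

    /** Stand-in for the parser's simple result object: an ordered list of values. */
    record ParsedData(List<String> values) {}

    /** Stand-in for the agent's internal representation of one news item. */
    record NewsItem(String source, int rank, String headline) {}

    static List<NewsItem> toNewsItems(String source, ParsedData data) {
        List<NewsItem> items = new ArrayList<>();
        int rank = 1;
        for (String value : data.values()) {
            String headline = value.strip();
            if (!headline.isEmpty()) { // skip empty cells left by the page layout
                items.add(new NewsItem(source, rank++, headline));
            }
        }
        return items;
    }

    public static void main(String[] args) {
        ParsedData data = new ParsedData(List.of(" Headline A ", "", "Headline B"));
        toNewsItems("www-ElPais", data).forEach(System.out::println);
    }
}
```

Isolating the conversion in one method keeps the rest of the agent unaware that its wrapper has been replaced.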

For instance, assume that we want to integrate the WebParser into the specialized web agent www-ElPais, which belongs to SimpleNews. The previous steps are carried out as follows:

- The architecture of this agent is quite similar to the one shown in Figure 5. The wrapping process is performed by several Java classes that belong to a specialized package (agent wrapper).
- The web source is shown in Figure 8. The left side of the figure shows the answer returned by this newspaper's search engine for the query "bush"; the right side shows the corresponding HTML code. Note that the information is stored in a nested table (our system only retrieves news headlines). Figure 7 shows the HTML and DataOutput rules needed to extract the information.

  - HTML rule: type = table, position = 1
  - DataOutput rule: data Level = 1.2, begin-mark = `<td><b>`, end-mark = `</b></td>`, attrib = {null}, distrib = (null), data type = (str), data struc = sortlist

  Figure 7: HTML and DataOutput Rules to Extract the Headlines from the Web Page Response

  Figure 8: Web Page Example and HTML Code Provided by http://www.elpais.es

- Once the previous rules have been tested (using several pages retrieved from the information source) and the different situations have been considered, the classes or package identified in the first step are replaced by the WebParser and the related rules.
- Finally, the web agent is run against tests that had been used previously, and the results are compared.

These two rules are stored in two separate files, which the WebParser uses whenever the agent's wrapping skill extracts knowledge from the source's response. The engineer completes the integration by replacing the current Java classes in the agent with a single method invocation that takes several parameters (such as the names of the rules and the page to be filtered).
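The following Java sketch shows, in simplified form, what applying the Figure 7 rules amounts to: select the first top-level `<table>` element (HTML rule type = table, position = 1) and collect the text between each begin-mark `<td><b>` and the next end-mark `</b></td>` (DataOutput rule). It does not model the data Level, attrib, or distrib fields, matches tags case-sensitively, and is not the WebParser's actual algorithm; `MarkExtractor.extract` is a hypothetical method that only illustrates the kind of single invocation described above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, simplified application of an HTML rule plus a DataOutput rule. */
final class MarkExtractor {

    /** Returns the position-th (1-based) top-level table element, or "" if absent. */
    static String nthTable(String html, int position) {
        int depth = 0;
        int seen = 0;
        int start = -1;
        for (int i = 0; i < html.length(); i++) {
            if (html.startsWith("<table", i)) {
                if (depth == 0) {
                    seen++;
                    if (seen == position) {
                        start = i;
                    }
                }
                depth++;
            } else if (html.startsWith("</table>", i)) {
                depth = Math.max(0, depth - 1);
                if (depth == 0 && start >= 0) {
                    return html.substring(start, i + "</table>".length());
                }
            }
        }
        return "";
    }

    /** Collects the text between each beginMark and the next endMark, in document order. */
    static List<String> between(String fragment, String beginMark, String endMark) {
        List<String> values = new ArrayList<>();
        int from = 0;
        while (true) {
            int begin = fragment.indexOf(beginMark, from);
            if (begin < 0) break;
            int contentStart = begin + beginMark.length();
            int end = fragment.indexOf(endMark, contentStart);
            if (end < 0) break;
            values.add(fragment.substring(contentStart, end).trim());
            from = end + endMark.length();
        }
        return values;
    }

    /** The single call a wrapper skill would make (hypothetical signature). */
    static List<String> extract(String page, int tablePosition, String beginMark, String endMark) {
        return between(nthTable(page, tablePosition), beginMark, endMark);
    }

    public static void main(String[] args) {
        String page = "<html><body><table><tr><td>"
                + "<table><tr><td><b>Headline A</b></td></tr>"
                + "<tr><td><b>Headline B</b></td></tr></table>"
                + "</td></tr></table><table><tr><td><b>Ad</b></td></tr></table></body></html>";
        System.out.println(extract(page, 1, "<td><b>", "</b></td>")); // [Headline A, Headline B]
    }
}
```

Tracking the nesting depth matters because the headlines sit in a table nested inside the first one; stopping at the first `</table>` would cut the outer table short.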

Verification and Test

This section evaluates how complex it is to integrate the WebParser into a deployed system. To do so, the previous process was repeated for every agent in the selected version of SimpleNews, which uses six web agents specialized in three types of news. The agents were split into two groups of three, and each group was modified by a different programmer. We evaluated seven phases:

- Architecture Analysis. The engineer studies and analyzes the architecture of the implemented MAS.
- WebParser Analysis. The engineer studies the API (http://scalab.uc3m.es/~agente/Projects/WebParser/API) provided by the WebParser in order to integrate the new software module correctly.
- Web Source Analysis. The possible answers from the web source are analyzed.
- Generate/Test Rules. The engineer generates the HTML and DataOutput rules and tests them with the WebParser application, using some of the possible HTML responses from the web source as examples.
- Change Skill. When the rules work correctly, the current Java classes are replaced by the WebParser.
- Test Agent. The agent with the integrated parser is tested in several possible situations.
- Test Multi-Agent System. Finally, all the web agents are tested. If the integration process is successful, the new system should not show any differences from the original.
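The Test Agent and Test Multi-Agent System phases amount to a regression check: run the original wrapper and the WebParser-based wrapper on the same stored pages and compare their outputs. In this sketch both wrappers are passed in as functions; `RegressionCheck` and `differingPages` are hypothetical names. An empty result means no observable difference on the test pages, including pages on which no information is found.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.TreeMap;
import java.util.function.Function;

/** Hypothetical regression check between the old and the new wrapper. */
final class RegressionCheck {

    /** Names of the test pages on which the two wrappers produce different output. */
    static List<String> differingPages(Map<String, String> testPages,
                                       Function<String, List<String>> oldWrapper,
                                       Function<String, List<String>> newWrapper) {
        List<String> differences = new ArrayList<>();
        for (Map.Entry<String, String> page : testPages.entrySet()) {
            List<String> before = oldWrapper.apply(page.getValue());
            List<String> after = newWrapper.apply(page.getValue());
            if (!Objects.equals(before, after)) { // null-safe list comparison
                differences.add(page.getKey());
            }
        }
        return differences;
    }

    public static void main(String[] args) {
        Map<String, String> pages = new TreeMap<>(Map.of(
                "found.html", "<td><b>Headline</b></td>",
                "not-found.html", "<p>No results</p>"));
        Function<String, List<String>> oldWrapper =
                html -> html.contains("<b>") ? List.of("Headline") : List.<String>of();
        Function<String, List<String>> newWrapper =
                html -> html.contains("<td><b>") ? List.of("Headline") : List.<String>of();
        System.out.println(differingPages(pages, oldWrapper, newWrapper)); // []
    }
}
```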

Table 1 shows the average measurements obtained from two students who used the deployed system to change the skill of all the web agents. Each programmer modified three agents (one of each type), and the table shows the average effort for each integration phase. Note that the table columns follow the order in which the agents were modified: the General Information agents (www-ElPais, www-ElMundo) were modified first. The table shows that, once the multi-agent system has been analyzed (and the functionality and software modules of the agents are properly understood by the engineers), changing the current wrapping skill took only a few hours for the first agent. Once this process had been completed successfully, each subsequent agent needed only about one hour to build the rules, change the skill, and test the new agent. These averages were measured on already deployed agents, so they include the effort of changing the implemented modules. When building new agents from scratch, or with an MAS framework, the Change Skill phase is not needed, so implementing the wrapper skill of an agent could take about 30 minutes. Therefore, the time and effort needed to program the agent (and to reprogram these classes when the sources change) are greatly reduced.

Table 1: Average Effort (in Hours) per Integration Phase

| Phase | General Information | Financial Information | Sports Information |
|---|---|---|---|
| Analyze SimpleNews | 13.4 | | |
| Analyze WebParser | 34.2 | | |
| Analyze Web Source | 1.6 | 0.4 | 0.7 |
| Generate/Test Rules | 1 | 0.2 | 0.15 |
| Change Agent Skill | 4.3 | 0.3 | 0.3 |
| Test Web Agent | 0.9 | 0.5 | 0.3 |
| Test MAS | 2 | 0.6 | 0.3 |
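As a quick check of the figures quoted in the text, summing the Generate/Test Rules, Change Agent Skill, and Test Web Agent rows of Table 1 per column gives the per-agent effort below (assuming the table values are hours); $T'$ drops the Change Agent Skill phase, which is not needed for new agents:

```latex
\begin{aligned}
T_{\text{general}}    &= 1 + 4.3 + 0.9 = 6.2\ \text{h}\\
T_{\text{financial}}  &= 0.2 + 0.3 + 0.5 = 1.0\ \text{h}\\
T_{\text{sports}}     &= 0.15 + 0.3 + 0.3 = 0.75\ \text{h}\\
T'_{\text{financial}} &= 0.2 + 0.5 = 0.7\ \text{h}\\
T'_{\text{sports}}    &= 0.15 + 0.3 = 0.45\ \text{h}
\end{aligned}
```

These values agree with the text: a few hours for the first agent, about one hour or less for each later agent, and, without the Change Skill phase, between about 27 and 42 minutes, close to the 30 minutes quoted for new agents.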
