Simplest Threading Example: Using the Process Thread Pool


I’ve written the simplest threading example that I could think of so you can get your feet wet without freaking out. In particular, this example will help you understand how your code interacts with a multithreaded environment that you didn’t write yourself and that interacts with your code in new and nonintuitive ways. Remember, threads are all around you in Windows. Even though you don’t think you’ve written any threading code, your code lives in a multithreaded environment, which you must understand if you are to successfully coexist with it.

A simple threading example (can there be such a thing?) starts here.

We saw in the previous chapter that a .NET process contains a pool of threads that a programmer can use to make asynchronous method calls. Although that case is quite useful, that’s not the only thing you can do with the thread pool. It’s available to your applications for any purpose you might like to use it for. Using the thread pool allows you to reap most of the benefits of threading in common situations, without requiring you to write all the nasty code for creating, pooling, and destroying your own threads. It’s important to understand that the thread pool manager is a separate piece of logic that sits on top of the basic threading system. You can use the underlying system without going through the pool manager if you don’t want the latter’s intercession, as I’ll demonstrate in the last example in this chapter.

Every process contains a pool of threads that your program can use.

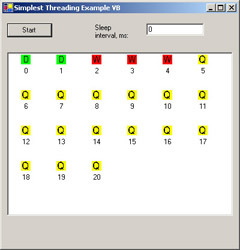

Figure 9-2 shows my simple sample application. The program uses the thread pool to execute tasks in the background, and the list view shows you the progress of the various tasks.

Figure 9-2: Simplest threading sample application.

When the user clicks the Start button, the sample application calls the function System.Threading.ThreadPool.QueueUserWorkItem, thereby telling the thread pool manager, “Hey, here’s a piece of program logic that I’d like executed in the background on another thread, please.” This code is shown in Listing 9-1. I pass this function a delegate (a pointer to a method on an object; see Chapter 8) containing the code that I want the background thread to execute. In this case, it points to a method on my form named DoSomeWork, shown in Listing 9-2. You can see that the target function simply wastes time in a tight loop to simulate actually doing something. Just before making the call, I place a yellow Q icon in the list view box to show you that the request has been queued. The thread pool manager puts each delegate in its list of queued work items, from which it will assign the delegate to one of its worker threads for execution. The sample program repeats the request 21 times.

I put a unit of work into the thread pool for background processing.

Listing 9-1: Simplest threading sample application code that queues a work item.

```vb
Private Sub Button1_Click(ByVal sender As System.Object, _
        ByVal e As System.EventArgs) Handles Button1.Click
    ' Enqueue 21 work items
    Dim i As Integer
    For i = 0 To 20
        ' Set icon to show our enqueued status
        ListView1.Items.Add(i.ToString(), 0)
        ' Actually enqueue the work item, passing the delegate of our
        ' callback function. The second variable is a state object that
        ' gets passed to the callback function so that it knows what it's
        ' working on. In this case, it's just its index in the list view
        ' so it knows which icon to set.
        System.Threading.ThreadPool.QueueUserWorkItem( _
            AddressOf DoSomeWork, i)
    Next i
End Sub
```

Listing 9-2: Simplest threading application target function that does the work.

```vb
Public Sub DoSomeWork(ByVal state As Object)
    ' Note: ListView1 is updated from a pool thread without any
    ' synchronization. This is deliberate; see the thread-safety
    ' discussion later in this section.

    ' We've started our processing loop. Set our icon to show
    ' that we're in-process.
    ListView1.Items(CInt(state)).ImageIndex = 1
    ' Perform a time-wasting loop to simulate real work
    Dim i As Integer
    For i = 1 To 1000000000
    Next i
    ' Set icon to show we're sleeping. Then sleep for the
    ' number of milliseconds specified by the user
    ListView1.Items(CInt(state)).ImageIndex = 2
    System.Threading.Thread.Sleep(CInt(TextBox1.Text))
    ' We're finished. Set our icon to show that.
    ListView1.Items(CInt(state)).ImageIndex = 3
End Sub
```
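If you want to experiment with the same pattern outside Windows Forms, here is a rough Python analogue of Listings 9-1 and 9-2. It is a sketch under stated assumptions, not a translation of the sample: Python’s `concurrent.futures.ThreadPoolExecutor` stands in for the .NET thread pool, a plain list of status letters (Q, W, S, D) stands in for the list view icons, and the names `do_some_work`, `NUM_ITEMS`, and `SLEEP_SECONDS` are made up for illustration. As in the original, each work item receives its index as the state argument.

```python
import time
from concurrent.futures import ThreadPoolExecutor

NUM_ITEMS = 21          # same number of work items as Listing 9-1
SLEEP_SECONDS = 0.05    # hypothetical stand-in for the value typed into TextBox1

# One status letter per work item: Q = queued, W = working, S = sleeping, D = done
status = ["Q"] * NUM_ITEMS


def do_some_work(state):
    """Rough analogue of DoSomeWork; 'state' is this item's index."""
    status[state] = "W"
    for _ in range(200_000):      # short busy loop standing in for the original's long one
        pass
    status[state] = "S"
    time.sleep(SLEEP_SECONDS)     # block this thread, like Thread.Sleep
    status[state] = "D"
    return state


# The executor chooses a default worker count; each submit() queues one work item.
with ThreadPoolExecutor() as pool:
    futures = [pool.submit(do_some_work, i) for i in range(NUM_ITEMS)]
    results = [f.result() for f in futures]

print("".join(status))   # every item ends as "D"
```

Each work item writes only its own slot of `status`, so the items never update the same element. That is a different situation from the shared-resource problem discussed later in this section.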

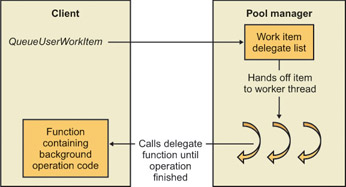

It’s entirely up to the pool manager how many worker threads to create and have competing for the CPU simultaneously. The developers of this system component put a great deal of thought into making the best use of available CPU cycles. The pool manager uses an internal algorithm to figure out the optimum number of threads to deploy in order to get all of its jobs done in the minimum amount of time. When the pool manager hands off the delegate to one of its worker threads, the thread calls the target function in the delegate, which contains the code that performs the work item. This process is shown in Figure 9-3.
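The hand-off in Figure 9-3, a fixed set of pooled threads pulling queued items and calling the target function, can be sketched in Python as well. One caveat up front: Python’s `ThreadPoolExecutor` does not tune its thread count at run time the way the text describes the .NET pool manager doing. Instead you give it a ceiling (`max_workers`, here a hypothetical 3 chosen to echo Figure 9-2), and it never runs more threads than that. The sketch records which worker threads ran the items and the peak number running at once.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_WORKERS = 3   # hypothetical ceiling, not something the .NET pool exposes this way

lock = threading.Lock()
running = 0           # work items executing right now
peak = 0              # highest value 'running' reached
worker_names = set()  # names of the pool threads that executed items


def work_item(index):
    global running, peak
    with lock:
        running += 1
        peak = max(peak, running)
        worker_names.add(threading.current_thread().name)
    time.sleep(0.02)              # pretend to do something
    with lock:
        running -= 1
    return index * index


with ThreadPoolExecutor(max_workers=MAX_WORKERS) as pool:
    squares = list(pool.map(work_item, range(21)))

print(len(worker_names), peak)    # neither exceeds MAX_WORKERS
```

Twenty-one work items are executed by at most three threads, each of which picks up a new item as soon as it finishes the previous one.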

Figure 9-3: Pooled thread executing a delegate.

At the start of the target function (shown previously in Listing 9-2), I change the list view icon to a red W so that you can see when the work item is dequeued and the pool thread starts working on it. At the end of the target function, I change the icon to a green D so that you can see when it’s done. Since it’s fairly common to use the same delegate function to handle work items on a number of threads, as I’ve done here, you’ll sometimes want to provide the function with information about the data set that you want it to work on in this particular instance. The method QueueUserWorkItem accepts as its second parameter an object of any type, which it then passes to the work item target function. To demonstrate passing state information to the target function, I pass the sequence number of each request, which the target function uses to find and update its own item in the list view, so that you can see that it got there.
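Because the state argument can be any object, a work item can carry a description of exactly which data it should handle. This hypothetical Python sketch passes each work item a tuple of (index, slice of data) and has the one shared target function sum its own slice. The data set, chunk size, and the name `sum_chunk` are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

data = list(range(1, 101))   # hypothetical data set: the numbers 1 through 100
CHUNK = 25                   # hypothetical slice size per work item


def sum_chunk(state):
    """Shared target function; the state object says which slice this call owns."""
    index, chunk = state
    return index, sum(chunk)


# Build one state object per work item: (index, that item's slice of the data).
work = [(i, data[i * CHUNK:(i + 1) * CHUNK]) for i in range(len(data) // CHUNK)]

with ThreadPoolExecutor() as pool:
    partials = dict(pool.map(sum_chunk, work))

print(partials)                  # {0: 325, 1: 950, 2: 1575, 3: 2200}
print(sum(partials.values()))    # 5050
```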

The user interface of the sample application tracks the process of a task through a thread.

The pool manager is quite clever. When I run this sample application on my big server machine, with two 2-GHz processors and 2 GB of RAM, it runs only three worker threads at a time, as you can see in Figure 9-2. Windows correctly figures that, since each thread is attempting to run to completion without blocking, adding more threads than this and swapping between them would only slow down the process. Suppose we have five tasks, each of which takes 10 seconds to do. If we switch tasks after every second of work, each pass through all five tasks takes 5 seconds; after nine passes (45 seconds) every task still has 1 second left, so the first task doesn’t finish until 46 seconds into the process. If, however, we plow straight through the first one, we get the first result after 10 seconds, the second result after 20 seconds, and so on. We still use the same amount of time to accomplish all the tasks (actually a little less, because we don’t have as much swapping overhead), but we get some results sooner. You will sometimes hear people disparage multithreading as “nothing but smoke and mirrors,” meaning that it sometimes doesn’t get an individual job done any faster. In the case of a task that proceeds directly to completion, they’re right.
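The 46-second figure follows from a little arithmetic, which this short Python simulation reproduces. It assumes a single CPU, five 10-second tasks, 1-second time slices, and zero switching overhead. Real switches cost something, which is why plowing straight through comes out slightly ahead overall. The function names are made up for this sketch.

```python
def round_robin_finish_times(durations, quantum=1):
    """Finish time of each task when the CPU switches tasks every 'quantum' seconds."""
    remaining = list(durations)
    finish = [None] * len(durations)
    clock = 0
    while any(r > 0 for r in remaining):
        for i in range(len(remaining)):
            if remaining[i] > 0:
                step = min(quantum, remaining[i])
                clock += step
                remaining[i] -= step
                if remaining[i] == 0:
                    finish[i] = clock
    return finish


def run_to_completion_finish_times(durations):
    """Finish time of each task when each one runs straight through, in order."""
    finish, clock = [], 0
    for d in durations:
        clock += d
        finish.append(clock)
    return finish


tasks = [10] * 5
print(round_robin_finish_times(tasks))         # [46, 47, 48, 49, 50]
print(run_to_completion_finish_times(tasks))   # [10, 20, 30, 40, 50]
```

Both schedules finish the last task at 50 seconds; only the time to the first results differs.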

The pool manager decides the number of worker threads to assign to its queued tasks.

But most computing tasks don’t work that way. If one thread has to wait for something else to happen before it can finish its task (a remote machine to answer a request or a disk I/O operation to complete, for example), we could improve overall throughput by allowing other threads to use the CPU while the first one waits. This is the main advantage of preemptive multithreading: it allows you to make efficient use of CPU cycles that would otherwise be wasted, without writing the scheduling code yourself. The sample program demonstrates this case as well. You will see in Listing 9-2 that near the end of the target function I’ve placed a call to System.Threading.Thread.Sleep(). This method tells the operating system to block the calling thread for the specified number of milliseconds. You’ll notice a blue S icon appear in the list view to tell the user that the thread is sleeping. The operating system removes a sleeping thread from the ready list and does not assign it any more CPU cycles until the sleep interval expires, at which time the operating system puts the thread back into the ready list to compete for CPU cycles again. There are other ways for a thread to enter this efficient waiting state, and other criteria that you can specify to cause your thread to leave it, as I will discuss later in this chapter. When I enter a reasonable number of milliseconds in this sample program, say, 1000, I find that the pool manager starts more threads, usually six or eight, and assigns them to the task of executing my work items. The pool manager noticed that its original threads were sleeping and that CPU cycles were going to waste. It figured, again correctly, that it made sense to deploy more threads to use the available CPU cycles on other work items.
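Here is a rough Python demonstration of why blocked threads leave room for more workers. The tasks only sleep, standing in for a wait on a disk or a remote machine. In the standard CPython build that choice matters: the global interpreter lock keeps CPU-bound Python threads from running in parallel, while a sleeping thread releases it. The worker counts (2 and 8) and the helper names are arbitrary, and exact timings will vary from machine to machine.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_task(_):
    time.sleep(0.2)    # waits without using the CPU, like Thread.Sleep


def elapsed_with(workers, count=8):
    """Wall-clock time to finish 'count' blocking tasks with 'workers' threads."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(blocking_task, range(count)))
    return time.perf_counter() - start


few = elapsed_with(2)    # about four rounds of 0.2 s
many = elapsed_with(8)   # about one round of 0.2 s
print(f"2 workers: {few:.2f} s, 8 workers: {many:.2f} s")
```

With only two threads, most of the elapsed time is spent with both threads asleep; adding threads lets the waits overlap.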

If the threads stop to wait for something else, the pool manager spins up more of them to use CPU time more effectively.

This example was easy to write and, I hope, easy for newcomers to understand. You should now be able to see how threads run and how they get swapped in and out. However, don’t let the simplicity of this example fool you into thinking that threading is easy. There are at least two major classes of problems that this simple program hasn’t dealt with. The first is called thread safety, and refers to the case in which more than one thread tries to access the same resource, say, an item of data, at the same time. Looking at the code in Listing 9-2, we can see that the target function calls methods on the control ListView1 to set the icon representing that work item’s state. What happens if two threads try to do this at the same time: is it safe or not? Usually it isn’t, unless we write code to make it so, which I haven’t done here. It’s a bug waiting to happen, but on the systems on which I’ve tested it, the timing of the worker threads is different enough that I haven’t encountered a conflict. There’s no guarantee, however, that the pool manager’s strategy or other thread activity in a particular system won’t change the thread timing enough to cause a conflict. I left this problem in on purpose so you could see how code that looks harmless can hide real trouble in multithreaded cases. You must consider thread safety in any multithreaded operation, so I’ll discuss this in my next example.
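The real fix is saved for the next example, so this is only a generic Python sketch of one common remedy: route every update to the shared data through a lock, so that only one thread at a time can perform the read-modify-write. The `StatusBoard` class and its method are hypothetical, and the sketch does not model the ListView control itself.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class StatusBoard:
    """Shared state guarded by a lock so only one thread updates it at a time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.updates = 0
        self.status = {}

    def set_status(self, item, letter):
        with self._lock:                 # the whole read-modify-write runs under the lock
            self.status[item] = letter
            self.updates += 1


board = StatusBoard()


def work(item):
    for letter in "WSD":                 # working, sleeping, done
        board.set_status(item, letter)


with ThreadPoolExecutor(max_workers=8) as pool:
    list(pool.map(work, range(21)))

print(board.updates)   # 63
```

Without the lock, `self.updates += 1` is a separate read, add, and write, and two threads can interleave between those steps and lose an update.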

This example looks cool, but it doesn’t cover the problem of two threads accessing the same data.

The second problem that this simple example doesn’t cover is control over your threads. The pool manager uses different optimization strategies in different environments. If I run this same example program on my notebook PC, with only one CPU running at 800 MHz and only 512 MB of RAM, the pool manager immediately assigns 12 threads and gives each a work item. This thread management doesn’t appear optimal for overall throughput, for the reasons I explained before. I think the reason that the pool manager changes its strategy is that this less powerful machine is subject to more contention for the fewer available CPU cycles. Since all the threads in the machine are fighting over fewer cycles, the pool manager creates more of its own threads to increase its overall share. That’s good game theory from one app’s own standpoint, but it probably won’t work well when several apps all try the same strategy. The pool manager also doesn’t employ certain strategies at all, such as changing thread priorities. If you don’t like the way the pool manager operates, you’ll have to write your own code that creates threads, assigns them to tasks, and destroys them when you are finished with them. I describe this case in the last sample of the chapter.
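For contrast with the pool, here is a minimal Python sketch of doing the thread management by hand: create a fixed set of threads, feed them work through a queue, and shut them down with one sentinel per thread. The function name, the doubling “work,” and the default thread count are hypothetical. Python’s `threading` module has no setting for thread priority, so the sketch covers only creation, assignment, and teardown, not the priority control mentioned above.

```python
import queue
import threading


def run_with_own_threads(items, num_threads=4):
    """Create, feed, and tear down worker threads by hand (no pool manager)."""
    tasks = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            item = tasks.get()
            if item is None:           # sentinel: no more work for this thread
                tasks.task_done()
                return
            value = item * 2           # hypothetical unit of work
            with results_lock:
                results.append(value)
            tasks.task_done()

    threads = [threading.Thread(target=worker, name=f"worker-{n}")
               for n in range(num_threads)]
    for t in threads:
        t.start()                      # we decide how many threads exist
    for item in items:
        tasks.put(item)                # we decide how work is handed out
    for _ in threads:
        tasks.put(None)                # one sentinel per thread, queued after all work
    for t in threads:
        t.join()                       # wait for every thread to exit
    return sorted(results)


print(run_with_own_threads(range(10)))   # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
```

Because the queue is first-in, first-out and the sentinels go in after all the real items, no thread exits while work is still waiting.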

This example doesn’t cover creating and scheduling your own threads, either.

My customers report that they like the thread pool very much. They use it for something like 70 or 80 percent of their multithreading needs. Breaking it down further, people who describe themselves as application programmers use it exclusively, while those who consider themselves system programmers use it about half the time. Always consider the thread pool your first choice.
