Code Access Security


At the beginning of the PC era, very few users installed and ran code that they hadn’t purchased from a store. The fact that a manufacturer had gotten shelf space at CompUSA or the late Egghead Software pretty much assured a customer that the software in the box didn’t contain a malicious virus, as no nefarious schemer could afford that much marketing overhead. And, as with a bottle of Tylenol, the shrink-wrap on the package assured a customer that it hadn’t been tampered with since the manufacturer shipped it. While the software could, and probably did, have bugs that would occasionally cause problems, you were fairly comfortable that it wouldn’t demolish your unbacked-up hard drive just for the pleasure of hearing you scream.

Customers generally feel that software purchased from a store is safe for them to run.

This security model doesn’t work well today because most software doesn’t come from a store anymore. You install some large packages, like Microsoft Office or Visual Studio, from a CD, although I wonder how much longer even that will last as high-speed Internet connections proliferate. But what about updates to, say, Internet Explorer? A new game based on Tetris? Vendors love distributing software over the Web because it’s cheaper and easier than cramming it through a retail channel, and consumers like it for the convenience and lower prices. And Web code isn’t limited to what you’ve conventionally thought of as a software application. Web pages contain scripts that do various things, not all of them good. Even Office documents that people send you by e-mail can contain scripting macros. Numerically, except perhaps for the operating system, your computer probably contains more code that you downloaded from the Web than code you installed from a CD you purchased, and the ratio is only going to increase.

However, most software today comes from the Web.

While distributing software over the Web is great from an entrepreneurial standpoint, it raises security problems that we haven’t had before. It’s now much easier for a malicious person to spread evil through viruses. It seems that not a month goes by without some new virus alert on CNN, so the problem is obviously bad enough to regularly attract the attention of mainstream media. Security experts tell you to run only code sent by people you know well, but who else is an e-mail virus going to propagate to? And how can we try software from companies we’ve never heard of? It is essentially impossible for a user to know when code downloaded from the Web is safe and when it isn’t. Even trusted and knowledgeable users can damage systems when they run malicious or buggy software. You could clamp down and not let your users run any code that your IT department hasn’t personally installed. Try it for a day and see how much work you get done. We’ve become dependent on Web code to a degree you won’t believe until you try to live without it. The only thing that’s kept society as we know it from collapsing is the relative scarcity of people with the combination of malicious inclination and technical skills to cause trouble.

It is essentially impossible for a user to know when code from the Web is safe and when it isn’t.

Microsoft’s first attempt to make Web code safe was its Authenticode system, introduced with the ActiveX SDK in 1996. Authenticode allowed manufacturers to attach a digital signature to downloaded controls so that the user would have some degree of certainty that the control really was coming from the person who said it was and that it hadn’t been tampered with since it was signed. Authenticode worked fairly well to guarantee that the latest proposed update to Internet Explorer really did come from Microsoft and not some malicious spoofer. But Microsoft tried to reproduce the security conditions present in a retail store, not realizing that this wasn’t sufficient in the modern Internet world. The cursory examination required to get a digital certificate didn’t assure a purchaser that a vendor wasn’t malicious (like idiots, VeriSign gave me one, for only $20), in the way that the presence of a vendor’s product on a store shelf or in a mail-order catalog more or less did. Worst of all, Authenticode was an all-or-nothing deal. It told you with some degree of certainty who the code came from, but your only choice was to install it or not. Once the code was on your system, there was no way to keep it from harming you. Authenticode isn’t a security system; it’s an accountability system. It doesn’t keep code from harming you; it just ensures that you know who to kill if it does.

The Authenticode system doesn’t protect you from harm; it merely identifies the person harming you.
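The following toy sketch shows what a signature check does and does not tell you. It uses textbook RSA over a SHA-256 digest with a deliberately tiny, insecure key built from two Mersenne primes. Real Authenticode uses X.509 certificates and PKCS #7 signature blocks, and this sketch makes no attempt to reproduce them. The functions `sign`, `verify`, and `install_decision` are hypothetical names chosen for illustration.

```python
import hashlib

# Toy RSA key from two Mersenne primes: far too small and structured for real
# use, chosen only so the arithmetic is easy to follow.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (needs Python 3.8+)


def digest(code: bytes) -> int:
    """Hash the code and reduce the digest into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(code).digest(), "big") % N


def sign(code: bytes) -> int:
    """Vendor side: sign the hash with the private exponent."""
    return pow(digest(code), D, N)


def verify(code: bytes, signature: int) -> bool:
    """User side: check the signature with the public exponent."""
    return pow(signature, E, N) == digest(code)


def install_decision(code: bytes, signature: int) -> str:
    # The only choice on offer: install everything, or nothing at all.
    return "install" if verify(code, signature) else "reject"


control = b"bytes of a downloaded control"
sig = sign(control)
print(install_decision(control, sig))               # install
print(install_decision(control + b" + virus", sig))  # reject: tampered in transit
evil = b"erase the hard drive"
print(install_decision(evil, sign(evil)))           # install: signed by its own author
```

The last line carries the point. A malicious control signed by its own author verifies perfectly well. The check answers "who signed this?" and "was it altered?", but never "is it safe to run?"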

What we really want is a way to restrict the operations that individual pieces of code can perform on the basis of the level of trust that we have in that code. You allow different people in your life to have different levels of access to your resources according to your level of trust in them: a (current) spouse can borrow your credit card; a friend can borrow your older car; a neighbor can borrow your garden hose. We want our operating system to support the same sort of distinctions. For example, we might want the operating system to enforce a restriction that a control we download from the Internet can access our user interface but can’t access files on our disk, unless it comes from a small set of vendors who we’ve learned to trust. The Win32 operating system didn’t support this type of functionality, as it wasn’t originally designed for this purpose. But now we’re in the arms of the common language runtime, which is.

We want to specify the levels of privilege that individual pieces of code can have, as we do with the humans in our lives.

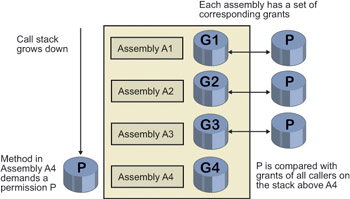

The .NET common language runtime provides code access security, which allows an administrator to specify the privileges that each managed code assembly has, based on our degree of trust, if any, in that assembly. When managed code makes a runtime call to access a protected resource (say, opening a file or accessing Active Directory), the runtime checks to see whether the administrator has granted that privilege to that assembly, as shown in Figure 2-28. The common language runtime walks all the way to the top of the call stack when performing this check, so that an untrusted top-level assembly can’t bypass the security system by employing trusted henchmen lower down. (If a nun attempts to pick your daughter up from school, you still want the teacher to check that you sent her, right?) Even though this checking slows down access to a protected resource, there’s no other good way to avoid leaving a security hole. While the common language runtime can’t govern the actions of unmanaged code, such as a COM object, which deals directly with the Win32 operating system instead of going through the runtime, the administrator can grant or deny the privilege of calling unmanaged code.

The .NET common language runtime provides code access security at run time on a per-assembly basis.

Figure 2-28: Access check in common language runtime code access security.
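The stack walk described above fits in a few lines. This is a simplified model, not the runtime's implementation. Each hypothetical `Assembly` carries the set of permission names the policy granted it, and `demand` refuses access if any caller on the stack lacks the permission. The real runtime adds refinements that this sketch omits, such as letting trusted code assert a permission to stop the walk early.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assembly:
    name: str
    granted: frozenset  # permission names granted by the administrator's policy


class SecurityException(Exception):
    """Raised when some caller on the stack lacks a demanded permission."""


def demand(permission: str, call_stack: list) -> None:
    """Walk the whole call stack; every assembly on it must hold `permission`.

    call_stack[0] is the outermost (top-level) caller and call_stack[-1] is
    the assembly that is about to touch the protected resource.
    """
    for frame in reversed(call_stack):
        if permission not in frame.granted:
            raise SecurityException(f"{frame.name} lacks {permission!r}")


trusted_lib = Assembly("TrustedFileLibrary", frozenset({"FileIO", "UI"}))
web_control = Assembly("DownloadedControl", frozenset({"UI"}))

demand("FileIO", [trusted_lib])  # passes: the only caller is trusted
try:
    # The untrusted control calls the trusted library: the walk still fails.
    demand("FileIO", [web_control, trusted_lib])
except SecurityException as err:
    print("denied:", err)  # denied: DownloadedControl lacks 'FileIO'
```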

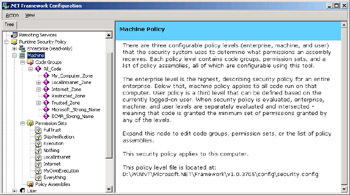

The administrator sets the security policy, a configurable set of rules that says which assemblies are and which aren’t allowed to perform which types of operations. These permissions can be set at three levels: enterprise, machine, and user. A lower-level setting can tighten restrictions placed by settings at a higher level, but not loosen them. For example, if the machine-level permission allows a particular assembly to open a file, a user-level permission can deny the assembly that privilege, but not grant it.
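One way to see why a lower level can only tighten the rules is to treat each level's verdict as a set of permissions and take the intersection. The runtime combines the enterprise, machine, and user levels this way. The permission names below are made up for illustration, and `effective_grant` is a hypothetical helper.

```python
from functools import reduce


def effective_grant(level_grants: list) -> frozenset:
    """Intersect the grants from every policy level, highest level first.

    A permission survives only if every level allows it, so a lower level
    can remove what a higher level granted but can never add anything back.
    """
    if not level_grants:
        return frozenset()
    return reduce(lambda acc, grant: acc & grant, map(frozenset, level_grants))


enterprise = {"FileIO", "UI", "Printing"}
machine = {"FileIO", "UI"}
user = {"UI", "Registry"}  # drops FileIO; its attempt to add Registry has no effect

print(sorted(effective_grant([enterprise, machine, user])))  # ['UI']
```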

An administrator sets the security policy by editing XML-based configuration files stored on a machine’s disk. Any program that can modify an XML file can edit these files, so installation scripts are easy to write. Human administrators and developers will want to use the .NET Framework Configuration utility program mscorcfg.msc. This utility hadn’t yet appeared when I wrote the first edition of this book, and I said some rather strong things about the lack of and the crying need for such a tool. Fortunately it now exists, whether because of what I wrote or despite it—or whether the development team even read it—I’m not sure. You can see the location of the security configuration files themselves in Figure 2-29.

The administrator sets the code access security policy by editing XML-based configuration files.

Figure 2-29: Security configuration files in the configuration utility.
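Because the policy lives in ordinary XML, an installation script needs nothing more exotic than an XML parser. The sketch below applies Python's standard `xml.etree.ElementTree` to a deliberately simplified, hypothetical fragment. The real configuration files have a richer schema, so treat the element and attribute names here as assumptions, not the actual format.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical policy fragment; not the real file's full schema.
POLICY_XML = """<PolicyLevel>
  <NamedPermissionSets>
    <PermissionSet Name="FullTrust" Unrestricted="true"/>
    <PermissionSet Name="Nothing"/>
  </NamedPermissionSets>
</PolicyLevel>"""


def add_permission_set(xml_text: str, name: str) -> str:
    """Return the policy text with an extra, empty named permission set."""
    root = ET.fromstring(xml_text)
    sets = root.find("NamedPermissionSets")
    ET.SubElement(sets, "PermissionSet", Name=name)
    return ET.tostring(root, encoding="unicode")


def permission_set_names(xml_text: str) -> list:
    """List the Name attribute of every PermissionSet, in document order."""
    root = ET.fromstring(xml_text)
    return [ps.get("Name") for ps in root.iter("PermissionSet")]


updated = add_permission_set(POLICY_XML, "OurInstallerSet")
print(permission_set_names(updated))  # ['FullTrust', 'Nothing', 'OurInstallerSet']
```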

Rather than grant individual permissions to various applications, an administrator creates permission sets. These are (for once) exactly what their name implies—lists of things that you are allowed to do that can be granted or revoked as a unit to an assembly. The .NET Framework contains a built-in selection of permission sets, running from Full Trust (an assembly is allowed to do anything at all) to Nothing (an assembly is forbidden to do anything at all, including run), with several intermediate steps at permission levels that Microsoft thought users would find handy. The configuration tool prevents you from modifying the preconfigured sets, but it does allow you to make copies and modify the copies.

The administrator constructs permission sets: lists of privileges that are granted and revoked as a group.
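This is a minimal sketch of the "copy, then modify" rule. It assumes a permission set can be modeled as a plain set of permission names. The read-only mapping stands in for the configuration tool's refusal to edit the built-in sets. The set contents and the helper `copy_and_modify` are illustrative, not the Framework's actual definitions.

```python
from types import MappingProxyType

# Read-only view of the built-in sets: attempts to edit it raise TypeError,
# much as the configuration tool refuses to modify the preconfigured sets.
BUILT_IN_SETS = MappingProxyType({
    "Nothing": frozenset(),  # not even permission to execute
    "Execution": frozenset({"Execution"}),
    "Internet": frozenset({"Execution", "FileDialog.Open", "UI", "Printing"}),
})


def copy_and_modify(base: str, add=(), remove=()) -> frozenset:
    """Copy a built-in set and adjust the copy; the original is untouched."""
    return frozenset((set(BUILT_IN_SETS[base]) | set(add)) - set(remove))


kiosk = copy_and_modify("Internet", remove={"Printing"})
print(sorted(kiosk))                            # ['Execution', 'FileDialog.Open', 'UI']
print("Printing" in BUILT_IN_SETS["Internet"])  # True
```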

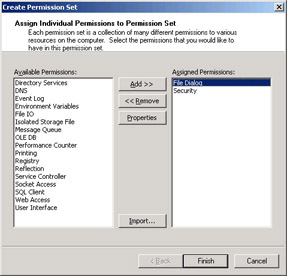

Each permission set consists of zero or more permissions. A permission is the right to use a logical subdivision of system functionality, for example, File Dialog or Environment Variables. Figure 2-30 shows the configuration tool dialog box that lets you add permissions to, or remove them from, a permission set.

Figure 2-30: Assigning permissions to a permission set.

A permission set contains permissions, and a permission contains finer-grained properties.

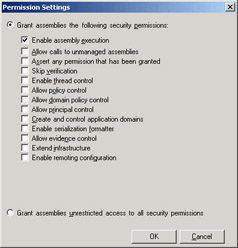

Each permission in turn supports one or more properties, which are finer-grained levels of permitted functionality that can be granted or revoked. For example, the File Dialog permission contains properties that allow an administrator to grant access to the Open dialog, the Save dialog, both, or neither. Figures 2-31 and 2-32 show the dialog boxes for setting the properties of the File Dialog permission and the ambiguously named Security permission.

Figure 2-31: Setting properties of a single permission.

Figure 2-32: Setting properties of a different permission.
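A permission's properties behave like a small set of flags. This sketch models the File Dialog permission's Open and Save properties with Python's `enum.Flag`. The class and helper names are hypothetical, although the shape loosely mirrors the Framework's own open/save access values.

```python
from enum import Flag


class FileDialogAccess(Flag):
    NONE = 0
    OPEN = 1                 # may show the Open dialog
    SAVE = 2                 # may show the Save dialog
    OPEN_SAVE = OPEN | SAVE  # both


def may_show(granted: FileDialogAccess, wanted: FileDialogAccess) -> bool:
    """True if every dialog requested in `wanted` is covered by `granted`."""
    return (granted & wanted) == wanted


print(may_show(FileDialogAccess.OPEN, FileDialogAccess.SAVE))       # False
print(may_show(FileDialogAccess.OPEN_SAVE, FileDialogAccess.SAVE))  # True
```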

Now that you understand permission sets, let’s look at how the administrator assigns a permission set to an assembly. A code-privilege administrator can assign a permission set to a specific assembly just as a log-in administrator can assign specific log-in privileges to an individual user. Both of these techniques, however, become unwieldy very quickly in production environments. Most log-in administrators set up user groups (data entry workers, officers, auditors, and so on), the members of which share a common level of privilege, and move individual users into and out of the groups. In a similar manner, most code-privilege administrators will set up groups of assemblies, known as code groups, and assign permission sets to these groups. The main difference between the two tasks is that a log-in administrator can manage each user’s group membership by hand, because new users come onto the system infrequently. Because of the way code is downloaded from the Web, however, we can’t rely on a human to make a trust decision every time our browser encounters a new code assembly. A code-privilege administrator, therefore, sets up rules, known as membership conditions, that determine how assemblies are assigned to the various code groups. A membership condition will usually include the security zone that an assembly came from, for example, My Computer, Internet, Intranet, and so on. A membership condition can also include such information as the strong name of the assembly (“our developers wrote it”), or the public key that verifies its signature (and hence the private key with which it must have been signed). You set membership criteria using the .NET Framework Configuration tool, by setting the properties of a code group, as shown in Figure 2-33.

An administrator assigns assemblies to various code groups based on membership conditions such as where the code came from and whose digital signature it contains.

Figure 2-33: Setting code group membership conditions.
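Membership conditions are, in effect, predicates over the evidence that comes with an assembly. The sketch below assumes just two kinds of evidence: the zone, and the public key that verifies the assembly's signature. The group names, the placeholder key string, and the helpers are hypothetical. The sketch also uses a flat list, whereas the real runtime arranges code groups in a hierarchy.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Evidence:
    zone: str                  # e.g. "MyComputer", "Intranet", "Internet"
    public_key: Optional[str]  # placeholder for the key that verifies the signature


# A membership condition is just a predicate over an assembly's evidence.
MembershipCondition = Callable[[Evidence], bool]


def zone_is(zone: str) -> MembershipCondition:
    return lambda ev: ev.zone == zone


def signed_with(key: str) -> MembershipCondition:
    return lambda ev: ev.public_key == key


# Hypothetical code groups: name -> (membership condition, permission set name)
CODE_GROUPS = {
    "Internet_Zone": (zone_is("Internet"), "Internet"),
    "Intranet_Zone": (zone_is("Intranet"), "LocalIntranet"),
    "Our_Developers": (signed_with("OUR-TEAM-KEY"), "FullTrust"),
}


def matching_groups(ev: Evidence) -> list:
    """Names of every code group whose membership condition the evidence meets."""
    return [name for name, (cond, _) in CODE_GROUPS.items() if cond(ev)]


print(matching_groups(Evidence("Intranet", "OUR-TEAM-KEY")))
# ['Intranet_Zone', 'Our_Developers']
```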

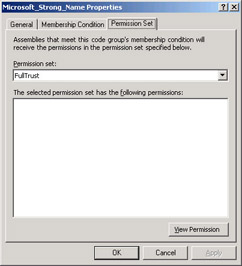

Once you’ve set your membership criteria, you choose a permission set for members of that group, as shown in Figure 2-34. By default, .NET provides full trust to any assembly signed with Microsoft’s strong name. I will be curious to see how many customers like this setting and how many do not.

The administrator then assigns a permission set to each code group.

Figure 2-34: Assigning a permission set to a code group.

When the common language runtime loads an assembly at run time, it figures out which code groups the assembly belongs to by checking their membership conditions. It is common for an assembly to belong to more than one code group. For example, if I ran Microsoft Money add-ons from the Web, they would belong to both the Microsoft group (because their signatures verify with Microsoft’s public key) and the Internet Zone group (because I ran them from the Internet). When code belongs to more than one group, the permission sets for each group are added together, and the resulting (generally larger) permission set is applied to the assembly.

If an assembly belongs to more than one code group, its permission set is the sum of the permission sets of the groups to which it belongs.
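"Added together" amounts to a set union. In this sketch the group names echo the Microsoft Money example, but the permission contents are invented for illustration, and `resolve` is a hypothetical helper. Within a policy level, the runtime unions the sets of all matching groups. It is across the enterprise, machine, and user levels that grants are intersected.

```python
def resolve(group_grants: dict, groups: list) -> frozenset:
    """Union the permission sets of every code group the assembly joined."""
    granted = frozenset()
    for group in groups:
        granted |= group_grants[group]
    return granted


GROUP_GRANTS = {
    "Microsoft": frozenset({"FileIO", "Registry", "UI"}),
    "Internet_Zone": frozenset({"UI", "FileDialog.Open"}),
}

print(sorted(resolve(GROUP_GRANTS, ["Microsoft", "Internet_Zone"])))
# ['FileDialog.Open', 'FileIO', 'Registry', 'UI']
```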

While most of the effort involved in code access security falls on system administrators, programmers will occasionally need to write code that deals with the code-access security system. For example, a programmer might want to add metadata attributes to an assembly specifying the permission set that it needs to get its work done. This doesn’t affect the permission level it will get, as that’s controlled by the administrative settings I’ve just described, but it will allow the common language runtime to refuse to load the assembly immediately instead of waiting for it to try an operation that it’s not allowed to do. A programmer might also want to read the level of permission that an assembly has actually been granted so that the program can inform the user or an administrator what it’s missing. The common language runtime contains many functions and objects that allow programmers to write code that interacts with the code-access security system. Even a cursory examination of these functions and objects is far beyond the scope of this book, but you should know that they exist, that you can work with them if you want to, and that you almost never will want to. If you set the administrative permissions the way I’ve just described, the right assemblies will be able to do the right things and the wrong assemblies will be barred from doing the wrong things, and anyone who has time to write code for micromanaging operations within those criteria is welcome to.

The common language runtime contains many functions and objects for interacting programmatically with the code-access security system.
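Declaring a minimum request and reading back the grant can be sketched as two small checks. In C# of that era, the request itself is an assembly-level permission attribute using `SecurityAction.RequestMinimum`. The Python below only models the effect, and `load_assembly`, `LoadRefused`, and `missing_permissions` are hypothetical names.

```python
class LoadRefused(Exception):
    """Raised when the policy grant does not cover an assembly's minimum request."""


def load_assembly(name: str, minimum: frozenset, granted: frozenset) -> str:
    """Fail at load time, before any of the assembly's code runs."""
    missing = minimum - granted
    if missing:
        raise LoadRefused(f"{name}: missing {sorted(missing)}")
    return f"{name} loaded"


def missing_permissions(wanted: frozenset, granted: frozenset) -> list:
    """What a program could report to the user or administrator."""
    return sorted(wanted - granted)


granted = frozenset({"Execution", "UI"})
print(load_assembly("Viewer", frozenset({"Execution"}), granted))  # Viewer loaded
print(missing_permissions(frozenset({"UI", "FileIO", "Printing"}), granted))
# ['FileIO', 'Printing']
try:
    load_assembly("Editor", frozenset({"Execution", "FileIO"}), granted)
except LoadRefused as err:
    print("refused:", err)  # refused: Editor: missing ['FileIO']
```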
