Lesson 1: Scale Database Applications

Lesson 1: Scale Database Applications

Estimated lesson time: 120 minutes

Scalability refers to the ability of an application to efficiently utilize resources to accommodate an increased workload. In other words, your application should be able to grow along with the number of users. Even though your application might have a limit to the number of concurrent users it can handle, you should be able to make a change to either the application or the environment that allows it to increase that limit.

Typically, scalability is accomplished by increasing the capacity of the server. This is referred to as scaling up; it usually involves adding more processing power or memory to the server. Because no application changes are required when scaling up, this tends to be a preferred method.

Another method for scaling your application involves adding additional servers to handle database workload. SQL Server 2005 provides several ways for you to scale out a database application. Which methods you use depends on factors such as the degree of data coupling, the number of application changes, and the maintainability requirements.

Application scalability should not be an afterthought in the design and development process. Even if there is only a remote chance that your application will need to accommodate a larger number of users, it should be designed to be scalable from the very beginning. Depending on the technique chosen, implementing application scalability can involve quite a few changes.

This lesson will cover several techniques you can use to scale your application. Table 8-1 lists each of these techniques along with their advantages and disadvantages. As you read through the lesson, you can refer to this table and determine which technique is best suited to your application. Keep in mind that it might be necessary to utilize more than one of these techniques.

| Scale-Out Technique | Description | Advantage | Disadvantage |

|---|---|---|---|

| Scalable shared database | Represents a read-only database located on a storage area network. It can have up to eight different instances executing queries against the database. | Simple to implement and no application changes are required. | Database must be set as read-only, so it needs to be a database that is rarely updated. |

| Peer-to-peer replication | Type of replication in which changes made to one database are copied to all other peer databases. | Easy to implement and allows for updates. | Does not provide conflict resolution, so only one member can update the database at a time. |

| Linked servers | Allows you to execute queries against remote data sources as if they were local. | Allows for updates and requires minimal application changes. | Not effective when data coupling is high. |

| Distributed partition views | Allows you to partition data using a partition key. | Provides good performance even if data updates are required. | Application changes are needed, and it is not easy to implement and maintain. |

| Data-dependent routing | Middleware services or the application is used to route queries for a partitioned database. | Good for handling a large number of servers with many updates. | Not easy to implement and maintain and can be cumbersome if you need to search for all data. |

| Service-oriented data architecture | Represents a database application that uses autonomous and reusable services that are implemented by the database itself using Service Broker. | Flexible solution that allows you to easily move services to another server if necessary. | Requires a lot of application changes. |

Specifying a Data-Partitioning Model

Data partitioning involves moving data from a single database server into more than one repository. It is not uncommon for applications to perform fine when they are moved into production and then start to slow as more data is added to the database. In some cases, changes made to the application or the database, such as applying indexes, allow the application to return to acceptable performance. In cases where this is no longer possible, you might need to consider implementing a data-partitioning model.

There are two kinds of data partitioning: vertical and horizontal. Vertical partitioning involves splitting a table into multiple tables. Typically, this is done to reduce data redundancy, but if a database has gone through the normalization process, this type of partitioning might not be necessary. Horizontal partitioning is used to restrict the number of rows within each table. This is a more commonly used form of data partitioning. The table is partitioned by values in one or more of the columns. For example, you could partition the Person.Contacts table based on the Contact ID. In some cases, it might be necessary to combine both types of partitioning to achieve the desired results.

How data is partitioned and what data is affected depends on the type of data involved. It also depends on the amount of data coupling that exists between tables. For example, if you have a database that contains several years of customer account data, you might need to move some of that customer data to another database. Typically, all this data will not reside within a single table. It might be spread out among several related tables. Even though you might have a table named Customer, you will need to move data as it relates to a customer entity and not just the single customer record from the Customers table. By moving the data as an entity, you will reduce the need to execute distributed queries and allow queries to be executed faster.

Data partitioning is typically just one step in implementing a scale-out solution. It is important to identify the type and amount of data to be partitioned early on. This will help you to select a scale-out technique most appropriate for your application.

Partition Functions

Once you have determined what data needs to be moved, you will need to create the additional repositories. This might involve creating databases on separate servers or creating additional filegroups on the same server. If you are creating additional file groups, you will need to use a partition function and partition scheme to specify how tables and indexes are partitioned.

| Important | SQL Server 2005 Enterprise Edition required Partition functions can only be created using SQL Server 2005 Enterprise Edition. See the Introduction for instructions on how to install the free 180-day evaluation edition of SQL Server 2005 Enterprise Edition from the companion DVD, if you do not already have a copy installed. |

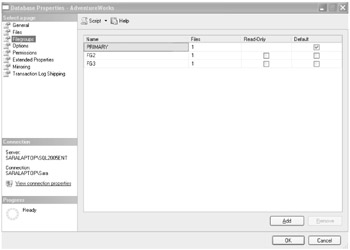

To demonstrate how partitioning works, assume that you wish to partition the Production.Product table in the AdventureWorks database. The AdventureWorks database includes one file group named PRIMARY that contains one data file and one transaction log file. To partition this database, you could add two filegroups named FG2 and FG3, which contain additional data files. This could be accomplished by using the interface in SQL Server Management Studio or by issuing the following Transact-SQL statements. The path to your database may be different than the following.

USE [master] GO ALTER DATABASE [AdventureWorks] ADD FILEGROUP [FG2] GO ALTER DATABASE [AdventureWorks] ADD FILE ( NAME = N'AdventureWorks_Data_FG2', FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL.8\MSSQL\DATA\AdventureWorks_Data_FG2.ndf' , SIZE = 5120KB , FILEGROWTH = 1024KB ) TO FILEGROUP [FG2] GO ALTER DATABASE [AdventureWorks] ADD FILEGROUP [FG3] GO ALTER DATABASE [AdventureWorks] ADD FILE ( NAME = N'AdventureWorks_Data_FG3', FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL.8\MSSQL\DATA\AdventureWorks_Data_FG3.ndf' , SIZE = 5120KB , FILEGROWTH = 1024KB ) TO FILEGROUP [FG3] GO

The partition function is used to define a range of records that will be included in the partition(s). By default, all tables and indexes in SQL Server 2005 are assigned to at least one partition. You can view these partitions by executing a query using the sys.partitions catalog view.

The partition function accepts an input parameter type, which in this case will be an integer because you wish to partition the table by Product ID. The function also accepts a range of values that will be used to separate the table into groups. In the following Transact-SQL statement, the partition function is named pfnProduct, and it is used to separate an integer value into three groups. In this case, the ranges will be: less than or equal to 450, 451 to 800, and 801 or greater.

CREATE PARTITION FUNCTION pfnProduct (int) AS RANGE LEFT FOR VALUES (450, 800)

Partition Schemes

The next step is to create a partition scheme. The partition scheme is used to assign a partition to certain filegroups. In the following example, the partition scheme named pscProduct assigns the partition to the filegroups named Primary, FG2, and FG3:

CREATE PARTITION SCHEME pscProduct AS PARTITION pfnProduct TO ('Primary', 'FG2', 'FG3') The last thing to do is associate a particular index with the new partition scheme. ProductID is the column you will partition on, but this column is defined as a primary key for the Production.Product table. To associate an index with a partition scheme, you need to execute the DROP INDEX Transact-SQL statement against that index.

You cannot execute the DROP INDEX statement on a primary key. To get around this, you would first need to remove the primary key by using the interface in SQL Server Enterprise Manager or by using the ALTER TABLE statement. Before you do this, be aware that doing so will also remove all foreign key relationships. For the AdventureWorks database, this change will affect several tables.

Once the primary key is removed, you can re-create the index as a unique and clustered index and execute the following Transact-SQL code:

IF EXISTS (SELECT * FROM sys.indexes WHERE object_id = OBJECT_ID(N'[Production].[Product]') AND name = N'PK_Product_ProductID') DROP INDEX PK_Product_ProductID ON Production.Product WITH (MOVE TO pscProduct (ProductID), ONLINE = OFF )

The DROP INDEX statement will move the index from the primary filegroup to the partition defined by the partition scheme. An alternative to executing the DROP INDEX statement is to use the Storage page for the index properties. (Refer to Figure 8-1.)

Figure 8-1: Storage page within the properties for the ProductID index

Object Catalog Views

Once the partition is created, you can see the new partition records using the sys.partitions object catalog view. For example, the following Transact-SQL statement can be used to view all partitions for the object named "Product":

select * from sys.partitions where object_name(object_id) = 'Product'

Before the partition scheme was added, the previous query would have returned four records. Each record represented one of the four indexes for the Production.Product table. Now that the partition has been created, the query will return six records, as follows:

| Partition ID | Object ID | Index ID | Partition Number | Hobt ID | Rows |

|---|---|---|---|---|---|

| 72057594056278016 | 1429580131 | 0 | 1 | 72057594056278016 | 129 |

| 72057594056343552 | 1429580131 | 0 | 2 | 72057594056343552 | 176 |

| 72057594056409088 | 1429580131 | 0 | 3 | 72057594056409088 | 199 |

| 72057594056474624 | 1429580131 | 2 | 1 | 72057594056474624 | 504 |

| 72057594056540160 | 1429580131 | 3 | 1 | 72057594056540160 | 504 |

| 72057594056605696 | 1429580131 | 4 | 1 | 72057594056605696 | 504 |

The last column in the query result is the number of rows, and the first three records represent the partition for the Product ID index. Notice that the number of rows for these records totals 504, which is the number of records in the Production.Product table. You can see how many records exist within each partition. If the distribution is not acceptable, you would need to drop and re-create the partition function to specify a different range.

As shown in Table 8-2, in addition to the sys.partitions view, SQL Server 2005 includes four other views that can be used to query partition information.

| View Name | Description |

|---|---|

| sys.partitions | Returns information about partitions assigned to objects in a database. |

| sys.partition_functions | Returns information about any partition functions created for a database. |

| sys.partition_parameters | Returns information about parameters used in partition functions. This view could be joined to the sys.partition_functions view on the function_id column. |

| sys.partition_schemes | Returns information about partition schemes created for the database. This view can be joined to the sys.partition_functions on the function_id column. |

| sys.partition_range_values | Returns the ranges allowed for parameters in a partition function. This was specified when creating the partition scheme. |

Targeting Multiple Servers

In the previous section, we examined a partitioning model that involved adding filegroups to a single server. While this can help an application to scale, it might be necessary to target multiple servers to achieve the desired results. For many of today's Web sites and enterprise-wide applications, targeting multiple servers is the only way these applications can accommodate the high demand.

In cases where data is partitioned across multiple servers, those servers will need to cooperate to balance the load of the application. A group of servers joined together in this way is known as a federation. How these federated servers cooperate depends on the technique chosen. This section will present several techniques that can be used to target multiple servers.

Using Linked Servers

Linked servers (which were introduced in Chapter 2, "Designing Database Queries") provide a way to issue queries against remote data sources as if they were local. If you were to partition data so that it resided on more than one server, linked servers could be used to query the remote data. The advantage to linked servers is that as far as the application is concerned, the remote data source exists on the local database server.

Only small application changes are needed to make this scenario work. The drawback is that the remote query consumes more resources than a local query. For a partitioning model that targets multiple servers to be effective, the performance benefit from partitioning the data has to outweigh the cost of querying the remote data.

To demonstrate a scenario involving partitioned data and linked servers, let's assume that you needed to partition data in the AdventureWorks database. You have decided to move product history information to another server, a method known as data archiving.

| Best Practices | Partition large databases only The AdventureWorks database would not be considered a large database and typically, there would be no need to partition data from tables that contained only a few hundred records. A table that did need to be partitioned might contain millions or even billions of records. Keep this in mind as you are selecting a scale-out technique for your real-world application. |

In many cases, there are legal and/or company policy restrictions that force you to maintain a certain number of years of historical data. Even though users rarely access historical data beyond three years, assume you have to maintain history for up to seven years.

The product history information is stored in two tables: Production.ProductListPriceHistory and Production.ProductCostHistory. Both these tables have a start and end date. The end date could be used to partition the data and move data older than three years but less than seven years into a separate database. You could also move data older than seven years into a database that would only be accessible through backups.

The data residing in a separate database would need to be accessible to the database application. A linked server could be created which points to this database. The database application allows users to optionally select a date range when requesting product history. Assume that the following stored procedure was originally used to access the historical data:

CREATE PROCEDURE spGetProductListPriceHistory @ProdID int, @EndDate datetime = NULL AS BEGIN SET NOCOUNT ON; IF @EndDate IS NULL BEGIN SELECT StartDate, EndDate, ListPrice FROM Production.ProductListPriceHistory WHERE ProductID = @ProdID AND EndDate IS NULL ORDER BY EndDate END ELSE BEGIN SELECT StartDate, EndDate, ListPrice FROM Production.ProductListPriceHistory WHERE ProductID = @ProdID AND EndDate IS NOT NULL AND EndDate <= @EndDate ORDER BY EndDate END END

The spGetProductListPriceHistory stored procedure accepts two input parameters: product ID and end date. If an end date is not provided, a default value of NULL is assigned, and the stored procedure returns history records where the end date is set with a value of NULL. Otherwise, the stored procedure will return all history records that fall before or on the end date passed in as a parameter.

Once the history records have been moved to a new database, we will need to alter the previous stored procedure. Assume that a linked server named svrProductHistory was created. We could then use the following Transact-SQL to alter the stored procedure:

ALTER PROCEDURE spGetProductListPriceHistory @ProdID int, @EndDate datetime = NULL AS BEGIN SET NOCOUNT ON; IF @EndDate IS NULL BEGIN SELECT StartDate, EndDate, ListPrice FROM Production.ProductListPriceHistory WHERE ProductID = @ProdID AND EndDate IS NULL ORDER BY EndDate END ELSE IF DateDiff(dd, GetDate(), @EndDate) > 1095 --3 * 365 = 1095 BEGIN SELECT StartDate, EndDate, ListPrice FROM Production.ProductListPriceHistory WHERE ProductID = @ProdID AND EndDate IS NOT NULL AND EndDate <= @EndDate UNION SELECT StartDate, EndDate, ListPrice FROM OPENDATASOURCE('SQLNCLI', 'Data Source=svrProductHistory\sql2005ent; Integrated Security=SSPI') .AdventureWorks.Production.ProductListPriceHistory WHERE ProductID = @ProdID AND EndDate IS NOT NULL AND EndDate <= @EndDate Order BY EndDate END END The new version of the stored procedure would check to see whether the end date passed in as a parameter exceeded three years. If it did, then it would use a UNION clause to return records from both the original database and the newly created linked server. The OPENDATASOURCE function is used to execute an ad hoc query against the server named svrProductHistory. The OPENDATASOURCE function provides an alternative to using a four-part name to query the linked server. For more information about using a four-part name to query a linked server, refer to Lesson 1, "Writing Database Queries," in Chapter 2.

By default, SQL Server 2005 does not allow you to execute ad hoc queries. Therefore, you must enable this option using the sp_configure system stored procedure. Because it is an advanced option, you will also need to first show the advanced options. You could use the following Transact-SQL code to enable ad hoc queries:

sp_configure 'show advanced options', 1; GO RECONFIGURE; GO sp_configure 'Ad Hoc Distributed Queries', 1; GO RECONFIGURE; GO

The last thing to consider is that as time passes, more history records will be added the database. You will need to create a process that moves records older than three years to the second database. Although you could do this using a custom application, it would be easier to simply create a SQL Server Integration Services (SSIS) package that can be scheduled to run once a day. The package would execute code that queried the database for records older than three years. If any are found, it can delete the records from the original database and insert them into the second database.

Implementing Scale-Out Techniques

Implementing a successful scale-out technique involves consideration of all potential solutions. It is possible that you might need to implement a combination of one or more techniques to accomplish your goal. This section presents techniques that can be used to target multiple servers and thus scale out your application.

Using a Distributed Partition View

A Distributed Partition View (DPV) is a technique in which data partitioned horizontally across multiple servers is joined together to make it appear as if it comes from one table. The data is partitioned by a key value from each table. For example, the Product ID column could be used to partition the product tables in the AdventureWorks database.

The goal of a DPV is to transparently distribute the load of a database application across multiple servers. This is different from the partition we created earlier, in which a table was assigned to multiple filegroups on the same server. In the case of a DPV, the data is partitioned across two or more servers.

To create a DPV, you will first need to horizontally partition your table or tables. The considerations that apply to this task are the same as those discussed in the section of this chapter titled "Specifying a Data-Partitioning Model." Once the data has been moved to its respective servers, you will need to utilize check constraints to ensure the range of values for each partition.

For example, assume we want to create three partitions for the Person.Contact table in the AdventureWorks database. The partitions will reside on three separate servers named ServerA, ServerB, and ServerC. The Person.Contact table contains 19,972 records. If this table is partitioned on the column ContactID, we can split it so that 6,657 records reside in the partition on ServerA, 6,657 records in the partition on ServerB, and 6,658 records in the partition on ServerC (6,657 + 6,657 + 6,658 = 19,972).

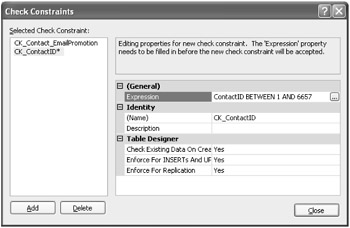

The partitioned tables will need to include a check constraint that enforces the rule that each server will contain a certain range of ContactID records. You can add check constraints with the ALTER TABLE statement or by using the Check Constraints dialog box in SQL Server Management Studio (refer to Figure 8-2). The Transact-SQL statement used to create a check constraint on ServerA would appear as follows:

ALTER TABLE Person.Contact ADD CONSTRAINT CK_Contact_ContactID CHECK (ContactID BETWEEN 1 AND 6657) GO

Figure 8-2: The Check Constraints dialog box used to add a new constraint to the Person.Contact table

Now, we can create linked servers to reference the additional servers. Once the linked servers are created, we can create the DPV using the following Transact-SQL statement:

CREATE VIEW tblContact AS SELECT * FROM ServerA.AdventureWorks.Person.Contact UNION SELECT * FROM ServerB.AdventureWorks.Person.Contact UNION SELECT * FROM ServerC.AdventureWorks.Person.Contact

The CREATE VIEW statement will need to be executed on all three servers. The view will join the records from all three tables. When the application needs to search for contacts, you can execute a SELECT query using the view named tblContact.

| Important | A loopback linked server is not allowed You might be tempted to create a linked server definition that points back to the same instance of SQL Server. This is known as a loopback linked server, and it is not allowed. If you want to experiment with creating a distributed partition view, you will have to use separate instances of SQL Server 2005. |

You will need to set the LazySchemaValidation property for each linked server. This option specifies whether the schema used by the linked server is validated when the local SQL Server instance is idle. Setting this option provides a performance advantage for queries against the linked server. You can set this option by using the sp_serveroption system stored procedure. The Transact-SQL code needed to set this option is as follows:

USE master; EXEC sp_serveroption 'ServerB', 'lazy schema validation', 'true'; GO

For your view to be updateable, you will need to ensure that no triggers are enabled on any of the partitioned tables. You will also need to remove any indexes for computed columns, and the view cannot be created using the EXCEPT or INTERCEPT clauses. In addition, each partitioned table must be referenced only once. Because a distributed transaction will be created during an update, you need to set the XACT_ABORT_OPTION to ON. This ensures that the transaction will be rolled back if a run-time error is encountered.

Using Data-Dependent Routing

Data-Dependent Routing (DDR) is a technique in which data is partitioned horizontally and then middleware or the application itself is used to route requests to the appropriate database. If middleware is used to accomplish this task, then the process is considered somewhat transparent. If the routing is done by the application, then changes to accommodate this type of routing will need to be made.

Because DDR requires a good deal of application changes to implement, it is generally only utilized by high-performing Web applications that require a lot of updates. Typically, routing will occur within the data access layer and will require that a table containing the server names, table names, and value ranges is created. This table will be used to route requests based on a key value to the appropriate server.

To demonstrate how DDR works, assume that you want to partition the AdventureWorks database. The data will be partitioned across 10 servers, although, in a real-world situation, this could potentially be hundreds or even thousands of servers. The first step is to partition the data. The considerations to assess are featured in the section of this chapter titled "Specifying a Data-Partitioning Model."

Assume that you have decided to partition the customer tables according to CustomerID. The data will be partitioned as an entity, and all of the data for a customer will be moved to a single server. When there is a need to return customer information, it can be retrieved from one server and thereby reduce the need for costly distributed queries.

Once the data has been moved to separate tables, you will need to create lookup tables that store the routing information. One lookup table will contain a single record for each partitioned server, along with the linked server name used to query that server. For example, the following records would reside in a server lookup table:

| ServerID | ServerName | Description |

|---|---|---|

| 1 | Server1 | Customers 1 – 2000 |

| 2 | Server2 | Customers 2001 – 4000 |

| 3 | Server3 | Customers 4001 – 6000 |

| 4 | Server4 | Customers 6001 – 8000 |

| 5 | Server5 | Customers 8001 – 10000 |

| 6 | Server6 | Customers 10001 – 12000 |

| 7 | Server7 | Customers 12001 – 14000 |

| 8 | Server8 | Customers 14001 – 16000 |

| 9 | Server9 | Customers 16001 – 18000 |

| 10 | Server10 | Customers 18001 – 19185 |

Another table would contain the partition key, which in this case is the CustomerID, along with the ServerID. For example, the following is a partial result set for a customer lookup table:

| CustomerID | ServerID |

|---|---|

| 1 | 1 |

| 2 | 1 |

| 2557 | 2 |

| 2634 | 2 |

| 8999 | 5 |

| 12455 | 7 |

The challenge with DDR involves searching for data across all partitions. You definitely do not want to execute distributed queries against hundreds of servers. Typically, the solution to this problem lies with the creation of summary data. For example, you could create a table that contained summary data for each customer and all orders for that customer. This same table would be located on all the servers. When there was a need to search through all the customers, you would reference the summary table to locate the customer record. You could then retrieve the order detail for that customer by executing a distributed query against the server containing that customer's data. For more information about data-dependent routing, refer to the article, "Microsoft TechNet SQL TechCenter: Scaling Out SQL Server with Data Dependent Routing" at http://www.microsoft.com/technet/prodtechnol/sql/2005/scddrtng.mspx. You can also refer to the article, "Scaling Out SQL Server with Data-Dependent Routing" at http://www.dell.com/downloads/global/power/ps3q05-20050100-Auger.pdf.

Using Service-Oriented Database Architecture

Based on the widely adopted concept of Service-Oriented Architecture (SOA), Service-Oriented Database Architecture (SODA) combines SOA with the new features available in SQL Server 2005. SODA allows your database application to use independent and reusable services available through the database itself. This means the database is operating as a service provider.

SOA involves a collection of services that communicate with each other. The services themselves are autonomous and do not rely on the state from other services to function. However, they must have the ability to communicate with each other using XML messages, and SOA allows them to do that. SODA offers a way for SQL Server 2005 to implement the service-oriented architecture.

The benefit to using SODA is that you have a flexible solution in which services can easily be moved to another server if necessary. It also handles a high volume of transactions and database updates. Most important, applications can take advantage of features built into SQL Server 2005, such as the following:

-

Service Broker Provides queuing along with reliable and secure messaging to applications using SQL Server 2005. It can be used to coordinate services asynchronously and provide a scalable and robust solution.

-

Query Notifications Allows an application to request that a notification is sent when the results of a query change. This is useful when refreshing cached data. Instead of refreshing the data on a scheduled basis, the application can refresh the cache only when the data has actually changed.

-

Native XML Web Services Through HTTP endpoints, you can expose SQL Server data directly through XML Web services. Instead of residing on an Microsoft Internet Information Services (IIS) server, the Web services reside on SQL Server itself and are built using stored procedures or user-defined functions.

-

Notification Services Allows you to send notifications to subscribers concerning events within the application. The notifications can be sent to a variety of devices according to the subscribers' preferences.

-

SQLCLR The SQL Common Language Runtime (CLR) allows you to create and compile .NET Framework code that executes on the SQL Server. This is where the logic for your service provider resides. You can use SQLCLR to create high-level functions that replace Transact-SQL stored procedures and user-defined functions.

The drawback to implementing a solution using SODA is that it requires many application changes, and a good deal of the code will reside in the database in the form of SQLCLR code. Because so much of the business logic resides with the database, you are essentially tied to that particular database. However, if you work with SQL Server 2005 exclusively and know this will always be the case, it might not be an issue for you.

To demonstrate how SODA works, assume you want to build a service-oriented data solution using the AdventureWorks database. You want to separate the data and services that are used to handle initial sales orders from the work orders. In this case, if the database handling work orders goes down or is overloaded, sales orders will not be affected.

To accomplish this task, you will first need to partition the data used to handle sales orders and work orders. The tables associated with these processes will be moved to separate databases. Reference tables that are used by both processes will be replicated to each database using peer-to-peer replication. You can also use replication to copy product and customer data to each database server.

When a sales order is entered into the system, one or more records are created in the sales order tables. A sales order message is then placed into a queue using Service Broker. The message is forwarded to the database that handles work orders. If the database is not available, then the message will be queued until the server is available. If the database is available, then the message will be processed and a response will be sent to the user through Notification Services.

Transparently Distributing Data and Workload

In some cases, you might not have access or the ability to change an existing application. The application might be suffering from performance problems, but making major changes to the code is not an option. This section features techniques that can be used to scale your application transparently, which means few or no application changes are required.

Using a Scalable Shared Database

This is a new feature with SQL Server 2005 that allows you to indicate whether a database is read-only. Typically, this is used for reporting or data-warehousing databases in which you know that no updates are required. Data-warehousing databases are used to store large amounts of data and are typically stored in a multidimensional format. You can create a scalable shared database that is used by up to eight different SQL Server instances to execute long-running queries or generate reports.

| Important | System requirements Only Microsoft Windows Server 2003, Service Pack 1 or later can support the creation of a scalable shared database. You will also need to use SQL Server 2005 Enterprise Edition. |

To enable a scalable shared database, it must be mounted on a read-only volume and accessed over a storage area network (SAN). The availability of the SAN protocol is new with the Enterprise Edition of SQL Server 2005. It allows you to cluster servers together and deliver high availability to your database applications.

Once the volume is mounted and the database built, you can attach the database to an instance of SQL Server. This is when it becomes available as a scalable shared database. It can then be accessed by clients using reporting servers.

| Best Practices | Reporting servers need to be identical All reporting servers should be running under the same operating system and service pack. If upgrades or updates are needed, make sure they are applied to all servers. |

Even though the database is read-only, you will need to periodically refresh the data within the database. To update the database, you will need to detach all instances and attach only one instance in read/write mode. The best way to do this is to alternate the update cycles between two different volumes. While the first volume is being updated, the second one can be reattached to the production server to prevent application downtime.

Using Peer-to-Peer Replication

One of the best ways to target multiple servers is by using replication. In many cases, no application changes are necessary, and by using multiple servers, applications are allowed to scale to whatever level is necessary. There are many types of replication, but one that you might want to consider is peer-to-peer replication. Peer-to-peer replication is a new feature added with SQL Server 2005. This low-overhead solution allows you to maintain copies of a database on multiple servers, while still allowing for updates.

In peer-to-peer replication, each server will access its own copy of the database, but only one of the servers at a time will be allowed to update the data. Once the server updating the database has made the changes, a new copy of the database will be sent to the peers. If any of the other peers has made a similar change before the change is propagated to the others, a conflict could occur. For this reason, you want to implement this solution on servers where there is a low update frequency. In cases where there is a high update frequency, you might want to consider another scale-out option or consider using another replication type, such as merge replication. Merge replication does provide conflict resolution, but it consumes more server resources.

In peer-to-peer replication, each peer acts as both a publisher and a subscriber. There is no master publisher that copies updates to read-only subscribers. Each peer is able to make updates and then copy the changes to all other peers. Because conflict resolution is not built-in, the only way to avoid conflicts is by using a concept known as data stewardship. This involves assigning ownership of the data and then creating rules that define how that data will be updated.

A good example of where peer-to-peer replication is effective is in the case of a large company with offices scattered across the country. Each office has its own application and database servers, but they all need to share customer account information. Peer-to-peer replication would allow each office to maintain a separate copy of the database. The office that opened the customer account would be the only one allowed to update the data for that customer. All other offices would be able to read the customer data and place orders for that customer, but only the original office would own the customer account. In addition, the office that placed an order would be the only one allowed to update that order. The application would be responsible for determining which office is allowed to update a customer or order record.

For this scenario to work, all the databases must maintain the same data schema. The individual peer databases should not contain any additional tables or objects that are not part of the central schema. Before any subscriptions are created, a publication that allows peer-to-peer replication should be created. When the subscriptions are created, they need to be initialized using a backup as opposed to a snapshot. Using a backup is a fast and easy way to populate the subscription database.

In this lesson, we will not go into detail surrounding replication and specifically configuring peer-to-peer replication. For more information regarding this topic, refer to the article, "How to: Configure Peer-to-Peer Transactional Replication" at http://msdn2.microsoft.com/en-us/library/ms152536.aspx.

Moving Code to a Different Tier

You have probably heard or read about using a multi-tiered architecture to develop enterprise-wide applications that accommodate a large number of concurrent users. Typically, there will be a user presentation tier to represent the user interface and a middle tier to encapsulate the business logic. The middle tier will communicate with the final layer, the data tier, to access and update data for the end user. By moving functionality to different tiers, you can increase code reusability and interoperability.

In some cases, you might be able to gain a performance advantage by moving complex business logic from the middle tier to the SQL Server platform. SQL Server 2005 introduced the ability to execute code for the .NET Framework CLR on SQL Server itself. This means you can write code using Microsoft Visual Basic .NET, C#, or J# and execute it on the SQL Server. To determine in which tier code will operate most efficiently, you might have to execute portions of the code in both environments and compare the results. For more information about the considerations to make regarding this decision, refer to the article, "The Database Administrator's Guide to the SQL Server Database Engine .NET Common Language Runtime Environment" at http://www.microsoft.com/technet/prodtechnol/sql/2005/clr4dbas.mspx.

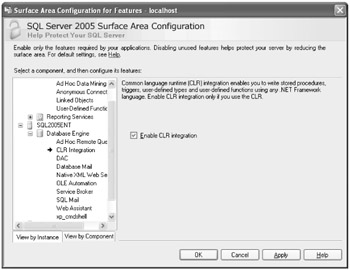

You will need to enable CLR integration before using it. You can do so with the SQL Server Surface Area Configuration tool. You will need to select the Surface Area Configuration For Features link and then expand the node for the instance of SQL Server 2005 you wish to enable. If you select CLR Integration, you should see a dialog box such as the one in Figure 8-3.

Figure 8-3: Enable CLR Integration using the SQL Server Surface Area Configuration tool

By default, this feature is disabled, so you will need to select the Enable CLR Integration check box and click Apply to save the changes. Alternatively, you can execute the following Transact-SQL statement from a new query window inside of SQL Server Management Studio:

sp_configure N'clr enabled', 1 GO RECONFIGURE GO SELECT * FROM sys.configurations WHERE [Name] = N'clr enabled'

Once CLR has been enabled, you can create a CLR stored procedure by using an ordinary text editor such as Windows Notepad. For example, you could type the following code into Notepad and then save the file as CLRStoredProcedure.cs or CLRStoredProcedure.vb:

//C# using System; using System.Data; using Microsoft.SqlServer.Server; using System.Data.SqlTypes; public class CLRStoredProc { [Microsoft.SqlServer.Server.SqlProcedure] public static void GetTomorrowsDate() { System.DateTime tomorrow = System.DateTime.Now; System.TimeSpan ts = new System.TimeSpan(1, 0, 0, 0); SqlContext.Pipe.Send(Convert.ToString(tomorrow.Add(ts))); } } 'VB Imports System Imports System.Data Imports Microsoft.SqlServer.Server Imports System.Data.SqlTypes Public Class CLRStoredProc <Microsoft.SqlServer.Server.SqlProcedure> _ Public Shared Sub GetTomorrowsDate() Dim tomorrow As System.DateTime tomorrow = System.DateTime.Now() Dim ts As New System.TimeSpan(1, 0, 0, 0) SqlContext.Pipe.Send(Convert.ToString(tomorrow.Add(ts))) End Sub End Class Notice that the CLRStoredProc method uses the SqlContext object to send a command through the SQL pipe. The SQLContext object is used to provide access to the context of the caller while the managed code executes in the SQL Server. The SqlPipe is one of three objects available through the SqlContext. This represents the pipe in which results flow back to the client. You can execute any one of the following methods:

-

Send Used to send data straight to the client. The data can either be a result set using a SqlDataReader object or a string message.

-

ExecuteAndSend Used to execute a command using a command object and then send the results back to the client.

-

SendResultsStart Used to send data that did not originate from a SqlDataReader object back to a client. Accepts a SqlDataRecord object as input and marks the beginning of the result set.

-

SendResultsRow Used in conjunction with SendResultsStart to send a row of data back to the client.

-

SendResultsEnd Used in conjunction with SendResultsStart and SendResultsRow to send data back to the client. This method is used to set SqlPipe back to its original state.

SQL Server provides command-line compilers that can be used to generate an assembly from a code file. If you are using Visual Basic, you will use vbc.exe, and if you are using C#, you will use csc.exe. To use the compiler, go to a Visual Studio 2005 Command Prompt and enter a command such as the following example. Replace <dir> with the location that you've created for testing this example. You also have the option of creating a directory named c:\TK442\Chapter8\Code\Lab1, which will be used in a lab exercise later in the chapter.

//C# csc /target:library .<dir>\SQLStoredProc.cs 'VB vbc /target:library <dir>\SQLStoredProc.vb

By executing the compiler, you will generate a dynamic-link library (DLL) file that is placed in the target library by default, <dir>\. You will then need to create an assembly in SQL Server that lets you access the stored procedure. You do so by executing a Transact-SQL statement, such as the following, from a new query window:

Use AdventureWorks; CREATE ASSEMBLY SQLStoredProc from <dir>\SQLStoredProc.dll' WITH PERMISSION_SET = SAFE

The final step is to create a stored procedure that references the SQLStoredProc assembly. For example, the following Transact-SQL statement can be used to reference the assembly using its external name:

Use AdventureWorks; CREATE PROCEDURE GetTomorrowsDate AS EXTERNAL NAME SQLStoredProc.CLRStoredProc.GetTomorrowsDate

Now that the procedure has been created, we can execute it just like any Transact-SQL stored procedure. For example, the following Transact-SQL statement will return tomorrow's date in the messages window:

EXEC GetTomorrowsDate

Rewriting Algorithms

It has long been established that Transact-SQL is a set-based language and not an object-oriented one. For this reason alone, you should restrict the amount of complex business logic that resides in Transact-SQL stored procedures, user-defined functions, and triggers. Even though there are scenarios where Transact-SQL performs more quickly than code in the .NET Framework, it is generally best to restrict use of Transact-SQL for queries. (For more information about this, refer to Lesson 2, "Designing a Cursor Strategy" and Lesson 3, "Designing Efficient Cursors," in Chapter 3, "Designing a Cursor and Caching Strategy.")

SQL Server 2005 introduced the ability to execute .NET Framework code on SQL Server, which we covered in the previous section. Now that you have this option, you might need to consider whether moving some of the business logic to the database server is a good idea. SQLCLR is not a magic bullet, and just because it is offered does not mean you should rewrite all your code to utilize it. If you just need to retrieve data, then it is quicker and more efficient to use Transact-SQL. However, if you need to retrieve data and perform complex operations against that data, then SQLCLR might be a better option.

Lab: Scaling Database Applications

In this lab, you will experiment with techniques used to scale out a database application. In the first exercise, you will create a partition function that is used to indicate how a partition is divided. In Exercise 2, you will create a SQLCLR user-defined function.

The completed code examples, in both Visual Basic and C#, are available in the \Labs\Chapter 08 folder on the companion CD.

| Important | Lab requirements You will need to have SQL Server 2005 installed before you can complete this lab. Refer to the Introduction for setup instructions. |

Exercise 1: Create a Partition Function

In this exercise, you will create a partition function that is used to horizontally partition the data in the Production.Product table. The data will be partitioned by ProductNumber, which is a character-based column. The data will be placed into separate filegroups that reside on the same instance of SQL Server.

-

Open Microsoft SQL Server Management Studio.

-

Connect to the instance of SQL Server 2005 that contains the AdventureWorks database.

-

Right-click the AdventureWorks database, and select Properties. Select the Filegroups page. (Refer to Figure 8-4.)

-

Click Add, and enter FG2 as the name of the new filegroup. Add another filegroup named FG3.

-

Select the Files page, and click Add to add a new file. The new file will be named AdventureWorks_Data_FG2, the File Type will be data, and the Filegroup will be FG2. Add another file named AdventureWorks_Data_FG3 with a File Type of data and the Filegroup FG3. When finished, click OK to save your changes to the database.

-

Select New Query.

-

Select AdventureWorks from the Available Databases drop-down list box. Add the following code to the query window:

CREATE PARTITION FUNCTION pfnProduct (nvarchar(25)) AS RANGE LEFT FOR VALUES ('FL', 'LN') -

Click the Execute button, and ensure that the function is created successfully.

-

Replace the code added in step 7 with the following:

CREATE PARTITION SCHEME pscProduct AS PARTITION pfnProduct TO ('Primary', 'FG2', 'FG3') -

Click the Execute button, and ensure that the scheme is created successfully.

-

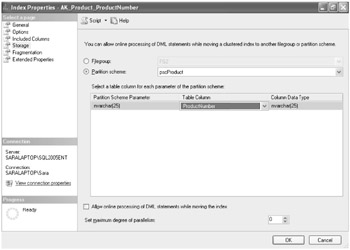

From Object Explorer, expand the Production.Product table, and then the indexes node within the AdventureWorks database. Right-click the AK_Product_ProductNumber index, and select Properties.

-

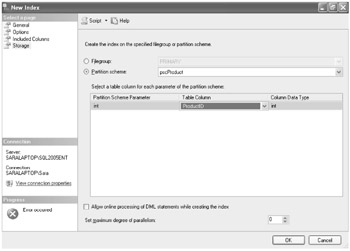

From the Properties dialog box, select the Storage page. Select the Partition scheme option, and ensure that the partition scheme named pscProduct is selected. (Refer to Figure 8-5.) Enter ProductNumber as the Table Column, and click OK to save the changes.

-

Return to the new query window, and execute the following query:

select * from sys.partitions where object_name(object_id) = 'Product'

-

You should see results similar to those in the following table:

Open table as spreadsheet

Open table as spreadsheet Partition ID

Object ID

Index ID

Partition Number

Hobt ID

Rows

72057594056867840

1429580131

1

1

72057594056867840

504

72057594056998912

1429580131

3

1

72057594056998912

504

72057594057064448

1429580131

4

1

72057594057064448

504

72057594057129984

1429580131

17

1

72057594057129984

504

72057594057195520

1429580131

2

1

72057594057195520

137

72057594057261056

1429580131

2

2

72057594057261056

204

72057594057326592

1429580131

2

3

72057594057326592

163

-

Notice that in this table, the last three rows are associated with our newly created partition. The table was partitioned into three groups of the following sizes: 137, 204, and 163.

Figure 8-4: The Filegroups page as displayed in the Properties dialog box for the AdventureWorks database

Figure 8-5: The Storage page for the AK_Product_ProductNumber index properties

Exercise 2: Create a CLR User-Defined Function

In this exercise, you will create a user-defined function using .NET Framework code. The code will be created using a Windows text-based editor and compiled using the Visual Basic or C# command line compiler. You will then create an assembly in SQL Server and execute the newly created user-defined function just as you would a user-defined function created with Transact-SQL.

| Best Practices | For demonstration purposes only For demonstration purposes, the user-defined function in this exercise performs a data access query. Generally, you will not use SQLCLR for basic data access. In this case, Transact-SQL is a better alternative. |

-

Open SQL Server Management Studio.

-

Connect to the instance of SQL Server 2005 that contains the AdventureWorks database.

-

Select New Query.

-

Select AdventureWorks from the Available Databases drop-down list box. Add the following code to the query window:

sp_configure N'clr enabled', 1 GO RECONFIGURE GO SELECT * FROM sys.configurations WHERE [Name] = N'clr enabled'

-

Click the Execute button, and ensure that the query executed successfully.

-

Open Windows Notepad, and copy in either the C# or VB code shown below:

//C# using System; using System.Data; using Microsoft.SqlServer.Server; using System.Data.SqlClient; using System.Data.SqlTypes; public class TK442Chapter8 { [Microsoft.SqlServer.Server.SqlFunction(DataAccess=DataAccessKind.Read, IsDeterministic=true)] public static Int32 GetProductID(String prodNumber) { SqlCommand cmd; Int32 ret = 0; //Connect to the context connection SqlConnection conn = new SqlConnection("Context Connection=true"); conn.Open(); cmd = new SqlCommand("SELECT ProductID FROM Production.Product " + " WHERE ProductNumber = @prodNumber", conn); cmd.Parameters.AddWithValue("@prodNumber", prodNumber); try { //Execute the command and return the results SqlDataReader dr = cmd.ExecuteReader(); while(dr.Read()) { ret = Convert.ToInt32(dr[0]); } } catch(SqlException ex) { //Process the exception SqlContext.Pipe.Send("The Following error was encountered: " + ex.Message); } return ret; } } 'VB Imports System Imports System.Data Imports System.Data.SqlClient Imports Microsoft.SqlServer.Server Imports System.Data.SqlTypes Public Class TK442Chapter8 <Microsoft.SqlServer.Server.SqlFunction(DataAccess:=DataAccessKind.Read, IsDeterministic:=true)> _ Public Shared Function GetProductID(ByVal prodNumber as String) As Int32 Dim cmd As SqlCommand Dim ret As Int32 = 0 'Connect to the context connection Dim conn as New SqlConnection("Context connection=true") conn.Open() cmd = New SqlCommand("SELECT ProductID FROM Production.Product " & _ " WHERE ProductNumber = @prodNumber", conn) cmd.Parameters.AddWithValue("@prodNumber", prodNumber) Try 'Execute the command and return the results Dim dr As SqlDataReader = cmd.ExecuteReader() Do While dr.Read() ret = Convert.ToInt32(dr(0)) Loop Catch ex as Exception 'Process the exception SqlContext.Pipe.Send("The Following error was encountered: " & _ ex.Message) End Try Return ret End Function End Class -

Save the files with the name TK442Chapter8Lab1.cs or TK442Chapter8Lab1.vb to the directory C:\TK442\Chapter8\Code\Lab1\.

Important Local directory required

If you have not already created a directory on your local drive, do so now and use the path from this step. This path will be referenced in later steps. If you choose to use a different path, make sure you reference the new path in the next steps.

-

Open a Visual Studio 2005 Command Prompt. From the command window, execute the following command:

//C# csc /target:library c:\TK442\Chapter8\Code\Lab1\TK442Chapter8Lab1.cs 'VB vbc /target:library c:\TK442\Chapter8\Code\Lab1\TK442Chapter8Lab1.vb

-

Ensure that the command completes successfully and returns no errors. When complete, there should be a file named TK442Chapter8Lab1.dll in the C:\TK442 \Chapter8\Code\Lab1 directory.

-

Return to Microsoft SQL Server Management Studio, and execute the following command from the new query window for the AdventureWorks database:

CREATE ASSEMBLY TK442Chapter8Lab1 FROM 'c:\TK442\Chapter8\Code\Lab1\TK442Chapter8Lab1.dll' WITH PERMISSION_SET = SAFE

-

Ensure that the query executed successfully. Replace the code in step 10 with the following code:

CREATE FUNCTION GetProductID(@prodNumber nvarchar(25)) RETURNS int EXTERNAL NAME TK442Chapter8Lab1.TK442Chapter8.GetProductID

-

Ensure that the query executed successfully. Replace the code in step 10 with the following code:

Select dbo.GetProductID('AR-5381') -

Ensure that the previous query returns a value of 1.

EAN: N/A

Pages: 162