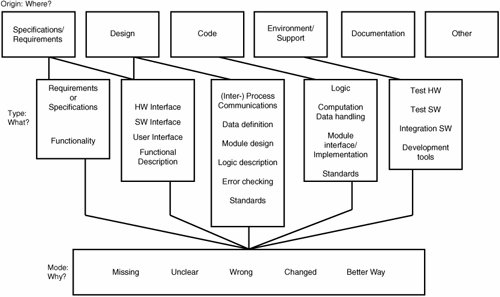

Software Failure Modes and Their Sources

Machines fail because of wear between parts, environmental hazards, metal fatigue, corrosion and rust, and operator misuse. Systems fail because of unit failures, communication glitches, and operator misuse or configuration errors. The failure modes for software are quite different, because software does not wear out or age as mechanical or even electrical components do. Most software failures are actually written into the script, just as a villain is written into a melodrama. Unlike the villain, however, they are usually unconsciously programmed side effects that produce unintended consequences.

Over the past 40 years, programming language and operating system designers have given a great deal of attention to eliminating opportunities for programmers and users to provoke unintended side effects. The structured programming movement of the 1970s and the removal or strong discouragement of the GOTO statement in modern programming languages have been a great help. More recently, object-oriented programming languages, object-oriented design technology, and integrated program development environments have made a huge difference.

An excellent paradigm for software failure opportunities and the ultimate sources of these failures is given in Robert Grady's book Successful Software Process Improvement. Figure 13.4 is adapted from his schema and description.[16] A schema like this makes it possible to identify the most likely source of a program failure, or at least to narrow it down to a few possibilities. The apparent failure may show up as the program doing nothing when it was supposed to do something, doing the wrong thing, losing control, shifting the process sequence to the wrong place, or simply halting. Today's operating systems can often give programmers and testers valuable clues for identifying the failure mode and its potential source(s). Early operating systems were not very helpful.
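The point that software failures are usually unconsciously programmed side effects can be illustrated with a small Python sketch. This is our own example, not one from Grady, and the function names are hypothetical. Python evaluates a default argument once, when the function is defined, so a mutable default list silently carries state from one call to the next, even though each call looks independent to the person who wrote it.

```python
def add_reading(value, log=[]):
    # The default list is created once, when the function is defined,
    # and is then shared by every call that omits `log`.
    log.append(value)
    return log


def add_reading_fixed(value, log=None):
    # A fresh list is created on each call unless the caller supplies one.
    if log is None:
        log = []
    log.append(value)
    return log


first = add_reading(1)
second = add_reading(2)
print(first, second)  # [1, 2] [1, 2]: both calls returned the same shared list
print(add_reading_fixed(1), add_reading_fixed(2))  # [1] [2]
```

Nothing in the buggy version is syntactically wrong; the side effect is written into the program without the programmer intending it, which is why it tends to surface only later as "doing the wrong thing."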
Figure 13.4. Mapping Failure Modes to Their Sources. Adapted with permission from R. B. Grady, Successful Software Process Improvement (Upper Saddle River, NJ: Prentice-Hall, 1997), p. 199.

One of the first operating systems, IBJOB on the IBM 7090 computer, simply halted when it lost program control. It sent the following cryptic message to the line printer:

All this message told the user was that the program had not come to a normal stopping point. The user would then spend the rest of the night poring over a machine-language dump of the program's state at the moment it halted, hunting for an unknown failure. The schema shown in Figure 13.4 traces each failure mode back to its source in one of four program development stages: specification/requirements, design, coding/implementation, or environment/support. Its failure modes fall into five categories:

Grady's schema allows the FMEA analyst to work backward through the diagram, from the failure mode, to the type of error, to its most likely source. In our experience, the largest source of failure in new programs is the high-level designer's misunderstanding of the functional specification. Second is the detailed designer's choice of algorithm (including data definitions and data handling) to compute a result. Third is the implementer's or coder's expression of the algorithm in the programming language as implemented on the host computer hardware. Experience with Grady's paradigm has shown it to be very effective for analog electronic systems and software-controlled digital electronic systems. The most subtle failures, and the most difficult to discover and correct, are those that are intermittent, either in time or over some range of values of a data element. This is true of electrical systems, whether analog or digital, but such failures are less common in purely mechanical systems.
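A failure that is intermittent over a range of data values can be sketched in a few lines of Python. This is our own illustration, not Grady's; the function names are hypothetical. The code converts dollar amounts held as binary floating-point numbers to integer cents. The truncating version works for most amounts and fails only for particular values, so a test that samples a few convenient inputs can easily miss it. Sweeping the whole data range is one way to expose it.

```python
def to_cents_buggy(amount):
    # Truncation: a product such as 1.15 * 100 evaluates to 114.99999999999999,
    # so int() silently drops a whole cent for some amounts but not others.
    return int(amount * 100)


def to_cents_fixed(amount):
    # Rounding to the nearest integer absorbs the tiny binary representation error.
    return round(amount * 100)


# Sweep the data range $0.00 to $9.99 and record every value that fails.
failures = [c for c in range(1000) if to_cents_buggy(c / 100) != c]

print(to_cents_buggy(1.15), to_cents_fixed(1.15))  # 114 115
print(len(failures) > 0, 115 in failures)          # True True
print(all(to_cents_fixed(c / 100) == c for c in range(1000)))  # True
```

Amounts such as $0.00 and $1.00 convert correctly, which is exactly what makes this kind of defect look intermittent: it depends on the value, not on the code path.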
