Backup Strategy

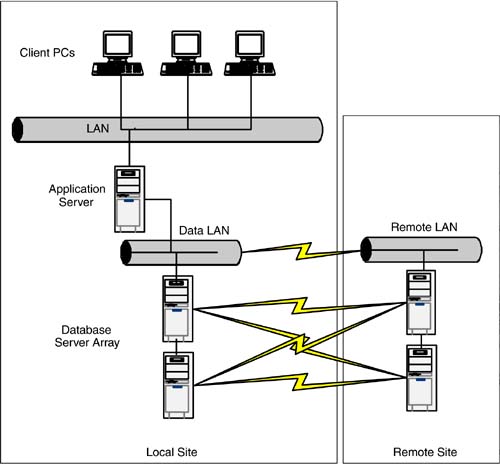

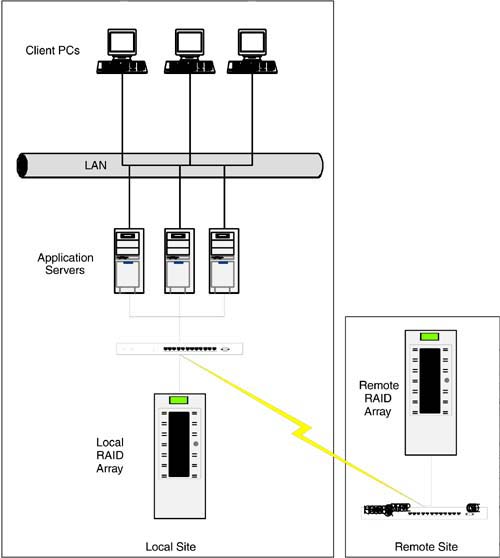

You can purchase new hardware, build new buildings, and even hire new employees, but the data stored on the old equipment cannot be bought at any price. You must have a copy that the disaster did not affect, preferably one that can be easily restored to the new equipment. The following sections explore several options that help ensure such a copy of your data survives a disaster at the primary facility.

Offsite Tape Backup Storage

This option calls for transporting backup tapes made at the primary computer facility to an offsite location. The choice of location is important: you want the backups to survive a disaster, but you also need them to be quickly available.

This option has some drawbacks. First, it takes time to move the backup tapes offsite. A disaster striking at the wrong moment may wipe out all data changes made since the last offsite backup. There is also the time, expense, and effort of transporting the tapes, and tapes can be physically damaged or lost in transit.

Finally, there is a risk that all of this ongoing effort may prove worthless in a disaster. Completely recovering a system from a tape backup has always been an intricate, time-consuming process that organizations rarely undertake unless necessary, and the added difficulty of recovering multiple systems has alarmed industry experts. Citing failure rates of 60 to 80 percent, experts have long advocated testing the recovery process whenever the backup process changes. Few organizations have the resources for even one full-scale test, let alone a test every time the backup changes. As a result, most recovery processes go untested until a disaster strikes.

Some organizations contract with disaster recovery companies to store their backup tapes in hardened storage facilities, such as old salt mines or caverns deep within a mountain.
While this certainly makes data storage more secure, regularly transporting the data to the storage facility can become quite costly. Quick access to the data can also be an issue if the storage facility is far from your recovery facility.

Automated Offsite Backup

This option places a backup system at a site away from the primary computer facility, with a communications link connecting it to the primary facility. The remote backup system copies operating system data, application programs, and databases at designated times from the primary facility's servers or SAN. Most installed backup systems use tape libraries with some degree of robotic tape handling. Optical and magnetic disk-based systems used to be the high-priced option for those wanting higher speeds or a permanent backup, but falling prices now let business needs, rather than cost alone, drive the choice of media.

Most of the time, the communications link is the primary bottleneck in remote backup implementations. The fastest options use peripheral, channel-based communication, such as SCSI, SSA, ESCON/FICON, and Fibre Channel over fiber-optic cable. Communications service providers typically have dark fiber, but they make their money selling widely used provisioned services, so channel-based services usually require your own private network infrastructure. You will pay for the fiber installation either up front or through a long-term lease, and these costs tend to limit you to campus backbone networks.

Because channel-based networking is so costly, front-end network-based (Ethernet, TCP/IP, etc.) backup solutions are the volume leader in the market. In exchange for some speed, they let you leverage your existing network infrastructure and expertise. Run outside peak hours to avoid degrading performance, the backup uses capacity that sits idle in most private networks.
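Because the communications link is usually the bottleneck, a quick arithmetic check shows whether a nightly transfer fits the off-peak window. The sketch below is a rough estimate under stated assumptions, not a sizing tool. It assumes decimal gigabytes and an assumed 70 percent of nominal link bandwidth left after protocol overhead. The function names `transfer_hours` and `fits_window` are hypothetical. Real throughput also depends on compression, the backup software, and competing traffic.

```python
"""Rough check: does a nightly remote backup fit in the off-peak window?

Illustrative assumptions (not from the text): the link is the bottleneck,
and only a fraction ("efficiency") of its nominal rate is usable.
"""


def transfer_hours(data_gb: float, link_mbps: float, efficiency: float = 0.7) -> float:
    """Hours needed to move data_gb (decimal) gigabytes over a link_mbps link."""
    if link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    bits = data_gb * 8 * 1000**3                   # gigabytes -> bits
    usable_bps = link_mbps * 1000**2 * efficiency  # assumed usable bits per second
    return bits / usable_bps / 3600                # seconds -> hours


def fits_window(data_gb: float, link_mbps: float, window_hours: float,
                efficiency: float = 0.7) -> bool:
    """True if the whole transfer completes within the off-peak window."""
    return transfer_hours(data_gb, link_mbps, efficiency) <= window_hours


if __name__ == "__main__":
    hours = transfer_hours(200, 100)
    print(f"200 GB over 100 Mbps: {hours:.1f} h; "
          f"fits an 8 h window: {fits_window(200, 100, 8)}")
```

Under these assumptions, 200 GB over a 100 Mbps link takes about 6.3 hours. That fits an 8-hour overnight window but not a 6-hour one. The same data over Gigabit Ethernet needs well under an hour, which is why link speed tends to dominate remote backup design.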

Remote backup does not provide the up-to-the-second updates available from the next two options. It does, however, provide a convenient way to take backups and store them offsite at any time of the day or night. Another major advantage is that backups can be made from mainframes, file servers, distributed (UNIX-based) systems, and personal computers. Although such a system is expensive, it is not prohibitively so. A Windows-only solution can be moderately priced, depending on the exact features, and typically includes a general backup strategy that scales well as you install additional servers. Incremental costs include occasional upgrades to larger tape drives and faster communications.

Split-Site Server Array

Based on OLTP specifications, a server array maintains the integrity of the client's business transaction when a server within the array fails. For example, an Internet shopping application may let you place several items in your shopping cart before buying them. In a server array, a server failure goes unnoticed by the shopper. In a failover server cluster, the contents of the shopping cart disappear unless the application includes additional client-side software to conceal the server failure. This is shown in Figure A-1.

Figure A-1. Split-server site configuration.

Splitting the server array by location further reduces the risk of service and data loss. The two halves communicate within the array over fiber-optic cabling (the campus backbone network would be suitable), while an additional network connection provides client access. The server array appears to the client as one server. Behind the scenes, it uses all of the purchased resources to deliver transaction throughput exceeding that of a single server. All the while, it updates both copies of the data in real time: one in the primary facility and the other at the remote site.
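The property that distinguishes the split-site array is that an update exists at both sites before it is acknowledged. A toy in-memory model can illustrate it. This is a hedged sketch, not how any particular server-array product works. `Site` and `SplitSiteArray` are hypothetical names, each "site" is just a dictionary, and resynchronizing a site after it comes back online is deliberately left out.

```python
"""Toy model of a split-site server array with synchronous, two-site updates.
All names are hypothetical and for illustration only."""


class Site:
    def __init__(self, name: str):
        self.name = name
        self.data: dict[str, list[str]] = {}  # session id -> cart items
        self.up = True


class SplitSiteArray:
    """Presents two sites to the client as a single server."""

    def __init__(self, primary: Site, remote: Site):
        self.sites = [primary, remote]

    def _live_sites(self) -> list[Site]:
        live = [s for s in self.sites if s.up]
        if not live:
            raise RuntimeError("no site available")
        return live

    def add_to_cart(self, session: str, item: str) -> None:
        # Synchronous replication: update every live copy before returning,
        # so an acknowledged item is never held by only one site.
        for site in self._live_sites():
            site.data.setdefault(session, []).append(item)

    def cart(self, session: str) -> list[str]:
        # Any surviving site can answer; the shopper never sees which one.
        return list(self._live_sites()[0].data.get(session, []))


if __name__ == "__main__":
    primary, remote = Site("primary"), Site("remote")
    array = SplitSiteArray(primary, remote)
    array.add_to_cart("s1", "book")
    array.add_to_cart("s1", "lamp")
    primary.up = False  # simulate losing the primary facility
    print(array.cart("s1"))
```

Taking the primary site offline after two items are added leaves the cart intact, because the remote copy was updated in step with the primary. A failover cluster that kept the cart only in one server's memory would lose it.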

Distance limitations and the price of dark fiber make a split-site server array a good fit in a campus setting, between buildings.

Remote Disk Mirroring

Remote disk mirroring extends RAID Level 1 to provide 100 percent duplication of data at two different sites. A disk subsystem at a site away from the primary facility communicates with the local disk subsystem, and each subsystem records a copy of the data for fault-tolerant operation. If disaster strikes the primary facility, you have an almost up-to-the-second copy of the data at the remote site. Remote disk mirroring deals with the bandwidth bottleneck between the two locations by buffering peak demand within the mirroring process. The buffered data could be lost in a disaster unless it is saved on the original server during the outage. A basic remote disk mirroring configuration is shown in Figure A-2.

Figure A-2. Remote disk mirroring configuration.

You can simplify the recovery process by locating the remote disk subsystem at your recovery site. This option does not require an entire computer system at the remote site, just the disk subsystem. It originated in the mainframe disk market, but distributed SANs are making it available to those using distributed architectures.

Industry experts acknowledge that the remote disk mirror significantly reduces the risk of data loss in a disaster. Unlike a tape-based backup, the remote disk mirror is almost a duplicate of the original. Instead of recovering data, the recovery effort focuses on restoring the primary site's half of the SAN and the computer systems lost in the disaster. Completely recovering each computer system remains an intricate, time-consuming process, but contract personnel can assist in these efforts. Industry experts advocate periodically testing the accessibility of the remote disk mirror after disconnecting it from the primary site. Most organizations can devote a couple of hours to these tests to ensure the safety of their data.
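Remote disk mirroring buffers peak demand behind the inter-site link, and that buffer is exactly what is at risk in a primary-site disaster. A queue that drains at the link's rate models both. In this sketch, `AsyncMirror` is a hypothetical class, and the link capacity is an assumed number of block writes per time step. Local writes complete immediately. Anything still queued when the primary site is lost never reaches the remote mirror.

```python
"""Toy model of an asynchronous remote disk mirror with a write buffer.
Names and the per-step link capacity are illustrative assumptions."""
from collections import deque


class AsyncMirror:
    def __init__(self, link_capacity: int):
        self.local: dict[int, bytes] = {}   # primary disk subsystem
        self.remote: dict[int, bytes] = {}  # remote disk subsystem
        self.pending: deque[tuple[int, bytes]] = deque()  # buffered peak demand
        self.link_capacity = link_capacity  # writes the link moves per step

    def write(self, block: int, data: bytes) -> None:
        self.local[block] = data            # local write completes at once
        self.pending.append((block, data))  # queued for the remote copy

    def tick(self) -> None:
        """Send up to link_capacity buffered writes to the remote site, in order."""
        for _ in range(min(self.link_capacity, len(self.pending))):
            block, data = self.pending.popleft()
            self.remote[block] = data

    def disaster_at_primary(self) -> int:
        """Lose the primary site; return how many buffered writes were lost."""
        lost = len(self.pending)
        self.local.clear()
        self.pending.clear()
        return lost


if __name__ == "__main__":
    m = AsyncMirror(link_capacity=3)
    for i in range(10):  # a burst of peak demand
        m.write(i, b"x")
    m.tick()
    m.tick()
    print("lost:", m.disaster_at_primary(), "remote blocks:", sorted(m.remote))
```

A burst of ten writes over a link that moves three per step leaves four writes unsent after two steps. A disaster at that moment loses exactly those four, while the remote copy holds the first six. The rest of the data is the "almost up-to-the-second" copy the text describes.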

Final Observations

Market trends indicate that network-based storage is replacing server-embedded storage. The network storage market offers both SANs and NAS. NAS devices connect to the front-end network (Ethernet, TCP/IP, etc.) as a replacement for a file server. A NAS device typically runs a slim microkernel specialized for handling file-sharing I/O requests such as NFS (UNIX), SMB/CIFS (DOS/Windows), and NCP (NetWare). SANs can be broken into two components:

Today, a SAN can cost two to three times the price of a NAS device for the same amount of disk space. This price reflects the number of additional components involved:

For the added cost, you receive higher transfer rates for the device-level, block-mode transfers typical of disk devices. These speeds easily sustain the throughput required by an active relational database management system such as Oracle or SQL Server.

Current storage network implementations have other deficiencies. There are a limited number of Fibre Channel component vendors. Even so, interoperability between different vendors' hardware components is not assured. Fibre Channel networking experience comes at a premium, and those who have it typically attest to higher administrative requirements than for Ethernet. Extending Fibre Channel beyond the dark-fiber campus requires bridging to IP. Fibre Channel over IP protocols extend the Fibre Channel-based SAN to metropolitan networks, but they also add infrastructure costs.

The evolution of Ethernet into the Gigabit and 10 Gigabit range prompted a great deal of strategic thinking in the SAN market. Why not eliminate Fibre Channel (FC-AL) in favor of a Gigabit Ethernet infrastructure? Most organizations considering a SAN already have an investment in Ethernet technology and a knowledge base for working with it. An Ethernet-based storage network would use the same Ethernet switches as your front-end network, taking advantage of VLAN technology to ensure quality of service. The jump to a metropolitan network uses the same technologies as your existing Internet access.

A new open standard, Internet SCSI (iSCSI), aims to replace Fibre Channel with Gigabit Ethernet. Technically, iSCSI has been relatively easy to implement, and a number of vendors offer components and management software. For example, you can buy a Gigabit Ethernet SAN infrastructure that lets you convert your existing external SCSI or Fibre Channel storage into a SAN. This solution has demonstrated the viability of iSCSI-based SANs using first-generation prototype iSCSI host adapters and various types of storage.
As second-generation iSCSI host adapters and storage become common, anyone with a Gigabit Ethernet switch and the right storage management software will be able to create a SAN. Extend the Gigabit Ethernet infrastructure to a remote location, and you have the desired remote disk mirroring.

Pages: 108