The WebLogic JMS Provider

In this section, we will take a detailed look at the WebLogic JMS provider implementation. As you will see, WebLogic JMS not only provides a messaging system that fully implements the JMS specification but also provides other configuration and programming options that go well beyond what JMS defines to provide enterprise-class messaging features. We will not attempt to provide comprehensive coverage of WebLogic JMS, but will instead focus our discussions on the details that we feel are most important for designing and building robust messaging applications with WebLogic JMS. For more comprehensive coverage of JMS, we refer you to the WebLogic JMS documentation at http://edocs.bea.com/wls/docs81/jms/index.html.

Understanding WebLogic JMS Servers

WebLogic JMS introduces the concept of a JMS server. A JMS server defines a set of JMS destinations and any associated persistent store that reside on a single WebLogic Server instance. A WebLogic Server instance can host zero or more JMS servers and can serve as a migration target for zero or more JMS servers. All destination names must be unique across every JMS server in a WebLogic Server instance. If you are deploying a JMS server into a clustered server, the destination names must be unique across every JMS server deployed to any member of the cluster.

When you are using WebLogic Clustering, a JMS server represents the unit of migration when failing over a set of destinations from one WebLogic Server instance to another. By associating destinations with a JMS server, WebLogic JMS makes it easier to migrate a set of destinations from one WebLogic Server instance to another. We will talk more about JMS server migration in the next section.

Clustering WebLogic JMS

WebLogic JMS clustering is built on top of the basic WebLogic Server clustering mechanisms. It provides a JMS application with the features you would expect from a clustered messaging infrastructure. In this section, we will take a closer look at WebLogic JMS clustering, which will include discussions on the following features of clustering:

- Location transparency provides the application with a uniform view of the messaging system by hiding the physical locations of JMS objects.

- Connection routing, load balancing, and failover provide the application with a single, logical connection into the messaging system.

- Distributed destinations provide a single, logical destination distributed across multiple servers in the cluster to support both load balancing and high availability.

- JMS server migration allows you to migrate a set of destinations to another server in the event of server failure or maintenance outage.

Location Transparency

WebLogic JMS registers managed objects such as connection factories and destinations in JNDI. Because WebLogic Server provides JNDI replication across a cluster, an application can simply look up the objects by their JNDI name, regardless of which servers in the cluster are hosting the objects. For example, applications can access a JMS destination without knowing which WebLogic Server instance hosts the JMS server that holds the destination. In the same way, you can create a JMS connection and session without having to worry about what servers are in the cluster or where the destinations you are using reside.

Connection Routing, Load Balancing, and Failover

When deployed to a cluster, WebLogic JMS connection factories provide transparent access to all JMS servers in the cluster. This means that any JMS connection you create using one of these factories will have access to every JMS destination across the cluster. How this works depends on where the application is located in relation to the WebLogic Server cluster.

For client applications not running in a WebLogic Server instance, WebLogic JMS defaults to using a simple round-robin algorithm to distribute connection requests across all running servers in the cluster on which the connection factory is deployed. The algorithm's state, however, is tied to each client's copy of the connection factory. Most clients create only one connection with their connection factory, but the overall load distribution is still relatively uniform because the load-balancing algorithm is initialized by randomly selecting the initial server. Connection factories provide failover by routing connection requests around failed servers.
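The client-side behavior described above can be pictured with a small, self-contained model. This is only a sketch under stated assumptions: `ConnectionFactoryModel` and its method names are hypothetical and are not part of WebLogic JMS, and the real routing logic is internal to the product. The model keeps round-robin state per factory copy, starts at a random server, and skips servers that are marked as failed.

```java
import java.util.List;
import java.util.Random;
import java.util.Set;

/** Simplified model of one client's copy of a connection factory (not WebLogic code). */
final class ConnectionFactoryModel {
    private final List<String> servers;   // servers on which the factory is deployed
    private int next;                     // round-robin state, private to this copy

    ConnectionFactoryModel(List<String> servers, Random random) {
        this.servers = servers;
        // Each copy of the factory starts at a randomly chosen server.
        this.next = random.nextInt(servers.size());
    }

    /** Returns the server for the next connection request, skipping failed servers. */
    String createConnection(Set<String> failedServers) {
        for (int attempts = 0; attempts < servers.size(); attempts++) {
            String candidate = servers.get(next);
            next = (next + 1) % servers.size();
            if (!failedServers.contains(candidate)) {
                return candidate;
            }
        }
        throw new IllegalStateException("no running server hosts the connection factory");
    }
}
```

Because the starting position is random for each copy, many clients that each create a single connection still spread out across the servers in this model.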

Once the client creates a connection, WebLogic JMS routes all JMS operations over that same connection to any WebLogic Server in the cluster. This enables WebLogic JMS clusters to support a large number of concurrent clients but does expose the client to failures if the server to which it is connected fails. To handle this problem gracefully, clients must use the standard JMS mechanism to register an object that implements the javax.jms.ExceptionListener interface with the connection by calling the setExceptionListener() method. To recover from a failed connection, you will need to use the connection factory to create a new connection and any other objects that were associated with the failed connection object.

Another interesting failure scenario occurs when the JMS connection is still operational but the WebLogic Server instance on which a particular JMS destination resides fails. For example, the client's JMS connection is talking with server1, but it is asynchronously consuming messages from a destination on server2. If the client is asynchronously consuming messages using one of the failed destinations, then you need some way to notify the client application that the MessageConsumer is no longer valid. Unfortunately, the JMS specification does not specify how to handle such problems.

WebLogic JMS provides an extension that allows you to register objects that implement the javax.jms.ExceptionListener interface on a JMS session. To use this extension, the application needs to cast the session to a weblogic.jms.extensions.WLSession type in order to invoke the setExceptionListener() method:

    WLSession wlSession = (WLSession)queueSession;
    wlSession.setExceptionListener(new MyExceptionListener());

Whenever the destination failure scenario happens, the exception listeners will receive a callback with a weblogic.jms.extensions.ConsumerClosedException event for any affected consumers. At this point, the JMS consumers for destinations not on the failed server are okay, the JMS session is okay, and the JMS connection is okay. If the application has received but not acknowledged any messages from the failed server, though, the application cannot acknowledge these messages. Unfortunately, this means that the only thing left to do is to call the session's recover() method. Invoking this method will recover all unacknowledged messages for the entire session, which may cause all of the recovered messages to be redelivered, even for consumers that did not fail. To mitigate this scenario, as well as to maximize concurrency, you should use one session per message consumer.

| Best Practice | Client applications consuming messages asynchronously should register exception listeners on their JMS sessions using the weblogic.jms.extensions.WLSession extension. Because recovering from this state requires invoking the recover() method on the session, you should use a separate session for each consumer to minimize the impact of the failure of a destination's server. |
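To see why one session per consumer limits the damage, consider the following toy model. It is a sketch only: `SessionModel` is a hypothetical class that tracks unacknowledged messages, not a JMS or WebLogic type, and it assumes that recovery simply hands back every unacknowledged message in the session.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of a JMS session that tracks unacknowledged messages per consumer. */
final class SessionModel {
    private final List<String> unacknowledged = new ArrayList<String>();

    /** Records a message delivered to one of this session's consumers. */
    void deliver(String consumer, String messageId) {
        unacknowledged.add(consumer + ":" + messageId);
    }

    /** Models recover(): every unacknowledged message in the session is redelivered. */
    List<String> recover() {
        return new ArrayList<String>(unacknowledged);
    }
}
```

If consumers A and B share one `SessionModel`, recovering after B's destination fails also returns A's pending messages. If each consumer has its own session, recovering B's session leaves A untouched.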

For server-side applications, WebLogic JMS avoids extra network traffic by processing connection requests locally wherever possible. If the connection factory is not deployed locally, then the server-side application will connect to one of the servers where the connection factory exists. In most applications, it is very desirable to distribute the load across all servers in a cluster. There are multiple ways to achieve this. In almost all situations, it is best to distribute the load as it enters the cluster and keep all processing of a particular message within the local server to which the message was delivered. Therefore, we recommend deploying your applications and JMS resources homogeneously across the cluster. Because any particular destination must reside on only one server in the cluster, we need to use distributed destinations to accomplish this homogeneous distribution.

| Best Practice | For JMS applications that use all servers in the cluster, deploy your connection factories to all servers in the cluster. For applications that use only a subset of the cluster for JMS, deploy the connection factories on the WebLogic Server instances where the JMS servers reside. Following this rule will help eliminate unnecessary routing of JMS requests through servers with no JMS server deployed. |

Distributed Destinations

Distributed destinations give WebLogic JMS the ability to make JMS destinations highly available and load balanced. To create a distributed destination, simply map multiple member JMS destinations to a single, logical, distributed JMS destination. JMS applications use distributed destinations just like any other JMS destination. How WebLogic JMS routes application requests to the underlying member JMS destinations depends on many different factors, such as where the application resides (in a remote client or in a server), what the application is doing (sending messages or receiving messages), what type of destination is used (queue or topic), and how many consumers there are.

WebLogic JMS load balances message producers to a distributed destination on a request-by-request basis. This means that, all other things being equal, the messages produced by a client will be evenly distributed across the member destinations of the distributed destination. For message consumers, WebLogic JMS load balances them at creation time, thereby pinning each consumer to a member destination. WebLogic JMS also looks at several other factors when load balancing producers and consumers, such as the number of consumers for a member destination, the location of the member destinations, the availability of a persistent store (if the message delivery mode is persistent), and the current transaction context. Any of these other factors can cause WebLogic JMS to alter its default load-balancing policy for a particular message or destination. We will spend the rest of this section explaining how WebLogic JMS routes application requests that use distributed destinations.

One important thing to note: WebLogic JMS is a robust, enterprise-level messaging system. As such, many different configuration options can lead to many different situations that alter the behavior of message routing. For example, if a message producer is sending a persistent message to a distributed destination where not all member destinations have an associated persistent store, WebLogic JMS will do its best to route the message to a destination with a persistent store. There are so many of these types of situations and rules that we cannot possibly cover all of them here. In general, we highly recommend that you deploy distributed destinations homogeneously across the cluster using similar configurations for each participating JMS server and its associated destinations. Therefore, we will focus on describing the behavior of distributed destinations when deployed in homogeneous configurations. For more complete information, please refer to the WebLogic Server documentation at http://edocs.bea.com/wls/docs81/jms/implement.html.

| Best Practice | When you are deploying distributed destinations, always use similar settings for every JMS server and member destination in the cluster. |

Producing Messages to a Distributed Queue

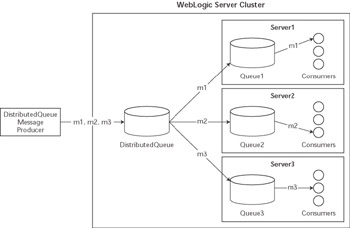

Figure 9.2 illustrates the producer's perspective of distributed queue operation using the default load-balancing configuration. There are three WebLogic Server instances running in a cluster, each hosting a member destination (Queue1, Queue2, and Queue3) of the distributed queue, DistributedQueue. Each message sent by the producer is load balanced across the member destinations. Because this is a point-to-point messaging model, only one consumer will receive each message.

Figure 9.2: Sending messages to a distributed queue.

When a producer sends messages to a distributed queue, WebLogic JMS first determines how to load balance the send() requests. You can control this with the connection factory's Load Balancing Enabled checkbox, which is enabled by default, and the distributed destination's Load Balancing Policy, which is round-robin by default. If load balancing is enabled, WebLogic JMS load balances each producer on every send() call using the algorithm specified by the load-balancing policy. If it is disabled, WebLogic JMS load balances only each producer's first call to send(); all subsequent messages from a particular producer will be sent to the same member destination.
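The effect of the Load Balancing Enabled setting can be sketched with a small model. The class below is hypothetical and deliberately ignores every other routing factor; it assumes a plain round-robin policy and shows only the difference between balancing on every send() and balancing on the first send() alone.

```java
import java.util.List;

/** Toy model of how one producer picks a member queue for each send(). */
final class ProducerRoutingModel {
    private final List<String> members;
    private final boolean loadBalancingEnabled;
    private int next;          // round-robin position
    private String pinned;     // member chosen on the first send when balancing is off

    ProducerRoutingModel(List<String> members, boolean loadBalancingEnabled) {
        this.members = members;
        this.loadBalancingEnabled = loadBalancingEnabled;
    }

    /** Returns the member destination that receives the next message. */
    String send() {
        if (!loadBalancingEnabled && pinned != null) {
            return pinned;                       // reuse the first choice
        }
        String chosen = members.get(next);
        next = (next + 1) % members.size();
        if (!loadBalancingEnabled) {
            pinned = chosen;                     // remember it for later sends
        }
        return chosen;
    }
}
```

With balancing enabled, four sends over three members visit Queue1, Queue2, Queue3, and then Queue1 again. With balancing disabled, every send after the first returns the same member.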

WebLogic JMS uses several other heuristics that override the default load-balancing behavior; the heuristics are applied in this order:

- Persistent store availability means that WebLogic JMS will prefer destinations whose underlying JMS server has a persistent store for delivering persistent messages.

- Transaction affinity means that WebLogic JMS will try to send all messages associated with a particular transaction context to the same JMS server to minimize the number of JMS servers involved in the transaction.

- Server affinity means that WebLogic JMS will try to use a destination in the local process. WebLogic JMS will use member destinations in the WebLogic Server instance in which the producer/consumer is running or to which the producer/consumer's JMS connection is connected. Although this is the default behavior, you can disable this behavior by deselecting the connection factory's Server Affinity Enabled checkbox.

- Zero consumer queues means that WebLogic JMS will do its best to avoid sending messages to member queues with zero consumers, unless all member queues have zero consumers. Because of the transient nature of some message consumers, it is still possible for messages to end up on queues with zero consumers.

Figure 9.2 portrays a conceptual view of how a distributed queue works. In reality, all routing to a member queue occurs inside a WebLogic Server process. Each producer has a JMS connection attached to one WebLogic Server in the cluster. By default, server affinity causes WebLogic JMS to attempt to send the messages from a particular producer to a member destination on the server to which the producer's JMS connection is attached. The message may be routed to a different destination if server affinity is disabled, there is no local member destination, or one of the other heuristics takes precedence.
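One way to picture how the heuristics interact is as a series of filters applied in order, where a filter that would eliminate every candidate is skipped. The sketch below is an assumption about how to model this, not WebLogic's actual algorithm; `MemberQueue` and `SendHeuristicsModel` are hypothetical names. The load-balancing policy would then choose among whatever candidates remain.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy description of one member queue of a distributed queue. */
final class MemberQueue {
    final String name;
    final String server;
    final boolean hasPersistentStore;
    final int consumerCount;

    MemberQueue(String name, String server, boolean hasPersistentStore, int consumerCount) {
        this.name = name;
        this.server = server;
        this.hasPersistentStore = hasPersistentStore;
        this.consumerCount = consumerCount;
    }
}

final class SendHeuristicsModel {
    /**
     * Narrows the candidate members heuristic by heuristic. A heuristic that would
     * remove every candidate is skipped. transactionServer may be null.
     */
    static List<MemberQueue> candidates(List<MemberQueue> members, boolean persistentMessage,
            String transactionServer, String localServer, boolean serverAffinityEnabled) {
        List<MemberQueue> current = members;
        for (int step = 0; step < 4; step++) {
            List<MemberQueue> narrowed = new ArrayList<MemberQueue>();
            for (MemberQueue m : current) {
                boolean keep;
                switch (step) {
                    case 0:  // persistent store availability
                        keep = !persistentMessage || m.hasPersistentStore;
                        break;
                    case 1:  // transaction affinity
                        keep = transactionServer == null || m.server.equals(transactionServer);
                        break;
                    case 2:  // server affinity
                        keep = !serverAffinityEnabled || m.server.equals(localServer);
                        break;
                    default: // zero consumer queues
                        keep = m.consumerCount > 0;
                        break;
                }
                if (keep) {
                    narrowed.add(m);
                }
            }
            if (!narrowed.isEmpty()) {
                current = narrowed;
            }
        }
        return current;
    }
}
```

In this model, a producer connected to server1 with server affinity enabled keeps sending to the local member even when that member has no consumers, which matches the default behavior described above.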

| Tip | By default, server affinity causes message producers sending messages to a distributed queue always to send to the member queue in the JMS server to which the producer is connected. To enable a producer to load balance messages across member destinations, disable server affinity on the connection factory the producer is using. Of course, this load balancing will still be subject to other heuristics that might skew the distribution of messages. |

Another important feature of distributed queues is message forwarding. The zero consumer queues heuristic will try to prevent routing point-to-point messages to a member destination with no consumers if there are other member destinations with consumers. It is still possible, though, for messages to end up on a queue with no consumers if, for example, a consumer exits after the message is sent but before it is received. WebLogic JMS provides a forwarding mechanism by which messages can be forwarded from a member queue with no consumers to a member queue with consumers after a specified amount of time. The distributed queue's Forward Delay attribute controls the number of seconds WebLogic JMS will wait before trying to forward the messages. By default, the value is set to -1, which means that forwarding is disabled.
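The forwarding rule can be expressed as a single predicate. This is a minimal sketch with hypothetical names; it assumes the delay is measured from the moment the member queue was left without consumers, which is an interpretation for illustration rather than a documented detail.

```java
/** Toy model of the Forward Delay decision for one member queue. */
final class ForwardDelayModel {
    /**
     * @param localConsumers          consumers currently attached to this member queue
     * @param remoteConsumers         consumers attached to the other member queues
     * @param secondsWithoutConsumers how long this member queue has had no consumers
     * @param forwardDelaySeconds     configured Forward Delay; -1 disables forwarding
     */
    static boolean shouldForward(int localConsumers, int remoteConsumers,
            long secondsWithoutConsumers, long forwardDelaySeconds) {
        if (forwardDelaySeconds < 0) {
            return false;                 // forwarding disabled (the default)
        }
        return localConsumers == 0
                && remoteConsumers > 0
                && secondsWithoutConsumers >= forwardDelaySeconds;
    }
}
```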

Consuming Messages from a Distributed Queue

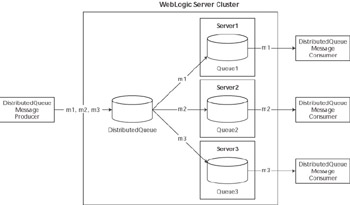

When an application creates a message consumer for a distributed queue, WebLogic JMS associates the consumer with one of the member destinations of the distributed queue. From that point on, the consumer is pinned to that member destination. Figure 9.3 illustrates the operation of a distributed queue from the consumer's perspective. In this figure, the three DistributedQueue consumers have each been associated with one of the member destinations. Each consumer will be eligible to receive only messages routed to its own member destination.

Figure 9.3: Consuming messages from a distributed queue.

When consumers are created, WebLogic JMS associates the consumer with a member destination by load-balancing the consumers across the available member destinations. Although this load balancing is done only once for each consumer, the mechanisms are very similar to those used for load balancing messages sent to a distributed queue. All other things being equal, WebLogic JMS will use the distributed destination's load-balancing policy to distribute the consumers across all member destinations. Just as before, WebLogic JMS uses heuristic optimizations that will override the default load-balancing mechanism. If server affinity is enabled, WebLogic JMS will try to associate the consumer with a local member destination, as described previously. WebLogic JMS will also try to associate new consumers with member destinations that currently have zero consumers, unless server affinity prevents this.

When a WebLogic Server hosting a member of a distributed queue fails, all unconsumed persistent messages remain on the failed server's queue. These messages will not be available until either the WebLogic Server instance is restarted or the JMS server containing the member queue is migrated to another WebLogic Server instance. We will talk more about JMS server migration later in this section. If the messages are non-persistent, all of the unconsumed messages will be lost.

WebLogic JMS distributed queue consumers are essentially the same as nondistributed queue consumers once the association between the consumer and the member destination has been established. If the WebLogic Server hosting the member queue fails, WebLogic JMS throws a javax.jms.JMSException to all synchronous consumers. For asynchronous consumers, the behavior varies depending on whether the server hosting the destination is also the one to which the consumer's JMS connection is attached. If the server is the one to which the JMS connection is attached, the connection's ExceptionListener is notified and the application will have to recreate the JMS connection and all of its associated objects (sessions, consumers, producers, etc.). The more interesting case occurs when the consumer is using one server for the JMS connection and another server for the destination. As we discussed in the Connection Routing, Load Balancing, and Failover section, you can register an ExceptionListener with the WebLogic JMS session to receive notification when the destination's server fails but the connection's server does not. Make sure you understand the caveats to this approach, which we have already discussed.

Producing Messages to a Distributed Topic

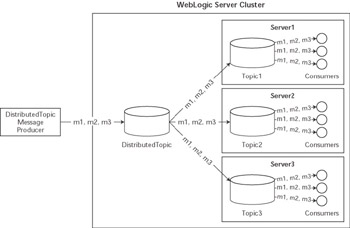

Figure 9.4 illustrates the operation of a distributed topic from the producer's perspective. As in the previous example, there are three WebLogic Server instances running in a cluster, each hosting a member destination (Topic1, Topic2, and Topic3) of the distributed topic, DistributedTopic. When a producer sends messages to a distributed topic, WebLogic JMS sends a copy of the message to every available member topic that has at least one consumer. Because all member topics in Figure 9.4 have at least one consumer, every message is sent to the three member topics. If the three consumers attached to Topic3 were to unsubscribe from DistributedTopic, any future messages would be sent only to Topic1 and Topic2 until a new consumer was assigned to Topic3.

Figure 9.4: Publishing messages to a distributed topic.

If you publish messages directly to a topic that is associated with a distributed topic, WebLogic JMS will replicate the messages to every member of the distributed topic, just as it would if you had published the messages to the distributed topic itself. This means that any topic associated with a distributed topic will distribute messages just as the distributed topic does.
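The fan-out rule for distributed topics is easy to model. The class below is a hypothetical sketch that ignores persistence and member availability; it assumes only the rule stated above, namely that a copy goes to each member topic with at least one consumer.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of message fan-out for a distributed topic. */
final class DistributedTopicModel {
    // member topic name -> number of consumers currently attached
    private final Map<String, Integer> consumers = new LinkedHashMap<String, Integer>();

    void setConsumers(String memberTopic, int count) {
        consumers.put(memberTopic, count);
    }

    /** Returns the member topics that receive a copy of a published message. */
    List<String> publish() {
        List<String> targets = new ArrayList<String>();
        for (Map.Entry<String, Integer> entry : consumers.entrySet()) {
            if (entry.getValue() > 0) {
                targets.add(entry.getKey());
            }
        }
        return targets;
    }
}
```

With consumers on all three members, a publish reaches Topic1, Topic2, and Topic3. After the consumer count for Topic3 drops to zero, only Topic1 and Topic2 receive copies.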

As we discuss later in the Persistent Stores section, persistent messages sent to a distributed topic are not actually persisted unless there are one or more durable subscribers. If one or more of the distributed topic's member topics is unavailable, then WebLogic JMS will save the persistent messages and forward them once the member topic becomes available. This means that any durable subscribers for member topics will eventually receive messages once their member topics become available and they are able to reconnect. On the other hand, messages sent to distributed topics with no durable subscribers are delivered only to the active set of subscribers at the time the message is published. We will talk more about durable subscribers and message persistence later.

Consuming Messages from a Distributed Topic

Distributed topic consumers are similar to distributed queue consumers; WebLogic JMS associates them with a member destination at the time they are created. WebLogic JMS uses the same load-balancing mechanisms and optimization heuristics for distributed topic consumers that it uses for distributed queue consumers. The same error-handling mechanisms that we discussed for distributed queue consumers also apply to distributed topic consumers. There are two important differences in how consumers work with distributed topics that we need to mention.

First, any durable subscriptions must be made with a distributed topic's member destinations. WebLogic JMS does not currently support creating a durable subscription with a distributed topic directly. This is really unfortunate because while it does not prevent you from building an application that leverages distributed topics with durable subscribers, it does make it more difficult (because you have to build knowledge of the member topics into the application) and potentially less efficient (you won't be able to leverage server affinity because you have no way of knowing with which server your JMS connection is associated).

Second, message-driven bean (MDB) deployments are treated as a single consumer per member topic. This means that regardless of how many MDB instances are in the pool on each server, messages will be sent to a single MDB instance for each member topic. If you stop to think about it, this is desirable because the whole idea of deploying an MDB is to create one virtual consumer per deployment. You probably do not want each message sent to the topic to be processed by multiple MDB instances in the same server. Where this becomes less clear is when you think about deploying an MDB to a cluster using a distributed destination. In some cases, you might actually want each message processed in each server; in others, it might be desirable to process each message once across the entire cluster. Currently, WebLogic JMS supports only sending each message to all servers hosting the MDB.

Migrating JMS Servers

WebLogic Server provides a migration framework that allows for an orderly migration of services from one WebLogic Server instance to another instance in the same cluster. You can use the WebLogic Console, the weblogic.Admin program, or a JMX program to perform the actual migration. Currently, you are responsible for deciding when to migrate a service. WebLogic JMS supports a migration framework that simplifies the migration of a JMS server from one instance of WebLogic Server to another instance of WebLogic Server in the same cluster, if properly configured.

Migrating a JMS server from one WebLogic Server instance to another is simple provided that the target server has access to all of the JMS server's resources used by the source server. If a persistent store is involved, the persistent store will need to be available on the target server. If the source server failed with transactions in flight, you will also need to migrate the JTA service, and its associated transaction log files, so that these in-flight transactions can be recovered. A complete discussion of the configuration and use of the WebLogic Server migration framework to perform JMS server migration can be found in Chapter 11.

| Best Practice | If you need to be able to move a JMS destination to another server to handle failover, always use the migration framework rather than trying to do it yourself. |

Configuring WebLogic JMS

Now that you understand the high-level overview of WebLogic JMS clustering, let's take a more detailed look at the many different configuration options that can affect your JMS applications. We will cover only a subset of the functionality, and we recommend that you consult the WebLogic JMS documentation for more information.

Connection Factories

A JMS connection factory can be thought of as a template defining common connection attributes. Once you create connection factories using the WebLogic Console, the connection factories are bound into JNDI when WebLogic Server starts up. Each connection factory can be deployed on multiple WebLogic Server instances or clusters. An application accesses JMS by looking up a connection factory and using the connection factory to create a connection. Once the connection is established, all predefined connection factory attributes are applied to the connection.

Let's look at a few of the more important connection factory attributes. A complete discussion of individual attributes is outside the scope of this chapter, and you should consult the WebLogic JMS documentation if you're interested. Using the WebLogic Console, navigate to the Services->JMS->Connection Factories folder in the left-hand navigation bar and create a new connection factory. The following list explains some of the settings on the connection factory's General Configuration tab:

Default Message Delivery Attributes. These settings include Priority, Time to Live, Time to Deliver, Delivery Mode, and Redelivery Delay. Values set here are used for messages for which these attributes are not explicitly set in the application or not overridden by other configuration parameters.

Messages Maximum. This parameter is not a true message quota, as the name might lead you to believe. For a normal JMS session, this value indicates the maximum number of outstanding messages (that is, messages that have not yet been processed by the consumer's business logic) that a consumer is willing to buffer locally. If a consumer falls behind to the point where it has too many outstanding messages, WebLogic JMS will buffer the messages at the server until the client starts to catch up. If the session is a WebLogic JMS multicast session, the server will not buffer the overflowed messages and will instead discard them based on the policy specified by the Overrun Policy attribute.

Acknowledge Policy. This setting applies only to message consumers that use the CLIENT_ACKNOWLEDGE acknowledgment mode. In previous versions of the JMS specification, the exact semantics of the acknowledge() method were not well defined. WebLogic JMS chose to implement the acknowledge() method so that it acknowledged all messages up to and including the current message being acknowledged. With the release of the JMS 1.0.2b specification, Sun clarified the behavior to state that the acknowledge() method should acknowledge all messages received by the associated JMS session, even those received after the message currently being acknowledged. A value of ACKNOWLEDGE_PREVIOUS in the Acknowledge Policy retains the old WebLogic JMS behavior while ACKNOWLEDGE_ALL yields the JMS 1.0.2b behavior.

Load Balancing Enabled. This setting indicates whether to load balance the messages sent to a distributed destination on a per-call basis. If checked (the default), associated producers' messages are load balanced across the member destinations on every call to QueueSender.send() or TopicPublisher.publish(). Otherwise, the load balancing occurs only on the first invocation, and all future invocations will go to the same member destination, unless a failure occurs. This attribute has no effect on the consumers and applies only to pinned producers created through this connection factory. A pinned producer sets its destination when it is created and cannot change it afterward.

Server Affinity Enabled. This checkbox controls how WebLogic JMS load balances consumers or producers running inside a WebLogic Server instance across a distributed destination. If enabled, WebLogic JMS prefers to associate consumers and producers with member destinations located in the same server process. If disabled, WebLogic JMS load balances them across all member destinations in the distributed destination just as it would if the consumers and producers were running in a remote client process.
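The difference between the two Acknowledge Policy values described above can be made concrete with a short model. This is a sketch under the assumption that a session's received messages form a simple ordered list; `AcknowledgePolicyModel` is a hypothetical class, and only the two policy names come from the text.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of the two Acknowledge Policy settings for CLIENT_ACKNOWLEDGE sessions. */
final class AcknowledgePolicyModel {
    enum Policy { ACKNOWLEDGE_PREVIOUS, ACKNOWLEDGE_ALL }

    /**
     * @param received          message IDs in the order the session received them
     * @param acknowledgedIndex index of the message on which acknowledge() is called
     * @return the message IDs covered by that acknowledge() call
     */
    static List<String> acknowledged(List<String> received, int acknowledgedIndex, Policy policy) {
        int end = (policy == Policy.ACKNOWLEDGE_ALL) ? received.size() : acknowledgedIndex + 1;
        return new ArrayList<String>(received.subList(0, end));
    }
}
```

If a session has received m1, m2, and m3 and the application calls acknowledge() on m2, the model covers m1 and m2 under ACKNOWLEDGE_PREVIOUS and all three messages under ACKNOWLEDGE_ALL.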

| Best Practice | Most applications should use the default values for Server Affinity Enabled and Load Balancing Enabled, which are true for both settings. |

We will discuss the connection factory's Transactions Configuration and Flow Control Configuration tabs later in this chapter. Like most configuration objects in WebLogic Server, connection factories must be targeted to one or more WebLogic Server instances to make them accessible to applications.

WebLogic JMS defines one connection factory, weblogic.jms.ConnectionFactory, which is enabled by default. WebLogic Server 8.1 also adds a new XA connection factory, weblogic.jms.XAConnectionFactory, which is also enabled by default. You can disable them by deselecting the Enable Default JMS Connection Factories checkbox in the server's JMS Services tab of the WebLogic Console. Note that this also disables the javax.jms.QueueConnectionFactory and javax.jms.TopicConnectionFactory connection factories that are deprecated but still available to support backward compatibility with WebLogic Server 5.1. Because the default connection factory settings cannot be changed, we recommend that you always define application-specific connection factories. When choosing JNDI names for user-defined connection factories (or, for that matter, anything else), avoid using JNDI names in the javax.* and weblogic.* namespaces.

| Best Practice | Always define application-specific JMS connection factories and disable the default JMS connection factories. Avoid using JNDI names in the javax.* or weblogic.* namespaces. |

Templates

Templates provide an efficient way to define multiple destinations with similar attribute settings. By predefining a template, you can very quickly create a set of destinations with similar characteristics. Changing a value in a template changes the behavior for all destinations using that template. Each destination can override any template-defined attribute by setting the value for the attribute explicitly on the destination itself. Using JMS templates is completely optional for applications that use predefined destinations. Any application wishing to use temporary destinations, though, is required to assign a Temporary Template to the WebLogic JMS server(s) involved.

| Best Practice | Use JMS templates to create and maintain multiple destinations with similar characteristics. |
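The relationship between a template and the destinations that use it amounts to a lookup with a fallback. The sketch below is a hypothetical model, not WebLogic's configuration API, and the attribute name used in the usage note is only an illustrative label.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy model of a destination that inherits attribute values from a template. */
final class DestinationModel {
    private final Map<String, String> template;   // values shared through the template
    private final Map<String, String> overrides = new HashMap<String, String>();

    DestinationModel(Map<String, String> template) {
        this.template = template;
    }

    /** Sets a value explicitly on this destination, overriding the template. */
    void override(String attribute, String value) {
        overrides.put(attribute, value);
    }

    /** Returns the explicit value if present, otherwise the template's value. */
    String effectiveValue(String attribute) {
        String explicit = overrides.get(attribute);
        return explicit != null ? explicit : template.get(attribute);
    }
}
```

If two destinations share a template and one of them overrides an attribute such as `RedeliveryLimit`, a later change to the template affects only the destination that did not override it.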

Destination Keys

By default, WebLogic JMS destinations use first-in-first-out (FIFO) ordering. Simply put, the next message to be processed by a consumer will be the message that has been waiting in the destination the longest. WebLogic JMS also gives you the ability to use message headers or property values to sort messages in either ascending or descending order. To do this, you need to define one or more destination keys and associate these keys with a JMS destination, either directly or through the use of a template. Any destination can have zero or more destination keys that control the ordering of messages in the destination. By creating a descending order destination key on the JMSMessageID message header, we can configure a destination to use last-in-first-out (LIFO) ordering.

It is important to note that using sorting orders other than FIFO or LIFO increases the overhead of sending a message. WebLogic JMS will have to scan some portion of the messages in a destination to determine where to place the incoming message. While this is not a big deal for destinations with a small backlog of messages, it can be a huge performance penalty for destinations containing a large backlog of messages. Therefore, we recommend avoiding sorted destinations unless the price of not sorting the destination (for example, in increased application complexity) is higher than the cost of the potential performance degradation.

In most cases, the default FIFO sort order works best and will always give the best performance. You can change the sorting order to LIFO using the JMSMessageID without any significant performance penalty. Sorting destinations by any other property can cause significant performance degradation on the producer and/or the consumer. The amount of performance degradation will depend on the number of undelivered messages stored in the destination at any point in time.

| Best Practice | FIFO or LIFO sort orders provide the best performance. Any other sorting order can cause significant performance degradation that will be proportional to the number of undelivered messages stored in the destination. |
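The cost difference between FIFO and a sorted destination can be illustrated with a small, self-contained model. This is only a sketch under stated assumptions: the class name `SortedBacklogSketch`, the integer sort keys, and the linear tail-to-head scan are hypothetical and are not how WebLogic JMS is actually implemented. The point is simply that a sorted insert may have to examine existing entries, while a FIFO append examines none.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of a destination backlog; not WebLogic JMS code. */
final class SortedBacklogSketch {
    private final List<Integer> keys = new ArrayList<>();

    /** FIFO: append at the tail without examining any existing entry. */
    int appendFifo(int key) {
        keys.add(key);
        return 0;
    }

    /**
     * Ascending sort key: scan from the tail toward the head to find the
     * insert position. Returns the number of existing entries examined.
     */
    int insertSorted(int key) {
        int pos = keys.size();
        int examined = 0;
        while (pos > 0) {
            examined++;
            if (keys.get(pos - 1) <= key) {
                break; // found an entry that sorts before (or equal to) the new one
            }
            pos--;
        }
        keys.add(pos, key);
        return examined;
    }

    /** Returns a copy of the current backlog order. */
    List<Integer> snapshot() {
        return new ArrayList<>(keys);
    }
}
```

In this model, inserting a key that sorts ahead of everything already queued examines the entire backlog, which is why the penalty grows with the number of undelivered messages.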

Time-to-Deliver Extension

WebLogic JMS provides a time-to-deliver extension, which allows sending messages that will not be delivered until some time in the future. This extension can be a very useful feature for implementing certain types of application functionality. To use it, simply set the producer's time to deliver before sending the message, as shown here. This will cause the producer to set the WebLogic JMS-specific JMSDeliveryTime header when the message is sent. Note that you must cast the standard JMS producer to a WebLogic JMS-specific type in order to use this extension:

    // Deliver the message one minute after it is sent. The producer's
    // time-to-deliver is a relative delay in milliseconds, not an absolute time.
    long timeToDeliver = 60 * 1000;
    weblogic.jms.extensions.WLMessageProducer producer =
        (weblogic.jms.extensions.WLMessageProducer) queueSender;
    producer.setTimeToDeliver(timeToDeliver);
    queueSender.send(message);

One important point to note: the JMS provider sets most JMS message header fields. This means that regardless of what values are set using the JMS Message interface, the JMS producer will overwrite them. We mention this here because the weblogic.jms.extensions.WLMessage interface provides a setJMSDeliveryTime() method. Trying to use this mechanism to set the JMSDeliveryTime header will have no effect because the message producer will overwrite this header field value when it sends the message.

| Warning | The setJMSDeliveryTime() method in the weblogic.jms.extensions.WLMessage interface is like most of the other setter methods on the javax.jms.Message interface. Setting a value on WLMessage has no effect because the producer overwrites it when a message is sent. |

Persistent Stores

WebLogic JMS uses persistent stores for two different purposes. When WebLogic JMS determines that a message should be persistent, it uses the Message Store associated with the destination's JMS server to store the entire message. By default, WebLogic JMS keeps all messages in memory for faster access, even persistent messages that it has already written to secondary storage. If the backlog of messages is small, this can significantly improve performance without consuming significant amounts of memory. Of course, as the backlog of messages gets larger, the memory demands can cause the JVM to run out of memory. To reduce memory use, WebLogic JMS uses the Paging Store to page message bodies out of memory. Message headers and properties remain in memory for faster access. As we will see in the Delivery Overrides, Destination Quotas, and Flow Control section, we can use quotas and thresholds to help control the amount of memory consumed.

Configuring Message Stores

WebLogic JMS supports two types of persistent stores for saving JMS messages: JDBC and file-based persistent stores. The choice of a particular store type has no effect on the application code. As their name suggests, JDBC persistent stores save messages in database tables, while the file stores save messages in files. To use a persistent store, create a message store and assign it to a JMS server. Each JMS server must have its own backing JDBC or file store. In order to migrate a JMS server with an associated message store, the message store must be accessible via the same path on the target WebLogic Server. The path is either the JDBC connection pool name or the directory where the file resides.

JDBC-based stores may share the same physical database schema, but each must have its own uniquely named set of tables. The JDBC store uses two tables whose base names are JMSStore and JMSState. By using the Prefix Name parameter, you can prepend values to these base names to create unique names per store. By knowing the table-naming syntax for your database, you can force the tables to be in different schemas; for example, specifying a Prefix Name of bigrez.JMS_Store1_ will cause the JDBC store to create tables in the bigrez schema with the names JMS_Store1_JMSStore and JMS_Store1_JMSState. Failure to specify unique table names for multiple stores sharing the same database can result in message corruption or loss.

| Warning | Multiple JMS servers cannot share the same persistent store. For JDBC-based stores, you must specify a unique Prefix Name value for every store that uses the same underlying database connection pool (and, thus, the same database schema). Failure to do so can result in message corruption or message loss. |
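The table-naming rule can be made concrete with a short sketch. It only models the string concatenation described in the text (Prefix Name followed by the base names JMSStore and JMSState) and a simple uniqueness check; the class and method names are hypothetical, and the case-insensitive comparison is an assumption about how a typical database treats identifiers.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Illustrative only: models how a Prefix Name yields per-store table names. */
final class JdbcStoreTableNames {
    // Base table names used by the JDBC store, as described in the text.
    private static final String[] BASE_NAMES = {"JMSStore", "JMSState"};

    /** Returns the prefixed table names for one store. */
    static String[] tableNames(String prefixName) {
        String prefix = (prefixName == null) ? "" : prefixName;
        String[] names = new String[BASE_NAMES.length];
        for (int i = 0; i < BASE_NAMES.length; i++) {
            names[i] = prefix + BASE_NAMES[i];
        }
        return names;
    }

    /** Returns true if no two prefixes would produce the same table name. */
    static boolean prefixesAreUnique(List<String> prefixNames) {
        Set<String> seen = new HashSet<>();
        for (String prefix : prefixNames) {
            for (String table : tableNames(prefix)) {
                // Assumption: compare case-insensitively, since many
                // databases fold unquoted identifiers to a single case.
                if (!seen.add(table.toUpperCase(Locale.ROOT))) {
                    return false;
                }
            }
        }
        return true;
    }
}
```

A check like this could be run against the planned Prefix Name values for all stores that share a connection pool before any of them is created.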

In the case of file stores, multiple JMS servers can share the same directory. WebLogic JMS will automatically create unique names of the form <JMSFileStoreName>######.DAT, where ###### is a unique number. Let's compare and contrast the two types of stores.

File stores generally perform better than JDBC stores. By default, writing to a file store is a synchronous operation. WebLogic JMS provides three Synchronous Write Policy settings for controlling how messages are written to the store:

- Cache-Flush is the default policy that forces all messages to be flushed from the operating system cache to disk before the completion of a transaction, or a JMS operation in the nontransactional case. The Cache-Flush policy is reliable and scales well as the number of simultaneous users increases.

- Direct-Write bypasses the operating system cache and forces all messages to be written to disk. WebLogic JMS supports only the Direct-Write policy on Solaris, HP-UX, and Windows operating systems. If this policy is set on an unsupported platform, the file store automatically uses the Cache-Flush policy instead. This policy's reliability and performance depend on operating system and hardware support of on-disk caches.

- Disabled allows for maximum performance, but because messages may remain in operating system caches, it exposes the application to message loss and duplicate messages.

When using the Direct-Write policy, the performance and scalability are significantly reduced without the use of an on-disk cache. Unfortunately, the use of an on-disk cache can expose the application to message loss or duplication in the event of a power failure unless the on-disk cache is reliable. Many high-end storage devices that offer on-disk caches also provide a battery backup to prevent the loss of data during a power failure.

To add to the complexity of this setting, Windows provides an OS option to enable write caching on the disk; this is enabled by default. The problem is that some versions of Windows do not send the correct synchronization commands to tell the disk to synchronize the cache (for more information, please refer to http://support.microsoft.com/default.aspx?scid=kb%3Ben-us%3B281672). You can disable write caching with most disk drives using the Windows Device Manager entry for the disk in question; the Write Cache Enabled checkbox is located on the Disk Properties tab.

Solaris does not support the use of on-disk caches when using Direct-Write. This means that all disk writes go directly to disk and will not take advantage of any reliable on-disk caches. If your storage device uses a reliable on-disk cache, you should test with both Direct-Write and Cache-Flush to see which one is actually faster for your particular device.

| Best Practice | Cache-Flush is generally the safest policy. If your hardware and OS support using reliable on-disk caches with Direct-Write, you may be able to improve performance significantly by switching to Direct-Write. Remember, using on-disk caches without battery backup can cause data loss or corruption should a power failure occur. |

One final word of caution: Some third-party JMS providers set their default write policy to the equivalent of WebLogic JMS's Disabled policy, which allows the operating system to buffer all file writes without flushing them to disk. While this is great for performance, it can cause data loss and corruption in the event of a power failure. Before you try to compare performance numbers for persistent messages with WebLogic JMS, make sure you understand the write policy configuration for each JMS provider.

| Warning | Some third-party JMS providers default to the equivalent of WebLogic JMS's Disabled policy, so make sure you check before trying to compare performance numbers with those of WebLogic JMS. |

Because file stores are often collocated with the JMS server, writing to a file store may generate less network traffic. File store availability, however, is subject to hardware failures so it is often desirable to place file stores on shared disks or storage area networks (SANs). Do not use NFS to share access to a file store; any attempt to do so may result in message loss, corruption, and/or duplicate messages. Because of these issues, JDBC stores may provide an easier solution for addressing the failover issues since the database typically resides on a separate machine from the application servers.

WebLogic JMS never attempts to reduce the size of a file store. The file store grows as needed to hold all unconsumed messages up to the quota limits configured for the JMS server or its individual destinations. Although the entire file store can be reused to store new messages, the amount of disk space that the store consumes will never shrink even when the file store has no messages in it.

| Warning | WebLogic JMS will never shrink the size of a file store, though it will reclaim the space inside the file for future use. |

Finally, a file store can be thought of as a database. For JMS applications that process large numbers of persistent messages, you should configure disk access just as you would when setting up high-performance database servers. Isolate file stores on separate disks. When using multiple file stores, you may need to put each store on a separate disk, or even a separate disk controller. Using advanced, on-disk caching technology can provide large performance improvements without sacrificing the integrity of the message store. If the messages are important enough to store to disk, then they are probably too important to lose due to hardware failure. Consider using a SAN, a multiported disk, and/or disk mirroring technology to make the file store highly available.

Setting Up Paging Stores

A WebLogic JMS server can also move in-memory messages out of the server's memory to secondary storage to avoid holding too many messages in memory; this is known as paging. When a message that has been paged out of memory is needed, the server moves it back into memory. This paging behavior is completely transparent to the JMS application. Of course, reading and writing messages to disk will have a significant performance impact, but this is much better than filling up all of the server's available memory.

By default, WebLogic JMS keeps all messages in memory for faster access, even persistent messages. This can cause problems as the number and size of the persistent messages in the destination grow. You must enable paging to tell WebLogic JMS that it is okay to page messages out of memory.

| Tip | Paging is important for both persistent and non-persistent messages. |

To give WebLogic JMS the ability to page messages, configure each JMS server with a Paging Store. A paging store is nothing more than a message store being used for paging messages. Like message stores, each JMS server must have its own paging store. WebLogic Server 8.1 will automatically create a paging store for you if you enable paging but forget to configure a paging store. Of course, you probably want to configure the paging store explicitly so that you can control the location of the store, which might be on a RAID disk array to increase performance for high-volume systems.

WebLogic JMS does not allow the same message store to be used for both message persistence and message paging. For persistent messages, WebLogic Server actually does not use the paging store to page the messages because they already exist in the message store; however, you still need to enable paging for persistent messages. One important thing to note is that even though the paging process writes nonpersistent messages to secondary storage as needed, these messages will not survive server restarts or system crashes. Because of this, there is no need to worry about high availability of a paging store, and we always recommend using a file-based paging store to maximize performance.

| Best Practice | Always enable paging for your JMS server. Failure to do so can result in a WebLogic Server instance running out of memory if the message consumers are not able to keep up with the producers. For production systems, we recommend explicitly configuring a paging store rather than relying on WebLogic Server 8.1 to do it for you so that you can control the location of the file store. |

Understanding When Messages Are Persisted

On the surface, message persistence seems straightforward, and for point-to-point messaging it is. Point-to-point message producers can specify a message's delivery mode, which determines whether the message is persistent. For WebLogic JMS, the producer's desired delivery mode can be overridden by several JMS configuration options. In the end, the message will either be persistent or non-persistent based on the application's request and the WebLogic JMS configuration. The section on overrides, quotas, and flow control provides more information on how to control message delivery characteristics such as persistence.

For publish-and-subscribe messaging, the message's delivery mode and WebLogic JMS configuration do affect the persistence or nonpersistence decision. WebLogic JMS also considers the number and type of subscribers to the topic. If a topic has one or more durable subscribers, messages will be retained until all durable subscribers have received a copy of the message. If the delivery mode for the message is non-persistent, WebLogic JMS retains the messages by buffering them in memory (or paging them out to the paging store, if configured); persistent messages are written to the message store. If there are no durable subscribers currently subscribed, WebLogic JMS will not persist the message: there is no need to retain it, because the JMS specification requires only that the currently active subscribers receive the message.

The durable subscription itself is persisted to ensure that it survives server restarts or system crashes, as required by the JMS specification. Because of this, WebLogic JMS requires that a persistent message store be configured on any WebLogic JMS server that hosts topics that will have durable subscribers, even if all of the messages are nonpersistent. WebLogic JMS will throw a javax.jms.JMSException if an application attempts to create durable subscribers on a topic that is hosted by a WebLogic JMS server with no message store.

Delivery Overrides, Destination Quotas, and Flow Control

As we discussed previously, you can override default delivery attributes for messages either programmatically in the application or administratively using the WebLogic Console. In this section we will take a look at how to use delivery overrides. We will then examine different WebLogic JMS throttling features such as quotas, paging, and flow control.

Overriding Message Delivery Characteristics

Message delivery attributes include Priority, Time to Live, Time To Deliver, Delivery Mode, and Redelivery Delay. As we saw earlier, default values for these can be set in the connection factory; however, the application can override these values by setting them explicitly in the code. In addition, the WebLogic JMS destination configuration can override both the connection factory and the application-specified values. You can specify destination configuration overrides either directly in the destination's settings or indirectly in the destination's template settings. Any settings in the destination override the setting in the destination's template.

Let's look at an example to make sure that you understand how this works. In our example, we list the different ways to configure the Delivery Mode for a message being sent to a particular destination in ascending order of precedence. Each subsequent method will override all of the previously mentioned methods so that the last one that is applicable will define the actual Delivery Mode for the message:

- The connection factory specifies the Default Delivery Mode, which defaults to persistent.

- The application may override the Delivery Mode by explicitly setting it in the application code prior to sending the message. For example,

      queueSender.setDeliveryMode(deliveryMode);
      queueSender.send(message);

  where deliveryMode can be set to one of the following constants:

      javax.jms.DeliveryMode.PERSISTENT
      javax.jms.DeliveryMode.NON_PERSISTENT

- The destination's template can override the Delivery Mode by setting it to Persistent, Non-Persistent, or No-Delivery, where No-Delivery is the default and simply means that the template does not override the values specified by the application and/or the connection factory.

- The destination can override the Delivery Mode by setting it to Persistent, Non-Persistent, or No-Delivery, where No-Delivery is the default and simply means that the destination does not override the values specified by the template, the application, and/or the connection factory.

- The JMS server can implicitly override a Delivery Mode value of Persistent by not configuring a Store, which will force all messages delivered to destinations associated with the JMS server to be non-persistent. If the JMS server has a store configured, this simply implies that the JMS server is not overriding the Delivery Mode.

| Warning | If an application invokes the setJMSDeliveryMode(deliveryMode) or setJMSPriority(priority) methods from the javax.jms.Message interface, WebLogic JMS will override these values, as the JMS specification designates that these methods are strictly for use by JMS providers. You must set the delivery mode or priority using the appropriate producer method calls for them to take effect. |
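The precedence rules listed above can be sketched as a small resolution function. This is a minimal model of the rules as stated in the text, not WebLogic JMS code: the class `DeliveryModeResolver`, its enums, and the convention of passing `null` when the application sets nothing are all hypothetical names chosen for illustration.

```java
/** Illustrative model of the Delivery Mode precedence described in the text. */
final class DeliveryModeResolver {
    enum Mode { PERSISTENT, NON_PERSISTENT }

    /** Administrative override values; NO_DELIVERY means "do not override". */
    enum OverrideSetting { PERSISTENT, NON_PERSISTENT, NO_DELIVERY }

    static Mode resolve(Mode factoryDefault,
                        Mode applicationMode, // null if the application set nothing
                        OverrideSetting templateOverride,
                        OverrideSetting destinationOverride,
                        boolean serverHasStore) {
        // 1. Start from the connection factory's Default Delivery Mode.
        Mode mode = factoryDefault;
        // 2. An explicit producer setting in application code wins over the factory.
        if (applicationMode != null) {
            mode = applicationMode;
        }
        // 3. The template override, then 4. the destination override.
        mode = apply(templateOverride, mode);
        mode = apply(destinationOverride, mode);
        // 5. A JMS server without a store forces non-persistent delivery.
        if (!serverHasStore) {
            mode = Mode.NON_PERSISTENT;
        }
        return mode;
    }

    private static Mode apply(OverrideSetting setting, Mode current) {
        switch (setting) {
            case PERSISTENT:
                return Mode.PERSISTENT;
            case NON_PERSISTENT:
                return Mode.NON_PERSISTENT;
            default:
                return current; // NO_DELIVERY leaves the earlier value in place
        }
    }
}
```

For example, a producer that requests non-persistent delivery still ends up with persistent messages if the destination override is set to Persistent and the JMS server has a store.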

Understanding Quotas and Thresholds

WebLogic JMS provides mechanisms for establishing quotas either on individual JMS destinations or on the entire JMS server. These quotas control the maximum amount of data that can be stored, either in a message store or in memory. Without quotas in place, producers can continue to produce messages until their messages consume all available space in the persistent store or all available memory in the JMS server. When the quota is reached, WebLogic Server 8.1 JMS producers will wait until space becomes available, up to a user-defined timeout period. If the quota condition does not subside before the timeout period expires, producers get a javax.jms.ResourceAllocationException. It is up to the application to decide how to handle this condition.

The connection factory's SendTimeout attribute controls the maximum number of milliseconds a producer will block when waiting for space. You can disable blocking sends by setting this value to 0. An application may choose to override this setting by changing the value on the producer:

    weblogic.jms.extensions.WLMessageProducer producer =
        (weblogic.jms.extensions.WLMessageProducer) queueSender;
    producer.setSendTimeout(sendTimeoutMillis);
    queueSender.send(message);

You should be very careful about using blocking sends with message producers running inside a WebLogic Server instance. These will cause server execute threads to block and could bring the entire server to a grinding halt and possibly deadlock the server for the amount of blocking time. In most cases, application message producers running inside the server should either disable blocking sends or set the timeout to a very small value to prevent thread starvation. Also, be careful about retrying sends indefinitely inside the server for similar reasons.

| Warning | Blocking sends are a convenient way to shield applications from temporary quota limits. Care needs to be given, though, when the producers are running inside a WebLogic Server so as not to block execute threads that are needed to consume messages and clear the quota limit condition. To disable blocking sends, set the SendTimeout to 0. |

The BlockingSendPolicy attribute of the JMS server defines the expected behavior when multiple senders are competing for space on the same JMS server. The valid values are as follows:

FIFO. This value indicates that all send requests are to be processed in the order in which they were received. When the quota condition subsides, requests for space are considered in the order in which they were made.

Preemptive. This value indicates that any send operation can preempt other blocking send operations if space is available. That is, if there is sufficient space for the current request, that space is used even if there are other requests waiting for space. This can result in the starvation of larger requests. If sufficient space is not available for the request, the request is queued up behind other existing requests.
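The difference between the two policies comes down to whether a new request may jump ahead of requests that are already waiting. The following sketch models only that admission decision as described in the text; the class `BlockingSendPolicySketch` and its parameters are hypothetical and do not correspond to any WebLogic JMS API.

```java
/** Illustrative model of the admission decision for a new send request. */
final class BlockingSendPolicySketch {
    enum Policy { FIFO, PREEMPTIVE }

    /**
     * Returns true if a new request may use the free space right away,
     * false if it has to queue up behind the requests already waiting.
     */
    static boolean admitImmediately(Policy policy,
                                    long freeBytes,
                                    long requestBytes,
                                    int waitingRequests) {
        boolean fits = requestBytes <= freeBytes;
        if (policy == Policy.FIFO) {
            // FIFO: earlier requests are considered first, so a newcomer
            // is admitted only when nobody is waiting ahead of it.
            return fits && waitingRequests == 0;
        }
        // Preemptive: any request that fits takes the space, even if
        // larger requests are still waiting (which can starve them).
        return fits;
    }
}
```

With 1,000 bytes free and one large request already waiting, a new 200-byte request is queued under FIFO but admitted under Preemptive.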

WebLogic JMS also supports message paging and flow control to help limit the amount of memory consumed by unconsumed messages and prevent applications from ever reaching the quota, respectively. Message paging allows WebLogic JMS to page messages out of memory to the associated paging store once the high threshold is reached. This will help prevent unconsumed messages from using too much of the server's available memory but will slow down the overall throughput of messages because they now must be written to disk. Once the consumers catch up, WebLogic JMS will suspend message paging until the threshold is reached again. WebLogic JMS uses a low threshold value to determine when consumers have caught up.

Flow control allows WebLogic JMS to slow down the rate at which message producers are sending messages in an attempt to allow consumers to catch up. As with message paging, WebLogic JMS will begin flow control once the high threshold is reached and will suspend flow control once the low threshold is reached.

Both flow control and message paging require defining the quota and the thresholds. Message paging also requires creating a message store dedicated to message paging and associating it with the JMS server. Message paging is disabled by default. By default, flow control is enabled on all connection factories but will never be used unless the appropriate quotas and thresholds are configured. We will talk more about flow control configuration in the Understanding Flow Control section later in this chapter.

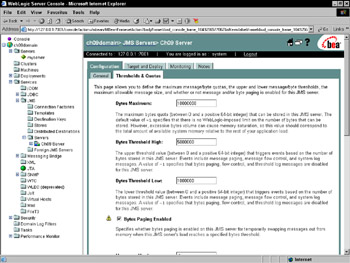

To configure quotas and thresholds, use the Thresholds & Quotas Configuration tab of the JMS server, JMS template, or JMS destination. Quotas and thresholds can be set in terms of the number of bytes or messages, as shown in Figure 9.5. Let's discuss the meaning of these parameters in the context of a JMS server.

Figure 9.5: Configuring a JMS server's thresholds and quotas.

Bytes Maximum and Messages Maximum specify the quota of the associated JMS server. These parameters indicate the maximum number of bytes or messages that may be stored in the JMS server. Bytes Maximum and Messages Maximum must be greater than or equal to the corresponding threshold parameters. This means you cannot use message paging or flow control without quotas.

| Best Practice | Always use quotas to prevent the server from consuming all available space storing unconsumed messages. |

Bytes Threshold High and Messages Threshold High specify the upper threshold that WebLogic JMS uses to determine when to start paging and flow control. When the number of bytes or messages exceeds the upper threshold, WebLogic JMS will log a warning message and take action to try to prevent reaching the quota. If the JMS server has paging enabled, WebLogic JMS will begin paging to the configured paging store. If flow control is enabled, WebLogic JMS will start limiting the producers' message flows.

Bytes Threshold Low and Messages Threshold Low specify the lower threshold that WebLogic JMS uses to determine when to stop paging and flow control. When the number of bytes or messages drops below the lower threshold, WebLogic JMS triggers events indicating that the threshold condition is cleared. When this happens, WebLogic JMS logs a message, stops any paging that was occurring, disarms any flow control that was occurring, and instructs all of the flow-controlled producers to begin increasing their message flow rates.
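The high and low thresholds together form a simple hysteresis: the condition arms when the backlog exceeds the high threshold and stays armed until the backlog drops below the low threshold. A minimal sketch of that state machine follows; `ThresholdMonitor` is a hypothetical class written for illustration, and the backlog may be counted in either bytes or messages.

```java
/** Illustrative hysteresis model for a high/low threshold pair. */
final class ThresholdMonitor {
    private final long thresholdHigh;
    private final long thresholdLow;
    private boolean armed; // true while paging / flow control would be active

    ThresholdMonitor(long thresholdHigh, long thresholdLow) {
        if (thresholdLow > thresholdHigh) {
            throw new IllegalArgumentException("low threshold must not exceed high threshold");
        }
        this.thresholdHigh = thresholdHigh;
        this.thresholdLow = thresholdLow;
    }

    /**
     * Records the current backlog (bytes or messages) and returns whether
     * the threshold condition is armed after this observation.
     */
    boolean update(long backlog) {
        if (!armed && backlog > thresholdHigh) {
            armed = true;  // exceeded the upper threshold: start throttling
        } else if (armed && backlog < thresholdLow) {
            armed = false; // dropped below the lower threshold: condition cleared
        }
        return armed;
    }
}
```

Note that a backlog sitting between the two thresholds leaves the state unchanged, which prevents paging and flow control from toggling on and off around a single value.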

You can also configure quotas and thresholds for individual JMS destinations or templates. The attributes are similar to those of the JMS server and have similar meanings with the exception of the Bytes Paging Enabled and Messages Paging Enabled parameters. When configuring paging on a destination or template, the values specified complement those set at the JMS server level. By setting a value to default, you are telling WebLogic JMS to use the values from the JMS server.

Because the quotas and thresholds can be set at the JMS server level and at the individual destination level, the values specified at the server level can limit the effective settings of the individual destinations. Let's look at an example to make sure you understand the implications of this. Imagine that you have a JMS server that hosts two destinations, Queue1 and Queue2. If you set the Bytes Maximum for the server to be 50,000 and the Bytes Maximum for each destination to 30,000, then you may run into a situation where the overall quota of the server limits the quota of the individual destination. If Queue1 currently contains 25,000 bytes of messages, then the effective quota for Queue2 at that point is 25,000 bytes instead of the 30,000 bytes specified by the Bytes Maximum parameter for Queue2 because of the JMS server's 50,000 byte quota.

| Warning | JMS server quota and threshold parameters apply across all destinations in a JMS server and may limit those specified for each destination. |
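The Queue1/Queue2 arithmetic can be written as a one-line calculation: the effective quota of a destination is the smaller of its own Bytes Maximum and whatever the server quota has left after the other destinations' backlog. The helper below is a hypothetical illustration of that arithmetic, not a WebLogic JMS API.

```java
/** Illustrative arithmetic for a destination's effective byte quota. */
final class EffectiveQuota {
    /**
     * @param serverBytesMaximum           Bytes Maximum of the JMS server
     * @param destinationBytesMaximum      Bytes Maximum of the destination
     * @param bytesHeldByOtherDestinations backlog of all other destinations
     *                                     on the same JMS server
     */
    static long effectiveBytesMaximum(long serverBytesMaximum,
                                      long destinationBytesMaximum,
                                      long bytesHeldByOtherDestinations) {
        // Space the server quota still allows for this destination.
        long remainingOnServer =
            Math.max(0L, serverBytesMaximum - bytesHeldByOtherDestinations);
        return Math.min(destinationBytesMaximum, remainingOnServer);
    }
}
```

With the numbers from the example, `effectiveBytesMaximum(50000, 30000, 25000)` evaluates to 25,000, while with only 10,000 bytes in Queue1 the destination's own 30,000 byte limit applies.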

Enabling Message Paging

Message paging is a mechanism that allows WebLogic JMS to remove message data from memory and write it to a persistent message store configured for paging. By using paging, WebLogic JMS can prevent unconsumed messages from using up all of the WebLogic Server's available memory. Paging allows a WebLogic JMS server to hold more messages without increasing the heap size of the JVM. When WebLogic JMS is paging, it writes message bodies to the JMS server's paging store. Message headers and properties are never paged out of memory.

You should always enable paging as a preventive mechanism. Most applications that use non-persistent messages, however, do so for speed. Because paging will significantly reduce the performance of your messaging application, you should tune your application configuration to try to prevent paging from ever occurring. Remember, a healthy messaging system requires consumers to keep up with producers.

Persistent messages do not actually use the paging store because they already exist in the message store, and non-persistent messages that have been paged out of memory do not survive server restarts or crashes. Therefore, you do not need to worry about high availability for messages stored in a paging store. Because file-based stores are generally much faster than the equivalent JDBC-based stores, we strongly recommend using file-based stores for paging.

| Best Practice | Always enable paging and use a file-based paging store as a precaution, but tune your application to avoid paging for best performance. |

Understanding Flow Control

WebLogic JMS starts using flow control only if you enable it on the producer's connection factory and the JMS server or destination exceeds the configured upper threshold. Once WebLogic JMS starts controlling the flow of messages to a particular server or destination, it will continue to do so until the number of unconsumed bytes or messages drops below the configured lower threshold. At that point, WebLogic JMS will tell the producers to start increasing their flow of messages gradually until message rates are no longer being throttled.

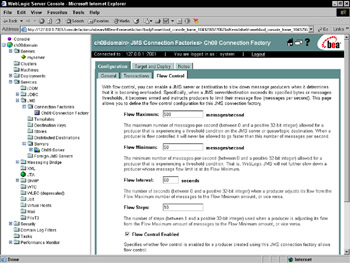

You have already seen how to configure the threshold values for a JMS server and destination. The attributes that control the tuning of the flow control algorithm are set via a producer's connection factory. When you create a connection factory, flow control is enabled by default and the flow control tuning parameters are given some default values, as shown in Figure 9.6. Of course, you can change the default values as necessary. Let's spend some time looking at the flow control algorithm and how these tuning parameters affect its behavior.

Figure 9.6: Configuring the flow control parameters.

WebLogic JMS engages the flow control algorithm when an upper threshold is reached. At that point, WebLogic JMS will limit the flow rate of all producers by setting their maximum allowable flow rate to that specified by their connection factory's Flow Maximum parameter. Unless the lower threshold is reached first, WebLogic JMS will continue to slow down producers over time until all producers are at their minimum flow rate, as specified by the connection factory's Flow Minimum parameter. The rate at which WebLogic JMS slows down producers is controlled by the Flow Interval and Flow Steps parameters. Flow Interval defines the time interval over which a producer is slowed from its maximum rate to its minimum rate. Flow Steps is the number of incremental steps WebLogic JMS uses in the slow-down process.

Once the maximum allowable flow rate reaches the Flow Minimum value, WebLogic JMS will maintain the producers' flow rates until the JMS server or destination backlog reaches the lower threshold. At that point, WebLogic JMS will linearly increase the producers' maximum allowable flow rates Flow Steps times over the Flow Interval period, with the final step disengaging flow control completely.
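The linear ramp-up phase can be sketched numerically. The code below is a simplified model under stated assumptions: it treats Flow Minimum and Flow Maximum as messages per second and Flow Interval as seconds, it assumes the ramp starts at Flow Minimum and reaches Flow Maximum on the final step, and the class `FlowControlRampUp` and the sample values used in the note after it are purely illustrative. It does not model the slow-down phase.

```java
/** Illustrative model of the linear ramp-up after the low threshold is reached. */
final class FlowControlRampUp {
    /**
     * Returns the maximum allowable flow rate after each of the flowSteps
     * increments, rising linearly from flowMinimum to flowMaximum.
     */
    static double[] rampUpRates(double flowMinimum, double flowMaximum, int flowSteps) {
        if (flowSteps < 1 || flowMinimum > flowMaximum) {
            throw new IllegalArgumentException("need flowSteps >= 1 and flowMinimum <= flowMaximum");
        }
        double stepSize = (flowMaximum - flowMinimum) / flowSteps;
        double[] rates = new double[flowSteps];
        for (int step = 1; step <= flowSteps; step++) {
            rates[step - 1] = flowMinimum + step * stepSize;
        }
        // Pin the last value to avoid floating-point drift; in this model the
        // final step is where flow control would be disengaged.
        rates[flowSteps - 1] = flowMaximum;
        return rates;
    }

    /** Time between two consecutive steps, in the same unit as flowInterval. */
    static double timePerStep(double flowInterval, int flowSteps) {
        return flowInterval / flowSteps;
    }
}
```

For sample values of Flow Minimum 50, Flow Maximum 500, Flow Steps 10, and Flow Interval 60, each step raises the allowed rate by 45 and the steps are 6 time units apart.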

As with paging, flow control should be used as a preventive measure. Flow control is no substitute for proper application design and tuning so that consumers can keep up with producers. Because flow control effectively slows down the sending thread, care should be given when using flow control on producers running inside the WebLogic Server. We discuss this more in the next section where we will go into more detail about how to design JMS applications properly with WebLogic Server.

| Best Practice | Always use flow control as a preventive measure for producers that run outside the WebLogic Server process. Be careful using flow control inside the server because it will cause server threads to slow down. |
