The Challenges

As a project manager, you have one of the most challenging jobs on the planet. You are responsible for planning the project, keeping it on schedule and within budget, keeping all the stakeholders informed, and ensuring that deliverables are in fact delivered. And if that isn’t enough, you must also rise to the unique challenges offered by each software development project.

Almost all software projects start with an incomplete understanding of what exactly will be delivered. Requirements are often vague and frequently change even as the project is in progress. Also, reporting on the status of the project and the health of the software being developed can involve gathering information from a variety of disparate sources and then manually manipulating it into meaningful metrics. This process can be tedious, labor-intensive, and error-prone.

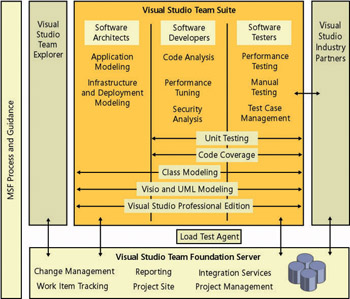

This book introduces a new tool set for managing the software development life cycle. Called Visual Studio Team System, this tool set includes tools for software architects, developers, testers, release managers, and of course, project managers like you. Visual Studio Team System (illustrated in Figure 1-1) consists of four versions of Visual Studio, each for a different team role, and a server application called the Visual Studio 2005 Team Foundation Server. Team Foundation Server acts as the focal point for software project teams, offering services ranging from work item tracking and team Web portals to source code version control, build management, and distributed test execution. Team Foundation Server integrates the information generated by these various services into a single data warehouse and provides a sophisticated reporting system based on the latest data analysis technology.

Figure 1-1: Visual Studio Team System

Visual Studio Team System offers compelling benefits to you as the project manager. For instance, it makes gathering information simpler and more accurate. Much of the information gathering is automatic, a by-product of using the tools. Work item tracking is fully integrated into Visual Studio, making it convenient for team members to update work items and link them to the work they are doing, such as checking in code, creating builds, and conducting tests. For the project manager who has to manually gather this sort of information from a variety of sources, this feature alone makes Visual Studio Team System worthwhile.

You can access Visual Studio Team System in a variety of ways using familiar tools. For instance, you can manage work items by using Microsoft Office Project. Or if you prefer, you can manage the very same work items by using Microsoft Office Excel. You can review project reports and access team Web portals by using Windows Internet Explorer. Also, you can use the Team Explorer client application to access all the Team Foundation Server services.

Visual Studio Team System is designed from the ground up to support the latest Agile development methods. It does not impose a specific methodology. Rather, it can be configured to support any number of different methodologies such as Scrum, the Unified Process, and the Microsoft Solutions Framework. What’s more, Visual Studio Team System can evolve as your team’s development methodology evolves, allowing the tools to support the process rather than the other way around.

This book is designed to help you get the most out of Visual Studio Team System. You will learn how to use Visual Studio Team System within the context of project management processes: initiating and planning a project, building and stabilizing the software, and closing the project. You will also learn how to tailor Visual Studio Team System to meet the specific needs of the team and the project.

Before we go any further into Visual Studio Team System, let’s step back and take a look at some of the other challenges that face software development teams and explore the nature of software engineering projects to see how Visual Studio Team System can help.

The Track Record

Software development projects in general have had a checkered past. As an industry we’ve had some revolutionary successes, including IBM’s OS/360 operating system, Microsoft Windows and Microsoft Office, and of course, the Internet. Unfortunately, we’ve also had more than our fair share of spectacular failures over the years. Here are some of the more notorious fiascos:

- A $250 million automated baggage handling system delayed the opening of the Denver International Airport by 16 months. The baggage system software never worked properly, and ultimately the entire system was dismantled and replaced (see http://www.ddj.com/dept/architect/184415403).

- The U.S. Federal Bureau of Investigation wrote off more than $100 million for its failed Virtual Case File system, a massive case management system that suffered from poorly specified and slowly evolving design requirements, overly ambitious schedules, and the lack of a plan to guide hardware purchases, network deployments, and software development for the Bureau (see “Who Killed the Virtual Case File?” in IEEE Spectrum Online, September 2005, at http://www.spectrum.ieee.org/sep05/1455).

- The U.S. Internal Revenue Service’s $8 billion Business Systems Modernization program was its third attempt to modernize its antiquated information systems. The size and complexity of the project, coupled with a lack of oversight and user involvement, have caused the program to run nearly 3 years late and more than $200 million over budget. The delay and cost overruns have compromised the agency’s ability to collect revenue and conduct audits (see “For the IRS There’s No EZ Fix” in CIO Magazine, April 2004, at http://www.cio.com/archive/040104/irs.html).

- The U.S. Federal Aviation Administration canceled its Air Traffic Modernization Program in 1994 after spending $2.6 billion on its Advanced Automation System (see “Observations on FAA’s Air Traffic Control Modernization Program,” U.S. General Accounting Office, 1999, at http://www.gao.gov/archive/1999/r199137t.pdf).

- Ariane 5 Flight 501, the maiden flight of the Ariane 5 rocket launcher for the European Space Agency, took place on June 4, 1996. Approximately 30 seconds after liftoff, the inertial guidance computer experienced a numeric overflow when converting a 64-bit floating point value to a 16-bit signed integer. As a result, the launcher veered wildly out of control and self-destructed, causing a $500 million loss (see “ARIANE 5 Flight 501 Failure, Report by the Inquiry Board,” European Space Agency, 1996, at http://homepages.inf.ed.ac.uk/perdita/Book/ariane5rep.html).
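
The kind of overflow behind the Ariane 5 failure is easy to reproduce in miniature. The following Python sketch is not the flight software (which was written in Ada); the function names and sample values are invented for illustration. It contrasts an unchecked narrowing conversion, which silently wraps around, with a checked conversion that rejects a value too large for a 16-bit signed integer.

```python
import ctypes

INT16_MIN, INT16_MAX = -32768, 32767


def to_int16_unchecked(value: float) -> int:
    """Narrow to a 16-bit signed integer, silently wrapping on overflow."""
    return ctypes.c_int16(int(value)).value


def to_int16_checked(value: float) -> int:
    """Narrow to a 16-bit signed integer, raising if the value does not fit."""
    truncated = int(value)
    if not INT16_MIN <= truncated <= INT16_MAX:
        raise OverflowError(f"{value!r} does not fit in a 16-bit signed integer")
    return truncated


if __name__ == "__main__":
    small, large = 1234.5, 40000.0  # hypothetical readings, not flight data
    print(to_int16_unchecked(small))  # 1234
    print(to_int16_unchecked(large))  # -25536 (wrapped around)
    try:
        to_int16_checked(large)
    except OverflowError as err:
        print("rejected:", err)
```

Neither behavior is safe on its own: a wrapped value is silently wrong, and a raised error helps only if something handles it. According to the inquiry board report, the exception raised by the conversion on Flight 501 was not handled, and the inertial reference system shut down.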

The loss caused by these failures goes well beyond the money invested in the development project. In some cases in which the software is part of a larger project, the failure caused delays and cost overruns that dwarfed the cost of the defective software. In other cases, the failure hindered the ability of an organization to perform its function, resulting in lost revenue that far exceeded the original software investment. And in a few extreme cases, poor software quality actually resulted in injury and death (see “An Investigation of the Therac-25 Accidents” at http://courses.cs.vt.edu/~cs3604/lib/Therac_25/Therac_1.html).

So, how bad is it really? A small research and consulting firm called the Standish Group went about answering that very question. Formed in the mid-1980s, the Standish Group started with a very specific focus: to collect and analyze case information on real-life software engineering projects for the purpose of identifying the specific factors that relate to success and failure. In 1994, the Standish Group published the Chaos Report, which is one of the most often quoted sources regarding the overall track record of software development projects. This report was based on the analysis of more than 8,000 software development projects representing a cross-section of industries. According to the report, a staggering 31 percent of all software development projects were failures, meaning that they were canceled before the software was completed. Only 16 percent of the projects were completed on time and on budget with all the features initially envisioned.

In 2004, the Standish Group released an update to their Chaos Report. They studied an additional 40,000 projects in the 10 years since the original report was issued. The good news is that project success more than doubled, from 16 percent to 34 percent. Conversely, project failure dropped by more than half, from 31 percent to 15 percent.

Although the trend is encouraging, we still have a long way to go. A 34 percent success rate means that 66 percent of the software projects failed to meet expectations. Very few other industries would tolerate this sort of performance. Would Toyota, or its customers, be satisfied if two-thirds of the cars it produced failed to work properly? Of course not. So, why does the software industry get away with it? Perhaps it’s because software-based automation has had such a profound effect on improving productivity. Despite the high failure rate, the overall return on investment has been satisfactory because the successes tend to produce huge gains that more than offset the losses due to failures. Just the same, as higher productivity becomes the norm, this sort of project performance will not be tolerated indefinitely. Software development organizations will be expected to improve and will be held to a higher standard. Which is to say that you, the project manager, will be held to a higher standard of performance too.

Visual Studio Team System can help you achieve that higher standard. It becomes another set of tools that you can add to your project management toolbox. Visual Studio Team System gives you unprecedented visibility into the status of work items in addition to the health of the product being developed. Automated reports and diagrams provide at-a-glance information in near real time. Visual Studio Team System not only saves you time by automatically collecting project-related information into a single data warehouse, it also distributes this information to team members in addition to other authorized stakeholders through team Web portals and automated reporting services.

Complexity and Change

Consider how far we’ve come since the IBM PC was introduced in 1981. At that time, the IBM PC shipped with the MS-DOS 1.0 operating system, which consisted of approximately 4,000 lines of code (see the Operating System Documentation Project at http://www.operating-system.org/betriebssystem/_english/bs-msdos.htm). In 2006, Microsoft introduced Windows Vista, which, according to Wikipedia (http://en.wikipedia.org/wiki/Source_lines_of_code), consists of a whopping 50 million lines of code. Although lines of code is a crude measure of complexity, it clearly illustrates the magnitude of the increase in software size since personal computers became popular.

| Note | Interestingly, the microprocessor of the original IBM PC contained 29,000 transistors, whereas today’s modern dual-core microprocessors contain more than 150 million transistors. Comparing lines of code to transistors, the operating system has grown by a factor of roughly 12,500, while the microprocessor it runs on has grown by a factor of roughly 5,000. In other words, the software has grown more than twice as much as the hardware. One might conclude that the complexity of the software is growing even faster than the complexity of the hardware. |
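
The comparison in the note can be checked with a few lines of arithmetic. This Python sketch uses only the approximate figures quoted above; the variable names are ours.

```python
# Approximate figures quoted in the text above.
DOS_LINES = 4_000                     # MS-DOS 1.0 lines of code
VISTA_LINES = 50_000_000              # Windows Vista lines of code
PC_TRANSISTORS = 29_000               # original IBM PC microprocessor
DUAL_CORE_TRANSISTORS = 150_000_000   # "more than 150 million"

software_growth = VISTA_LINES / DOS_LINES
hardware_growth = DUAL_CORE_TRANSISTORS / PC_TRANSISTORS

print(f"software grew {software_growth:,.0f}x")               # software grew 12,500x
print(f"hardware grew {hardware_growth:,.0f}x")               # hardware grew 5,172x
print(f"ratio: {software_growth / hardware_growth:.1f}")      # ratio: 2.4
```

Because the transistor count is a lower bound ("more than 150 million"), the ratio of about 2.4 should be read as an upper estimate rather than an exact figure.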

Imagine how many different paths of execution are possible in 50 million lines of code, and you begin to appreciate the fact that modern software rivals biological systems in complexity. Of course, very few of us are building operating systems or software applications this large, but we are all experiencing increasing complexity as we evolve from stand-alone applications to distributed service-based architectures.

Change compounds the challenge of software complexity. Software exists within the context of an ever-changing environment: changing requirements, changing operating systems, changing components and services, changing architectural paradigms, changing hardware platforms, and changing network topologies, to name a few.

This ever-growing complexity and accelerating change place more demands not only on the project team but also on the project manager. As the product grows more complex, the project to produce it also tends to grow more complex. There are more tasks for you to coordinate and more deliverables for you to track. Rapid change requires you to be adaptive and keep your projects agile. You need to be able to quickly replan in order to respond to the inevitable changes that will occur.

Visual Studio Team System provides several mechanisms for dealing with complexity and change. Work item tracking allows you to quickly create task lists that are instantly available to all team members and easily revise the task lists to reflect changes in the project. Team members can conveniently update their tasks from within Visual Studio, Office Excel, or Office Project or by using a growing number of third-party Team Foundation Server client applications. They can also create their own work items as needed, allowing them to more accurately track their own activities and coordinate work. In some cases, the system can automatically create work items, such as when a build fails. In this way, work item tracking in Visual Studio Team System reduces the effort required by you to manage complex and rapidly changing projects.

You can use work item tracking to manage items other than tasks. For instance, you can manage requirements and track bugs as Visual Studio Team System work items. You can even invent your own work item types as needed or modify existing work item types to better meet the needs of the team and its stakeholders. The flexible nature of Visual Studio Team System work items enables the team to better handle rapid change and also increases the likelihood that the work items accurately reflect the true state of the project.

Visual Studio Team System offers other team members some great tools for managing complexity. For instance, Visual Studio Team Edition for Software Architects includes the Distributed Systems Designers, which allow solution architects to model sophisticated applications based on distributed, service-oriented architectures. Skeletal implementations can be generated directly from these models, and the models automatically update themselves to reflect changes made directly to the code. In this way, the models provide a visual representation that communicates the intent and design of an application better than does source code alone. Visual Studio Team Edition for Software Developers contains refactoring tools that enable developers to remove unnecessary complexity from applications and unit-test tools to verify that the application is working correctly. Visual Studio Team Edition for Software Testers contains additional test tools that enable software testers to identify defects and monitor stability as the software evolves. Team Foundation Server contains a build system called Team Build, which automates the process of building complex applications. It can also be used to monitor the health of the application by running daily builds and tests to identify any integration issues that may have been caused by recent changes. This early warning system provides the feedback necessary for the team to keep a complex application stable as it evolves. Team Build also records comprehensive build data to the Team Foundation Server data warehouse for analysis and reporting.

The Human Factor

Many organizations have tried to control software development projects by prescribing a detailed methodology. In their 1987 book Peopleware (Dorset House Publishing), Tom DeMarco and Tim Lister call this a Big M methodology: one that attempts to specify a procedure for every aspect of the software development process in much the same way a cookbook recipe specifies the sequence of steps to produce a cake. At first glance, this approach seems reasonable. Prescribing the process in this manner should produce consistent, repeatable results. Unfortunately, building software is nothing like baking a cake. The process of baking a cake is well understood and deterministic. If you accurately follow the recipe, you get the same result time after time with little variability.

Building software, by contrast, is far less predictable. Unlike baking a cake, in which the goal is the same result time after time, each software program is a unique creation with its own unique issues. Even the most careful and methodical developers produce software that displays unintended behavior. Occasionally, this unintended behavior is a happy accident that actually enhances the program, but more often than not it’s a defect that must be corrected.

Because each software application is new, unique, and somewhat unpredictable, it stands to reason that the activities to produce the software will differ from one application to the next. For this reason, software development does not fit neatly into a prescribed, Big M methodology. It’s neither possible nor desirable to create a detailed process that will work for every possible situation. Good things happen when you allow a team to innovate, try new things, learn from their failures, and build on their successes. This sort of team behavior, which Craig Larman calls emergent behavior in his 2003 book Agile and Iterative Development: A Manager’s Guide, First Edition (Addison-Wesley Professional), is essential for successful software development, yet it is the antithesis of Big M methodology. The people involved are going to have a far greater effect on the success of a software development project than a prescribed process. If the team members are competent and motivated, they will get the job done regardless of the process imposed upon them. Without a competent and motivated team, however, the results will almost always be unsatisfactory regardless of the process used. Put simply, people trump process every time.

That being said, even a competent and motivated team can benefit from guidance that describes in general terms its overall approach to software development. This sort of guidance is sometimes referred to as a little m or lightweight methodology. It tends to focus on describing a process framework without going into too many details. Microsoft’s MSF for Agile Software Development is a good example of a lightweight methodology. It describes a process based on incremental, iterative development. Although MSF includes prescriptive guidance in the form of workstreams and activities, this guidance is cursory, consisting mainly of bullet points designed to serve as a checklist rather than a procedure.

Visual Studio Team System is designed to support a wide range of software development methodologies. Team Foundation Server includes process templates for two different methodologies: MSF for Agile Software Development and MSF for CMMI Process Improvement. Both methodologies describe an iterative, incremental approach to software development. Both methodologies also describe the same team model and the same principles and mindsets. The main difference lies in the level of detail that each methodology offers. MSF for Agile Software Development provides a lightweight set of workstreams, activities, work products, and reports. It is designed for self-directed teams operating in an agile environment. MSF for CMMI Process Improvement, on the other hand, is designed for organizations that require a more formal approach. As such, it is more prescriptive, providing a comprehensive set of workstreams, activities, work products, and reports.

Regulatory Requirements

Recent years have seen a significant increase in the number of regulations that impact information systems. As a result, software development organizations that produce these information systems must be diligent in their compliance or run the risk of stiff penalties and in some cases even criminal charges that can result in jail time. Let’s take a look at two examples, SOX and HIPAA.

In response to the massive corporate accounting scandals including those of Enron, Tyco, and WorldCom, the United States government enacted the Sarbanes-Oxley Act of 2002. Often referred to as SOX, this federal law requires that the Chief Executive Officer (CEO) and the Chief Financial Officer (CFO) of any publicly traded corporation personally certify the accuracy of the corporation’s financial reports. This law has sharp teeth: chief executives face stiff jail sentences and large fines for knowingly and willfully misstating financial statements. What’s more, these officers must certify an annual report to the federal government stating that their company has “designed such internal controls to ensure that material information relating to the company and its consolidated subsidiaries is made known to such officers by others within those entities, particularly during the period in which the periodic reports are being prepared.”

The law also requires the signing officers to certify that they “have evaluated the effectiveness of the company’s internal controls as of a date within 90 days prior to the report.” This evaluation requires the implementation of an internal control framework that encompasses all the information systems that generate, manage, or report financial information. In other words, IT organizations must maintain strict controls on all aspects of these systems, including their design, implementation, and maintenance. SOX mandates that any software development project impacting financial reporting in any way is subject to an internal controls audit. As a result, these projects require a great deal of control and record keeping.

But the impact of regulations doesn’t stop there. Industry-specific regulations also impact software development projects. For instance, the health care industry in the United States has the Health Insurance Portability and Accountability Act (HIPAA). Enacted in 1996, HIPAA contains a broad-ranging set of reforms designed to protect health insurance coverage for workers and their families when they change or lose their jobs. In addition, HIPAA contains Administrative Simplification (AS) provisions, which address, among other things, the privacy and security of health data. The Privacy Rule regulates the use and disclosure of any health-related information that can be linked to an individual. Organizations that handle private health information must take reasonable steps to ensure confidentiality, make a reasonable effort to disclose the absolute minimum amount of information to third parties for treatment or payment, and keep track of all such disclosures. In addition, HIPAA mandates standards for three types of security safeguards related to private health information: administrative, physical, and technical. Obviously, information systems that contain private health information must comply with the HIPAA privacy and security rules. Failure to do so could result in severe monetary penalties.

Visual Studio Team System addresses the need for control and record keeping through the use of work items. Work items not only help the team manage the flow of work and work products in a project, they also provide historical documentation on the work that was done and the work products affected. Also, Team Foundation Server version control can be configured with a check-in policy that requires each set of source code revisions to be associated with a work item, creating an audit trail that extends all the way to individual file changes. In this way, Visual Studio Team System generates compliance data automatically, as a by-product of the normal workflow.
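
To make the audit-trail idea concrete, here is a minimal Python sketch of a policy that rejects any check-in not linked to a work item. It is a toy model, not the Team Foundation Server API: `Changeset`, `Repository`, and `PolicyViolation` are hypothetical names introduced only for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Changeset:
    """A hypothetical set of source revisions checked in together."""
    author: str
    files: list[str]
    work_item_ids: list[int] = field(default_factory=list)


class PolicyViolation(Exception):
    """Raised when a check-in does not satisfy the policy."""


class Repository:
    """Toy repository that records which files changed for each work item."""

    def __init__(self) -> None:
        self.audit_trail: dict[int, list[str]] = {}

    def check_in(self, changeset: Changeset) -> None:
        # The policy: every check-in must reference at least one work item.
        if not changeset.work_item_ids:
            raise PolicyViolation("check-in must be associated with a work item")
        for item_id in changeset.work_item_ids:
            self.audit_trail.setdefault(item_id, []).extend(changeset.files)


if __name__ == "__main__":
    repo = Repository()
    repo.check_in(Changeset("dana", ["billing.py"], work_item_ids=[101]))
    print(repo.audit_trail)  # {101: ['billing.py']}
```

Because the link is enforced at check-in time, the mapping from work item to changed files accumulates as a side effect of normal work rather than being reconstructed later for an audit.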

IT Governance

Another challenge that organizations face is how to maximize the value of their investment in information technology. Perhaps the best way to explore this challenge is to consider the point of view of the Chief Information Officer (CIO). The CIO is responsible for successfully executing business strategy through the effective use of information technology. As such, the CIO has one foot in the business world and one foot in the technology world. Although this executive is responsible for the effective use of technology, the CIO’s primary focus is not on technology per se. Rather, the CIO is focused on maximizing the flow of value from the IT organization to the rest of the company. To that end, CIOs spend a great deal of time thinking about IT governance because IT governance is the primary vehicle for guiding the performance of the IT organization.

The term flow of value expresses a concept borrowed from the lean manufacturing movement. Actually two concepts are embodied in this term. Value refers to the business value a given asset or service offers. Flow refers to the ongoing production and delivery of that business value. For instance, a Customer Relationship Management (CRM) system enables a company to do a better job of meeting its customers’ needs, which in turn leads to greater revenue for the company. By implementing the CRM system, the IT organization generates business value that benefits the company. In this context, the flow of value refers to the delivery of business value from the IT organization to the rest of the company. But flow of value can also refer to the process used to create business value within the IT organization. When people start thinking in terms of the flow of value within this context, value-added practices start replacing wasteful practices, which in turn leads to increased productivity, increased throughput, reduced costs, improved quality, and increased customer satisfaction.

What is IT governance? Dr. Peter Weill, Director of the Center for Information Systems Research at the MIT Sloan School of Management, describes IT governance as a “decision rights and accountability framework to encourage desirable behavior in the use of IT” (see Weill’s 2004 article “IT Governance on One Page,” co-authored by Jeanne W. Ross, at http://mitsloan.mit.edu/cisr/r-papers.php). He goes on to say that IT governance need not be an elaborate set of policies and procedures. Rather, Dr. Weill claims that an organization can communicate its IT governance in a one-page document that lays out a vision for the IT organization, states who can make what decisions and who is accountable for what outcomes, and describes the desirable behaviors such as sharing, reuse, cost savings, innovation, and growth.

Visual Studio Team System supports IT governance through its process guidance and process metrics. Each Team Project in Visual Studio Team System includes a project Web portal that contains, among other things, process guidance documentation. The process documentation for both versions of MSF describes a team model that specifies roles and responsibilities in terms of advocacy groups. The MSF process documentation also describes desirable behaviors in terms of principles and mindsets and governance checkpoints that serve as process gates. You can customize this process guidance to meet the specific needs of your organization, or you can even replace it completely.

As software development teams use Visual Studio Team System, the Visual Studio Team System data warehouse accumulates a wealth of information about both the development project and the product being developed. Project performance can be measured using work item status and work item history. Product health can be monitored via build status and measured through a variety of quality indicators including bug counts, unit testing statistics, code coverage, and code churn. This information can be incorporated into the IT governance process, providing objective performance metrics that serve as the basis for decision making.
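
As a rough illustration of how such indicators roll up, the Python sketch below summarizes a list of per-build records. The `BuildRecord` fields and the `summarize` function are hypothetical; they do not reflect the actual data warehouse schema. Code churn is counted here as lines added plus lines modified plus lines deleted, which is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class BuildRecord:
    """Hypothetical per-build data, loosely based on the indicators above."""
    succeeded: bool
    tests_run: int
    tests_passed: int
    lines_added: int
    lines_modified: int
    lines_deleted: int

    @property
    def code_churn(self) -> int:
        # Churn counted as lines added + modified + deleted (an assumption).
        return self.lines_added + self.lines_modified + self.lines_deleted


def summarize(builds: list[BuildRecord]) -> dict[str, float]:
    """Roll a non-empty list of builds up into a few at-a-glance indicators."""
    if not builds:
        raise ValueError("at least one build record is required")
    tests_run = sum(b.tests_run for b in builds)
    tests_passed = sum(b.tests_passed for b in builds)
    return {
        "build_success_rate": sum(b.succeeded for b in builds) / len(builds),
        "test_pass_rate": tests_passed / tests_run if tests_run else 0.0,
        "total_churn": sum(b.code_churn for b in builds),
    }


if __name__ == "__main__":
    history = [
        BuildRecord(True, 100, 95, 40, 10, 5),
        BuildRecord(False, 100, 80, 200, 50, 25),
    ]
    print(summarize(history))
    # {'build_success_rate': 0.5, 'test_pass_rate': 0.875, 'total_churn': 330}
```

A spike in churn alongside a falling test pass rate, as in the second record, is the kind of pattern a governance review might flag for a closer look.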
