The SQL Server Operating System (SQLOS)

| An instance of a running application, known as a process, is merely a container for one or more threads that are scheduled to run on a processor for a time slice determined by the operating system. Threads allow applications to make more effective use of a CPU, even on a computer that has a single CPU. Windows is a preemptive, multitasking operating system. That means in order to run application code, the operating system grants a time slice to the thread. And when the time slice is over or when a high-priority thread needs to run, the operating system saves the contextual information for the current thread, preempts or stops the running thread, and loads the contextual information of the other thread so the other thread can run. This approach is designed to keep a single application from taking over the system and to provide even performance across the system. User Mode Scheduler (UMS)SQL Server efficiently leveraged the previously discussed Windows scheduling facility up through SQL Server 6.5. However, this general-purpose, one-size-fits-all scheduling approach limited the scalability heights that SQL Server was trying to achieve. The Windows preemptive scheduling approach results in context switches (stopping one thread and running another thread), which are expensive operations and involve switching from user mode to kernel mode. In a process such as SQL Server that makes use of a lot of threads, excessive context switching has a negative impact on the overall performance of and limits the scalability of SQL Server. This is why the SQL Server team decided that SQL Server should handle its own scheduling. SQL Server knows its own scheduling needs better than Windows does. Therefore, SQL Server can do a better job than Windows of implementing efficient thread scheduling and avoiding context switching. SQL Server 7.0 first introduced the notion of User Mode Scheduler (UMS), which is a thin layer above the operating system, whose primary job is to optimize SQL Server thread management by minimizing the context switches and keeping as much of the SQL Server scheduling process as possible in user mode. UMS functionality is provided in a file named ums.dll under the binn folder. In addition to taking over scheduling from Windows, UMS also abstracts operating systemdependent features such as fibers and asynchronous I/O. But how does UMS take over scheduling from the preemptive operating system? UMS has Windows believe that all threads except the one that UMS wants to run are not viable. If a thread is in an infinite wait state, that means if a thread calls WaitForSingleObject and passes INFINITE for the timeout value, Windows considers the thread not viable for scheduling purposes and ignores it. The only way to awaken such a sleeping thread is to signal the thread's event object. To the operating system, only one SQL Server thread per processor generally appears to be active at a time. So, even though the server may have hundreds of worker threads at any given time, only one of them for each processor on the server appears to Windows to be actually doing anything. The Windows scheduler is a preemptive scheduler. UMS, on the other hand, follows the cooperative model and is a non-preemptive scheduler. UMS relies on threads to yield voluntarily. UMS takes this approach to keep from involving the Windows kernel any more than absolutely necessary. UMS cooperative scheduling requires more careful coding on the part of SQL Server development team, but it can actually be more efficient than a preemptive model because the scheduling process can be tailored to the specific needs of the application. When a thread yieldseither because it has finished the task at hand or because it has executed code with an explicit call to one of the UMS yield functionsit checks the list of threads that are ready to run and signals the first thread in the list that it can run. This way, everything happens in user mode, avoiding a switch to kernel mode. When SQL Server starts, one UMS scheduler is created for each processor in the machine. Each scheduler maintains the following five lists:

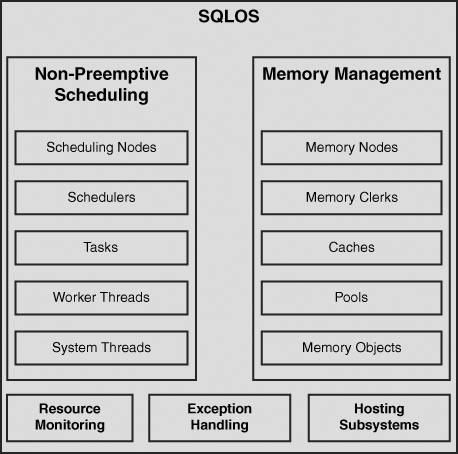

In SQL Server 7.0 and 2000, you used the DBCC SQLPERF(umsstats) undocumented statement to monitor the health of each visible scheduler on the system. In SQL Server 2005, you can access the sys.dm_os_schedulers dynamic management view (DMV) to list statistics for both visible and hidden schedulers on the system. DMVs are a new type of metadata views provided for monitoring and troubleshooting purposes. They essentially provide a real-time snapshot of internal memory structures, indicating the server state. DMVs are discussed in Chapter 9, "Performance Analysis and Tuning." Introducing SQLOSThe UMS-based thread management architecture made SQL Server self-managing and easy to scale as new CPUs are added to the machine. During the SQL Server 7.0 rewrite, when UMS was introduced, the memory manager, storage engine, and relational engine were also upgraded to have built-in adaptive algorithms and self-tuning capabilities. When SQL Server 7.0 was shipped in 1998, it truly was the first enterprise database engine that was capable of automatic configuration and dynamic self-tuninga concept that other database vendors initially downplayed as "not for the enterprise" but are now aggressively pursuing. In SQL Server 2005, Microsoft has taken the self-managing and self-tuning paradigm to a much higher level. As a result, a component named SQLOS was born. SQLOS is a layer that sits on top of the underlying operating system and is responsible for managing operating system resources that are specific to SQL Server. SQLOS gives SQL Server the ability to serve its internal resource requirements much more efficiently and comprehensively. Each component within SQLOS is dedicated to performing specific functions as well as working with other components in a harmonious manner, providing large-scale performance with the ease of adapting to different hardware configurations, such as 32-bit, 64-bit, x64, dual core chips, and large memory addressability. In other words, no configuration changes are necessary within SQL Server in order for SQLOS to adapt to hardware resources while providing unprecedented scalability. You will notice that SQL Server 2005 does not ship ums.dll anymore. UMS is now referred to as the "Non Preemptive Scheduler," and it is one of the components of SQLOS. Figure 2.1 shows various SQLOS components. Figure 2.1. SQLOS is a user mode operating system layer that sits between the SQL Server engine and the operating system and provides services such as thread management, memory management, and hosting services. As Figure 2.1 shows, the two big components of SQLOS are non-preemptive scheduling (formerly known as UMS) and memory management. Other components include the resource monitor, exception handler, and hosting subsystems. SQLOS brings all these system components together and provides a cohesive API that the SQL Server development team can use to easily exploit hardware and operating system features. Non-Preemptive SchedulingThe non-preemptive scheduling component of SQLOS is used for scheduling and synchronizing concurrent tasks without having to call the Windows kernel. It is responsible for scheduling Windows threads or fibers effectively. The scheduler is responsible for managing the scheduling process and for ensuring that only one thread processor is active at any given time. The sys.dm_os_schedulers DMV can be used to view a list of schedulers. A collection of schedulers that provides an abstraction layer over a group of CPUs is called a scheduling node. The term task refers to a unit of work that is scheduled by SQL Server. A Transact-SQL (T-SQL) statement batch can map to one or more tasks. For instance, a parallel query is executed by multiple tasks. The tasks are executed by worker threads. A worker thread represents a logical thread in SQL Server that is internally mapped (1:1) to either a Windows thread or, if lightweight pooling is on, to a fiber. The worker thread-to-Windows thread mapping is maintained until the worker thread is deallocated either because of memory pressure or because it has been idle for a long time. Worker threads are executed and managed by system threads. The max worker threads OptionEach instance of SQL Server maintains a pool of either Windows threads or fibers for processing user queries. This thread pool helps optimize performance when large numbers of clients are connected to the server. The maximum size of this pool is controlled by the max worker threads server configuration option. The minimum and default values for the max worker threads advanced option have changed in SQL Server 2005. In SQL Server 2000, the minimum value that can be set for the max worker threads option is 32; it is 128 in SQL Server 2005. In SQL Server 2000, the max worker threads option defaults to 255. In SQL Server 2005, this option is by default set to 0, which allows SQL Server to automatically configure the number of worker threads at startup. If the max worker threads option is left at the default of 0, SQL Server 2005 uses the formula shown in Table 2.1 to set the max worker threads configuration value.

For instance, on an eight-processor 32-bit machine, max worker threads defaults to 256+((84)*8), which equals 288. On an eight-processor 64-bit machine, max worker threads defaults to 512+((84)*16), which equals 576. It is important to note that SQL Server does not actually create all the threads, but it reserves the memory required for creating the number of threads specified by the max worker threads option. When the actual number of user connections is smaller than the number set in max worker threads, one thread handles each connection. However, when the actual number of connections exceeds the number set in max worker threads, SQL Server pools the worker threads so that the next available worker thread can handle the request. Routing Tasks to a SchedulerSQL Server 2000 chose the scheduler in a round-robin fashion at connection time. All batches on a connection were run on the same scheduler, which could lead to imbalances, especially with long-lived connections. In SQL Server 2005, the decision to choose a scheduler is based on how busy the scheduler is. In other words, unlike in SQL Server 2000, where a task was routed to a particular scheduler, in SQL Server 2005, the routing decision is made based on the load on the scheduler. At connection time, SQL Server chooses a scheduler based on the load on the schedulers. Each subsequent batch on the same connection uses the same algorithm, and a new scheduler is chosen based on the load, with a bias toward the current scheduler for the connection. That is, if the load on the current scheduler (where the last batch for the connection was executed) is not appreciably higher than the average load, SQL Server does not look for a new scheduler; instead, it utilizes the warm cache and minimizes the CPU cycles used to find the least loaded scheduler. The columns such as load_factor and runnable_tasks_count in sys.dm_os_schedulers can be used to determine how busy a scheduler is. Memory ManagementOn a 32-bit x86 platform, Windows gives all processes a 4GB virtual address space (VAS), which is divided into two partitions of 2GB each: the user mode partition and the kernel mode partition. If an application demands more than 2GB of user mode VAS, you can place a /3GB switch in the system's BOOT.INI file to limit the kernel VAS to 1GB and provide 3GB of user mode VAS to the application. Windows XP and Windows 2003 support an additional /USERVA switch in the BOOT.INI file that provides a finer degree of control by allowing you to specify exactly how much address space to set aside for user mode access. If even 3GB memory is not sufficient, you can use a Pentium Pro or later processor, place the /PAE switch in your BOOT.INI file, and leverage the Address Windowing Extensions (AWE) facility provided by Windows to access up to 64GB of physical memory. Note that by reducing the kernel portion of VAS to 1GB, you reduce the space available to support internal management structures. If there is more than 16GB of physical memory available on a computer, the operating system needs 2GB of process address space for system purposes and therefore can support only a 2GB user mode address space. In order for AWE to use the memory range above 16GB, you need to be sure that the /3GB parameter is not in the BOOT.INI file. If it is, the operating system cannot address any memory above 16GB. SQL Server divides its VAS into two regions: the buffer pool and reserved address space. The buffer pool, also known as the BPool, is a primary memory allocation source for SQL Server, and it is used as a data and index page cache and for memory allocations smaller than 8KB. The BPool size is governed by the min server memory and max server memory configuration options. The BPool never drops its memory allocation below the level specified in min server memory, and it never acquires more memory than the level specified in max server memory. Objects that use the BPool include the buffer cache, the procedure cache, system-level structures, locks, log caches, and connection contexts. The second region in SQL Server VAS is often called MemToLeave, which actually is an incorrect term; the correct term for this memory region is reserved address space. The reserved address space region is used for internal SQL Server allocations that exceed 8KB of contiguous space and for allocations made by external consumers, such as extended stored procedures, OLE DB providers, in-process COM objects, SQLCLR (.NET common language runtime integration with SQL Server) assemblies, and so on. The reserved address space is a contiguous allocation of memory of size 256MB+(max worker threads*512KB). For instance, if max worker threads is set to 256, the reserved address space size is 256MB+(256*512KB), which equals 384MB. As shown in Figure 2.1, the SQL Server 2005 memory management architecture consists of several components, such as memory nodes, memory clerks, caches, pools, and memory objects. The memory node component is responsible for providing the locality of allocations. It consists of the single-page allocator, the multi-page allocator, the large page allocator, the reserved page allocator, the virtual allocator, and the shared memory allocator. Memory clerks, which are the key to granular memory management in SQL Server 2005, enable SQLOS to track and control the amount of memory consumed by a component. The sys.dm_os_memory_clerks DMV can be used to figure out memory distribution and to see a list of active memory clerks and the amounts of different kinds of memory allocated by each clerk. For example, the following query uses the sys.dm_os_memory_clerks DMV to list each type of memory clerk along with the sum of reserved virtual memory, committed virtual memory, and single and multi-pages allocated by each clerk: SELECT [type], SUM(virtual_memory_reserved_kb) AS TotalVirMemReservedKB, SUM(virtual_memory_committed_kb) AS TotalVirMemCommittedKB, SUM(multi_pages_kb) AS TotalMultiPagesKB, SUM(single_pages_kb) AS TotalSinglePagesKB FROM sys.dm_os_memory_clerks GROUP BY [type] ORDER BY TotalVirMemCommittedKB DESC, TotalVirMemReservedKB DESC, TotalMultiPagesKB DESC, TotalSinglePagesKB DESC; You can use the sys.dm_os_virtual_address_dump DMV to obtain detailed information about the VAS. In addition to the architectural change just discussed, the following are some more changes that have been introduced to memory management in SQL Server 2005:

|

EAN: 2147483647

Pages: 150

4

4