Practice 1. Ruthless Testing

Ruthless testing is all about developers doing absolutely everything in their power to ensure that their software is as heavily tested as it can be before it reaches people, both QA and customers. Ruthless testing is one of the most crucial practices of sustainable development, and if there is one practice to focus on, this is it. Ruthless testing is primarily about automated testing. Developers should not do any of the following:

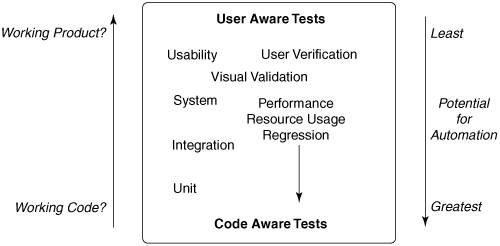

All of the above would be a waste of time, effort, and money. Developers have access to a testing resource that is excellent at repeatedly performing mind-numbingly dull tasks: a computer! Tests can be written as code, so software development remains a coding exercise: completing a feature means writing more code, and usually more of that code is dedicated to testing than to implementing the feature. Developers still have to do some user-level testing, just as they do today. The difference is that as developers add and change functionality and fix defects, they put safeguards in place in the form of automated tests. These automated tests are then run as often as possible to give the developers confidence that their product behaves as expected and keeps behaving as expected over time.

There are multiple types, or levels, of testing that can (and should) be used in ruthless testing, as shown in Figure 5-3. Each of these types has a different degree of effectiveness, as described in more detail in the following sections.

Figure 5-3. The different types, or levels, of tests that should be utilized in ruthless testing. The types of tests are organized by how aware they are of user tasks and code implementation.

User-awareness indicates how closely the tests replicate the exact tasks that users perform. Code-awareness indicates how much knowledge the tests have of the actual implementation of the product. Test automation is a critical part of ruthless testing. Code-aware tests have the greatest potential for automation and provide the greatest return for the least effort. The user-aware tests of usability, user verification, and visual validation are rarely automated because each of them requires human effort to design the tests and to carefully observe and analyze the results.

However, while code-aware tests can determine whether the code is working as expected, they do not necessarily ensure that the product works as users expect. Hence, ruthless testing requires a strategy that mixes both code-level and user-level tests.

Unit Tests: Test-Driven Development

Unit tests are the lowest level of tests you can create for software because they are written with explicit knowledge of the software's classes, methods, and functions. They essentially consist of calling methods or functions to ensure that the results are as intended. Test-driven development means writing the unit tests for new code before writing the code and then ensuring that the tests always pass whenever new changes are made [Astels 2003] [Beck 2002a] [Husted and Massol 2003] [Link and Frolich 2003]. In test-driven development, tests are run every time the developer builds the software. The result of the build is either a green light (all tests passed) or a red light (a test failed). Test-driven development is a powerful way to develop software. It should not be viewed as optional, and it is a crucial part of achieving sustainability. Some of the benefits of test-driven development are:

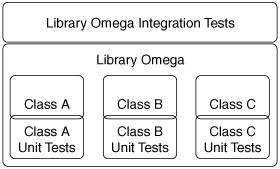
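
To make the test-first rhythm concrete, here is a minimal sketch using Python's standard `unittest` module. The function `word_count` is hypothetical. In test-driven development, the three tests would be written first and would fail (the red light); the implementation would then be written until they all pass (the green light), and the suite would be rerun on every build from then on.

```python
import unittest


def word_count(text: str) -> int:
    """Hypothetical function under test: counts whitespace-separated words."""
    # Written after the tests below, with just enough logic to make them pass.
    return len(text.split())


class WordCountTest(unittest.TestCase):
    # Notionally written before word_count existed, when they produced a red light.

    def test_empty_string_has_no_words(self):
        self.assertEqual(word_count(""), 0)

    def test_words_separated_by_single_spaces(self):
        self.assertEqual(word_count("ruthless testing pays off"), 4)

    def test_runs_of_whitespace_are_ignored(self):
        self.assertEqual(word_count("  tabs\tand\nnewlines  "), 3)
```

Saved as a module, the suite can be run on every build with `python -m unittest`, which reports either that all tests passed or which ones failed.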

Tip: Use Mock Objects!

If you deal with databases, network interfaces, user interfaces, or external applications, you should learn about mock objects [Thomas and Hunt 2002] [http://www.mockobjects.com]. Mock objects are essentially a design and testing technique that lets you simulate an interface so that you can test your code in isolation while controlling the types of events and errors that reach your code.

Tip: Be Creative When Implementing Automated Testing

Automated testing doesn't always have to mean manually specifying calls, expected return values, and error conditions, as in JUnit. In many cases, it is possible to write a program to generate tests for you. For example, suppose you have a piece of software (a method, class, or component) that accepts a string of flags just like a typical UNIX utility (e.g., grep, awk, sed). You could write a program that calls the code with the flags in all possible orders. This type of test is extremely thorough and bound to pay off. I have seen cases where tests were added in this way to libraries that had been in use for years without any tests, and serious problems were uncovered; in some cases, problems that had long been outstanding and were thought to be unreproducible were finally fixed!

Integration Tests

Integration tests test the interface exposed by a collection of classes that are linked or grouped into a library, module, or component. Unlike unit tests, they have no knowledge of the library's implementation. Hence, integration tests can be a useful mechanism to document and test the expected behavior of a library. Figure 5-4 illustrates the difference between unit and integration tests. In this example, the unit tests for each class in the library test the class's public and private interfaces, while the library's integration tests only test the collective public interface of the library. In this sense, integration tests could be considered optional, because they test a subset of what the collective unit tests cover.
However, integration tests can still be useful because they are created and maintained separately from the unit tests, especially if the programmers who depend on the library use the integration tests to document how their code expects the library to behave. The tests don't need to be complete; they only need to test the expected behavior. Integration tests are most useful in two situations: when a library is used in more than one application (or program), and when a third-party library is used.

Figure 5-4. Integration tests are different from unit tests. In this example, a library called Omega is made up of three classes. Each class has its own unit tests, and the library has a separate set of integration tests.

One example of when to use integration tests is when you are dealing with a third-party library or any library that the product imports in precompiled form. What you need is a test harness and a set of tests that realistically represent how you use that library: the methods you call and the behavior you expect when you call them. You often don't need to test all the interfaces exposed by the library, just the ones you depend on. Then, as new versions of the library are shipped to you, all you need to do is plug the new version into your test harness and run the tests. The more portions of your software architecture that you can treat as third-party libraries and test in this way, the more robust your architecture's interfaces will be. Having sound interfaces gives you a great deal of flexibility in the future, because at any time you could choose to replace the implementation of one of these interfaces without disrupting the entire system. This is a huge benefit and speaks to the need for well-designed interfaces that are easily tested.
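
The harness idea can be sketched with Python's `unittest`. In this sketch the standard-library `json` module stands in for an externally supplied library, and the three behaviors tested (round-tripping data, deterministic output with `sort_keys=True`, and raising `ValueError` on malformed input) are hypothetical examples of what a product might depend on. Only those behaviors are tested, not the library's whole API.

```python
import json
import unittest


class JsonLibraryContract(unittest.TestCase):
    """Integration tests for the parts of an external library the product uses.

    json stands in for a third-party library; each test records one
    (hypothetical) assumption the product makes about it.
    """

    def test_round_trip_preserves_nested_data(self):
        data = {"name": "omega", "sizes": [1, 2, 3], "active": True, "parent": None}
        self.assertEqual(json.loads(json.dumps(data)), data)

    def test_sort_keys_gives_deterministic_output(self):
        # Assumed dependency: serialized output is compared or hashed,
        # so it must not depend on dictionary insertion order.
        first = json.dumps({"b": 1, "a": 2}, sort_keys=True)
        second = json.dumps({"a": 2, "b": 1}, sort_keys=True)
        self.assertEqual(first, second)
        self.assertEqual(first, '{"a": 2, "b": 1}')

    def test_malformed_input_raises_value_error(self):
        # Assumed dependency: our error handling catches ValueError
        # (json.JSONDecodeError is a subclass of it).
        with self.assertRaises(ValueError):
            json.loads("{not valid json")
```

When a new version of the library arrives, the same suite is simply rerun against it; a failure points directly at an assumption the product makes that the new version no longer honors.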

System Tests

System tests are automated tests that cover the functioning of the complete, integrated system or product. System tests know nothing about the structure of the software, only about what the product is intended to do from the user's standpoint. System tests are intended to mimic the actions a user will take, such as mouse movements and keyboard input, so that these inputs can be fed into the application in the desired order and with the desired timing.

System tests are the most difficult type of test to implement well. This is because, to be useful over the long term, system test capabilities must be designed into the software, and it is exceptionally difficult to add them to existing software. For new software, the extra design effort is worth it, since well-thought-out system tests can be extremely effective. They can significantly contribute to the productivity of the development team by reducing the dependence on people to test the software manually and by providing an additional mechanism to prevent defects from reaching people (i.e., QA and customers).

Design testability into your product. Too many teams rely on external test harnesses or manual testing by people and learn to regret not investing the extra effort. The complexities of modern computer applications make it difficult to create automated system tests. Modern applications contain complexities such as multiple threads of execution, clients and servers that exchange unsynchronized data, multiple input devices, and user interfaces with hundreds of interface elements. The result is highly nondeterministic behavior that, without built-in testability, will lead to unreproducible problems.

Record and Playback

Because of the complexity of automating system-level testing, most software organizations choose to leave system testing to their QA organization (i.e., people).
However, this is a wasted opportunity because most software products, especially new ones, could greatly benefit from a record and playback architecture. Many applications have a built-in logging capability: as the application runs, it records the actions it is taking in a log file. Log files can be useful when debugging a problem. In most cases, however, logging is only intended for the programmers. Imagine if it were possible to read the log file back into the application and have the application perform all the operations recorded in the log file in the same sequence and at the same time, producing the same result as when the recording was made. That is record and playback. With such an architecture, recreating problems and debugging them becomes almost trivial [Ronsse et al 2003]. Record and playback is equally effective in deterministic and nondeterministic applications[1].

One highly nondeterministic domain where record/playback is widely employed is the computer gaming industry. Game companies invest a great deal of effort in creating special-purpose game engines. These engines take the game terrain, levels, characters, props, etc. and render them in real time in response to the inputs of one or more users. Game engines are complex pieces of software that must handle multiple inputs (such as in a multiplayer game), where characters can interact with each other and with the contents of the scene. These interactions have a notion of time, space, and sequence: they differ depending on where a character is relative to other characters at any given time. Because of these complexities, many game companies have by necessity designed record/playback into their game engines so they can quickly diagnose problems that would otherwise be virtually impossible to reproduce and fix.
In some 3D game engines, playback even allows the use of a completely different camera viewpoint, which is like being a neutral third-party observer of the game play. Many useful articles about these game engines can be found on game developer web sites such as http://www.gamasutra.com.
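
A minimal record-and-playback sketch in Python follows. It assumes an application designed so that every state change goes through a single `apply()` entry point; `Counter`, `Recorder`, and `play_back` are hypothetical names, and the in-memory list of JSON lines stands in for a real log file. The key design choice is that nondeterministic inputs (here, a random amount) are written to the log when they occur, so playback reproduces them exactly instead of generating them again.

```python
import json
import random
import time
from typing import List


class Counter:
    """Hypothetical application whose state changes only through apply()."""

    def __init__(self) -> None:
        self.value = 0
        self.history: List[int] = []  # state after each command, for comparison

    def apply(self, command: dict) -> None:
        if command["op"] == "add":
            self.value += command["amount"]
        elif command["op"] == "reset":
            self.value = 0
        else:
            raise ValueError(f"unknown op: {command['op']}")
        self.history.append(self.value)


class Recorder:
    """Logs every command, with its time offset, before applying it."""

    def __init__(self, app: Counter) -> None:
        self.app = app
        self.log_lines: List[str] = []  # one JSON object per line, as in a log file
        self._start = time.monotonic()

    def do(self, command: dict) -> None:
        entry = {"t": time.monotonic() - self._start, "cmd": command}
        self.log_lines.append(json.dumps(entry))
        self.app.apply(command)


def play_back(log_lines: List[str], app: Counter, honor_timing: bool = False) -> Counter:
    """Re-applies recorded commands in the same order, optionally at the same times."""
    start = time.monotonic()
    for line in log_lines:
        entry = json.loads(line)
        if honor_timing:
            # Wait until the command's recorded offset before applying it.
            delay = entry["t"] - (time.monotonic() - start)
            if delay > 0:
                time.sleep(delay)
        app.apply(entry["cmd"])
    return app


if __name__ == "__main__":
    recorder = Recorder(Counter())
    for _ in range(3):
        # The random amount is chosen once, at record time, and stored in the log.
        recorder.do({"op": "add", "amount": random.randint(1, 100)})
    recorder.do({"op": "reset"})
    recorder.do({"op": "add", "amount": 7})

    replayed = play_back(recorder.log_lines, Counter())
    assert replayed.history == recorder.app.history
    print("playback reproduced", len(replayed.history), "states")
```

A real application would also have to capture other sources of nondeterminism, such as thread scheduling or network messages, which is one reason the capability has to be designed in rather than added later.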

Visual Validation Testing

Visual applications (those that produce an image or movie, or that allow the user to interact with their data in real time) are the most difficult to test automatically. There are many cases where a person must look at the visual output or interact with the program in some way (such as in a computer game) to ensure that the application actually produces the outputs it should. For example, in a 2D graphics application, the only feasible way to ensure that a circle created by the program, especially if it is anti-aliased[2], is really a circle is to look at it.

It is important to keep the amount of visual verification required to a minimum. This is only possible by automating the code-aware tests as much as possible, so that people can focus on verification rather than, say, testing for regressions. This can be restated as: Use time spent testing by people wisely; don't get people to do tests that a computer could do. Some aspects of visual validation can also be automated. If the visual output can be captured as images, a database of correct images (as determined by a person) can be used to check that images created with the new version of the software are either the same as the correct images or different only where they are supposed to be.
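
The image-database idea can be sketched as follows. This is a minimal illustration under several assumptions: images are represented as rows of 8-bit grayscale values rather than real image files, `render_gradient` is a hypothetical renderer under test, and `GoldenImageStore` is a hypothetical in-memory stand-in for the database of person-approved images. The per-pixel `tolerance` and `max_bad_pixels` parameters are illustrative choices for tolerating small, harmless differences.

```python
from typing import Dict, List

# Assumed image format: rows of 8-bit grayscale pixel values (0-255).
Image = List[List[int]]


def render_gradient(width: int, height: int) -> Image:
    """Hypothetical renderer under test: a left-to-right gray ramp."""
    return [[(x * 255) // max(width - 1, 1) for x in range(width)] for _ in range(height)]


def images_match(actual: Image, golden: Image, tolerance: int = 2, max_bad_pixels: int = 0) -> bool:
    """True if both images have the same size and at most max_bad_pixels pixels
    differ from the golden image by more than tolerance."""
    if len(actual) != len(golden) or any(len(a) != len(g) for a, g in zip(actual, golden)):
        return False
    bad = sum(
        1
        for row_a, row_g in zip(actual, golden)
        for pa, pg in zip(row_a, row_g)
        if abs(pa - pg) > tolerance
    )
    return bad <= max_bad_pixels


class GoldenImageStore:
    """Hypothetical in-memory stand-in for a database of person-approved images."""

    def __init__(self) -> None:
        self._images: Dict[str, Image] = {}

    def approve(self, name: str, image: Image) -> None:
        # Called only after a person has looked at the image and judged it correct,
        # including when the output was deliberately changed.
        self._images[name] = [row[:] for row in image]

    def check(self, name: str, image: Image, tolerance: int = 2, max_bad_pixels: int = 0) -> bool:
        if name not in self._images:
            raise KeyError(f"no approved image for {name!r}; a person must review it first")
        return images_match(image, self._images[name], tolerance, max_bad_pixels)
```

When a check fails because the output was meant to change, a person reviews the new image and calls `approve()` again; the computer handles every unchanged image, so people only look at the differences.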

Performance Testing

Every piece of software should have some performance goals. Performance tests ensure that your application achieves or exceeds the desired performance characteristics. Performance can be measured in any or all of unit tests (e.g., to test the performance of frequently called methods or functions), integration tests, or system tests. The most common way to do performance testing is to use system timers in your tests and then either log the results so they can be compared with or graphed against previous test runs, or create a test failure if the performance is not within some expected range. Alternatively, there are quite a few commercial and open source performance testing applications available. My current favorite is Shark, a tool available from Apple for development on OS X.
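
Both timer-based approaches can be combined in one small helper. The sketch below is an illustration, not a prescribed tool: `check_performance`, the `budget_s` threshold, and the in-memory `history` list are assumptions, and a real project would persist the history (for example, to a file) and derive budgets from its own performance goals. Python's standard `timeit` module offers similar repeat-and-time machinery.

```python
import time
from typing import Callable, Dict, List


def measure(fn: Callable[[], object], repeats: int = 5) -> float:
    """Best (minimum) wall-clock time of fn over several runs, in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    # The minimum is the run least disturbed by other activity on the machine.
    return min(timings)


def check_performance(
    name: str,
    fn: Callable[[], object],
    budget_s: float,
    history: List[Dict[str, object]],
    repeats: int = 5,
) -> float:
    """Times fn, logs the result, and fails if it is over budget."""
    elapsed = measure(fn, repeats)
    # Log every measurement so it can be compared or graphed against earlier runs.
    history.append({"test": name, "seconds": elapsed})
    if elapsed > budget_s:
        raise AssertionError(f"{name}: {elapsed:.4f}s exceeds budget of {budget_s:.4f}s")
    return elapsed


if __name__ == "__main__":
    log: List[Dict[str, object]] = []
    check_performance("sort 10k ints", lambda: sorted(range(10_000, 0, -1)), 0.5, log)
    print(log)
```

Taking the minimum of several runs is one common way to reduce noise; logging the median or every individual run instead would make trends easier to spot when graphing.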

Resource-Usage Testing

Resource-usage testing ensures that your application uses acceptable amounts of resources such as memory, CPU, time, disk bandwidth, and network bandwidth. Resource-usage tests can be valuable for catching problems early, without a person having to monitor them explicitly. Each project will have its own set of resources that are more important to monitor than others. Some of the factors are:

Regression Testing

Regression tests are tests written to prevent existing features or bug fixes from being broken when new code changes are made. They aren't a different kind of test, because these tests should be built into unit, integration, and system tests. However, they are included here as a reminder: Be sure to add tests when you fix a defect. There is nothing more frustrating than fixing a defect more than once!

Usability Testing

Usability testing is different from all the other types of testing already discussed because it cannot be automated. However, for any application with a user interface, usability testing is a critical part of sustainable development and should not be considered optional unless the interface is brain-dead simple, and even then I'd still recommend it. Usability testing involves observing real users in realistic conditions using the product in a controlled environment. In a usability test [Rubin 1994] [Dumas and Redish 1999], care must be taken not to guide or bias users. Users are asked to perform a predefined task and to think out loud while they use the system, with more than one person observing and taking notes. Having several people observe guards against any one observer's bias and makes it harder for issues that arose to be ignored. When done well, usability testing will quickly uncover interface and interaction flaws that normally won't be uncovered until after the product ships. Hence, doing usability testing as early as possible is very much part of being ruthless.

User Verification Testing

User verification testing is typically the last type of testing performed before a product is installed and actually used by customers. It isn't necessary for all products and will depend on the customer. For example, government agencies often demand verification testing, as do customers who use products where a failure could result in loss of life, injury, or damage. This testing involves real users performing real work with the software in conditions that are as close as possible to how the end users will use the product, often in parallel with the live system. For applications such as mission-critical software, this testing can take weeks to months because of the high cost of failure after the software is installed.

Resources Required for Ruthless Testing

Ruthless testing is only effective if tests are easy to run and results can be obtained and analyzed quickly. Depending on the size and complexity of the product, ruthless testing may require additional computing resources and effort. Extra investment is required when the team cannot quickly and easily run and analyze tests on its own. You may also need to invest in some fast centralized or shared computers, although an investment in distributed testing, where spare CPU cycles (especially at night) are used to run tests, might be advisable. Where many tests are being run, some custom programs might help to simplify the presentation and analysis of results.
