Available Solutions

You can do many things to improve this kind of system:

-

Denormalization This is probably the most effective way to improve the system. SQL Server will have to query a smaller number of tables. You can create a redundant set of tables (one or more, depending on the subject) and periodically (for example, every hour or every day) transfer data from the normalized tables to it. An additional benefit is that "readers" and "writers" will not block each other.

-

Limit the result set Most search engines on the Web limit the number of results that the user can return (for example, 200 or 500 records).

-

Split results rather than limit result sets Users should be able to access records in batches (of, for example, 25 or 50 records). Chances are that the user will browse through only the first couple of pages. There is no need to serve the user with 5,000 records that he or she will not review.

-

Index An additional benefit of splitting tables to provide one table for data modification and another for querying is that the indexes you would need to create on the denormalized tables will not slow down data modification transactions.

-

Stored procedures vs. ad hoc queries Because of the many performance and management reasons discussed in the "Reuse of Stored Procedure Execution Plans" in Appendix B, you should use precompiled objects such as stored procedures as much as you can. Often, you can significantly simplify a query by dynamically assembling it. When you reduce the number of tables and parameters, the query optimizer creates a better execution plan.

-

Search types Good results can sometimes be achieved by using a little psychology. For example, users do not always need to do a "Contains" search on a string (like ‘%string%’). Most of the time, "Begins with" (like ‘string%’) and "Match" (=‘string’) searches are sufficient. For the latter two, SQL Server does not ignore the index. If you add a list box with "Search types" to the text box and make "Begins With" the default option, users will use it probably 90 percent of the time. SQL Server does a table scan only when users really need to scan the whole field.

I will review in detail some of these options in an Asset5 database scenario.

Result Splitting

There are three options for achieving result splitting:

-

On a web server

-

Using a temporary table on the database server

-

Using a static denormalized table on the database server

Page Splitting on a Web Server

Some web sites split the result set on a web server. The complete result set is transferred to the web server and components on it create HTML pages with links between them. There are several good reasons to use this technique. A farm of web servers can efficiently balance the load. There are solutions on the market that can be purchased and implemented rapidly. Unfortunately, network traffic between servers is not reduced and SQL Server has to grab and transfer much more than it should. I will not go into the details of how to implement such a solution (you'll need a different book for that).

Page Splitting Using a Temporary Table on the Database Server

The idea of this solution is to collect the complete recordset in a temporary table and then to send to the caller only a subset of records (for example, 25 records) to be displayed on the current page.

In the following example, the query creates a temporary table that has an additional identity field. The second query returns records with identity values between the specified numbers. Initially, these numbers might be 1 and 25. The next time it is called, the client increases the values of the first and the last records to be displayed.

CREATE PROCEDURE ap_InventoryByMakeModel_Quick_TempTbl -- Return a batch (of specified size) of records which satisfy the criteria -- Demonstration of use of temporary table to perform record set splitting. @Make varchar(50) = '%', @Model varchar(50) = '%', @FirstRec int = 1, @LastRec int = 25, @RowCount int = null output AS /* test: declare @re int exec ap_InventoryByMakeModel_Quick_TempTbl @RowCount = @rc output select @re exec ap_InventoryByMakeModel_Quick_TempTbl @FirstRec = 26, @LastRec = 50, @RowCount = @rc output */ SET NOCOUNT ON Create table #Inv (ID int identity, Inventoryid int. Make varchar(50), Model varchar(50), Location varchar(50), FirstName varchar(30), LastName varchar(30), AcquisitionType varchar(12), Address varchar(50), City varchar(50), ProvinceId char(3), Country varchar(50), EqType varchar(50), Phone varchar(20). Fax varchar (20) , Email varchar(128), UserName varchar(50)) insert into #Inv(Inventoryid, Make, Model, Location, FirstName, LastName, AcquisitionType, Address, City, ProvinceId, Country, EqType, Phone, Fax, Email, UserName) SELECT Inventory.Inventoryid, Equipment.Make, Equipment.Model, Location.Location, Contact.FirstName, Contact.LastName, AcquisitionType.AcquisitionType, Location.Address, Location.City, Location.ProvinceId, Location.Country, EqType.EqType, Contact.Phone, Contact.Fax, Contact.Email, Contact.UserName FROM dbo.EqType EqType RIGHT OUTER JOIN dbo.Equipment Equipment ON EqType.EqTypeId = Equipment.EqTypeId RIGHT OUTER JOIN dbo.Inventory Inventory ON Equipment.EqId = Inventory.EqId INNER JOIN dbo.Status Status ON Inventory.StatusId = Status.StatusId LEFT OUTER JOIN dbo.AcquisitionType AcquisitionType ON Inventory.AcquisitionTypeId = AcquisitionType.AcquisitionTypeId LEFT OUTER JOIN dbo.Location Location ON Inventory.LocationId = Location.LocationId LEFT OUTER JOIN dbo.Contact Contact ON Inventory.OwnerId = Contact.ContactId where Make Like @Make and Model Like @Model order by Location, LastName, FirstName select @RowCount = @@rowcount SELECT * FROM #Inv WHERE ID >= @FirstRec AND ID <= @LastRec order by ID return

The stored procedure should be used the first time in the following manner to get the first batch of records and the number of records:

declare @rc int exec ap_InventoryByMakeModel_Quick_TempTbl @RowCount = @rc output select @rc

The next time, the user must specify the first and last record that he wants to see:

declare @rc int exec ap_InventoryByMakeModel_Quick_TempTbl @FirstRec = 26, @LastRec = 50, @RowCount = @rc output

There are, however, several problems with this solution. You are still executing the query against a large set of normalized tables. You are also creating a large temporary table every time you execute the stored procedure. Because you are working in a stateless environment, you cannot keep the temporary table on the server. Therefore, SQL Server works much harder than it should. The advantage to this technique is that network traffic is significantly reduced.

Page Splitting Using Denormalized Tables on the Database Server

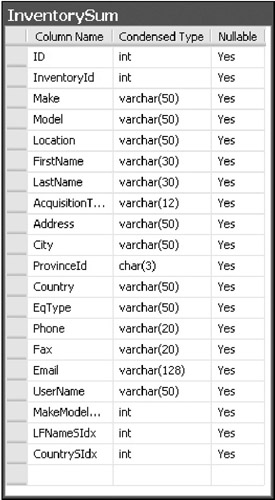

To reduce the number of tables that need to be joined every time, you can create a new table that contains all the fields that you typically need in the query. The InventorySum table has such a role in the Asset5 database.

Most of the fields are just copied from normalized tables. ID is an identity field, which you will use to request a subset of records and to sort records on. Several records have a SIdx suffix. I call them surrogate indexes. They store the position of the record in a set when it is sorted in a particular order. For example, LFNameSIdx is the surrogate index that is used when a recordset is returned sorted by first and last name (see Figure 20-1).

Figure 20-1: Surrogate index

I have created a stored procedure to be executed periodically from a job to populate the denormalized table:

Alter Procedure ap_InvSum_Generate -- Generate denormalized table that will speed-up the querying. @debug int = 0 As SET NOCOUNT ON SET XACT_ABORT ON declare @intTransactionCountOnEntry int create table #Inv(ID int identity(1,1), Inventoryid int, Make varchar(50), Model varchar(50), Location varchar(50), Status varchar(15), FirstName varchar(30), LastName varchar(30), AcquisitionType varchar(12), Address varchar(50), City varchar(50), ProvinceId char(3), Country varchar(50), EqType varchar(50), Phone varchar(20), Fax varchar (20) , Email varchar(128), UserName varchar(50), MakeModelSIdx int, LFNameSIdx int, CountrySIdx int) -- get result set insert into #Inv(Inventoryid, Make, Model, Location, FirstName, LastName, AcquisitionType, Address, City, ProvinceId, Country, EqType,Phone, Fax, Email, UserName ) SELECT Inventory.Inventoryid, Equipment.Make, Equipment.Model, Location.Location, Contact.FirstName, Contact.LastName, AcquisitionType.AcquisitionType, Location.Address, Location.City, Location.ProvinceId, Location.Country, EqType.EqType, Contact.Phone, Contact.Fax, Contact.Email, Contact.UserName FROM dbo.EqType EqType RIGHT OUTER JOIN dbo.Equipment Equipment ON EqType.EqTypeId = Equipment.EqTypeId RIGHT OUTER JOIN dbo.Inventory Inventory ON Equipment.EqId = Inventory.EqId LEFT OUTER JOIN dbo.AcquisitionType AcquisitionType ON Inventory.AcquisitionTypeId = AcquisitionType.AcquisitionTypeId LEFT OUTER JOIN dbo.Location Location ON Inventory.LocationId = Location.LocationId LEFT OUTER JOIN dbo.Contact Contact ON Inventory.OwnerId = Contact.ContactId order by Location, LastName, FirstName - - now, let's do record sorting ---- Make, Model ------------------- create table #tmp (SID int identity(1,1), ID int) insert into #tmp(ID) select ID from #inv order by Make, Model update #inv set MakeModelSIdx = #tmp.SId from #inv inner join #tmp on #inv.ID = #tmp.id drop table #tmp ----------------------------------------- ---- CountrySIdx: Country, Province, City, Location -------------- create table #tmp2 (SID int identity(1,1), ID int) insert into #tmp2(ID) select ID from #inv order by Country, ProvinceId, City, Location update #inv set CountrySIdx = #tmp2.SId from #inv inner join #tmp2 on #inv.ID = #tmp2.id drop table #tmp2 ----------------------------------- ---- LFNameSIdx: LName, FName ------------ create table #tmp3 (SID int identity(1,1), ID int) insert into #tmp3(ID) select ID from #inv order by LastName, FirstName update #inv set LFNameSIdx = #tmp3.SId from #inv inner join #tmp3 on #inv.ID = #tmp3.id drop table #tmp3 --------------------------- - - use transaction to hide operation from users Select @intTransactionCountOnEntry = (SOTranCount BEGIN TRANSACTION -- recreate table if exists (select * from dbo.sysobjects where id = object_id(N'[InventorySum]') and OBJECTPROPERTYfid, N'IsUserTable') = 1) drop table dbo.[InventorySum] create table dbo.InventorySum(ID int, InventoryID int. Make varchar(50), Model varchar(50), Location varchar(50), FirstName varchar(30), LastName varchar(30), AcquisitionType varchar(12), Address varchar(50), City varchar(50), ProvinceId char(3), Country varchar(50), EqType varchar(50), Phone varchar(20), Fax varchar (20) , Email varchar(128), UserName varchar(50), MakeModelSIdx int, LFNameSIdx int, CountrySIdx int) - - populate table insert into dbo.InventorySum (ID, InventoryID, Make, Model, Location, FirstName, LastName, AcquisitionType, Address, City, ProvinceId, Country, EqType, Phone, Fax, Email, UserName, MakeModelSIdx, LFNameSIdx, CountrySIdx) select ID, InventoryID, Make, Model, Location, FirstName, LastName, AcquisitionType, Address, City, ProvinceId, Country, EqType, Phone, Fax, Email, UserName, MakeModelSIdx, LFNameSIdx, CountrySIdx from #inv - - create indexes CREATE UNIQUE CLUSTERED INDEX [idx_InvSum_Id] ON [dbo] . [InventorySum] ( [ID] ) CREATE INDEX [idx_InvSum_LFName] ON [dbo].[InventorySum] (LastName, FirstName) CREATE INDEX [idx_InvSum_Location] ON [dbo].[InventorySum] (Location) CREATE INDEX [idx_InvSum_ModelMakeEqType] ON [dbo].[InventorySum] (Model, Make, EqType) -- complete transaction - give access to users If @@TranCount > @intTransactionCountOnEntry COMMIT TRANSACTION return

At first sight, the stored procedure might look a bit unusual to you. I first collect all data in a temporary table and then, within a transaction, drop the denormalized table, re-create it, and copy the collected data from the temporary table into it. I wrote the stored procedure in this way for performance reasons. It is critical for users to be able to query the table without interruption. The whole process typically takes a couple of minutes, and queries will time-out if not completed after 30 seconds (or the limit that is specified on the server, in the ADO connection, or MTS). Therefore, it is critical to shorten the interruption. The process of copying collected data is much shorter than the process of collecting it.

On one project where I applied this solution, the complete process took about three minutes. The transaction that re-creates the table and copies data into it took about 20 seconds.

| Note | It is certainly possible to perform the loading transaction in other ways. Creating an additional static table and renaming it comes to mind. |

Quick Queries

This section walks you through the process of gradually adding the following features to a stored procedure, implementing a quick query:

-

Result splitting

-

Sorting

-

Search type

-

Counting

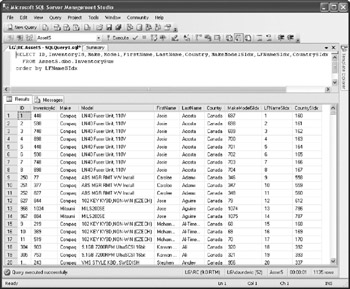

I will build a stored procedure that returns a list of equipment with a specified make and model:

alter proc ap_InventoryByMakeModel_Quickl @Make varchar(50) = null, -- criteria @Model varchar(50) = null -- criteria /* test: exec ap_InventoryByMakeModel_Quickl 'Compaq', 'D%' */ as select Inventoryid , Make , Model, Location , FirstName , LastName , AcquisitionType, Address , City , ProvinceId , Country , EqType , Phone , Fax , Email , UserName from dbo.InventorySum where Make LIKE @Make and Model LIKE @Model

The preceding is a simple stored procedure that uses the Like operator, and therefore enables the caller to add a wild card (%) to the string.

Now I'll add sorting to the stored procedure. I can sort only by sort orders for which I have defined surrogate indexes:

create proc ap_InventoryByMakeModel_Quick2 @Make varchar(50) = null, -- criteria @Model varchar(50) = null, -- criteria @SortOrderId smallint = 0 /* test: exec ap_InventoryByMakeModel_Quick2 'Compaq', 'D%', 1 */ as select Id = Case @SortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx End, Inventoryid , Make , Model, Location , FirstName , LastName , AcquisitionType, Address , City , ProvinceId , Country , EqType , Phone , Fax , Email , UserName from dbo.InventorySum where Make like @Make and Model like @Model order by case @SortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx end return

I have also added an ID column to the recordset. I use a Case statement to set it with one of the surrogate indexes. The second instance of the Case statement is a little bit unusual. Note that I am using it inside an Order By clause.

Now I will add code that will return the result set in batches of 25 records:

create proc ap_InventoryByMakeModel_Quick3 @Make varchar(50) = null, -- criteria @Model varchar(50) = null, -- criteria @PreviousID int =0, -- last record from the previous batch @SortOrderId smallint = 0 /* test: exec ap_InventoryByMakeModel_Quick3 'Compaq', 'D%', 444, 1 */ as select top 25 Id = Case @SortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx End, Inventoryid , Make , Model, Location , FirstName , LastName , AcquisitionType, Address , City , ProvinceId , Country , EqType , Phone , Fax , Email , UserName from dbo.InventorySum where Case @SortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx End > @PreviousID and Make like @Make and Model like @Model order by case @SortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx end return

I have added Top 25 to the Select statement. I added a parameter that will be used to pass the identifier of the last record seen in the previous batch. I also added a Case function in the Where clause that allows me to return records that were not previously seen by the user.

Next I will add code to support Begins With, Contains, and Match search types. I will simply add different combinations of wild cards to the search parameters:

create proc ap_InventoryByMakeModel_Quick @Make varchar(50) = null, -- criteria @Model varchar(50) = null, -- criteria @PreviousID int =0, -- last record from the previous batch @SortOrderId smallint = 0, @SearchTypeid tinyint =0 -- 0: Begins With, 1: Match, 2: Contains /* test: exec ap_InventoryByMakeModel_Quick 'Compaq', 'D', 50, 2, 2 */ as if @SearchTypeid = 0 begin set @Make = @Make + '%' set @Model = @Model + '%' end if @SearchTypeid = 2 begin set @Make = '%' + @Make + '%' set @Model = '%' + @Model + '%' end select top 25 Id = Case OSortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx End, Inventoryid , Make , Model, Location , FirstName , LastName , AcquisitionType, Address , City , ProvinceId , Country , EqType , Phone , Fax , Email , UserName from dbo.InventorySum where Case @SortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx End > @PreviousID and Make like @Make and Model like @Model order by case @SortOrderId when 1 then MakeModelSIdx when 2 then CountrySIdx when 3 then LFNameSIdx end return

Since it is important to display the total number of records that satisfy specified criteria, I will create a stored procedure to return the count to the user. I have two options: I could add the code to the stored procedure that does the search, but the better option is to make code more readable and create a separate procedure:

create proc ap_InventoryByMakeModel_Count @Make varchar(50) = null, -- criteria @Model varchar(50) = null, -- criteria @SearchTypeid tinyint =0, -- 0: Begins With, 1: Match, 2: Contains @Count int output /* test: declare @count int exec ap_InventoryByMakeModel_Count 'Compaq', 'D', 2, @count output select @count count */ as if @SearchTypeId = 0 begin set @Make = @Make + '%' set @Model = @Model + '%' end if @SearchTypeid = 2 begin set @Make = '%' + @Make + '%' set @Model = '%' + @Model + '%' end select @Count = count(*) from dbo.InventorySum where Make like @Make and Model like @Model return

Advanced Queries

An advanced query allows users to specify criteria using any combination of input parameters. Therefore, all parameters have default values specified so that they will never become part of the criteria (I can agree with web developers that null means that the user didn't specify a value for the parameter on the web page):

alter procedure ap_InventorySearchAdvFull_ListPage -- display a batch of 25 assets that specify the criteria -- Example of use of dynamically assembled query -- and denormalized table with surrogate index fields --to return result in batches of 25 records. @Make varchar(50) = null, @Model varchar(50) = null, @Location varchar(50) = null, @FirstName varchar(30) = null, @LastName varchar(30) = null, @AcquisitionType varchar(20) = null, @ProvinceId char (3) = null, @Country varchar(50) = null, @EqType varchar(30) = null, @City varchar(50) = null, @UserName varchar(50) = null, @email varchar(50) = null, @SortOrderId smallint =0, -- 1: Make and model; -- 2: Country, Prov, City, Loc; -- 4: LName; FName @PreviousID int =0, -- last record from the previous batch @BatchSize int = 25, @debug int = 0 /* test: exec ap_InventorySearchAdvFull_ListPage @Make = 'Compaq', @Model= null, @Location = null, @FirstName = 'Michael', @LastName = null, @AcquisitionType = null, @ProvinceId = null, @Country = null, @EqType = null, @City = null, @UserName = null, @email = null, @SortOrderId =2, --2: Make and model @PreviousID =25, -- last record from the previous batch @BatchSize = 25, @debug = 0 */ as SET CONCAT_NULL_YIELDS_NULL OFF SET NOCOUNT ON declare @chvSelect varchar(max), @chvFrom varchar(max), @chvWhere varchar(max), @chvOrderby varchar(max), @chvSQL varchar(max) -- order records set (SchvSelect = 'SELECT top ' + Convert (varchar, @BatchSize) + ' Inventoryid , Make , Model, Location , FirstName , LastName , AcquisitionType, Address , City , ProvinceId , Country , EqType , Phone , Fax , Email , UserName, ' + Case @SortOrderId when 1 then ' MakeModelSIdx ' when 2 then ' CountrySIdx ' when 3 then ' LFNameSIdx ' End + ' as ID ' set @chvFrom = ' FROM dbo. InventorySum ' set @chvWhere = ' where ' + Case @SortOrderId when 1 then ' MakeModelSIdx ' when 2 then ' CountrySIdx ' when 3 then ' LFNameSIdx ' End + '> ' + Convert (varchar, @PreviousID) if @Make is not null set @chvWhere = @chvWhere + ' AND Make = ' ' ' + @Make + ' ' ' ' if @Model is not null set @chvWhere = @chvWhere + ' AND Model = ' ' ' + @Model + ' ' ' ' if @Location is not null set @chvWhere = @chvWhere + ' AND Location = ' ' ' + @Location + ' ' ' ' if @FirstName is not null set @chvWhere = @chvWhere + ' AND FirstName = ' ' ' + @FirstName + ' ' ' if @LastName is not null set @chvWhere = @chvWhere + ' AND lastName = ' ' ' + @lastName + ' ' ' ' if @AcquisitionType is not null set @chvWhere = @chvWhere + ' AND AcquisitionType = ' ' ' + @AcquisitionType + ' ' ' ' if @ProvinceId is not null set @chvWhere = @chvWhere + ' AND Province Id = ' ' ' + @ProvinceId + ' if @Country is not null set @chvWhere = @chvWhere + ' AND Country = ' ' ' + @Country + ' ' ' ' if @EqType is not null set @chvWhere = @chvWhere + ' AND EqType = ' ' ' + @EqType + ' ' ' ' if @City is not null set @chvWhere = @chvWhere + ' AND City = ' ' ' + @City + ' ' ' ' if @UserName is not null set @chvWhere = @chvWhere + ' AND UserName = ' ' ' + @UserName + ' ' ' ' if @email is not null set @chvWhere = @chvWhere + ' AND email = ' ' ' + @email + ' ' ' ' set @chvOrderBy = ' order by ' + Case @SortOrderId when 1 then ' MakeModelSIdx' when 2 then ' CountrySIdx ' when 3 then ' LFNameSIdx ' End set @chvSQL = @chvSelect + @chvFr@m + @chvWhere + @chvOrderby if @debug = 0 exec (@chvSQL) else select @chvSQL

The stored procedure dynamically assembles the query using just the Where clause for input parameters that were specified. This technique allows SQL Server to optimize the query and use the appropriate indexes. Similarly, Order By and Select clauses are assembled based on the specified sort order. The Top clause is added dynamically to the Select clause based on the number of records that the user wants to see in the batch.

| Note | Something that I didn't demonstrate here, hut that could also he very useful for joining tables on demand, is the following: if some tables are joined just to support some additional search criteria, they might he added to the rest of the From clause only when their values are specified. The query will perform better if the number of joined tables is smaller. |

Fancy New Solution: Row Versioning

All the solutions we have explored so far are applicable to all versions of SQL Server. Row versioning is a new feature in SQL Server 2005 that you can use to address the reader and writer(b) locking problem.

When the ALLOW_SNAPSHOT_ISOLATION or READ_COMMITTED_ SNAPSHOT database option is set to ON, the SQL Server 2005 Database Engine starts tracking versions of all rows that are modified. When an application or a Transact-SQL batch modifies a row in that database, the Database Engine stores the original version of the row in the tempdb database. If an application or a query using compatible isolation levels (Snapshot or Read Committed Snapshot) needs to read the record before the transaction is completed, the Database Engine provides the version from tempdb. It does not need to wait for the transaction to be completed, nor to read the changed record before it is committed.

If more than one application or batch is modifying the record concurrently, multiple versions of the record are stored in tempdb in the form of a linked list. Naturally, as transactions are committed, the Database Engine records the changes in the database and deletes the old row versions in tempdb.

This row versioning feature isolates readers from the effects of record modifications. There is no need to handle this issue using shared locks. Therefore, when the database is set in this mode, readers do not acquire shared locks, which leads to a significant reduction in the number of locks. Locks are needed only for transactions that are modifying records.

In the following sections, I will demonstrate how to set a database to perform row versioning, and what needs to be done on the client to exploit this feature.

How to Set Databases

Administrators can set the database option to allow snapshot isolation using the Alter Database statement:

ALTER DATABASE Asset5 SET ALLOW_SNAPSHOT_ISOLATION ON;

From this point, the database will track row versions of all (new) transactions in tempdb.

| Note | This database option can be set wbile users are connected to tbe database. Administrators do not need to disconnect tbem (to kill connections). However, if users are running transactions, tbose transactions must be completed before row versioning can be set. Tbe database is in tbe PENDING_ON state until tbe transactions are finisbed. If someone tries to run snapsbot transactions before tbe regular transactions are finisbed, an error will be raised. |

Alternatively, an administrator can set the READ_COMMITTED_SNAPSHOT database option to ON:

ALTER DATABASE Asset5 SET READ_COMMITTED_SNAPSHOT ON;

From this point, the Database Engine will use row versioning instead of locking for transactions, using the READ_COMMITTED_SNAPSHOT isolation level.

| Note | READ_COMMITTED_SNAPSHOT can be set to ON only when the database is set to single-user mode. However, the advantage to this option is that the database is switched to this mode immediately. There is no possibility of a pending state as in the case of snapshot isolation. |

How to Set Connections/Clients

When the ALLOW_SNAPSHOT_ISOLATION database option is set to ON, queries must set the Transaction Isolation Level to Snapshot in order to access original rows and avoid being blocked by transactions:

SET TRANSACTION ISOLATION LEVEL SNAPSHOT; GO exec ap_InventoryByMakeModel_Quick 'Nee', 'V, 50, 2, 2

Naturally, if a query is executed with a noncompatible isolation level, results will be as usual. For example, if you use the default (Read Committed) or higher isolation level, the query waits for the transaction to complete; if you use Read Uncommitted, the query immediately returns the changed record (even if it is not committed).

After the database is set to support snapshot isolation level, transactions do not have to be run with isolation level set to Snapshot. However, you may choose to do so if your transaction is also reading records:

SET TRANSACTION ISOLATION LEVEL SNAPSHOT; GO declare @EqId int select @EqId = EqId from dbo.Inventory where InventoryID = 117 begin tran update dbo.Eq Set Model = 'V91' where EqId = @EqId update dbo.Eq Set Model = 'V90' where EqId = (select EqId from Inventory where InventoryID = 118) commit tran

Managed applications that are using the System.Data.SQLClient namespace should set the isolation level using the SQLConnection.BeginTransaction method:

SqlConnection conn = new (string myConnString); SqlTransaction trans = conn.BeginTransaction(IsolationLevel.Snapshot); . . .

Older systems such as ADO applications can set the Autocommit Isolation Levels property. OLEDB applications should set the DBPROPSET_SESSION property to DBPROP_SESS_AUTOCOMMITISOLEVELS, and ODBC applications should set the SQL_ATTR_TXN_ISOLATION attribute using the SQLSetConnectAttr.

Clients could be set to use Read Committed Snapshot isolation level when the READ_COMMITTED_SNAPSHOT database option is set to ON:

SET TRANSACTION ISOLATION LEVEL READ_COMMITTED_SNAPSHOT; GO exec ap_InventoryByMakeModel_Quick 'Nee', 'V, 50, 2, 2

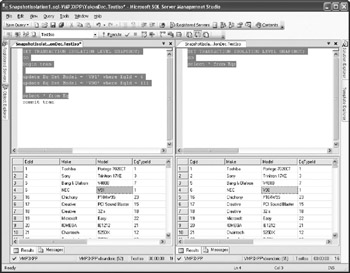

Using Row Versioning

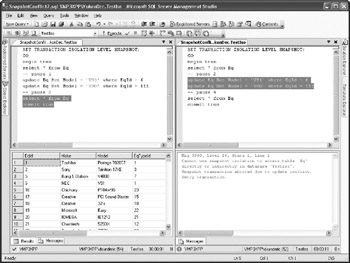

Figure 20-2 demonstrates how data can be available for reading during a long-running transaction. The first connection modifies a record, but it does not finish the transaction. I didn't execute Commit Tran to simulate execution of the long query. The second query can access a copy of the record before the change. By using snapshot isolation, the second connection is able to overcome this limitation in the default Database Engine's behavior (that is, it does not have to wait for the transaction to finish and the lock to be released).

Figure 20-2: Connections using snapshot isolation level before transaction completion

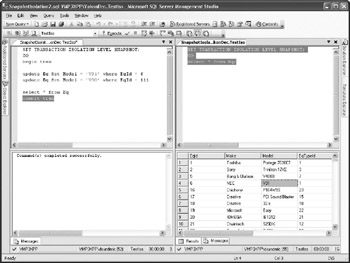

Naturally, after the transaction is committed, the second query can see the modified record (see Figure 20-3).

Figure 20-3: Connections using snapshot isolation level after transaction completion

| Note | The tempdb database has to have enough space to store all row versions of all changed records. However, if tempdb becomes full, transactions will continue to work without problem, but queries that require missing row versions will fail. |

The example in Figure 20-4 demonstrates the concurrency conflict that arises when two transactions read and update the same record. I opened their code in two windows and I executed them part by part (you can use "–– pause" comments to identify the parts). I initially executed the batches to pause 1 and pause 2, respectively. Since they both use snapshot isolation, they both initially read the original values of rows that will be updated later. Then I executed the pause 1 to pause 3 section of the first transaction. It updated the rows, but the transaction was not completed. Then I executed the pause 2 to pause 4 section in the second batch. Since the first transaction was not committed, the second batch had to wait for the exclusive lock to be cleared. As soon as I executed the remainder of the first transaction, the Database Engine attempted to update the records. It detected that the row had been changed in the meantime and returned error 3960.

Figure 20-4: Conflict of concurrent snapshot transactions

| Note | There is one more thing that you should worry about. If transactions are using global temporary tables, you must set tempdb to support Snapshot or Read Committed Snapshot isolation levels (since global temporary tables are stored in the tempdb database). You do not have to do so if your transactions are using (regular) temporary tables or table variables, since they are not shared. |

Its Disadvantages…

Snapshot and Read Committed Snapshot isolation levels are not the Holy Grail of database programming. They have significant advantages and disadvantages. First, the disadvantages:

-

Storing row versions in tempdb leads to increased space requirements and an increase in tempdb IDs.

-

Setting a database to support the Snapshot or Read Committed Snapshot isolation level will increase the required CPU cycles and use more memory.

-

Transactions need to maintain row versions even if there are no readers that require original rows using a row versioning isolation level. Therefore, updates are generally slower. How efficient this method is depends on the ratio of update to read operations.

-

Readers are also slowed down because they have to go across the linked list of row versions to get the data.

-

Some DDL operations are prohibited in snapshot transactions (Alter Index, Alter Partition Function, Alter Table, Create Index, Create Xml Index, Dbcc DbReindex, Drop Index).

-

New concurrency issues may occur when a database is set to support snapshot isolation. If two snapshot isolation transactions are trying to update the same record, the later transaction will fail with a 3960 error (see Figure 20-4). The engine will prevent the second transaction from updating the record that was already updated without reading it first. However, this problem does not occur in the case of the Read Committed Snapshot isolation level.

-

Distributed transactions and queries are not supported.

-

I think the biggest limitation of snapshot isolation is the fact that you have to change both database and application (queries) to support it. It would be nicer if all you needed to do was to adjust your database.

… and Its Advantages

The most important advantages of row versioning are

-

Writers do not block readers, since readers can get the last committed version of the row from tempdb.

Readers do not block writers, since they do not issue shared locks.

-

Readers receive transactionally consistent content—row values that were valid and consistent before the transaction started.

-

The number of deadlocks is reduced.

| Tip | You can also combine row versioning and denormalized tables. Users will not be blocked during table re-creation. |

EAN: 2147483647

Pages: 165

- Chapter I e-Search: A Conceptual Framework of Online Consumer Behavior

- Chapter IV How Consumers Think About Interactive Aspects of Web Advertising

- Chapter VI Web Site Quality and Usability in E-Commerce

- Chapter XI User Satisfaction with Web Portals: An Empirical Study

- Chapter XIII Shopping Agent Web Sites: A Comparative Shopping Environment