Web-Tier Security Patterns

Authentication Enforcer

Problem

You need to verify that each request comes from an authenticated entity. Because different classes handle different requests, authentication code is replicated in many places and the authentication mechanism cannot easily be changed.

The choice of user authentication mechanism often changes with business requirements, application-specific characteristics, and the underlying security infrastructure. In a coexisting environment, some applications may use HTTP basic authentication or form-based authentication, while others may be required to use client certificate-based authentication or custom authentication via JAAS. It is therefore necessary that the authentication mechanisms be properly abstracted and encapsulated from the components that use them.

During the authentication process, applications transfer user credentials to verify the identity requesting access to a particular resource. The user credentials and associated data must be kept private and must not be made available to other users or coexisting applications. For instance, when a user sends a credit card number and PIN to authenticate to a Web application in order to access his or her banking information, the user wants that information kept strictly confidential and inaccessible to anyone else during the process.

Forces

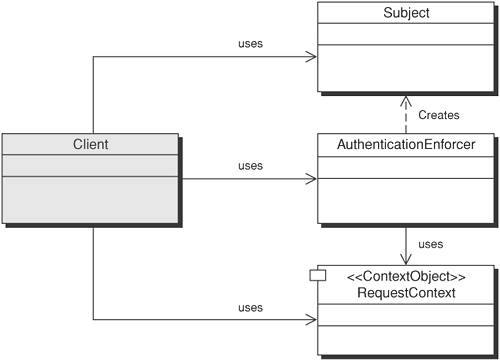

Solution

Create a centralized authentication enforcement point that performs authentication of users and encapsulates the details of the authentication mechanism.

The Authentication Enforcer pattern handles the authentication logic across all of the actions within the Web tier. It assumes responsibility for authentication and verification of user identity and delegates direct interaction with the security provider to a helper class. This applies not only to password-based authentication, but also to client certificate-based authentication and other authentication schemes that provide a user's identity, such as Kerberos.

Centralizing authentication and encapsulating the mechanics of the authentication process behind a common interface eases migration to evolving authentication requirements and facilitates reuse. The generic interface is protocol-independent and can be used across tiers. This is especially important in cases where you have clients that access the Business tier or Web Services tier components directly.

Structure

Figure 9-1 shows a class diagram of the Authentication Enforcer pattern. The core Authentication Enforcer consists of three classes: AuthenticationEnforcer, RequestContext, and Subject.

Figure 9-1. Authentication Enforcer class diagram

Participants and Responsibilities

Figure 9-1 is a class diagram of the Authentication Enforcer pattern participant classes. Their responsibilities are:

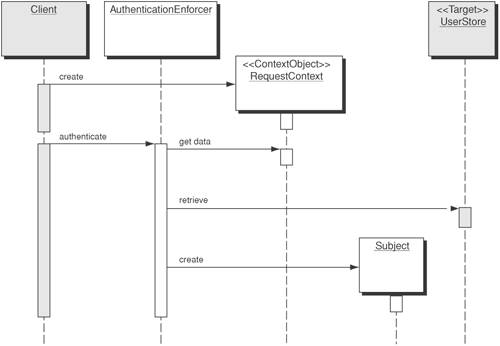

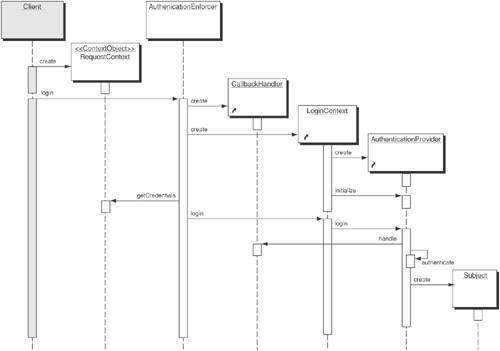

Figure 9-2 is a sequence diagram representing the interaction of the participants.

Figure 9-2. Sequence diagram for Authentication Enforcer

Figure 9-2 depicts a typical client authentication using Authentication Enforcer. In this case, the Client is a SecureBaseAction that delegates to the AuthenticationEnforcer, which retrieves the appropriate user credentials from the UserStore. Upon successful authentication, the AuthenticationEnforcer creates a Subject instance for the requesting user and stores it in its cache.
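The interaction in Figure 9-2 can be sketched with JDK classes only. This is a minimal illustration, not the pattern's reference implementation: `RequestContext`, `UserStore`, `UserPrincipal`, and `SketchAuthenticationEnforcer` are hypothetical stand-ins for the participants, failure is reported through an empty `Optional` rather than an exception, and the unsalted SHA-256 hash is used only to keep the example short. As an assumption of this sketch, the supplied credentials are verified before the cache is consulted, so that knowing a user identifier alone is never enough to obtain a cached Subject.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.Principal;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import javax.security.auth.Subject;

/** Hypothetical stand-in for the RequestContext participant. */
final class RequestContext {
    final String userId;
    final String password;

    RequestContext(String userId, String password) {
        this.userId = userId;
        this.password = password;
    }
}

/** Hypothetical principal holding only the user's identifier. */
final class UserPrincipal implements Principal {
    private final String name;
    UserPrincipal(String name) { this.name = name; }
    @Override public String getName() { return name; }
}

/** Hypothetical in-memory UserStore holding one password hash per user. */
final class UserStore {
    private final Map<String, byte[]> hashes = new ConcurrentHashMap<>();

    void register(String userId, String password) {
        hashes.put(userId, hash(password));
    }

    byte[] lookupHash(String userId) {
        return hashes.get(userId);
    }

    // Unsalted SHA-256 keeps the sketch short; it is not a recommendation.
    static byte[] hash(String password) {
        try {
            return MessageDigest.getInstance("SHA-256")
                    .digest(password.getBytes(StandardCharsets.UTF_8));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }
}

final class SketchAuthenticationEnforcer {
    private final UserStore store;
    private final Map<String, Subject> cache = new ConcurrentHashMap<>();

    SketchAuthenticationEnforcer(UserStore store) {
        this.store = store;
    }

    /** Returns the user's Subject, or empty when authentication fails. */
    Optional<Subject> login(RequestContext request) {
        if (request.userId == null || request.password == null) {
            return Optional.empty();
        }
        // Compare the supplied credential with the stored hash.
        byte[] known = store.lookupHash(request.userId);
        if (known == null
                || !MessageDigest.isEqual(known, UserStore.hash(request.password))) {
            return Optional.empty();
        }
        // Reuse the cached Subject, or create and cache one on first login.
        return Optional.of(cache.computeIfAbsent(request.userId, id -> {
            Subject subject = new Subject();
            subject.getPrincipals().add(new UserPrincipal(id));
            return subject;
        }));
    }
}
```

A caller in the role of SecureBaseAction would build a `RequestContext` from the incoming request, call `login`, and refuse to proceed when the result is empty.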

AuthenticationEnforcer retrieves the user's credentials from the RequestContext and attempts to locate the user's Subject instance in its cache based upon the user identifier supplied in the credentials. This identifier may vary depending upon the authentication mechanism and may require some form of mapping, for example, if an LDAP DN retrieved from a client certificate is used as a credential. If it cannot locate an entry in the cache, the AuthenticationEnforcer retrieves the user's corresponding credentials from the UserStore. (Typically this will contain a hash of the password.) The AuthenticationEnforcer verifies that the user-supplied credentials match the known credentials for that user in the UserStore and, upon successful verification, creates a Subject for that user. The AuthenticationEnforcer then places the Subject in the cache and returns it to the SecureBaseAction.

Strategies

The Authentication Enforcer pattern provides a consistent and structured way to handle authentication and verification of requests across actions within Web-tier components, and it supports a Model-View-Controller (MVC) architecture without duplicating code. The three strategies for implementing the Authentication Enforcer pattern are the Container Authenticated Strategy, the Authentication Provider Strategy (using a third-party product), and the JAAS Login Module Strategy.

Container Authenticated Strategy

The Container Authenticated Strategy is usually considered the most straightforward solution: the container performs the authentication process on behalf of the application. The J2EE specification mandates support for HTTP Basic Authentication, Form-Based Authentication, Digest-Based Authentication, and Client-Certificate Authentication. The J2EE container takes responsibility for authenticating the user using one of these four methods.

These mechanisms do not define how the credentials are verified; they define only how the credentials are retrieved from the user. How the container performs the authentication with the supplied credentials depends on the vendor-specific J2EE container implementation. Most J2EE containers handle the authentication process by associating the current HttpServletRequest object, and its internal session, with the user. By associating a session with the user, the container ensures that the initiating request and all subsequent requests from the same user are associated with the same session until the user logs out or the authenticated session expires. Once the user is authenticated, the Web application can make use of the following methods provided by the HttpServletRequest interface.

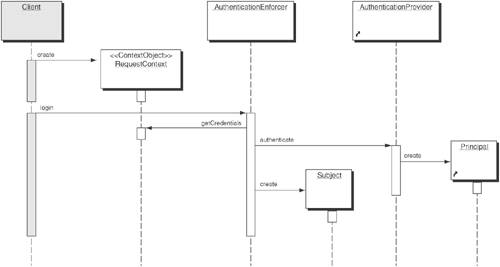

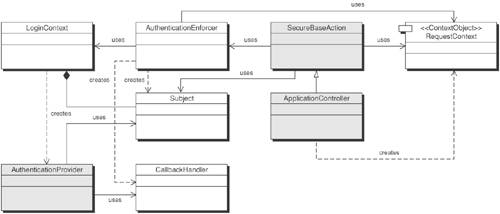

Authentication Provider-Based Strategy

This strategy adopts a third-party authentication provider to authenticate users of J2EE applications. Figure 9-3 illustrates how the authentication provider is responsible for authenticating the user, while the Authentication Enforcer extracts the user's Principal and creates a Subject instance with that Principal.

Figure 9-3. Sequence diagram for Authentication Provider strategy

As you can see in Figure 9-3, the authentication provider takes care of the authentication and creation of the Principal. The Authentication Enforcer simply creates the Subject and adds the Principal and the Credential to it. The Subject then holds a collection of permissions associated with all the Principals for that user. The Subject object can then be used in the application to identify, and also to authorize, the user.

JAAS Login Module Strategy

The JAAS Login Module Strategy is more involved, because it takes responsibility for authentication away from the container and moves it to the application, which uses an authentication provider. This provides a pluggable approach with more programmatic control, offering more flexibility to applications that require additional authentication mechanisms not supported by the J2EE specification. In essence, JAAS provides a standard programmatic approach to nonstandard authentication mechanisms. It also allows incorporation of multifactor authentication using security providers based on smart cards and biometrics. Figure 9-4 shows the additional components required by this strategy.

Figure 9-4. Class diagram for JAAS Login Module strategy

In this strategy, the AuthenticationEnforcer is implemented as a JAAS client that interacts with one or more JAAS LoginModules to perform authentication. The LoginModules are configured using a JAAS configuration file, which identifies the LoginModules intended for authentication. Each LoginModule is specified via its fully qualified class name and an authentication flag value that controls the overall authentication behavior. The flag values (Required, Requisite, Sufficient, and Optional) define the overall authentication process, which proceeds down the list of entries in the configuration file according to those flags.

The AuthenticationEnforcer instantiates a LoginContext, which loads the required LoginModules specified in the JAAS configuration file. To initiate authentication, the AuthenticationEnforcer invokes the LoginContext.login() method, which in turn calls the login() method of each LoginModule to perform the login and authentication. The LoginModule invokes a CallbackHandler to interact with the user and obtain the authentication credentials (such as a username and password, a smart card, or biometric samples). The LoginModule then authenticates the user by verifying those credentials. If authentication is successful, the LoginModule populates the Subject with a Principal representing the user. The calling application can retrieve the authenticated Subject by calling the LoginContext's getSubject method. Figure 9-5 shows the sequence diagram for the JAAS Login Module strategy.

Figure 9-5. Sequence diagram for JAAS Login Module strategy
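The LoginContext, LoginModule, and CallbackHandler interaction described above can be exercised end to end with a small self-contained sketch. Everything named here is an assumption made for illustration: `JaasSketch`, `DemoLoginModule`, `NamedPrincipal`, and `InMemoryConfig` are hypothetical, the single hard-coded account stands in for a real credential check, and the JAAS configuration file is replaced by an in-memory `Configuration` with one `REQUIRED` entry so that the example needs no external files.

```java
import java.io.IOException;
import java.security.Principal;
import java.util.Arrays;
import java.util.Collections;
import java.util.Map;
import javax.security.auth.Subject;
import javax.security.auth.callback.*;
import javax.security.auth.login.*;
import javax.security.auth.spi.LoginModule;

final class JaasSketch {

    /** Hypothetical principal holding only a user name. */
    static final class NamedPrincipal implements Principal {
        private final String name;
        NamedPrincipal(String name) { this.name = name; }
        @Override public String getName() { return name; }
    }

    /** Hypothetical LoginModule accepting one hard-coded demo account. */
    public static final class DemoLoginModule implements LoginModule {
        private Subject subject;
        private CallbackHandler handler;
        private String verifiedUser;   // set by login(), consumed by commit()
        private Principal principal;   // added by commit(), removed by logout()

        @Override public void initialize(Subject subject, CallbackHandler handler,
                Map<String, ?> sharedState, Map<String, ?> options) {
            this.subject = subject;
            this.handler = handler;
        }

        @Override public boolean login() throws LoginException {
            NameCallback name = new NameCallback("user: ");
            PasswordCallback pass = new PasswordCallback("password: ", false);
            try {
                handler.handle(new Callback[] {name, pass});
            } catch (IOException | UnsupportedCallbackException e) {
                throw new LoginException("Cannot obtain credentials: " + e.getMessage());
            }
            char[] supplied = pass.getPassword();
            pass.clearPassword();
            if (!"chris".equals(name.getName()) || supplied == null
                    || !Arrays.equals(supplied, "demo-only".toCharArray())) {
                throw new FailedLoginException("Bad credentials");
            }
            verifiedUser = name.getName();
            return true;
        }

        @Override public boolean commit() {
            if (verifiedUser == null) return false;
            principal = new NamedPrincipal(verifiedUser);
            subject.getPrincipals().add(principal);
            return true;
        }

        @Override public boolean abort() { verifiedUser = null; return true; }

        @Override public boolean logout() {
            if (principal != null) subject.getPrincipals().remove(principal);
            principal = null;
            verifiedUser = null;
            return true;
        }
    }

    /** In-memory replacement for the JAAS configuration file. */
    static final class InMemoryConfig extends Configuration {
        @Override public AppConfigurationEntry[] getAppConfigurationEntry(String name) {
            if (!"MyLoginModule".equals(name)) return null;
            return new AppConfigurationEntry[] {
                new AppConfigurationEntry(DemoLoginModule.class.getName(),
                        AppConfigurationEntry.LoginModuleControlFlag.REQUIRED,
                        Collections.<String, Object>emptyMap())
            };
        }
    }

    /** Plays the AuthenticationEnforcer role: builds a LoginContext and logs in. */
    static Subject authenticate(String user, String password) throws LoginException {
        CallbackHandler handler = callbacks -> {
            for (Callback cb : callbacks) {
                if (cb instanceof NameCallback) {
                    ((NameCallback) cb).setName(user);
                } else if (cb instanceof PasswordCallback) {
                    ((PasswordCallback) cb).setPassword(password.toCharArray());
                } else {
                    throw new UnsupportedCallbackException(cb);
                }
            }
        };
        LoginContext ctx =
                new LoginContext("MyLoginModule", null, handler, new InMemoryConfig());
        ctx.login();               // throws LoginException when authentication fails
        return ctx.getSubject();
    }
}
```

Because the LoginContext loads the module reflectively by class name, the module class is declared public with a public no-argument constructor. Swapping in a different mechanism means changing only the configuration entry and the module it names, which is the pluggability this strategy is meant to provide.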

Consequences

By employing the Authentication Enforcer pattern, developers benefit from reduced code and from consolidating authentication and verification in one class. The Authentication Enforcer pattern encapsulates the authentication process needed across actions into one centralized point that all other components can leverage. By centralizing authentication logic and wrapping it in a generic Authentication Enforcer, authentication mechanism details can be hidden and the application can be protected from changes in the underlying authentication mechanism. This is necessary because organizations change products, vendors, and platforms throughout the lifetime of an enterprise application.

A centralized approach to authentication reduces the number of places where authentication mechanisms are accessed and thereby reduces the chances of security holes due to misuse of those mechanisms. The Authentication Enforcer enables authenticating users by means of various authentication techniques that allow the application to appropriately identify and distinguish users' credentials. A centralized approach also forms the basis for authorization, which is discussed in the Authorization Enforcer pattern. The Authentication Enforcer also provides a generic interface that allows it to be used across tiers. This is important if you need to authenticate on more than one tier and do not want to replicate code. Authentication is a key security requirement for almost every application, and the Authentication Enforcer provides a reusable approach for authenticating users.

Sample Code

Examples 9-1, 9-2, and 9-3 illustrate different authentication configurations that can be specified in the web.xml file of a J2EE application deployment. Example 9-4 shows the programmatic approach to authentication.

Example 9-1. Basic HTTP authentication entry in the web.xml file

    <login-config>
      <auth-method>BASIC</auth-method>
    </login-config>

Example 9-2. Form-based authentication entry in the web.xml file

    <!-- LOGIN AUTHENTICATION -->
    <login-config>
      <auth-method>FORM</auth-method>
      <realm-name>default</realm-name>
      <form-login-config>
        <form-login-page>login.jsp</form-login-page>
        <form-error-page>error.jsp</form-error-page>
      </form-login-config>
    </login-config>

Example 9-3. Client certificate-based authentication entry in the web.xml file

    <login-config>
      <auth-method>CLIENT-CERT</auth-method>
    </login-config>

Example 9-4. JAAS authentication strategy code example

    package com.csp.web;

    import javax.security.auth.Subject;
    import javax.security.auth.login.LoginContext;
    import javax.security.auth.login.LoginException;

    public class AuthenticationEnforcer {

        public Subject login(RequestContext request)
                throws InvalidLoginException {
            // Declared outside the try block so that it stays in scope below
            LoginContext ctx;

            // 1. Instantiate the LoginContext
            //    and load the LoginModule
            try {
                ctx = new LoginContext("MyLoginModule",
                                       new WebCallbackHandler(request));
            } catch (LoginException | SecurityException e) {
                System.err.println("LoginContext not created. "
                                   + e.getMessage());
                // Assumes InvalidLoginException offers a (String) constructor
                throw new InvalidLoginException(e.getMessage());
            }

            // 2. Invoke the login method
            try {
                ctx.login();
            } catch (LoginException le) {
                System.out.println("Authentication failed");
                throw new InvalidLoginException(le.getMessage());
            }
            System.out.println("Authentication succeeded");

            // 3. Get the authenticated Subject
            return ctx.getSubject();
        }
    }

Security Factors and Risks

The following security factors and risks apply when using the Authentication Enforcer pattern and its strategies.

Reality Check

The following reality checks should be considered before implementing an Authentication Enforcer pattern.

Related Patterns

The following are patterns related to the AuthenticationEnforcer.

Authorization Enforcer

Problem

Many components need to verify that each request is properly authorized at the method and link level. For applications that cannot take advantage of container-managed security, this custom code has the potential to be replicated.

In large applications, where requests can take multiple paths to access multiple business functions, each component needs to verify access at a fine-grained level. Just because a user is authenticated does not mean that the user should have access to every resource available in the application. At a minimum, an application has two types of users: common end users, and administrators who perform administrative tasks. Many applications have several different types of users and roles, each requiring access based on a set of criteria defined by the business rules and policies specific to a resource. Based on the defined criteria, the application must ensure that a user can access only the resources, and only in the manner, that the user is permitted.

Forces

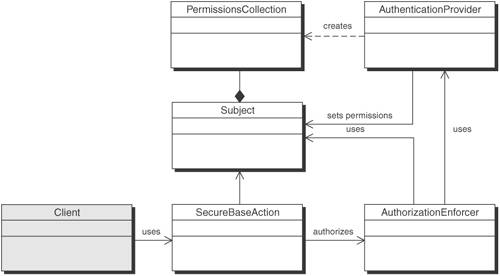

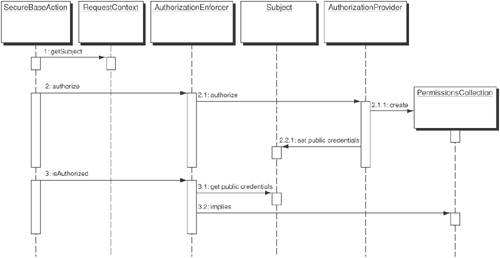

Solution

Create an Access Controller that will perform authorization checks using standard Java security API classes.

The AuthorizationEnforcer provides a centralized point for programmatically authorizing access to resources. In addition to centralizing authorization, it also serves to encapsulate the details of the authorization mechanics. With programmatic authorization, access control to resources can be implemented in a multitude of ways. Using an AuthorizationEnforcer provides a generic encapsulation of authorization mechanisms by defining a standardized way of controlling access to Web-based applications. It provides fine-grained access control beyond simple URL restriction: it can restrict the links displayed in a page or a header, and it can control the data displayed within a table or list, based on user permissions.

Structure

Figure 9-6 shows the AuthorizationEnforcer class diagram.

Figure 9-6. Authorization Enforcer class diagram

Participants and Responsibilities

Figure 9-7 shows a sequence diagram depicting the authorization of a user to a permission using the Authorization Enforcer.

Figure 9-7. Authorization Enforcer sequence diagram

Strategies

Three strategies are commonly adopted to provide authorization using the Authorization Enforcer pattern. The first uses an authorization provider, a third-party security solution that provides authentication and authorization services. The second is a purely programmatic authorization strategy that makes use of the Java 2 security API classes and leverages the Java 2 Permissions class. The third is a JAAS authorization strategy that makes use of JAAS principal-based policy files and takes advantage of the underlying JAAS programmatic authorization mechanism for populating and checking a user's access privileges. The J2EE container-managed authorization strategy is not discussed further here; this strategy, or more correctly its implementation, was found to be too static and inflexible.

Authorization Provider Strategy

In this strategy, the Authorization Enforcer makes use of a third-party security provider that handles authentication and provides policy-based access control to J2EE-based application components.

In a typical authorization scenario (see Figure 9-7), the client (an Application Controller or extended action class) wants to perform a permission check on a particular user, defined in the Subject instance retrieved from his or her session. Prior to the illustrated flow, the SecureBaseAction class would have used the AuthenticationEnforcer to authenticate the user and then placed that user's Subject into the session. The Subject object can subsequently be retrieved from the RequestContext. The flow illustrated in Figure 9-7 proceeds as follows:

Sometime later, the SecureBaseAction needs to check that a user has a specific Permission and calls the isAuthorized method of the AuthorizationEnforcer.

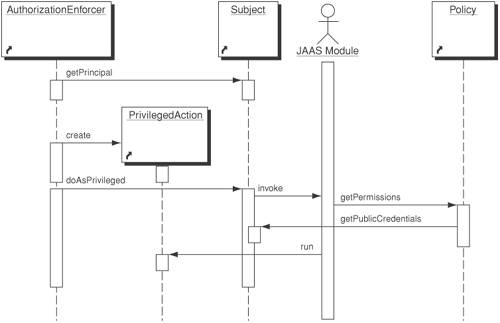
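The permission check at the heart of this flow can be sketched with the standard `java.security` permission classes. This is an illustrative sketch only: `ResourcePermission`, `NamedPrincipal`, and `SketchAuthorizationEnforcer` are hypothetical names, permissions are granted per principal name in memory, and the dotted naming scheme (`form.read`, `form.*`) is an assumption chosen so that `BasicPermission` wildcard matching can express both coarse and fine-grained grants.

```java
import java.security.BasicPermission;
import java.security.Permission;
import java.security.PermissionCollection;
import java.security.Permissions;
import java.security.Principal;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import javax.security.auth.Subject;

/** Hypothetical named permission, for example "form.update" or "form.*". */
final class ResourcePermission extends BasicPermission {
    private static final long serialVersionUID = 1L;

    ResourcePermission(String name) {
        super(name);
    }
}

/** Hypothetical principal holding only a user or role name. */
final class NamedPrincipal implements Principal {
    private final String name;
    NamedPrincipal(String name) { this.name = name; }
    @Override public String getName() { return name; }
}

final class SketchAuthorizationEnforcer {
    // Permissions granted to each principal name.
    private final Map<String, PermissionCollection> granted = new ConcurrentHashMap<>();

    /** Grants a permission to a principal; may be called at runtime. */
    void grant(String principalName, Permission permission) {
        granted.computeIfAbsent(principalName, n -> new Permissions()).add(permission);
    }

    /** True if any Principal of the Subject holds a permission implying the required one. */
    boolean isAuthorized(Subject subject, Permission required) {
        if (subject == null || required == null) {
            return false;
        }
        for (Principal p : subject.getPrincipals()) {
            PermissionCollection perms = granted.get(p.getName());
            if (perms != null && perms.implies(required)) {
                return true;
            }
        }
        return false;
    }

    /** Convenience helper for the sketch: a Subject with a single named Principal. */
    static Subject subjectFor(String principalName) {
        Subject subject = new Subject();
        subject.getPrincipals().add(new NamedPrincipal(principalName));
        return subject;
    }
}
```

For example, granting `form.*` to an administrator and only `form.read` and `form.update` to an editor lets `isAuthorized` allow the editor to update a form while denying `form.delete`, without promoting the editor to the administrator role.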

Programmatic Authorization Strategy

The Programmatic Authorization Strategy is a purely programmatic approach to authorization. Its advantage is that it is flexible enough to easily accommodate new types of permissions for the variety of resources that you want to protect. It allows developers to create arbitrary permissions and store them in the PermissionsCollection class, as demonstrated in Figure 9-7. These permissions can be created dynamically at runtime as resources are created.

For example, consider an application that allows administrators to upload new forms. Those forms may have access-control requirements that do not correspond to existing roles. You may need to create a resource permission that specifies the name of the form, and then assign that permission to a user or group of users as necessary.

It is often necessary not only to deny access to a particular link on a page, but also to hide it from users who lack the permissions to view its contents. In this case, a custom tag library can be constructed to provide tags for defining permission-based access to links and other resources in the JSPs. Example 9-5 shows a JSP that uses such a library. In the example, a permission tag protects the admin page from being accessed by anyone without the "admin" permission and the application page from anyone without the "user" permission.

Example 9-5. JSP utilizing permission enforcement custom tag library

    <TD>
      <%-- display administration links --%>
      <pg:permission forward="admin">
        <strong>Administration</strong>
        <ul>
          <pg:permission forward="admin">
            <li>
              <pg:link forward="admin">
                <pg:message bundle="common" key="text.admin"/>
              </pg:link>
            </li>
          </pg:permission>
        </ul>
      </pg:permission>
      <%-- display user links --%>
      <pg:permission forward="user">
        <strong>Application</strong>
        <ul>
          <pg:permission forward="user">
            <li>
              <pg:link forward="user">
                <pg:message bundle="common" key="text.user"/>
              </pg:link>
            </li>
          </pg:permission>
        </ul>
      </pg:permission>
    </TD>

In Example 9-5, a table with admin and user links is rendered based on the requester's permissions. A user who has both the admin and user permissions sees both links. Regular users see only the user link. Public users see neither. Example 9-6 shows the custom tag library used in Example 9-5.

Example 9-6. Permission enforcement custom tag library

    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE taglib PUBLIC
      "-//Sun Microsystems, Inc.//DTD JSP Tag Library 1.2//EN"
      "http://java.sun.com/dtd/web-jsptaglibrary_1_2.dtd">
    <taglib>
      <tag>
        <name>permission</name>
        <tag-class>
          coresecuritypatterns.web.tags.PermissionTag
        </tag-class>
        <body-content>JSP</body-content>
        <attribute>
          <name>require</name>
          <required>false</required>
          <rtexprvalue>false</rtexprvalue>
          <type>java.lang.String</type>
        </attribute>
        <attribute>
          <name>any</name>
          <required>false</required>
          <rtexprvalue>false</rtexprvalue>
          <type>java.lang.String</type>
        </attribute>
        <attribute>
          <name>deny</name>
          <required>false</required>
          <rtexprvalue>false</rtexprvalue>
          <type>java.lang.String</type>
        </attribute>
        <attribute>
          <name>action</name>
          <required>false</required>
          <rtexprvalue>false</rtexprvalue>
          <type>java.lang.String</type>
        </attribute>
        <attribute>
          <name>forward</name>
          <required>false</required>
          <rtexprvalue>false</rtexprvalue>
          <type>java.lang.String</type>
        </attribute>
        <attribute>
          <name>relative</name>
          <required>false</required>
          <rtexprvalue>false</rtexprvalue>
          <type>java.lang.String</type>
        </attribute>
      </tag>
    </taglib>

JAAS Authorization Strategy

The JAAS Authorization Strategy is less flexible than a purely programmatic authorization strategy, but it offers a standard JAAS LoginModule-based approach to authorization. It also uses a declarative means of mapping permissions to resources, which makes it a good approach for applications that do not support dynamic resource creation. Developers can map permissions to resources, and roles to permissions, declaratively at deployment time, eliminating programmatic mappings that often result in bugs and security vulnerabilities.

Figure 9-8 shows the sequence diagram of the Authorization Enforcer implemented using the JAAS Authorization Strategy. The key participants and their roles are as follows:

Figure 9-8. JAAS Authorization Enforcer Strategy sequence diagram

Example 9-7 shows a JAAS authorization policy file (MyJAASAux.policy). Example 9-8 shows the source code for a JAAS-based authorization strategy (SampleAuthorizationEnforcer.java), and Example 9-9 shows the Java source code for the PrivilegedAction implementation (MyPrivilegedAction.java).

Example 9-7. JAAS authorization policy file (MyJAASAux.policy)

    // grant the LoginModule for enforcing
    // authorization permissions
    grant codebase "file:./MyLoginModule.jar" {
        permission javax.security.auth.AuthPermission "modifyPrincipals";
    };

    grant codebase "file:./SampleAuthorizationEnforcer.jar" {
        permission javax.security.auth.AuthPermission "doAsPrivileged";
    };

    /** User-Based Access Control Policy
     ** for executing the MyAction class
     ** instantiated by MyJAASAux.policy
     **/
    grant codebase "file:./MyPrivilegedAction.jar",
        Principal csp.principal.myPrincipal "chris" {
        permission java.util.PropertyPermission "java.home", "read";
        permission java.util.PropertyPermission "user.home", "read";
        permission java.io.FilePermission "Chris.txt", "read";
    };

Example 9-8. SampleAuthorizationEnforcer.java

    import java.io.*;
    import java.util.*;
    import java.security.Principal;
    import java.security.PrivilegedAction;
    import javax.security.auth.*;
    import javax.security.auth.callback.*;
    import javax.security.auth.login.LoginContext;

    public class SampleAuthorizationEnforcer {

        public void executeAsPrivileged(LoginContext lc) {
            Subject mySubject = lc.getSubject();

            // Identify the Principals we have
            Iterator principalIterator =
                mySubject.getPrincipals().iterator();
            System.out.println("Authenticated user - Principals:");
            while (principalIterator.hasNext()) {
                Principal p = (Principal) principalIterator.next();
                System.out.println("\t" + p.toString());
            }

            // Execute the required Action
            // as the authenticated Subject
            PrivilegedAction action = new MyPrivilegedAction();
            Subject.doAsPrivileged(mySubject, action, null);
        }
    }

Example 9-9. MyPrivilegedAction.java

    import java.io.File;
    import java.security.PrivilegedAction;

    public class MyPrivilegedAction implements PrivilegedAction {

        public Object run() {
            System.out.println("\nYour java.home property: "
                + System.getProperty("java.home"));
            System.out.println("\nYour user.home property: "
                + System.getProperty("user.home"));

            // Report whether the file is present in the working directory
            File f = new File("MyAction.txt");
            System.out.print("\nMyAction.txt does ");
            if (!f.exists())
                System.out.print("not ");
            System.out.println("exist in working directory.");
            return null;
        }
    }

Consequences

Security Factors and Risks

Authorization. Protect resources on a case-by-case basis. Fine-grained authorization allows you to properly protect the application without imposing a one-size-fits-all approach that could expose unnecessary security vulnerabilities. A common security vulnerability arises from access-control models that are too coarse-grained. When the model is too coarse-grained, you inevitably have users who do not fit nicely into the defined role-permission mappings. Often, administrators are forced to give these users elevated access due to business requirements, which leads to increased exposure.

For instance, suppose you have two groups of users that you break into two roles (staff and admin). The staff role can only read form data. The admin role can create, read, update, and delete (CRUD) form data. You find that a few users need to update the form data, though they should not be able to create or delete it. You are now forced to put them into the admin role, giving them these additional permissions, because your model is too coarse-grained.

Reality Check

Too complex. Implementing a JAAS Authorization Strategy, or any but the most simplistic authorization strategy, requires an in-depth understanding of the Java 2 security model and a variety of Java security APIs. As with any security mechanism, complexity can lead to vulnerabilities. Make sure you understand how your resources are being protected through this approach before diving in and implementing it.

Related Patterns

Intercepting Validator

Problem

You need a simple and flexible mechanism to scan and validate data passed in from the client for malicious code or malformed content. The data could be form-based, queries, or even XML content.

Several well-known attack strategies involve compromising the system by sending requests containing invalid data or malicious code. Such attacks include injection of malicious scripts, SQL statements, XML content, and invalid data through a form field that the attacker knows will be inserted into the application, causing a potential failure or denial of service. Embedded SQL commands can go further, allowing the attacker to wreak havoc on the underlying database. These types of attacks require the application to intercept and scrub the data prior to its use. While some of the approaches for scrubbing the data are well known, it is a constant battle to stay up to date as new attacks are discovered.

Forces

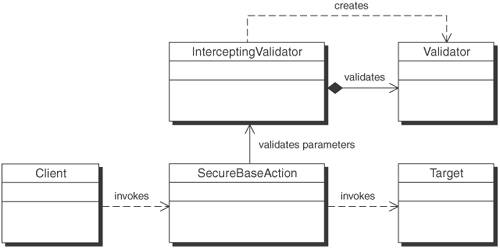

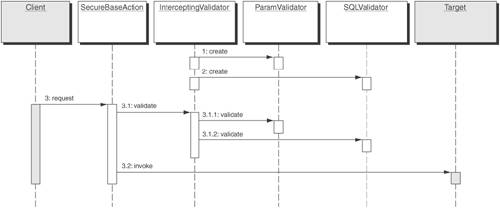

Solution

Use an Intercepting Validator to cleanse and validate data prior to its use within the application, using dynamically loadable validation logic.

A good application will verify all input, for both business and security reasons. Similar to the Intercepting Filter pattern [CJP2], the Intercepting Validator makes use of a pluggable filter approach. The filters can be applied declaratively based on URL, allowing different requests to be mapped to different filter chains. In the case of the Intercepting Validator, filtering is restricted to preprocessing of requests and consists primarily of validation (yes or no) logic that determines whether or not the request should continue, as opposed to manipulation logic that would require business logic above and beyond the security concerns of the application.

While applications could incorporate security filters into an existing Intercepting Filter implementation, the preferred approach is to employ both and keep them separate. Typically, the Intercepting Validator is invoked earlier in the request-handling process than the Intercepting Filter and consists of a more static and reusable set of filters, because it is not tied to any particular set of business rules. Business rules are often tied to the business logic and must be performed in the Business tier, but security rules are often independent of the actual application and should be performed up front in the Web tier.

Client-side validations are inherently insecure. It is easy to spoof the submission of a Web page and bypass any scripting on the original page. [Vau] For the application to be secure, validations must be performed on the server side. This does not detract from the value of client-side validations. Client-side validations via JavaScript make sense for business rules and should be optionally supported. They provide the user with validation feedback before the form is submitted, which increases the performance perceived by the end user and saves the server the cost of processing errors in the vast majority of cases. Input from malicious users who circumvent client-side validations must still be validated, though. Therefore, to be prudent, validation checks must always be performed on the server side, whether or not client-side checking is done as well.

Structure

Figure 9-9 depicts a class diagram of the Intercepting Validator pattern.

Figure 9-9. Intercepting Validator class diagram

Participants and Responsibilities

Figure 9-10 shows an Intercepting Validator sequence diagram.

Figure 9-10. Intercepting Validator sequence diagram

Figure 9-10 illustrates a sequence of events for the Intercepting Validator pattern described by the following components.

Figure 9-10 depicts a use case scenario of how a request from a client to a resource gets properly validated to ensure against attacks based on malformed data. The scenario follows these steps:
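As a rough illustration of the idea behind Figure 9-10, the sketch below maps a request path to a chain of pluggable validators and collects their errors before any business logic runs. It is not the pattern's reference implementation: the `Validator` interface, `MaxLengthValidator`, `PatternValidator`, and `InterceptingValidatorSketch` are hypothetical names, the request is modeled as a plain parameter map so that the example depends only on the JDK, and the allow-list regular expression is an assumption chosen for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

/** Hypothetical validator contract: returns error keys, empty when the input is valid. */
interface Validator {
    List<String> validate(Map<String, String> params);
}

/** Rejects a parameter whose value is longer than a fixed limit. */
final class MaxLengthValidator implements Validator {
    private final String field;
    private final int max;

    MaxLengthValidator(String field, int max) {
        this.field = field;
        this.max = max;
    }

    @Override
    public List<String> validate(Map<String, String> params) {
        String value = params.get(field);
        return (value != null && value.length() > max)
                ? Collections.singletonList("errors." + field + ".length")
                : Collections.<String>emptyList();
    }
}

/** Accepts a parameter only if it is present and wholly matches an allow-list pattern. */
final class PatternValidator implements Validator {
    private final String field;
    private final Pattern allowed;

    PatternValidator(String field, String regex) {
        this.field = field;
        this.allowed = Pattern.compile(regex);
    }

    @Override
    public List<String> validate(Map<String, String> params) {
        String value = params.get(field);
        return (value != null && allowed.matcher(value).matches())
                ? Collections.<String>emptyList()
                : Collections.singletonList("errors." + field + ".format");
    }
}

final class InterceptingValidatorSketch {
    // Validator chains keyed by request path.
    private final Map<String, List<Validator>> chains = new HashMap<>();

    void register(String path, Validator... validators) {
        chains.put(path, Arrays.asList(validators));
    }

    /** Runs every validator registered for the path and returns all errors found. */
    List<String> validate(String path, Map<String, String> params) {
        List<String> errors = new ArrayList<>();
        for (Validator v : chains.getOrDefault(path, Collections.<Validator>emptyList())) {
            errors.addAll(v.validate(params));
        }
        return errors;
    }
}
```

A controller would call `validate` first and forward the request to its target only when the returned list is empty. Matching whole values against an allow-list pattern, rather than searching for known bad characters, is a design choice of this sketch.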

Strategies

Different validators are used to validate different types of data in a request, or to validate that data in a different manner. For example, certain form fields need to be validated for size constraints. Form fields containing data that will become part of an SQL query or update require SQL character validation to ensure that embedded SQL commands cannot be entered.

The logic to perform the validations can often be cumbersome. It can be simplified through use of the regular expressions package introduced in J2SE 1.4 (java.util.regex). This package contains classes that allow developers to perform regular expression matches as they would in the Perl programming language. These classes can be used for validation implementations.

Example 9-10 illustrates a simple action configuration in an MVC architecture using Apache Struts. To implement a simple Intercepting Validator, the parameter attribute can define a key that is used to apply a Validator against an ActionForm or HTTP request. Alternatively, a separate validation file, such as the validator.xml shown in Example 9-11, can define generic sets of validation rules to be applied to a form or other input request. The Intercepting Validator can apply such XML-based validation definitions to input requests dynamically, using Validator instances that are coded to be configured from the XML content. Both scenarios are illustrated in Figure 9-10.

Example 9-10. Web data validation using Apache Struts

    <struts-config>
      <!-- Action Form Beans -->
      <form-beans>
        <form-bean name="simpleForm" type="SimpleForm"/>
      </form-beans>
      <!-- action mappings -->
      <action-mappings>
        <action path="/submitSimpleForm"
                type="SimpleFormAction"
                name="simpleForm"
                scope="request"
                validate="true"
                input="simpleForm.jsp"
                parameter="additional_security_validator_identifier">
          <forward name="continueWorkFlow" path="continue.jsp"/>
        </action>
      </action-mappings>
    </struts-config>

Example 9-11. Form validation XML using Apache Struts

    <form-validation>
      <formset>
        <form name="simpleForm">
          <field dataItemName="dataToSave" rule="preprocessorRule">
            <var name="minValue" value="1"/>
            <var name="maxValue" value="9999"/>
            <var name="maskingExpression"
                 value="^\(?(\d{3})\)?[-| ]?(\d{3})[-| ]?(\d{4})$"/>
            <var msg="errors.dataToSave"/>
          </field>
        </form>
      </formset>
    </form-validation>

Example 9-12. SecureBaseAction class using InterceptingValidator with Apache Struts

    import java.lang.reflect.Method;
    import javax.servlet.http.HttpServletRequest;
    import org.apache.struts.action.Action;
    import org.apache.struts.action.ActionMapping;
    import org.apache.struts.action.ActionErrors;

    /**
     * This code is based on Apache Struts examples.
     * It requires a working knowledge of Struts, not
     * explained here.
     */
    public final class SecureBaseAction extends Action {

        public ActionErrors validate(ActionMapping actionmapping,
                                     HttpServletRequest request) {
            // perform basic input validation
            Validator validator =
                InterceptingValidator.getValidator(actionmapping);
            ValidationErrors errors = validator.process(request);
            if (errors.hasErrors())
                return InterceptingValidator.transformToActionErrors(errors);

            // For any additional externalized processing, use the
            // key 'additional_security_validator_identifier'
            // specified as 'parameter' attribute in action-mappings
            String externalizedProcessingKey =
                actionmapping.getParameter();
            ExternalizedValidator validatorEx =
                InterceptingValidator.getExternalizedValidator(
                    externalizedProcessingKey);
            errors = validatorEx.process(request);
            if (errors.hasErrors())
                return InterceptingValidator.transformToActionErrors(errors);

            // Alternatively,
            // use 'additional_security_validator_identifier' to
            // specify a class that implements command pattern
            // and invoke the 'process' method on the instantiation
            try {
                Class cls = InterceptingValidatorUtil.loadClass(
                    externalizedProcessingKey);
                Method method = InterceptingValidatorUtil.
                    getValidationActionMethod("process");
                InterceptingValidator.invoke(
                    cls, method, new Object[] {request});
            } catch (Exception ex) {
                log("Invocation exception", ex);
                return InterceptingValidator.transformToActionErrors(ex);
            }

            // All validators passed: report no errors
            return new ActionErrors();
        }
    }

An architect who is not inclined to use the Intercepting Validator pattern may end up forcing each developer to naively hardcode validation logic in each of the servlets, action classes, and form beans. Developers implementing business action classes (front controllers), who may not necessarily be security-aware, are prone to miss necessary validations. Example 9-13 illustrates this type of programmatic validation.

Example 9-13. SimpleFormAction using programmatic validation logic using Apache Struts

    import java.io.IOException;
    import javax.servlet.ServletException;
    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;
    import org.apache.struts.action.Action;
    import org.apache.struts.action.ActionError;
    import org.apache.struts.action.ActionErrors;
    import org.apache.struts.action.ActionForm;
    import org.apache.struts.action.ActionForward;
    import org.apache.struts.action.ActionMapping;

    /**
     * This code is taken from the Apache Struts examples.
     * It requires a working knowledge of Struts, not
     * explained here.
     */
    public final class SimpleFormAction extends Action {

        public ActionForward perform(ActionMapping actionmapping,
                ActionForm actionform,
                HttpServletRequest httpservletrequest,
                HttpServletResponse httpservletresponse)
                throws IOException, ServletException {
            // perform explicit validation since
            // it is not implicitly taken care of by
            // the web application framework
            ActionErrors actionerrors =
                actionform.validate(actionmapping, httpservletrequest);
            if (!actionerrors.empty()) {
                saveErrors(httpservletrequest, actionerrors);
                // redirect to input page with errors
                return new ActionForward(actionmapping.getInputForward());
            } else {
                return actionmapping.findForward("continueWorkFlow");
            }
        }
    }

    public class SimpleForm extends ActionForm {
        //...
        public ActionErrors validate(ActionMapping actionmapping,
                                     HttpServletRequest request) {
            // for each request/form parameter, code for validation
            ActionErrors errs = new ActionErrors();
            if (request.getParameter("param1").indexOf("&") != -1)
                errs.add(Action.ERROR_KEY,
                    new ActionError("error_unacceptable_parameter1"));
            //...
            return errs;
        }
    }

Consequences

Using the Intercepting Validator pattern helps in identifying malicious code and data injection attacks before the business logic processes the request. It ensures verification and validation of all inbound requests and safeguards the application from forged requests, parameter tampering, and validation failure attacks. In addition, the Intercepting Validator offers developers several key benefits:

Security Factors and Risks

Processing Overhead: Failure to validate input data exposes an application to a variety of attacks, such as malicious code injection, cross-site scripting (XSS), SQL injection attacks, and buffer overruns. The validation process adds some processing overhead and can cause application failure if the application fails to detect a buffer overflow or endless-loop attack.

Reality Check

Related Patterns
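
To close the Intercepting Validator discussion, the regular-expression validation described under Strategies can be sketched as follows. This is a minimal illustration, not the pattern's reference implementation: the class name RegexFieldValidator, its methods, and the rule for the quantity field are assumptions made for this example. The phone expression is the maskingExpression from Example 9-11.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

/** Hypothetical helper: checks request parameters against per-field regular expressions. */
class RegexFieldValidator {
    // Insertion-ordered so that failures are reported in rule order
    private final Map<String, Pattern> rules = new LinkedHashMap<>();

    /** Registers a rule; the whole parameter value must match the expression. */
    RegexFieldValidator addRule(String field, String regex) {
        rules.put(field, Pattern.compile(regex));
        return this;
    }

    /** Returns the names of all fields that are missing or do not match their rule. */
    List<String> validate(Map<String, String> params) {
        List<String> invalid = new ArrayList<>();
        for (Map.Entry<String, Pattern> rule : rules.entrySet()) {
            String value = params.get(rule.getKey());
            if (value == null || !rule.getValue().matcher(value).matches()) {
                invalid.add(rule.getKey());
            }
        }
        return invalid;
    }
}

class RegexFieldValidatorDemo {
    public static void main(String[] args) {
        RegexFieldValidator validator = new RegexFieldValidator()
            // maskingExpression taken from Example 9-11
            .addRule("phone", "^\\(?(\\d{3})\\)?[-| ]?(\\d{3})[-| ]?(\\d{4})$")
            // Assumed rule: an integer between 1 and 9999
            .addRule("quantity", "^[1-9]\\d{0,3}$");

        Map<String, String> request = new LinkedHashMap<>();
        request.put("phone", "555'; DROP TABLE users;--");
        request.put("quantity", "42");
        System.out.println("Rejected fields: " + validator.validate(request));
    }
}
```

Because every rule is an allow-list (the value must match in full), unexpected characters such as embedded SQL fragments are rejected without having to enumerate them.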

Secure Base Action

Problem

You want to consolidate interaction of all security-related components in the Web tier into a single point of entry for enforcing security and integration of security mechanisms.

Security-related data and methods are used across many or most of the Web tier components. Operations such as verifying authentication, checking authorization, and storing and retrieving session information are prevalent throughout the servlets and JSPs. Due to the nature of security, these operations are often tied together through their implementation. When many normal application components are exposed to many of these security-related components, flexibility and reuse are reduced because of the inherent underlying coupling of security components.

For example, in many applications, several different components set cookies directly. If a new security mandate were to prohibit the use of cookies due to a newly found security hole in a popular Web browser, the application would have to be changed in many different areas. There is a possibility that some code would be missed and a security hole opened up. New authentication mechanisms and validation checks are other examples of changing code that is often exposed to many Web components.

Forces

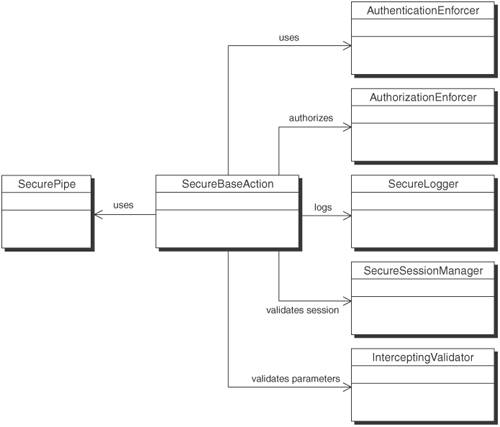

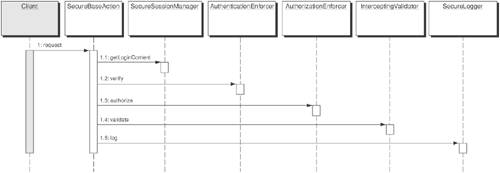

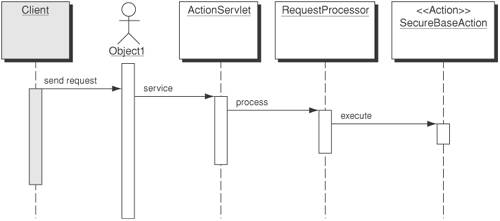

Solution

Use a Secure Base Action to coordinate security components and to provide Web tier components with a central access point for administering security-related functionality.

A Secure Base Action pattern can be used as a single point for security-related functionality within the Web tier. Web components such as Front Controllers [CJP2] and Application Controllers [CJP2] that inherit from it gain access to all of the security operations that are necessary throughout the front end. Authentication, authorization, validation, logging, and session management are areas that the Secure Base Action encapsulates and provides centralized access to.

Structure

Figure 9-11 depicts a class diagram for the Secure Base Action.

Figure 9-11. Secure Base Action class diagram

As shown in Figure 9-11, the client is a FrontController or ApplicationController that allows delegation of the security handling of the request to the SecureBaseAction. The SecureBaseAction in turn delegates the individual tasks to the appropriate classes.

Participants and Responsibilities

Figure 9-12 shows a client invoking the execute method of SecureBaseAction.

Figure 9-12. Secure Base Action sequence diagram

As shown in Figure 9-12, the SecureBaseAction invokes methods on all of the supporting security classes, ensuring that the request is authenticated, authorized, validated, and logged. The sequence is as follows:

Typically, the client would be a FrontController [CJP2] or an ApplicationController [CJP2] that would invoke the execute method prior to delegating to any other classes. You would not want to make more than one call per request, because it would cause redundant processing.

Strategies

MVC Style Secure Base Action Strategy

The Secure Base Action class is well suited for MVC frameworks (like Struts). Most MVC-based applications will have a base action class that provides the common services that all of the individual actions throughout the application need. It will often be this base action class that provides the operations specified in the Secure Base Action class. By consolidating a variety of security-related operations and separating them into the Secure Base Action, you achieve better segregation of responsibility. Developers who are responsible for the base action class in an application are usually not the same developers responsible for the security-related requirements. Secure Base Action provides a means for separating responsibility through inheritance or use of the Command [GoF] pattern. Figure 9-13 illustrates the sequence of events for an MVC-style strategy using Struts.

Figure 9-13. MVC style Secure Base Action strategy

Consequences

Using Secure Base Action helps in aggregating and enforcing security operations that include authentication, authorization, auditing, input validation, and other management functions before processing the request with presentation or business logic. By employing the Secure Base Action pattern, developers will realize the following benefits:

Sample Code

Example 9-14. Secure base action class

    package com.csp.web;

    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;
    import javax.security.auth.login.LoginContext;
    import javax.security.auth.Subject;

    public class SecureBaseAction {

        // Collaborating security components; their construction is not shown
        private SecureSessionManager secureSessionManager;
        private AuthenticationEnforcer authenticationEnforcer;
        private AuthorizationEnforcer authorizationEnforcer;
        private InterceptingValidator interceptingValidator;
        private SecureLogger secureLogger;

        /**
         * Execute method called on each request. All exceptions will bubble up.
         */
        public void execute(HttpServletRequest req, HttpServletResponse resp)
                throws Exception {

            // Get an instance of ContextFactory
            ContextFactory factory = ContextFactory.getInstance();

            // Get RequestContext from factory
            RequestContext rc =
                factory.getContext(req, Constants.Request_CONTEXT);

            // Authenticate only if the session has no verified login context
            LoginContext lc = secureSessionManager.getLoginContext(rc);
            if (!authenticationEnforcer.verify(lc)) {
                lc = authenticationEnforcer.authenticate(rc);
                secureSessionManager.setLoginContext(lc);
            }

            // Authorize the request
            authorizationEnforcer.authorize(req, lc);

            // Validate request data
            interceptingValidator.validate(req);

            // Log the first principal of the subject with the request context
            // (getPrincipals() returns a Set, so it cannot be indexed)
            secureLogger.log(
                lc.getSubject().getPrincipals().iterator().next() + " " + rc);
        }

        /**
         * Set the subject in the login context
         */
        public void setLoginContext(ResponseContext resp, Subject s)
                throws Exception {

            // Get an instance of ContextFactory
            ContextFactory factory = ContextFactory.getInstance();

            // Get LoginContext from factory
            LoginContext lc = factory.getContext(Constants.LOGIN_CONTEXT);
            lc.setSubject(s);
            resp.setParameter(Constants.LOGIN_CONTEXT_KEY, lc);
        }
    }

Security Factors and Risks

The Secure Base Action pattern acts as the focal point for enforcing security-related functionality within the Web tier. It is intended as a reusable base class that provides pluggable security functionality into the front end.

The Secure Base Action pattern must adopt high-availability and fault-tolerance strategies to support the underlying security operations and to enhance reliability and performance of the infrastructure. When a failure is detected, it must securely fail over to a redundant infrastructure without jeopardizing any existing inbound requests or their intermediate processing state.

Reality Check

Related Patterns
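
Returning to the inheritance-based strategy described earlier for this pattern, the following is a minimal sketch of how a base class might fix the order of the security steps while leaving the business logic to subclasses. Everything here is an assumption made for illustration: the class names SecuredAction and ViewBalanceAction, the use of a plain Map as the request, and the "user" key standing in for a real authentication check. It does not use Struts or the classes of Example 9-14.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical base class: runs the security steps, then hands over to the subclass. */
abstract class SecuredAction {
    private final List<String> auditTrail = new ArrayList<>();

    /** Declared final so that subclasses cannot skip or reorder the security steps. */
    final String execute(Map<String, String> request) {
        // 1. Authentication (stand-in: a non-empty "user" entry)
        String user = request.get("user");
        if (user == null || user.isEmpty()) {
            throw new SecurityException("not authenticated");
        }
        // 2. Authorization
        if (!isAuthorized(user)) {
            throw new SecurityException("not authorized");
        }
        // 3. Input validation
        validate(request);
        // 4. Logging
        auditTrail.add(user + " -> " + getClass().getSimpleName());
        // 5. Only now run the business logic
        return doExecute(request);
    }

    /** Default policy for this sketch: allow every authenticated user. */
    protected boolean isAuthorized(String user) { return true; }

    /** Default: no extra validation; subclasses may throw IllegalArgumentException. */
    protected void validate(Map<String, String> request) { }

    protected abstract String doExecute(Map<String, String> request);

    List<String> auditTrail() { return auditTrail; }
}

/** Hypothetical concrete action that only supplies policy and business logic. */
class ViewBalanceAction extends SecuredAction {
    @Override
    protected boolean isAuthorized(String user) { return "alice".equals(user); }

    @Override
    protected String doExecute(Map<String, String> request) {
        return "balance for " + request.get("user");
    }
}

class SecuredActionDemo {
    public static void main(String[] args) {
        Map<String, String> request = new HashMap<>();
        request.put("user", "alice");
        System.out.println(new ViewBalanceAction().execute(request));
    }
}
```

The design choice worth noting is the final execute method: a developer writing ViewBalanceAction has no way to reach doExecute without passing through the checks, which is the separation of responsibility the pattern aims for.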

Secure Logger

Problem

All application events and related data must be securely logged for debugging and forensic purposes. This can lead to redundant code and complex logic.

All trustworthy applications require a secure and reliable logging capability. This logging capability may be needed for forensic purposes and must be secured against stealing or manipulation by an attacker. Logging must be centralized to avoid redundant code throughout the code base. All events must be logged appropriately at multiple points during the application's operational life cycle. In some cases, the data that needs to be logged may be sensitive and should not be viewable by unauthorized users. It becomes a critical requirement to protect the logging data from unauthorized users so that the data is not accessible or modifiable by a malicious user who tries to identify the information trail. Without centralized control, logging code tends to be replicated, which makes changes difficult to maintain and the functionality difficult to monitor.

One of the common elements of a successful intrusion is the ability to cover one's tracks. Usually, this means erasing any tell-tale events in various log files. Without a log trail, an administrator has no evidence of the intruder's activities and therefore no way to track the intruder. To prevent an attacker from breaking in again and again, administrators must take precautions to ensure that log files cannot be altered. Cryptographic algorithms can be adopted to ensure data confidentiality and the integrity of the logged data. But the application processing logic required to apply encryption and signatures to the logged data can be complex and cumbersome, further justifying the need to centralize the logger functionality.

Forces

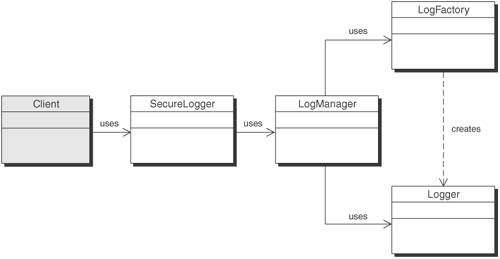

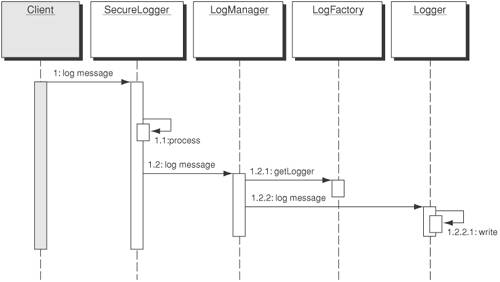

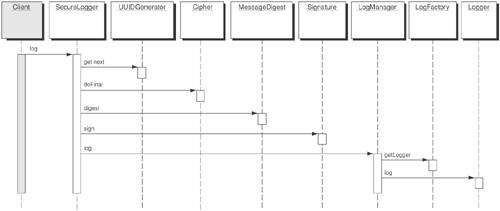

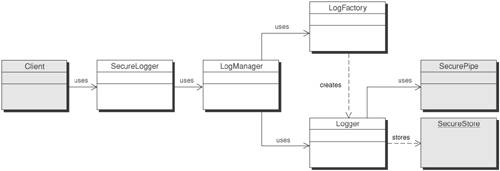

Solution

Use a Secure Logger to log messages in a secure manner so that they cannot be easily altered or deleted and so that events cannot be lost.

The Secure Logger provides centralized control of logging functionality that can be used in various places throughout the application request and response. Centralizing control provides a means of decoupling the implementation details of the logger from the code of developers who will use it throughout the application. The processing of the events can be modified without impacting existing code. For instance, developers can make a single method call in their Java code or JSP code. The Secure Logger takes care of how the events are securely logged in a reliable manner.

Structure

Figure 9-14 depicts a class diagram for Secure Logger.

Figure 9-14. Secure Logger class diagram

Participants and Responsibilities

Figure 9-15 shows a sequence diagram that depicts the structure of the Secure Logger pattern.

Figure 9-15. Secure Logger sequence diagram

A client uses the SecureLogger to log events. The SecureLogger centralizes logging management and encapsulates the security mechanisms necessary for preventing unauthorized log alteration.
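
One way such a mechanism might look is sketched below, anticipating the Secure Data Logger Strategy described later in this section: every entry carries a sequence number, and the number and message are signed together. This is a simplified illustration under stated assumptions, not the book's SecureLogger: the class name SignedLogSketch, the entry layout "sequence|message|signature", the in-memory list used as the log, and the RSA key pair generated on the spot (instead of being loaded from a keystore) are all choices made for this example.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PublicKey;
import java.security.Signature;
import java.util.ArrayList;
import java.util.Base64;
import java.util.List;

/** Hypothetical sketch: log entries of the form "sequence|message|signature". */
class SignedLogSketch {
    private final KeyPair keys;
    private final List<String> entries = new ArrayList<>();
    private long nextSeq = 1;

    SignedLogSketch() {
        try {
            // Assumption: a throwaway key pair; a real logger would load a protected key
            KeyPairGenerator gen = KeyPairGenerator.getInstance("RSA");
            gen.initialize(2048);
            keys = gen.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Signs the sequence number together with the message, then appends the entry. */
    void log(String message) {
        try {
            String payload = nextSeq++ + "|" + message;
            Signature signer = Signature.getInstance("SHA256withRSA");
            signer.initSign(keys.getPrivate());
            signer.update(payload.getBytes(StandardCharsets.UTF_8));
            entries.add(payload + "|"
                + Base64.getEncoder().encodeToString(signer.sign()));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    List<String> entries() { return entries; }

    PublicKey publicKey() { return keys.getPublic(); }

    /** Returns false on a bad signature or a gap in the sequence numbers. */
    static boolean verify(List<String> log, PublicKey key) {
        long expected = 1;
        try {
            for (String entry : log) {
                int seqEnd = entry.indexOf('|');        // end of the sequence number
                int sigStart = entry.lastIndexOf('|');  // Base64 never contains '|'
                if (seqEnd < 0 || sigStart <= seqEnd) return false;
                String payload = entry.substring(0, sigStart);
                if (Long.parseLong(entry.substring(0, seqEnd)) != expected++) return false;
                Signature verifier = Signature.getInstance("SHA256withRSA");
                verifier.initVerify(key);
                verifier.update(payload.getBytes(StandardCharsets.UTF_8));
                byte[] sig = Base64.getDecoder().decode(entry.substring(sigStart + 1));
                if (!verifier.verify(sig)) return false;
            }
            return true;
        } catch (GeneralSecurityException | IllegalArgumentException e) {
            return false; // malformed number, Base64, or signature
        }
    }
}
```

A gap check of this kind detects entries removed from the middle of the log. It does not, on its own, reveal that the most recent entries were truncated, so a deployment would need an additional anchor (for example, a periodically recorded latest sequence number) to cover that case.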

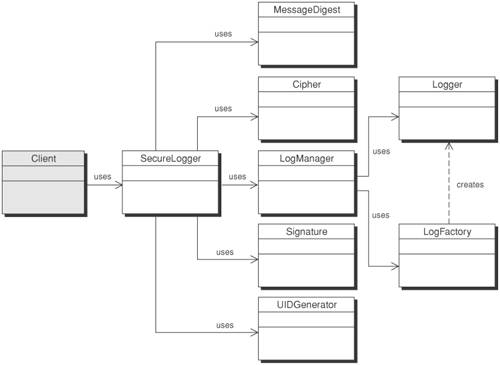

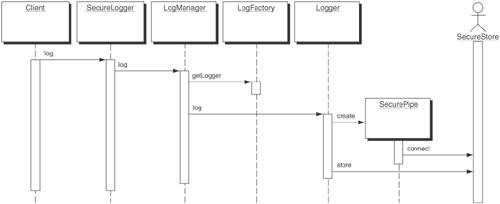

There are two parts to this logging process. The first part involves securing the data to be logged and the second part involves logging the secured data. The SecureLogger class takes care of securing the data and the LogManager class takes care of logging it.

Strategies

There are two basic strategies for implementing a Secure Logger. One strategy is to secure the log itself from being tampered with, so that all data written to it is guaranteed to be correct and complete. This strategy is the Secure Log Store Strategy. The other strategy, the Secure Data Logger Strategy, secures the data so that any alteration or deletion of it can be detected. This works well in situations where you cannot guarantee the security of the log itself.

Secure Data Logger Strategy

The Secure Data Logger Strategy entails preprocessing of the data prior to logging it. After the data is secured in the preprocessing, it is sent to the logger in the usual manner. There are four new classes introduced to help secure the data. Figure 9-16 illustrates the structure of the Secure Logger implemented using a Secure Data Logger Strategy.

Figure 9-16. Secure Logger with Secure Data Logger Strategy class diagram

We use the MessageDigest, Cipher, Signature, and UIDGenerator classes for applying cryptographic mechanisms and performing various functions necessary to guarantee the data logged is confidential and tamperproof. Figure 9-17 shows the sequence of events used to secure the data prior to being logged.

Figure 9-17. Secure Logger with Secure Data Logger Strategy sequence diagram

When you have sensitive data or fear that log entries might be tampered with and can't rely on the security of the infrastructure to adequately protect those entries, it becomes necessary to secure the data itself prior to being logged. That way, even if the log destination (file, database, or message queue) is compromised, the data remains secure and any corruption of the log will become clearly evident. There are three elements to securing the data:

To protect sensitive data, encrypt it using a symmetric key algorithm. Public-key algorithms are too CPU-intensive to use for bulk data. They are better for encrypting and protecting a symmetric key for use with a symmetric key algorithm. Properly protecting the symmetric key can ensure that attackers cannot access sensitive data even if they have access to the logs. For this, the SecureLogger can use an EncryptionHelper class. This class is responsible for encrypting a given string but not for decrypting it. This is an extra security precaution to make it harder for attackers to gain access to that sensitive data. Decryption should only be done outside the application, using an external utility that is not accessible from the application and its residing host.

Data alteration can be prevented by using digitally signed message digests in the same manner that e-mail is signed. A message digest is generated for each message in the log file and then signed. The signature prevents an attacker from modifying the message and creating a subsequent message digest for the altered data. For this operation, the SecureLogger uses MessageDigestHelper and DigitalSignatureHelper classes.

Finally, to detect deletion of data, a sequence number must be used. Using message digests and digital signatures is of no use if the entire log entry, including the signed message, is deleted. To make deletion detectable, each entry must contain a sequence number that is part of the data that gets signed. That way, it will be evident if an entry is missing, since there will be a gap in the sequence numbers. Because the sequence numbers are signed, an attacker would be unable to alter subsequent numbers in the sequence, making it easy for an administrator reviewing the logs to detect deletions. To accomplish this, the SecureLogger uses a UUID [Middleware] pattern.

Secure Log Store Strategy

In the Secure Log Store Strategy, the log itself is secured from tampering. A secure repository houses the log data and can be implemented using a variety of off-the-shelf products or various techniques such as a Secure Pipe. (See the "Secure Pipe Pattern" section later in this chapter.) A Secure Pipe pattern is used to guarantee that the data is not tampered with in transit to the Secure Store. Figure 9-18 illustrates the structure of the Secure Logger pattern implemented using a Secure Log Store Strategy.

Figure 9-18. Secure Logger Pattern with Secure Log Store Strategy class diagram

The Secure Log Store Strategy does not require the data processing that the Secure Data Logger Strategy introduced. Instead, it makes use of a Secure Pipe pattern and a secure datastore (such as a database), represented as the SecureStore object in Figure 9-18. In Figure 9-19, the only change from the main Secure Logger pattern sequence is the introduction of the Secure Pipe pattern.

Figure 9-19. Secure Logger pattern using Secure Pipe

In the Secure Log Store Strategy sequence diagram, depicted in Figure 9-19, Logger establishes a secure connection to the SecureStore using a SecurePipe. The Logger then logs messages normally. The SecureStore is responsible for preventing tampering with the log file. It could be implemented as a database with create-only permissions for the Logger user; a listener on a separate, secure box with write-only capabilities; or any other solution that prevents deletion, modification, or unauthorized creation of log entries.

Consequences

Using the Secure Logger pattern helps in logging all data-related application events, user requests, and responses. It facilitates confidentiality and integrity of log files. In addition, it provides the following benefits:

Sample Code

Example 9-15 shows a sample signer class, Example 9-16 depicts a digest class, and Example 9-17 provides a sample encryptor class. These classes are used by the Secure Logger to sign, digest, and encrypt messages, respectively.

Example 9-15. Signer class

    package com.csp.web;

    import java.security.KeyPair;
    import java.security.PrivateKey;
    import java.security.Signature;
    import java.util.Base64;

    public class Signer {

        // Signing key pair; loading it (for example, from a keystore) is not shown
        private KeyPair keyPair;

        public String sign(String msg) throws Exception {

            // Create a Signature object to use for signing
            Signature signer =
                Signature.getInstance(Constants.SIGNATURE_ALGORITHM);
            PrivateKey privateKey = keyPair.getPrivate();

            // Initialize the Signature object for signing
            signer.initSign(privateKey);
            signer.update(msg.getBytes());

            // Now sign the message
            byte[] signature = signer.sign();

            // Encode the signature using Base64 for logging
            String encodedSignature =
                Base64.getEncoder().encodeToString(signature);
            return encodedSignature;
        }
    }

Example 9-16. Digester class

    package com.csp.web;

    import java.security.MessageDigest;
    import java.util.Base64;

    public class Digester {

        public String digest(String msg) throws Exception {
            MessageDigest md =
                MessageDigest.getInstance(Constants.DIGEST_ALGORITHM);
            md.update(msg.getBytes());
            byte[] digest = md.digest();

            // Encode digest using Base64
            String encodedDigest = Base64.getEncoder().encodeToString(digest);
            return encodedDigest;
        }
    }

Example 9-17. Encryptor class

    package com.csp.web;

    import java.util.Base64;
    import javax.crypto.Cipher;
    import javax.crypto.SecretKey;

    public class Encryptor {

        // DES key retrieved from storage such as a keystore (retrieval not shown)
        private SecretKey desKey;

        public String encrypt(String msg) throws Exception {

            // Create a Cipher and initialize it for encryption
            Cipher desCipher = Cipher.getInstance(Constants.CIPHER_ALGORITHM);
            desCipher.init(Cipher.ENCRYPT_MODE, desKey);

            // Encrypt the message and encode the result using Base64
            byte[] cipherText = desCipher.doFinal(msg.getBytes());
            String encodedCipher =
                Base64.getEncoder().encodeToString(cipherText);
            return encodedCipher;
        }
    }

Security Factors and Risks

The Secure Logger pattern provides the entry point for logging in the application. As such, it has the following security factors and risks associated with it.

Reality Check

Related Patterns
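
Returning to the encryption guidance in the Secure Data Logger Strategy (bulk data under a symmetric key, with a public-key algorithm protecting that key, and no decryption capability inside the application), a minimal sketch might look like this. The class names HybridLogEncryptor and ExternalLogDecryptor, the record layout, and the choice of AES-GCM with RSA-OAEP are assumptions made for this example; Example 9-17 uses a DES key from a keystore instead.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

/** Hypothetical encrypt-only helper: it holds a public key and cannot decrypt. */
class HybridLogEncryptor {
    static final String WRAP_ALGORITHM = "RSA/ECB/OAEPWithSHA-256AndMGF1Padding";
    private final PublicKey recipient;

    HybridLogEncryptor(PublicKey recipient) { this.recipient = recipient; }

    /** Returns "wrappedKey:iv:ciphertext", each part Base64-encoded. */
    String encrypt(String message) {
        try {
            // Fresh symmetric key and IV for the bulk data
            KeyGenerator keyGen = KeyGenerator.getInstance("AES");
            keyGen.init(128);
            SecretKey dataKey = keyGen.generateKey();
            byte[] iv = new byte[12];
            new SecureRandom().nextBytes(iv);

            Cipher aes = Cipher.getInstance("AES/GCM/NoPadding");
            aes.init(Cipher.ENCRYPT_MODE, dataKey, new GCMParameterSpec(128, iv));
            byte[] cipherText = aes.doFinal(message.getBytes(StandardCharsets.UTF_8));

            // The public-key algorithm protects only the small symmetric key
            Cipher rsa = Cipher.getInstance(WRAP_ALGORITHM);
            rsa.init(Cipher.WRAP_MODE, recipient);
            byte[] wrappedKey = rsa.wrap(dataKey);

            Base64.Encoder b64 = Base64.getEncoder();
            return b64.encodeToString(wrappedKey) + ":" + b64.encodeToString(iv)
                + ":" + b64.encodeToString(cipherText);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}

/** Stand-in for the external utility; it would run outside the application host. */
class ExternalLogDecryptor {
    static KeyPair newKeyPair() {
        try {
            KeyPairGenerator gen = KeyPairGenerator.getInstance("RSA");
            gen.initialize(2048);
            return gen.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    static String decrypt(String record, PrivateKey privateKey) {
        try {
            String[] parts = record.split(":");
            Base64.Decoder b64 = Base64.getDecoder();
            Cipher rsa = Cipher.getInstance(HybridLogEncryptor.WRAP_ALGORITHM);
            rsa.init(Cipher.UNWRAP_MODE, privateKey);
            SecretKey dataKey = (SecretKey) rsa.unwrap(
                b64.decode(parts[0]), "AES", Cipher.SECRET_KEY);
            Cipher aes = Cipher.getInstance("AES/GCM/NoPadding");
            aes.init(Cipher.DECRYPT_MODE, dataKey,
                new GCMParameterSpec(128, b64.decode(parts[1])));
            return new String(aes.doFinal(b64.decode(parts[2])), StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Generating and wrapping a new key for every message keeps the sketch short but is costly; an actual logger would more likely wrap one data key per session or per log file. The point illustrated is only the split of capabilities: the application side never sees the private key.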

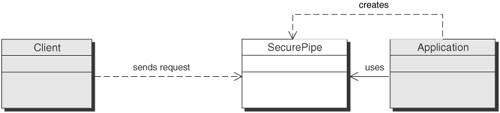

Secure Pipe

Problem

You need to provide privacy and prevent eavesdropping and tampering of client transactions caused by man-in-the-middle attacks.

Web-based transactions are often exposed to eavesdropping, replay, and spoofing attacks. Anytime a request goes over an insecure network, the data can be intercepted or exposed by unauthorized users. Even within the confines of a VPN, data is exposed at the endpoint, such as inside of an intranet. When exposed, it is subject to disclosure, modification, or duplication. Many of these types of attacks fall into the category of man-in-the-middle attacks. Replay attacks capture legitimate transactions, duplicate them, and resend them. Sniffer attacks just capture the information in the transactions for use later. Network sniffers are widely available today and have evolved to a point where even novices can use them to capture unencrypted passwords and credit card information. Other attacks capture the original transactions, modify them, and then send the altered transactions to the destination.

This is a common problem shared by all applications that do business over an untrusted network, such as the Internet. For simple Web applications that just serve up Web pages, it is not cost-effective to address these potential attacks, since there is no reason for attackers to carry out such an attack (other than for defacement of the pages) and therefore the risk is relatively low. But, if you have an application that requires sending sensitive data (such as a password) over the wire, you need to protect it from such an attack.

Forces

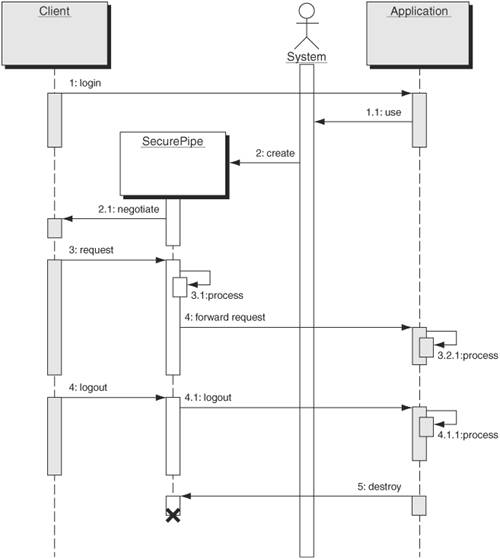

Solution

Use a Secure Pipe to guarantee the integrity and privacy of data sent over the wire.

A Secure Pipe provides a simple and standardized way to protect data sent across a network. It does not require application-layer logic and therefore reduces the complexity of implementation. In some instances, the task of securing the pipe can actually be moved out of the application and even off of the hardware platform altogether. Because a Secure Pipe relies on encrypting and decrypting all of the data sent over it, there are performance issues to consider. A Secure Pipe allows developers to delegate processing to hardware accelerators, which are designed especially for the task.

Structure

Figure 9-20 depicts a class diagram of the Secure Pipe pattern in relation to an application.

Figure 9-20. Secure Pipe Pattern class diagram

Participants and Responsibilities

Figure 9-21 shows a sequence diagram depicting use of the Secure Pipe pattern.

Figure 9-21. Secure Pipe sequence diagram

The following participants are illustrated in the sequence diagram shown in Figure 9-21.

In the sequence shown in Figure 9-21, a client needs to connect to an application over a secure communication line. The sequence diagram shows how the client and the application communicate using the Secure Pipe. The interaction is as follows.

There are two components of the Secure Pipe pattern: the client-side component and the server-side component. These components work together to establish a secure communication. Typically, these components would be SSL or TLS libraries that the client's Web browser and the application use for secure communications.

Strategies

There are several strategies for implementing a Secure Pipe pattern, each with its own set of benefits and drawbacks. Those strategies include:

Web-Server-Based SSL

All major Web-server vendors support SSL. All it takes to implement SSL is to obtain or create server credentials from a CA, including the server X.509 certificate, and configure the Web server to use SSL with these credentials. Before enabling SSL, the Web server must be security-hardened to prevent compromise of the server's SSL credentials. Since these credentials would be stored on the Web server, if that server were compromised, an attacker could gain access to the server's credentials (including the private key associated with the certificate) and would then be able to impersonate the server.

Hardware-Based Cryptographic Card Strategy

To enhance SSL performance, specialized hardware, referred to as SSL accelerators, can be used to assist with cryptographic computations. When a new SSL session is established, the Web server will use the SSL accelerator hardware to accept the SSL connection and perform the necessary cryptographic calculations for verifying certificates, encrypting session keys, and so forth instead of having the server CPU perform these calculations in software. SSL acceleration improves Web application performance by relieving servers of complex public key operations, bulk encryption, and high SSL traffic volumes.

Network Appliance Strategy

A network appliance is a stand-alone piece of hardware dedicated to a particular purpose. In this strategy, we refer to network appliances that act as dedicated SSL/TLS endpoints. They make use of hardware-based encryption algorithms and optimized network ports. Network appliances move the responsibility for establishing secure connections further out into the perimeter and provide greater performance. They sit out in front of the Web servers and promote a greater degree of reusability, since they can service multiple Web servers and applications. However, the security gap between the Secure Pipe endpoint and the application widens as the appliance is moved logically and physically further away from the application endpoint on the network.

Application Layer Using JSSE Strategy

In some cases, Secure Pipe can be implemented in the application layer by making use of the Java Secure Socket Extension (JSSE) framework. JSSE enables secure network communications using the Secure Sockets Layer (SSL) and Transport Layer Security (TLS) protocols. It includes functionality for data encryption, server authentication, message integrity, and optional client authentication. Example 9-18 shows how to create secure RMI connections by implementing an RMI Secure Socket Factory that provides SSL connections for the RMI protocol, which provides a secure tunnel.

Consequences

Sample Code

Example 9-18. Creating a Secure RMI Server Socket Factory that Uses SSL

    package com.csp.web.securepipe;

    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.Serializable;
    import java.net.ServerSocket;
    import java.rmi.server.RMIServerSocketFactory;
    import java.security.KeyStore;
    import javax.net.ssl.KeyManagerFactory;
    import javax.net.ssl.SSLContext;
    import javax.net.ssl.SSLServerSocketFactory;

    /**
     * This class creates RMI SSL connections.
     */
    public class RMISSLServerSocketFactory
            implements RMIServerSocketFactory, Serializable {

        SSLServerSocketFactory ssf = null;

        /**
         * Constructor.
         */
        public RMISSLServerSocketFactory(char[] passphrase) {

            // Set up key manager to do server authentication
            SSLContext ctx;
            KeyManagerFactory kmf;
            KeyStore ks;

            try {
                ctx = SSLContext.getInstance("SSL");

                // Retrieve an instance of an X509 Key manager
                kmf = KeyManagerFactory.getInstance("SunX509");

                // Get the keystore type.
                String keystoreType =
                    System.getProperty("javax.net.ssl.keyStoreType");
                ks = KeyStore.getInstance(keystoreType);

                // The server's own credentials live in the keystore,
                // not in the truststore
                String keystoreFile =
                    System.getProperty("javax.net.ssl.keyStore");

                // Load the keystore and release the file handle afterward.
                FileInputStream in = new FileInputStream(keystoreFile);
                try {
                    ks.load(in, passphrase);
                } finally {
                    in.close();
                }
                kmf.init(ks, passphrase);
                passphrase = null;

                // Initialize the SSL context.
                ctx.init(kmf.getKeyManagers(), null, null);

                // Set the Server Socket Factory for getting SSL connections.
                ssf = ctx.getServerSocketFactory();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        /**
         * Creates an SSL Server socket and returns it.
         */
        public ServerSocket createServerSocket(int port) throws IOException {
            ServerSocket ss = ssf.createServerSocket(port);
            return ss;
        }
    }

Example 9-19. Creating a secure RMI client socket factory that uses SSL

    package com.csp.web.securepipe;

    import java.io.IOException;
    import java.io.Serializable;
    import java.net.Socket;
    import java.rmi.server.RMIClientSocketFactory;
    import javax.net.ssl.SSLSocket;
    import javax.net.ssl.SSLSocketFactory;

    public class RMISSLClientSocketFactory
            implements RMIClientSocketFactory, Serializable {

        public Socket createSocket(String host, int port) throws IOException {
            SSLSocketFactory factory =
                (SSLSocketFactory) SSLSocketFactory.getDefault();
            SSLSocket socket = (SSLSocket) factory.createSocket(host, port);
            return socket;
        }
    }

Security Factors and Risks

The Secure Pipe pattern is an integral part of most Web server infrastructures because we make use of SSL/TLS between the client and the Web server. Without it, mechanisms for ensuring data privacy and integrity must be performed in the application itself, leading to increased complexity, reduced manageability, and the inability to push the responsibility down into the infrastructure.

Infrastructure

Web Tier

Reality Check

Related Patterns
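
As a closing illustration for the Application Layer Using JSSE Strategy, the sketch below shows one way a client might open a Secure Pipe with JSSE while also asking the socket to check the server's host name. A raw SSLSocket, such as the one created in Example 9-19, does not perform that check unless an endpoint identification algorithm is set. The class name SecurePipeClient and its methods are assumptions made for this example; only standard javax.net.ssl calls are used.

```java
import java.io.IOException;
import java.security.GeneralSecurityException;
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLParameters;
import javax.net.ssl.SSLSocket;

/** Hypothetical client-side helper for the Secure Pipe pattern. */
class SecurePipeClient {

    /** Builds a TLS context that uses the platform's default trust settings. */
    static SSLContext newContext() {
        try {
            SSLContext ctx = SSLContext.getInstance("TLS");
            // nulls select the default key managers, trust managers, and randomness
            ctx.init(null, null, null);
            return ctx;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Default parameters plus host name verification during the handshake. */
    static SSLParameters strictParameters(SSLContext ctx) {
        SSLParameters params = ctx.getDefaultSSLParameters();
        params.setEndpointIdentificationAlgorithm("HTTPS");
        return params;
    }

    /** Connects, applies the strict parameters, and completes the handshake. */
    static SSLSocket open(String host, int port) throws IOException {
        SSLContext ctx = newContext();
        SSLSocket socket = (SSLSocket) ctx.getSocketFactory().createSocket(host, port);
        socket.setSSLParameters(strictParameters(ctx));
        // Fails with an SSLException if the certificate chain or the
        // host name does not check out
        socket.startHandshake();
        return socket;
    }
}
```

A caller would use SecurePipeClient.open with the host name and port of the server, then read and write through the returned socket's streams as with any other socket. Setting the parameters before startHandshake matters, because the handshake is where the certificate and host name are checked.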

Secure Service Proxy

Problem

Integrating newer security protocols into existing applications can prove cumbersome and introduce risk, especially if the existing applications are legacy systems.

Often, you find that you need to adapt existing systems with integrated security protocols to newer security protocols. This is especially true when wrapping existing systems as services in a Service Oriented Architecture (SOA). You want to expose the existing system as a service that interacts with newer services, but the security protocols differ. You do not want to rewrite the existing system and therefore must integrate its existing security protocol.

Forces

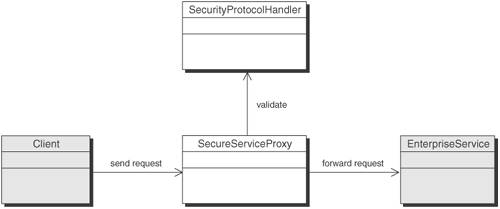

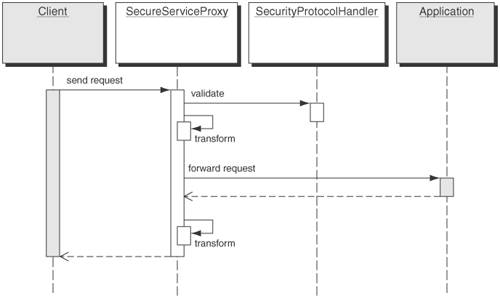

Solution

Use Secure Service Proxy to provide authentication and authorization externally by intercepting requests for security checks and then delegating the requests to the appropriate service.

A Secure Service Proxy intercepts all requests from the client, identifies the requested service, enforces the security policy as mandated by the specific service, optionally transforms the request from the inbound protocol to that expected by the destination service, and finally forwards the request to the destination service. On the return path, it transforms the results from the protocol and format used by the service to the format expected by the requesting client. It could also choose to maintain the security context, created in the initial request, in a session created for the client with the intent of using it in future requests.

The Secure Service Proxy can be configured on the corporate perimeter to provide authentication, authorization, and other security services that enforce policy for security-unaware lightweight or legacy enterprise services. While the Secure Service Proxy pattern acts similarly to an Intercepting Web Agent pattern, it is more general: it is not restricted to HTTP URL-based access control, and it can delegate service requests to any service using any transport protocol. It externalizes the addition of security logic to existing applications that have been implemented and deployed already and integrates cleanly with newer applications that have been developed without security.

Structure

The Secure Service Proxy pattern allows developers to decouple the protocol details from the service implementation. This allows multiple clients using different network protocols to access the same enterprise service that expects one particular protocol. For instance, you may have an enterprise service that expects only HTTP requests. If you want to add additional protocol support, you can use a Secure Service Proxy, rather than building each protocol handler into the service. Each protocol may have its own way of handling security, and therefore the Secure Service Proxy can delegate security handling of each protocol to its appropriate protocol handler.

For example, using a Secure Service Proxy in an enterprise messaging scenario involves transforming message formats, such as converting an HTTP or an IIOP request from the client to a Java Message Service (JMS) message expected by a message-based service and vice versa. In so doing, the proxy can choose to use a channel that connects to the destination service, further decoupling the service implementation details from the proxy. Figure 9-22 is a class diagram of a Secure Service Proxy pattern.

Figure 9-22. Secure Service Proxy class diagram

Participants and Responsibilities

Figure 9-23 shows the sequence diagram for Secure Service Proxy.

Figure 9-23. Secure Service Proxy sequence diagram

In the sequence shown in Figure 9-23, a Secure Service Proxy provides security to an Enterprise Service. The following are the participants.

In Figure 9-23, the following sequence takes place.

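As a rough illustration of the flow described in the Solution (intercept, identify the service, enforce policy, transform, forward, and transform the reply), the following is a minimal sketch rather than the pattern's reference implementation. Every name in it (`SecureServiceProxySketch`, `ProxyRequest`, `EnterpriseService`, `SecurityPolicy`, the status strings, and the toy `<msg>` format) is a hypothetical stand-in chosen for this example, and authentication is assumed to have happened already, leaving a principal name on the request.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Illustrative sketch only: all types and names below are hypothetical.
class SecureServiceProxySketch {

    /** Protocol-neutral view of an inbound request. */
    static final class ProxyRequest {
        final String principal;            // null if the caller is not authenticated
        final String serviceName;          // which back-end service is wanted
        final Map<String, String> params;  // inbound parameters, e.g. HTTP form fields

        ProxyRequest(String principal, String serviceName, Map<String, String> params) {
            this.principal = principal;
            this.serviceName = serviceName;
            this.params = params;
        }
    }

    /** A security-unaware back-end service that expects one message format. */
    interface EnterpriseService {
        String execute(String message);
    }

    /** The policy the proxy enforces on the service's behalf. */
    interface SecurityPolicy {
        boolean isAuthorized(String principal, String serviceName);
    }

    private final Map<String, EnterpriseService> services = new HashMap<String, EnterpriseService>();
    private final SecurityPolicy policy;

    SecureServiceProxySketch(SecurityPolicy policy) {
        this.policy = policy;
    }

    void register(String serviceName, EnterpriseService service) {
        services.put(serviceName, service);
    }

    /** Returns a status code followed by the service result, or an error status. */
    String handle(ProxyRequest request) {
        // 1. Reject unauthenticated callers before revealing anything else.
        if (request.principal == null) {
            return "401 unauthenticated";
        }
        // 2. Identify the requested service.
        EnterpriseService target = services.get(request.serviceName);
        if (target == null) {
            return "404 unknown service";
        }
        // 3. Enforce the policy for that service.
        if (!policy.isAuthorized(request.principal, request.serviceName)) {
            return "403 forbidden";
        }
        // 4. Transform the inbound format into the one the service expects.
        String outbound = toServiceMessage(request.params);
        // 5. Forward the request, then shape the result for the client.
        return "200 " + target.execute(outbound);
    }

    /** Toy translation: parameters become child elements sorted by name. No escaping is done. */
    static String toServiceMessage(Map<String, String> params) {
        StringBuilder sb = new StringBuilder("<msg>");
        for (Map.Entry<String, String> e : new TreeMap<String, String>(params).entrySet()) {
            sb.append('<').append(e.getKey()).append('>')
              .append(e.getValue())
              .append("</").append(e.getKey()).append('>');
        }
        return sb.append("</msg>").toString();
    }
}
```

The security checks run before any translation or forwarding, so a rejected request never reaches the service. A real proxy would replace the toy translation with proper SOAP or JMS message construction and would validate and escape the inbound parameters.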
Strategies

The Secure Service Proxy can represent a single service or act as a service coordinator, orchestrating multiple services. A Secure Service Proxy may act as a façade exposing a coarse-grained interface to many fine-grained services, coordinating the interaction between those services, maintaining security context and transaction state between service invocations, and transforming the output of one service to the input format expected by another. This spares the client from code changes when the service implementations and interfaces change over time.

Single-Service Secure Service Proxy Strategy

The Single-Service Secure Service Proxy Strategy acts as a router to a single service. It performs message authentication, authorization, and message translation. Upon receiving a message, it authenticates and authorizes it. Then, depending on the inbound protocol and the protocol expected by the service, it performs the necessary transformation. For example, the service may be expecting a SOAP message and the client may be sending an HTTP request. The Secure Service Proxy extracts the request parameters, generates a SOAP message with those parameters, and forwards the message to the service. In effect, the Secure Service Proxy has translated the security protocol used by the client to that expected by the service.

This is also useful for retrofitting newer security protocols to legacy applications. If you want to provide a Web service façade to an existing application that expects a security token in the form of a cookie, you need to either adapt the Web services security protocol requirement (e.g., a SAML token) to the legacy format or rewrite the application. Since you may not be able to change the code of the legacy application, or changing it may prove too cumbersome, you are better off using the Single-Service Secure Service Proxy Strategy. That way, the Secure Service Proxy can perform the necessary translation, independent of the existing service. This reduces effort and complexity and is less likely to introduce bugs or security holes.

Multi-Service Controller Secure Service Proxy Strategy

The Multi-Service Secure Service Proxy Strategy is similar to the Single-Service Secure Service Proxy Strategy. However, it manages state between service calls and provides multi-protocol translation for multiple clients and services. It therefore needs to know which service it is translating for and must apply the appropriate transformation. In this case, you may have some services that expect SOAP messages, some that expect HTTP requests, and some that expect IIOP requests. In the Multi-Service Secure Service Proxy Strategy, the Secure Service Proxy becomes a state-based router. It may translate between several inbound and outbound protocols. This is often desirable when the translation work is so complex that you do not want to create multiple single-service secure proxies or maintain multiple code bases of largely redundant code. Maintaining multiple code bases increases the risk of fixing a security hole in one of them and then failing to migrate the fix to the others.

Sample Code

Example 9-20 provides sample code for the Single-Service Secure Service Proxy Strategy.

Example 9-20.
Secure Service Proxy Single Service Strategy Sample Code

```java
package com.csp.web;

import java.io.IOException;
import javax.security.auth.login.LoginContext;
import javax.servlet.ServletException;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import javax.servlet.http.HttpSession;

// ProxyFactory, AuthenticationProvider, AuthorizationProvider, and
// SOAPService are application-specific helpers that this sample does not
// show. The calls on MessageFactory and SOAPMessage are kept as the sample
// wrote them; treat them as placeholders for the application's own
// message-translation code rather than as SAAJ (javax.xml.soap) signatures.

public class ServiceProxyEndpoint extends HttpServlet {

    /**
     * Process the HTTP POST request.
     */
    public void doPost(HttpServletRequest request,
                       HttpServletResponse response)
            throws ServletException, IOException {

        // Get the appropriate service proxy based on the request parameter
        // which contains the service identifier
        SecureServiceProxy proxy =
            ProxyFactory.getProxy(request.getQueryString());
        ((HTTPProxy) proxy).init(request, response);

        // Make the request pass through security validation
        if (!proxy.validateSecurityContext(request)) {
            request.getRequestDispatcher("unauthorizedMessage")
                   .forward(request, response);
            // Stop here so an unauthorized request never reaches the service
            return;
        }

        // Have the proxy process the request
        proxy.process();

        // Finally, send the response back to the client
        proxy.respond();
    }
}

// This interface must be implemented by all service proxies.
public interface SecureServiceProxy {
    public boolean validateSecurityContext(HttpServletRequest request);
    public void process();
    public void respond() throws IOException;
}

// This interface, which caters to processing and responding to HTTP
// requests, must be implemented by an HTTP proxy.
public interface HTTPProxy extends SecureServiceProxy {
    public void init(HttpServletRequest request,
                     HttpServletResponse response);
}

/**
 * This is a sample proxy class that uses HTTP for client-to-proxy
 * communication and SOAP for proxy-to-service communication.
 * It is responsible for security validations and message translations
 * that involve marshalling and unmarshalling messages from one format
 * to another.
 */
public class SimpleSOAPServiceSecureProxy implements HTTPProxy {

    private HttpServletRequest request;
    private HttpServletResponse response;
    private SOAPMessage input;
    private SOAPMessage output;

    public void init(HttpServletRequest request,
                     HttpServletResponse response) {
        this.request = request;
        this.response = response;
    }

    // Validates the security credentials in the request
    public boolean validateSecurityContext(HttpServletRequest request) {
        // Do not create a session for a caller that does not have one
        HttpSession session = request.getSession(false);
        if (session == null)
            return false;

        LoginContext lc =
            (LoginContext) session.getAttribute("LoginContext");
        if (lc == null)
            return false;
        if (!AuthenticationProvider.verify(lc))
            return false;
        if (!AuthorizationProvider.authorize(lc, request))
            return false;
        return true;
    }

    // Translates the HTTP request into a SOAP message and invokes the service
    public void process() {
        MessageFactory factory = MessageFactory.newInstance();
        input = factory.createSOAPMessage(request);
        SOAPService service =
            SOAPService.getService(request.getParameter("action"));
        output = service.execute(input);
    }

    // Writes the translated service result back to the client
    public void respond() throws IOException {
        response.getWriter().write(output.getHTTPResponse());
    }
}
```

Consequences

Security Factors and Risks

Reality Check

Related Patterns

Intercepting Web Agent

Problem

Retrofitting authentication and authorization into an existing Web application is cumbersome and costly.

It is often necessary to provide authentication and authorization for an existing application or to retrofit an application that has already been designed with a security architecture. Security is often overlooked or postponed until after the functional pieces of the application have been designed. After an application is deployed, or after it has been mostly implemented, it is very difficult to implement the authentication, authorization, and auditing mechanisms.

Forces

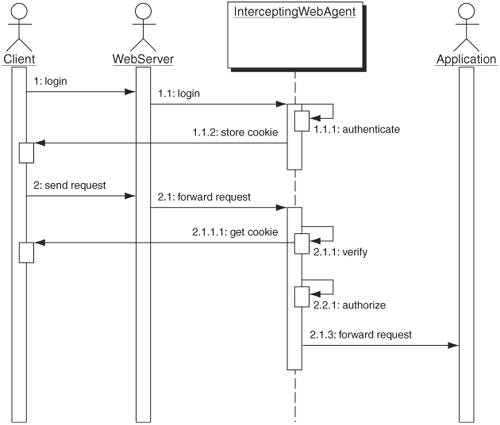

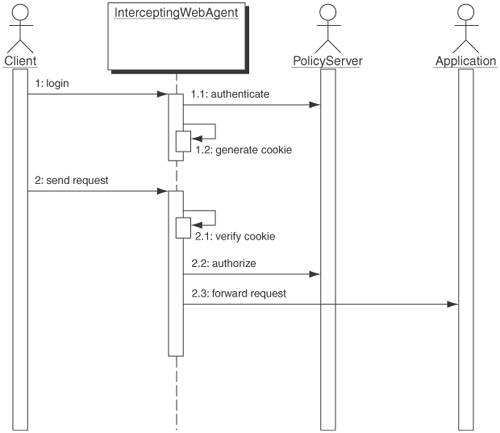

Solution

Use an Intercepting Web Agent to provide authentication and authorization external to the application by intercepting requests before they reach the application.

Using an Intercepting Web Agent protects the application by providing authentication and authorization of requests outside the application. For example, suppose you inherit an application with little or no security and are tasked with providing proper authentication and authorization. Rather than attempt to modify the code or rewrite the Web tier, use an Intercepting Web Agent to provide the proper protection for the application. The Intercepting Web Agent can be installed on the Web server, where it authenticates and authorizes incoming requests by intercepting them and enforcing access control policy.

Decoupling security from the application is an ideal approach to securing existing applications that cannot secure themselves or that are too difficult to modify. It also provides centralized management of the security-related components. Policy and the details of its implementation are enforced outside the application and can therefore be changed, usually without affecting the application. Third-party products from a variety of vendors provide security using the Intercepting Web Agent pattern.

The Intercepting Web Agent improves maintainability by isolating application logic from security logic. Typically, the implementation requires no code changes, just configuration. It also increases application performance by moving security-related processing out of the application and onto the Web server. Requests that are not properly authenticated or authorized are rejected at the Web server and thus use no extra cycles in the application.

Structure

Figure 9-24 is a class diagram of an Intercepting Web Agent.

Figure 9-24. Intercepting Web Agent class diagram

Participants and Responsibilities

Figure 9-25 shows the sequence diagram for the Intercepting Web Agent.

Figure 9-25. Intercepting Web Agent sequence diagram

Figure 9-25 takes us through a typical sequence of events for an application employing an Intercepting Web Agent. The Intercepting Web Agent is located either on the Web server or between the Web server and the application, external to the application. The Web Server delegates handling of the requests for the application to the Intercepting Web Agent. It, in turn, checks authentication and authorization of the requests before forwarding to the application itself. When attempting to access the application, the client will be prompted by the Intercepting Web Agent to log in. Figure 9-25 illustrates the following sequence of events:

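The interception decision described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any particular vendor's agent works: the class `InterceptingWebAgentSketch`, the `Decision` values, the prefix-based policy table, and the in-memory session map are all hypothetical, and credential verification at login is omitted.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.UUID;

// Illustrative sketch only: all names and the policy model are hypothetical.
class InterceptingWebAgentSketch {

    enum Decision { FORWARD, REDIRECT_TO_LOGIN, DENY }

    // Protected URL prefix -> users allowed to access it.
    private final Map<String, Set<String>> protectedPrefixes =
        new LinkedHashMap<String, Set<String>>();

    // Session cookie value -> authenticated user.
    private final Map<String, String> sessions = new HashMap<String, String>();

    void protect(String urlPrefix, String... allowedUsers) {
        protectedPrefixes.put(urlPrefix, new HashSet<String>(Arrays.asList(allowedUsers)));
    }

    /** Issues a session cookie value. Checking the user's credentials is omitted here. */
    String login(String user) {
        String token = UUID.randomUUID().toString();
        sessions.put(token, user);
        return token;
    }

    /** Decides what to do with a request before the application ever sees it. */
    Decision intercept(String path, String sessionCookie) {
        // Find the first protected prefix that covers this path.
        Set<String> allowed = null;
        for (Map.Entry<String, Set<String>> e : protectedPrefixes.entrySet()) {
            String prefix = e.getKey();
            if (path.equals(prefix) || path.startsWith(prefix + "/")) {
                allowed = e.getValue();
                break;
            }
        }
        // Resources outside every protected prefix pass straight through.
        if (allowed == null) {
            return Decision.FORWARD;
        }
        // No valid session: prompt the client to log in.
        String user = (sessionCookie == null) ? null : sessions.get(sessionCookie);
        if (user == null) {
            return Decision.REDIRECT_TO_LOGIN;
        }
        // Authenticated: enforce the access control policy.
        return allowed.contains(user) ? Decision.FORWARD : Decision.DENY;
    }
}
```

Because the decision is made entirely from the request path and cookie, the protected application needs no code changes. A production agent would also normalize the path before matching and expire sessions.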
Strategies

External Policy Server Strategy

The External Policy Server Strategy is the strategy that most third-party vendors implement. With an External Policy Server Strategy, user and policy data are stored externally to the Web server. This is done for a variety of reasons.

The External Policy Server Strategy provides centralized storage and management of user and policy information that can be accessed by all Intercepting Web Agents on different Web servers. Using an external policy server, performance can be improved through caching. Load balancing and failover are possible due to the use of cookies and the separation of authentication and authorization from particular Web Agent instances. The External Policy Server itself must be replicated. Figure 9-26 depicts the interaction between a client and an Intercepting Web Agent that is protecting an application.

Figure 9-26. Intercepting Web Agent using External Policy Server sequence diagram

The sequence of events for an Intercepting Web Agent using an external Policy Server is:

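The caching mentioned above can be pictured as a small client that sits inside each agent and remembers recent decisions from the policy server. The sketch below is an assumption-laden illustration, not a vendor API: `CachingPolicyClientSketch`, the `PolicyServer` interface, and the time-to-live scheme are hypothetical, and the current time is passed in explicitly to keep the example deterministic.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative sketch only: all names and the caching scheme are hypothetical.
class CachingPolicyClientSketch {

    /** Stand-in for a remote call to the external policy server. */
    interface PolicyServer {
        boolean isAllowed(String user, String resource);
    }

    /** A remembered decision and the time at which it stops being trusted. */
    private static final class CachedDecision {
        final boolean allowed;
        final long expiresAtMillis;

        CachedDecision(boolean allowed, long expiresAtMillis) {
            this.allowed = allowed;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final PolicyServer server;
    private final long ttlMillis;
    // Not thread-safe: a real agent would need a concurrent map here.
    private final Map<String, CachedDecision> cache = new HashMap<String, CachedDecision>();

    CachingPolicyClientSketch(PolicyServer server, long ttlMillis) {
        this.server = server;
        this.ttlMillis = ttlMillis;
    }

    /** Answers from the cache while the entry is fresh; otherwise asks the policy server. */
    boolean isAllowed(String user, String resource, long nowMillis) {
        String key = user + "\n" + resource;
        CachedDecision cached = cache.get(key);
        if (cached != null && nowMillis < cached.expiresAtMillis) {
            return cached.allowed;
        }
        boolean allowed = server.isAllowed(user, resource);
        cache.put(key, new CachedDecision(allowed, nowMillis + ttlMillis));
        return allowed;
    }
}
```

The time-to-live is a trade-off: a longer value saves round trips to the policy server, but a revoked permission can remain in effect on an agent until its cached entry expires.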
Consequences

Using the Intercepting Web Agent, developers and architects gain the following: