Chapter 16: Closing Thoughts About Design for Six Sigma (DFSS)

Design for Six Sigma (DFSS) is a breakthrough strategy for improvement as well as for customer satisfaction. In the new millennium, it is both the most advantageous and an economical way to plan. Fundamentally, DFSS is a four-step approach: it recognizes the customer, progressively builds on the system concept for robustness in product or service development, and finally tests and verifies the results against the design. Some of the essential tools used in each step of DFSS are:

- Define
  - Customer understanding
  - Market research
  - Kano model
  - Organizational knowledge
  - Target setting
- Characterize
  - Concept selection
  - Pugh selection
  - Value analysis
  - System diagram
  - Structure matrix
  - Functional flow
  - Interface
  - QFD
  - TRIZ
  - Conjoint analysis
  - Robustness
  - Reliability checklist
  - Signal process flow diagrams
  - Axiomatic design
  - P-diagram
  - Validation
  - Verification
  - Specifications
- Optimize
  - Parameter and tolerance design
  - Simulation
  - Taguchi
  - Statistical tolerancing
  - Quality loss function (QLF)
  - Design and process failure mode and effects analysis (FMEA)
  - Robustness
  - Reliability checklist
  - Process capability
  - Gage R&R
  - Control plan
- Verify
  - Assessment (validation and verification scorecards)
  - Design verification plan and report
  - Robustness and reliability
  - Process capability
  - Gage R&R
  - Control plan

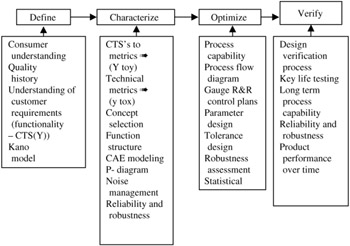

The concept of DFSS may be translated into the model shown in Figure 16.1. This model not only identifies the DCOV components (define, characterize, optimize, verify) but also identifies the key characteristics of each stage.

Figure 16.1: The DFSS model.

To understand and appreciate how and why this model works, one must understand the purpose and the deliverables of each stage. So, let us summarize what DFSS is all about.

In the Define (D) stage, it is imperative to make sure that the customer is understood. The "spoken" and the "unspoken" requirements must be accounted for, and then the critical-to-satisfaction (CTS) drivers are defined. It is very tempting to jump right into the Ys without really knowing which characteristics (or functionalities) are critical to the customer. Unless these are understood, establishing operating window(s) for the Ys will be fruitless.

So the question then is, "How do we get to this point?" The answer in general terms (the specific answer depends on the organization and its product or service) is that the inputs must be developed from a variety of sources, including but not limited to the following (the order does not matter):

- Consumer understanding
- Kano model application
- Regulatory requirements
- Corporate requirements
- Quality/customer satisfaction history
- Functional and serviceability expectations
- Understanding of the integration targets process
- Brand profiler/DNA
- Benchmarking
- Quality Function Deployment (QFD)
- Product Design Specifications (PDS)
- Business strategy
- Competitive environment
- Market segmentation
- Technology assessment

Once these inputs have been identified, developed, and understood by the DFSS team, the "functionalities" can be translated into the Ys, and the iteration process begins. How is this done? By making sure that all the active individuals participate and have ownership of the project as well as the technical knowledge. Specifically, in this stage the owners of the DFSS project look for correlations between what they expect and what they have found in their research. Thus the search for a "transformation function" begins, and the journey to improvement starts formally. Some of the steps to identify the Ys are:

- Define customer and product needs/requirements.
- Relate needs/requirements to customer satisfaction; benchmark.
- Prioritize needs/requirements to determine CTS Ys.
- Review and develop consensus.
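The prioritization step can be made concrete with a QFD-style weighting, one common way (not necessarily the only one the team would use) to rank candidate CTS Ys. In this minimal sketch, each customer need carries an importance weight, and each need-to-Y relationship is scored on the conventional 9/3/1 scale. The needs, the Y names, and every number are hypothetical placeholders.

```python
# Hypothetical QFD-style prioritization of candidate CTS Ys.
# Importance weights (1-5) and relationship scores (9 strong, 3 medium,
# 1 weak, 0 none) are illustrative assumptions only.

needs = {"quiet operation": 5, "long battery life": 4, "light weight": 2}

# relationship[need][Y] = strength of the link between a need and a candidate Y
relationship = {
    "quiet operation":   {"noise_dB": 9, "run_time_h": 0, "mass_kg": 1},
    "long battery life": {"noise_dB": 0, "run_time_h": 9, "mass_kg": 3},
    "light weight":      {"noise_dB": 0, "run_time_h": 3, "mass_kg": 9},
}

def technical_importance(needs, relationship):
    """Sum of (need weight x relationship strength) for each candidate Y."""
    scores = {}
    for need, weight in needs.items():
        for y, strength in relationship[need].items():
            scores[y] = scores.get(y, 0) + weight * strength
    return scores

scores = technical_importance(needs, relationship)
ranked = sorted(scores, key=scores.get, reverse=True)  # highest priority first
print(ranked, scores)
```

The highest-scoring Ys are only candidates for CTS status. The team still has to review them and reach consensus, as the last step requires.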

Once the technical team has finished its review and reached a consensus for "action," the following deliverables are expected:

- Kano diagram
- Targets and ranges for CTS Ys
- Y relationship to customer satisfaction
- Benchmarked CTSs
- CTS scorecard

At this point, one of the most important steps must be completed before the DFSS team officially moves into the next stage, characterize. This step is the evaluation process: a thorough question-and-answer session that focuses on what has transpired in this stage. It is important to ask questions such as: Are we sure that our CTS Ys are really associated with customer satisfaction? Did we review all attributes and functionalities? And so on. Typical tools that form the basis of the evaluation are:

- Consumer insight
- Market research
- Quality history
- Kano analysis
- QFD
- Regression modeling
- Conjoint analysis

When everyone is satisfied and consensus has been reached, the team officially moves into the characterize (C) stage. In this stage, all participants must make sure that the system is understood. Building on this understanding, the team begins to formalize the concepts. The process for this development is as follows:

- Flow CTS Ys down to lower-level y's, e.g., $Y = f(y_1, y_2, \ldots, y_n)$.
- Relate the y's to critical-to-quality (CTQ) parameters (x's and n's), $y = f(x_1, \ldots, x_k, n_1, \ldots, n_j)$, where x is a characteristic and n is a noise factor.
- Characterize robustness opportunities (optimize characteristics in the presence of noise).
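The flowdown above can be pictured as nested functions. This minimal sketch assumes a made-up system in which a system-level Y depends on two lower-level y's, and each y depends on control factors (x's) and a shared noise factor (n). All functions and coefficients are hypothetical; in practice they come from physics, simulation, or DOE.

```python
# Hypothetical two-level flowdown: Y = f(y1, y2), with each y = g(x's, n).

def y1(x1: float, x2: float, n1: float) -> float:
    """Lower-level metric driven by two control factors and one noise factor."""
    return 2.0 * x1 + 0.5 * x2 - 0.3 * n1

def y2(x3: float, n1: float) -> float:
    """Second lower-level metric; it shares the noise factor n1."""
    return x3 ** 2 + 0.1 * n1

def system_Y(x1: float, x2: float, x3: float, n1: float) -> float:
    """System-level CTS Y built from the lower-level y's."""
    return y1(x1, x2, n1) + 0.5 * y2(x3, n1)

# Evaluate the nominal design without noise and with a noise excursion.
nominal = system_Y(1.0, 2.0, 3.0, n1=0.0)
noisy = system_Y(1.0, 2.0, 3.0, n1=1.0)
print(nominal, noisy)
```

Comparing `nominal` and `noisy` shows how a noise factor propagates up through the y's to the customer-level Y, which is what the robustness characterization step examines.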

Specifically, the inputs for this discussion are:

- Kano diagram
- CTS Ys, with targets and ranges
- Customer satisfaction scorecard
- Functional boundaries and interfaces from system design specification(s) and/or verification analysis
- Existing hardware FMEA data

Once these inputs have been identified, developed, and understood, the formal decomposition of Y to y to $y_1$ begins, together with the relationship of X to x to $x_1$ and of the n's to the Ys. How is this done? By making sure that all the active individuals participate and all have ownership of the project as well as the technical knowledge. Specifically, in this stage the owners of the DFSS project look for correlations between what they expect and what they have found in their research. Thus the formal search for the "transformation function," preferably the "ideal" function, gets underway. Some of the steps to identify both the decomposition of the Ys and its relationship to the x's are (the order does not matter, since in most cases these items are worked on simultaneously):

- Identify functions associated with CTSs
- Identify control and noise factors
- Create function structure or other model for identified functions
- Select Ys that measure the intended function
- Create general or explicit transfer function
- Peer review

The deliverables of this activity are:

- Function diagram(s)
- Mapping of Y → functions → critical functions → y's
- P-diagram, including critical:
  - Control factors (x's)
  - Technical metrics (y's)
  - Noise factors (n's)
- Transfer function
- Scorecard with target and range for y's and x's
- Plan for optimization and verification (R&R checklist)

At this point, one of the most important steps must be completed before the DFSS team officially moves into the next stage, optimize. This step is the evaluation process: a thorough question-and-answer session that focuses on what has transpired in this stage. It is important to ask questions such as: Have all the technical metrics (y's) been accounted for? Are all the CTQ x's measurable and correlated to the customer's Ys? Are all functionalities accounted for? And so on. Typical tools that form the basis of the evaluation are:

- Function structures
- P-diagram
- Robustness/reliability checklist
- Modeling using design of experiments (DOE)
- TRIZ
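Modeling with DOE is one way to obtain a transfer function empirically. As a minimal sketch, assume a two-level full factorial in two control factors, coded -1/+1, with one response per run (the responses are invented). Because the coded columns are orthogonal, each least-squares coefficient is sum(column × y)/N, which equals half the corresponding effect. This gives the first-order model y ≈ b0 + b1·x1 + b2·x2 + b12·x1·x2.

```python
# Hypothetical 2^2 full factorial: coded levels -1/+1, one response per run.
runs = [
    # (x1, x2, y)
    (-1, -1, 20.0),
    (+1, -1, 30.0),
    (-1, +1, 24.0),
    (+1, +1, 38.0),
]

def coefficient(column):
    """Least-squares coefficient for an orthogonal +/-1 column (= effect / 2)."""
    return sum(c * y for c, (_, _, y) in zip(column, runs)) / len(runs)

x1 = [r[0] for r in runs]
x2 = [r[1] for r in runs]
x1x2 = [a * b for a, b in zip(x1, x2)]

b0 = sum(r[2] for r in runs) / len(runs)  # grand mean
b1, b2, b12 = coefficient(x1), coefficient(x2), coefficient(x1x2)

def transfer(x1_coded: float, x2_coded: float) -> float:
    """Fitted transfer function in coded units."""
    return b0 + b1 * x1_coded + b2 * x2_coded + b12 * x1_coded * x2_coded

print(b0, b1, b2, b12)
```

A saturated design like this reproduces the four runs exactly, so it cannot estimate error. Replicates or center points would be needed before trusting the model for optimization.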

When everyone is satisfied, the team officially moves into the optimize (O) stage. In this stage, we make sure that the system is designed with robustness in mind, which means the focus is on:

- Minimizing product sensitivity to manufacturing and usage conditions
- Minimizing process sensitivity to product and manufacturing variations

In essence, here we design for producibility. The process for this development consists of the following steps:

- Understand capability and stability of present processes.
- Understand the high time-in-service robustness of the present product.
- Minimize product sensitivity to noise, as required.
- Minimize process sensitivity to product and manufacturing variations, as required.

The inputs for this process are based on the following processes and information:

- Present process capability ($\mu$ target and $\sigma$)
- P-diagram, with critical y's, x's, n's
- Transfer function (as developed to date)
- Manufacturing and assembly process flow diagrams, maps
- Gage R&R capability studies
- PFMEA and DFMEA data
- Verification plans: robustness and reliability checklist
- Noise management strategy

Once these inputs have been identified, developed, and understood, the formal optimization begins. Remember, there is a big difference between maximization and optimization. We are interested in optimization because we want to balance our inputs so that we are still ahead after the trade-off analysis. That means we want to decrease variability and satisfy the customer without adding extra cost. How is this done? By making sure that all the active individuals participate and all have ownership of the project as well as the technical knowledge. Specifically, in this stage the owners of the DFSS project adjust both variability and sensitivity, using optimization and modeling equations and calculations to optimize both product and process. The central formula is:

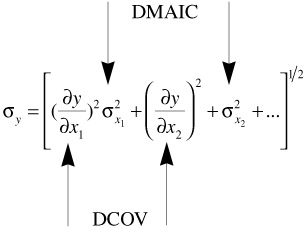
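The figure itself is not reproduced in this text. Assuming it shows the usual first-order (Taylor-series) propagation-of-variance formula for independent inputs, which is the standard way to express the interplay of sensitivity and variability discussed next, it can be written as follows (noise factors $n_j$ enter as additional terms of the same form):

$$
\sigma_y^2 \approx \sum_{i=1}^{k} \left( \frac{\partial y}{\partial x_i} \right)^2 \sigma_{x_i}^2
$$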

Whereas the focus of the DMAIC model is to reduce $\sigma_x$ (variability), the focus of DCOV is to reduce $\partial y / \partial x$ (sensitivity). This is very important, and it is why we use the partial derivatives of y with respect to the x's to define the Ys. Of course, if the transformation function is linear, the sensitivities are constants, so the only thing we can do is control variability. In most cases, however, we deal with polynomials (nonlinear functions), and that is why DOE, and especially parameter design, is very important in any DFSS endeavor.
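To see why a nonlinear transfer function matters, consider a hypothetical y = f(x) whose slope changes with x. For the same input spread σ_x, the first-order estimate σ_y ≈ |dy/dx|·σ_x depends on the chosen nominal. Placing the nominal on a flat part of the curve therefore reduces output variability without tightening the input. The function, the candidate nominals, and σ_x are illustrative assumptions, and the derivative is estimated with a central finite difference.

```python
import math

def f(x: float) -> float:
    """Hypothetical nonlinear transfer function that saturates as x grows."""
    return 10.0 * (1.0 - math.exp(-x))

def sensitivity(func, x0: float, h: float = 1e-5) -> float:
    """Central finite-difference estimate of dy/dx at x0."""
    return (func(x0 + h) - func(x0 - h)) / (2.0 * h)

sigma_x = 0.1  # same input variability at every candidate nominal

for x0 in (0.5, 1.5, 3.0):
    s = sensitivity(f, x0)
    sigma_y = abs(s) * sigma_x  # first-order propagation of variance
    print(f"x0={x0:.1f}  dy/dx={s:.3f}  sigma_y~{sigma_y:.3f}  y={f(x0):.2f}")
```

Moving the nominal also moves the mean of y. That is why parameter design usually pairs a sensitivity-reducing factor with a separate adjustment factor that brings y back to target.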


Some of the steps in this optimizing process are (the order does not matter, since in most cases these items are worked on simultaneously):

- Minimize variability in y by selecting optimal nominals for x's.
- Optimize process to achieve appropriate $\sigma_x$.
- Ensure ease of assembly and manufacturability (in both steps above).
- Eliminate specific failure modes.
- Update control plan.
- Review and develop consensus.
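The first step above is often carried out with Taguchi's two-step optimization. This minimal sketch assumes a nominal-the-best characteristic and invented replicate data for three hypothetical control-factor settings. Step one picks the setting with the largest signal-to-noise ratio, S/N = 10·log10(ȳ²/s²). Step two, not modeled here, would move the mean to target with an adjustment factor that leaves the S/N ratio essentially unchanged.

```python
import math
import statistics

def sn_nominal_the_best(values):
    """Taguchi nominal-the-best S/N ratio in dB: 10*log10(mean^2 / variance)."""
    mean = statistics.mean(values)
    var = statistics.variance(values)  # sample variance (n - 1 divisor)
    return 10.0 * math.log10(mean ** 2 / var)

# Hypothetical replicates of y under three control-factor settings,
# each replicate taken under a different noise condition.
settings = {
    "A": [9.6, 10.5, 9.9, 10.4],
    "B": [11.8, 12.1, 11.9, 12.2],
    "C": [8.0, 9.9, 8.7, 9.4],
}

sn = {name: sn_nominal_the_best(ys) for name, ys in settings.items()}
best = max(sn, key=sn.get)  # step 1: the most robust setting
print({k: round(v, 1) for k, v in sn.items()}, "best:", best)
```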

The deliverables of this stage are:

- Transfer function
- Scorecard with estimate of $\sigma_y$
- Target nominal values identified for x's
- Variability metric for CTS Y or related function, e.g., range, standard deviation, S/N ratio improvement
- Tolerances specified for important characteristics
- Short-term capability, "z" score
- Long-term capability
- Updated verification plans: robustness and reliability checklist
- Updated control plan
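Several of these deliverables (short-term capability, the "z" score, long-term capability) reduce to a few standard formulas. This sketch computes Z to each specification limit, Cp, and Cpk from a process mean and standard deviation. The specification limits and sample statistics are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Capability:
    z_usl: float  # (USL - mean) / sigma
    z_lsl: float  # (mean - LSL) / sigma
    cp: float     # (USL - LSL) / (6 * sigma): potential capability
    cpk: float    # min(z_usl, z_lsl) / 3: also accounts for centering

def capability(mean: float, sigma: float, lsl: float, usl: float) -> Capability:
    """Standard capability indices for a two-sided specification."""
    z_usl = (usl - mean) / sigma
    z_lsl = (mean - lsl) / sigma
    return Capability(
        z_usl=z_usl,
        z_lsl=z_lsl,
        cp=(usl - lsl) / (6.0 * sigma),
        cpk=min(z_usl, z_lsl) / 3.0,
    )

# Hypothetical CTQ: spec 10.0 +/- 0.6, short-term estimate mean 10.1, sigma 0.1
c = capability(mean=10.1, sigma=0.1, lsl=9.4, usl=10.6)
print(c)
```

Using a short-term (within-subgroup) sigma gives the short-term Z. Repeating the calculation with the long-term standard deviation gives the long-term capability listed as a separate deliverable.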

At this point, one of the most important steps must be completed before the DFSS team officially moves into the next stage, testing and verification. This step is the evaluation process: a thorough question-and-answer session that focuses on what has transpired in this stage. It is important to ask questions such as: Have the z scores for all the CTQs been identified? What about their targets and ranges? Is the metric for product variability over time clearly defined? And so on. Typical tools that form the basis of the evaluation are:

- Experimental plan with two-step optimization and confirmation run
- Design FMEA with robustness linkages
- Process FMEA (including noise factor analysis)
- Parameter design
- Robustness assessment
- Simulation software
- Statistical tolerancing
- Tolerance design
- Error prevention: compensation, noise elimination, Poka-Yoke
- Gage R&R studies
- Control plan
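Statistical tolerancing, listed above, is often contrasted with worst-case stacking. This minimal sketch treats a linear stack of independent dimensions and assumes each tolerance is a symmetric ± value quoted at the same sigma level. The part dimensions are hypothetical. Worst case adds the tolerances, while the root-sum-square (RSS) method adds them in quadrature, which is the linear special case of propagation of variance.

```python
import math

# Hypothetical linear stack: (nominal, symmetric tolerance) for each part.
stack = [(25.0, 0.10), (12.5, 0.05), (40.0, 0.15), (8.0, 0.04)]

nominal_total = sum(nom for nom, _ in stack)
worst_case = sum(tol for _, tol in stack)            # every part at its limit
rss = math.sqrt(sum(tol ** 2 for _, tol in stack))  # statistical (RSS) stack

print(f"assembly = {nominal_total:.2f} +/- {worst_case:.3f} (worst case)")
print(f"assembly = {nominal_total:.2f} +/- {rss:.3f} (RSS)")
```

The RSS result is tighter because it assumes the deviations are independent and roughly normal, so they rarely all reach their limits at once. When that assumption is doubtful, the worst-case figure is the safer one.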

After the team is satisfied with the progress thus far, it is ready to address the issues and concerns of the last leg of the model: verification of results (V). In this stage, the team focuses on assessing the performance, reliability, and manufacturability of what has been designed. The process for developing the verification begins by emphasizing two items:

- Assessing the actual performance, reliability, and manufacturing capability
- Demonstrating customer-correlated performance over time

The inputs used to generate this information include, but are not limited to, the following:

- Updated verification plans: robustness and reliability checklist
- Scorecard with predicted values of y and $\sigma_y$, based upon $\mu_x$ and $\sigma_x$
- Historical design verification plan(s) and reliability, if available
- Control plan
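The scorecard input above predicts y and σ_y from μ_x and σ_x. Besides the analytical formula, Monte Carlo simulation is a common way to make this prediction. This sketch assumes a hypothetical transfer function and independent, normally distributed inputs, and it uses only the standard library. A fixed seed makes the run repeatable.

```python
import random
import statistics

def transfer(x1: float, x2: float) -> float:
    """Hypothetical transfer function y = f(x1, x2)."""
    return 3.0 * x1 + x2 ** 2

# Assumed input distributions: (mu_x, sigma_x) for each input.
inputs = {"x1": (2.0, 0.05), "x2": (4.0, 0.10)}

rng = random.Random(42)  # fixed seed so the scorecard numbers are reproducible
samples = [
    transfer(rng.gauss(*inputs["x1"]), rng.gauss(*inputs["x2"]))
    for _ in range(100_000)
]

mu_y = statistics.fmean(samples)
sigma_y = statistics.stdev(samples)
print(f"predicted mu_y = {mu_y:.3f}, sigma_y = {sigma_y:.3f}")
```

For this mildly nonlinear function, the simulated σ_y should land close to the first-order estimate sqrt((3·0.05)² + (2·4·0.10)²) ≈ 0.81. A large disagreement would signal strong nonlinearity in the transfer function.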

Once these inputs have been identified, developed, and understood, the team enters perhaps the most critical phase of the DFSS process. This is where the experience and knowledge of the team members, through synergy, will indeed shine. The team members are expected to come up with physical and analytical performance test(s), as well as key life testing, to verify the correlation between what has been designed and the functionality that the customer is seeking. In other words, the team is actually testing the "ideal function" and the model generating the characteristics that will delight the customer. It is an awesome responsibility indeed, but a doable one. The approach for generating some of the tests is:

- Enhance tests with key noise factors.
- Improve the ability of tests to discriminate between good and bad commodities.
- Apply a test strategy that maximizes resource efficiency.
- Review and develop consensus.

The deliverables are test results, such as:

- Product performance over time
- Weibull, hazard plot, etc.
- Long-term process capabilities
- Completed robustness and reliability checklist with demonstration matrix
- Scorecard with actual values of y and $\sigma_y$
- Lessons learned captured in system design specifications, component design specifications, and the verification design system

Having a test that does this or that, or that conforms to some condition or circumstance, is not in itself the important issue. What is important and essential is being able to assess the performance of what you have designed against the customer's functionalities. In other words: Are all your x's correlated (and if so, to what degree) to the Xs, which in turn correlate to the y's, which in turn correlate to the Y (the real functional definition of the customer)? Have the D, C, and O phases of the model been appropriately assessed at every stage? How reliable is the testing? And so on. Some of the approaches and methodologies used are (the order does not matter, since in most cases these items are worked on simultaneously):

- Reliability/robustness plan
- Design verification plan with key noises
- Correlation: tests to customer usage
- Reliability/robustness demonstration matrix


Pages: 235