Designing Your Cluster


There are a number of issues to consider and decisions to make when you're designing a cluster solution. Keep in mind that a resilient solution is worthless if a poorly designed clustering mechanism causes more downtime than would be expected with a single system.

Why Do You Need a Cluster?

It might be safe to say that the majority of this chapter's readers have already made the decision to install a clustered firewall, and so those readers know why this is a good idea. For readers who are not yet decided or aren't sure why they are installing a cluster, let's look at the reasons why a cluster might be a good option.

The concept of any cluster solution is that the cluster itself appears on the outside as a single system. In the case of a firewall cluster, this system is a secure gateway, possibly providing a VPN end point and other services. There are two key benefits of a cluster that consists of multiple physical hosts: resilience and increased capacity.

Resilience

A cluster of multiple hosts should have the advantage of being able to provide continuous service, irrespective of whether members of the cluster are available or not. Even the best cluster will struggle if every member is unavailable, but as long as one member is running, service should continue if other members have failed or are down for maintenance.

Increased Capacity

According to some pretty simple logic, if we have three active hosts in a cluster, we can push three times as much traffic through it. Things are not quite that simple, however; there can be significant overhead in operation of the cluster technology itself, which tends to increase in proportion to the number of members and could become a bottleneck. Other bottlenecks could be the network bandwidth available on either side of the cluster and the performance of servers protected by the cluster. This concept might appear obvious, but it could be overlooked during the calculations of the incredible throughput theoretically possible if a further five members are added to a cluster!
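The diminishing returns described above can be illustrated with a rough model. The sketch below is not a Check Point formula. It assumes, purely for illustration, that each member loses a fixed fraction of its capacity for every peer in the cluster, and that the result is capped by the slowest of the surrounding links and the protected servers. The function name, the parameters, and the 5 percent overhead figure are all hypothetical.

```python
def cluster_throughput(members, per_member_mbps, overhead_per_peer=0.05,
                       link_mbps=float("inf"), server_mbps=float("inf")):
    """Estimate usable cluster throughput in Mbps under an assumed model.

    Assumption: every member spends `overhead_per_peer` of its capacity
    on cluster housekeeping for each other member in the cluster.
    """
    if members < 1:
        return 0.0
    # Efficiency falls as peers are added and never drops below zero.
    efficiency = max(0.0, 1.0 - overhead_per_peer * (members - 1))
    raw = members * per_member_mbps * efficiency
    # The cluster cannot outrun the networks around it or the servers behind it.
    return min(raw, link_mbps, server_mbps)


if __name__ == "__main__":
    for n in (1, 3, 8):
        print(n, cluster_throughput(n, 100, link_mbps=500))
```

Under these assumed numbers, adding members beyond a certain point buys nothing, because the 500 Mbps link becomes the limit long before the members do.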

High Availability or Load Sharing?

There are two distinct models for clustering solutions: HA and load sharing. Let's take a brief look at each.

Load Sharing

In a load-sharing cluster, all available members are active and passing traffic. This setup provides both resilience and increased capacity due to the distribution of traffic between the members. Some load-sharing solutions can be described as load balancing because there is a degree of intelligence in the distribution of traffic between members. This intelligence might be in the form of a performance rating for each cluster member or even a dynamic rating based on current load.

High Availability

In an HA cluster, only one member is active and routing traffic at any one time. This solution provides resilience but no increased capacity. HA is often chosen because the simpler solution is easier to manage and troubleshoot, and it is sometimes more reliable than a load-sharing solution, which carries additional complexity. That simplicity also often makes HA the cheaper option.
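The difference between the two models comes down to which healthy members pass traffic. The following minimal sketch assumes a hypothetical member list of (name, priority, healthy) tuples, with the lowest priority number preferred in HA mode. It does not reflect how any particular product elects its active member.

```python
def active_members(members, mode):
    """Return the names of members that should pass traffic.

    members: list of (name, priority, healthy) tuples (hypothetical layout).
    mode: "ha" or "load_sharing".
    """
    healthy = [m for m in members if m[2]]
    if mode == "load_sharing":
        # Every healthy member is active.
        return [name for name, _, _ in healthy]
    if mode == "ha":
        # Only the healthy member with the best (lowest) priority is active.
        if not healthy:
            return []
        return [min(healthy, key=lambda m: m[1])[0]]
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    cluster = [("fw1", 1, False), ("fw2", 2, True), ("fw3", 3, True)]
    print(active_members(cluster, "ha"))
    print(active_members(cluster, "load_sharing"))
```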

Clustering and Check Point

Let's now look at design issues that arise in planning Check Point firewall clusters.

Operating System Platform

Depending on the operating system platform, different options are available for clustering solutions, including Check Point solutions and those from Check Point OPSEC partners. Here we look at Check Point's ClusterXL solution, which is available on the usual NG platforms—Windows, Solaris, Linux, and SecurePlatform—with the exception of Nokia IPSO. The IPSO platform offers the IPSO clustering load-balancing solution and VRRP HA, both of which we also cover in this chapter. We do not cover OPSEC partner solutions other than references given toward the end of this chapter.

Clustering and Stateful Inspection

Key to the operation of FireWall-1 is the stateful inspection technology that tracks the state of connections. If a cluster solution is to provide true resilience, a connection should be preserved irrespective of which cluster member its packets are routed through. In order for stateful inspection to deal with connections "moving" between members, a method of sharing state information must be provided. This method is known as state synchronization and is an integral part of FireWall-1 clustering. A dedicated network, known as the sync network or secured network, is used for the state synchronization traffic. Note that it is possible to configure a cluster without state synchronization if no connection resilience is needed.

Desire for Stickiness

Although in theory state synchronization allows each packet of a given connection to pass through any one of the cluster members, it is far more desirable to ensure that each connection "sticks" to a specific cluster member where possible. This is due to timing issues; there is inevitably some delay between one cluster member seeing a packet and that member passing its updated state information to other members. This delay can cause a subsequent packet to be dropped because it appears to be invalid—out of state—to the other members. The problem is particularly likely to be an issue during connection establishment, where a quick exchange of packets must adhere to strict conditions—in the case of TCP connections, the three-way handshake. Fortunately, once a connection is established, it is more resilient to packet loss, and the state is not so strictly defined. This allows connection failover, where connections stuck to failed members can be moved to other cluster members with minimal disruption.

In an HA solution, stickiness is no problem; all connections naturally stick to the one active member. In a load-sharing environment, stickiness requires some intelligence from the clustering solution. It must ensure that there is stickiness, but the members should each have roughly equal numbers of connections stuck to them.
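One way to picture sticky but evenly spread connections is a hash over the connection's endpoints. The sketch below is an assumption for illustration, not the decision function used by ClusterXL or IPSO clustering. It builds a direction-independent key so that both halves of a connection land on the same member, then uses rendezvous (highest-random-weight) hashing so that when a member fails, only the connections stuck to that member move. All names and addresses are hypothetical.

```python
import hashlib


def connection_key(src_ip, src_port, dst_ip, dst_port, proto):
    """Build a key that is identical for both directions of a connection."""
    # Sorting the two endpoints removes the client/server ordering.
    a, b = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    return f"{proto}|{a[0]}:{a[1]}|{b[0]}:{b[1]}"


def pick_member(key, healthy_members):
    """Choose the member a connection sticks to, using rendezvous hashing."""
    if not healthy_members:
        raise RuntimeError("no cluster member is available")

    def score(member):
        digest = hashlib.sha256(f"{member}|{key}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # The member with the highest score for this key owns the connection.
    return max(healthy_members, key=score)


if __name__ == "__main__":
    members = ["fw1", "fw2", "fw3"]
    key = connection_key("10.0.0.5", 40000, "192.0.2.10", 443, "tcp")
    owner = pick_member(key, members)
    survivors = [m for m in members if m != owner]
    print("sticks to", owner, "and fails over to", pick_member(key, survivors))
```

Because each member's score for a given connection is independent of the other members, removing a member that does not own the connection leaves the assignment unchanged.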

Location of Management Station

If you want a clustering solution, you must install an NG distributed management architecture, or in other words, your Check Point management station (also known as the SmartCenter Server) must be installed on a dedicated host, not on a cluster member.

Beware of upgrading FireWall-1 4.1 HA configurations that perform state synchronization but were not part of a cluster object. In version 4.1 it was possible to make one of the state-synchronized firewalls a management station as well, but you cannot do this in FireWall-1 NG.

You must make a decision regarding which network the management station resides on. It is clearly desirable that each cluster member is reachable from the management station, irrespective of whether that member, and other members, are currently active in the cluster. Conversely, irrespective of which cluster members are active, the management station requires normal network connectivity to allow remote management, access to DNS servers, and so on. This decision will depend largely on the type of clustering solution implemented. Let's now look at two options for location, with examples of a simplified network topology.

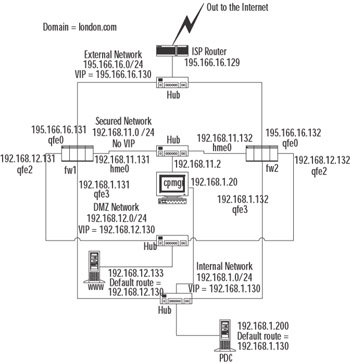

A Management Station on a Cluster-Secured Network

The traditional configuration for many HA solutions has been to place the management station on a dedicated, "secured" network (sometimes shared with cluster control and state synchronization traffic). The network topology is shown in Figure 21.1. Each cluster member is reachable over the secured network, whether the members are in Active or Standby mode. This configuration will be a requirement if members running in Standby mode are only contactable on this secured network interface; other interfaces are down while the member is in standby.

Figure 21.1: A Management Station on a Secured Network

| Note | This configuration is required when Check Point ClusterXL HA Legacy mode is implemented. |

A limitation of this configuration is that the management station does not have reliable connectivity with any other networks, because its default gateway must be set to one member's secured network IP address. The management station therefore relies on that member being active in order to "see" the outside world. To work around this problem, the management station can have a second interface that connects to an internal network. The management station's default gateway can then be configured as the gateway on the internal network, possibly the internal IP address of the cluster itself. Alternatively, the second interface could be external facing, with a valid Internet address. This solution might be desirable if the management station manages remote firewall gateways. If the second interface is external facing, it must be firewalled in some way. A possible solution is to install a FireWall-1 enforcement module on the management station (at the time of install) and license it with a SecureServer (nonrouting module) license.

If you run a backup management station, it also needs to be on the secured network.

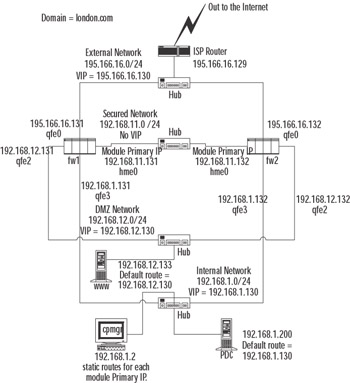

Management Station on Internal Network

The complications of placing the management station on a dedicated network can be avoided if the cluster solution allows members to be reachable on all interfaces, whether the member is active or not. This is achieved where members each have a unique IP address in addition to an IP address shared over the cluster.

Happily, all the other solutions we discuss—ClusterXL HA New mode, load sharing, IPSO clusters, and IPSO VRRP—behave in this way. These solutions can support a management station located behind any interface. Figure 21.2 shows a typical network topology, with the management station located internally. If the management station also manages a cluster at a remote site, it can do so by connecting via the Internet to the external interfaces of that remote cluster's members.

Figure 21.2: A Management Station on an Internal ("Nonsecure") Network

There are some routing factors to consider in using this topology. Hosts on the internal network will often need to make connections to individual cluster members, for Check Point policy installs and for administrative connections such as FTP or SSH. Where these connections are made to the "nearest" IP address, this is no problem. However, if an IP address other than the "nearest" is specified (for example, the external IP address of the member), the packet will probably be routed via the default route, which is the cluster virtual IP address. There is a good chance that the packet will then pass through a different member from the one we want to connect to, resulting in asymmetric routing at best and possibly no connection at all. To avoid this situation, add static host routes on adjacent hosts and routers so that packets destined for each member's unique addresses are routed via that member's nearest unique IP address.
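The static host routes described above follow a simple pattern: for every unique address a member holds on some other network, the next hop is that same member's unique address on the local network. The sketch below derives such a route list as plain data rather than as commands for any particular router. The member names, network labels, and addresses are hypothetical.

```python
def host_routes(members, local_net):
    """Return (destination, next_hop) host routes for hosts on `local_net`.

    members: {member_name: {network_label: unique_ip}} (hypothetical layout).
    """
    routes = []
    for addrs in members.values():
        # The member's unique address on our own network is the "nearest" one.
        next_hop = addrs[local_net]
        for net, ip in addrs.items():
            if net != local_net:
                # Reach the member's far-side address through the member itself,
                # not through the cluster virtual IP address.
                routes.append((f"{ip}/32", next_hop))
    return routes


if __name__ == "__main__":
    cluster = {
        "fw1": {"internal": "10.0.0.1", "external": "192.0.2.1"},
        "fw2": {"internal": "10.0.0.2", "external": "192.0.2.2"},
    }
    for destination, next_hop in host_routes(cluster, "internal"):
        print(destination, "via", next_hop)
```

The same function run with the external network as `local_net` would give the routes needed on an adjacent external router.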

Connecting the Cluster to Your Network: Hubs or Switches?

The nature of clustering, with several devices trying to act as one, tends to throw up some unusual network traffic, which invariably upsets some other network devices in one way or another. The most vulnerable devices are network switches connected to cluster interfaces, because a switch by its nature tracks which devices (identified by MAC address) are connected to each of its ports. If we have a number of cluster members, each connected to a different switch port but each pretending to be the same device, it is no wonder that an unsuspecting switch might struggle. In addition, cluster solutions often use multicast IP and MAC addresses, something else that a switch might need advance warning of.

In summary, most switches can be persuaded to cope with cluster configurations, but some can't. More important, the cluster solution provider is likely to have a list of supported switch hardware—and if your switch is not on that list, you might find yourself in trouble. Always check to find out the supported switches for your chosen configuration.

Hubs, on the other hand, really don't care about any strange protocols that the cluster might be talking, so they make life a lot simpler. The downside is throughput; particularly in a load-sharing configuration, hubs can become a bottleneck. Even so, although switches are probably the best solution, a handful of spare hubs nearby are useful for troubleshooting. Do remember to disable full-duplex settings on network cards before dropping in a hub, though.

FireWall-1 Features, Single Gateways versus Clusters: The Same, But Different

The concept behind the cluster is that it replaces a single gateway but provides resilience and possibly increased capacity. In reality, some FireWall-1 features will behave differently or require different configuration in a cluster environment.

Network Address Translation

When you're configuring NAT, you always need to ensure that packets on a NATed connection are correctly routed to the firewall from adjacent routers. A typical single gateway configuration, with Static NAT performed on an internal server, has the firewall performing proxy ARP for the legal (virtual) IP address, advertising the gateway's own external MAC address. In a cluster environment, it is vital that these packets are routed to the cluster MAC address, not the MAC address of a cluster member. Similarly, if a static host route for the legal IP address is added on adjacent routers (for example, the ISP router), the destination gateway for the route must be the cluster IP address, not a cluster member IP address.
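A quick sanity check of this rule can be expressed as a small validation over the routes configured on adjacent routers. The sketch below assumes a hypothetical mapping of legal (NATed) addresses to next hops and flags any route that points at a member address instead of the cluster address. The addresses are documentation examples, not values from a real configuration.

```python
def check_nat_routes(routes, cluster_ip, member_ips):
    """Return a list of problems found in static routes for NATed addresses.

    routes: {legal_ip: next_hop} as configured on an adjacent router
            (hypothetical layout).
    """
    problems = []
    for legal_ip, next_hop in routes.items():
        if next_hop in member_ips:
            # Traffic would be lost whenever this member is not active.
            problems.append(
                f"{legal_ip}: next hop {next_hop} is a member address, "
                f"use the cluster address {cluster_ip}"
            )
        elif next_hop != cluster_ip:
            problems.append(
                f"{legal_ip}: next hop {next_hop} is not the cluster address"
            )
    return problems


if __name__ == "__main__":
    routes = {"192.0.2.50": "192.0.2.1", "192.0.2.51": "192.0.2.254"}
    for problem in check_nat_routes(routes, "192.0.2.254",
                                    {"192.0.2.1", "192.0.2.2"}):
        print(problem)
```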

Security Servers

FireWall-1 uses security servers for user authentication and content scanning (in other words, where resources are used in rules). These servers run at the application level and are effectively transparent proxy servers (or mail relays, in the case of SMTP). These applications are not based on stateful inspection, and their state is not synchronized. Security servers can still be used in a clustered solution, but the clustering solution must maintain connection stickiness, and connections will not survive member failure. Whether this limitation is a problem will depend on the applications involved:

- Relay-to-relay SMTP: Deals with connection failure cleanly, with the sending relay retrying the connection later.

- Client-to-server SMTP: Shows the user an error if the mail client was in the process of sending a message at the time of failure, but the chances of this are fairly slim.

- General HTTP Web browsing: Users are accustomed to the odd connection failure on the Internet from time to time, and one more when a cluster member fails should not be an issue.

- Authenticated HTTP: Users (using user authentication and proxying the cluster) throw up an additional complication. While each individual HTTP connection will stick to its cluster member, the browsing session consists of multiple HTTP connections, and each may stick to a different member. As the authentication takes place at the application level, users will need to authenticate against each member they connect to. Fortunately, Web browsers cache the proxy password that the user supplies, so this occurs transparently, unless a one-time password authentication method is in use (for example, RSA SecurID). The nature of one-time passwords means that a different password is required for each cluster member, and in the case of SecurID, this requires a delay of a minute between each attempt! In a load-sharing solution, this is unworkable.

- File downloads over FTP (or HTTP): Failures here are more likely to cause upset, particularly failure of long downloads that were 95-percent complete. A possible solution for FTP downloads is using client authentication (and not configuring a proxy for FTP).

| Note | If the security server is used as a traditional proxy server or mail relay (in other words, connections are made directly to the gateway), the cluster IP address should be used as the target proxy address or mail relay address. |

Remote Authentication Servers

Where the gateways must connect to a remote server to perform authentication (for example, RADIUS servers or RSA ACE servers), the remote server will often verify the request based on the source IP address of the connection. In some cluster environments, implicit address translation will occur so that this connection appears to have come from the virtual cluster address. The remote server might be happy to treat the cluster as a single entity, but this will often cause problems; for example, authentication credentials might include one-time passwords that are maintained locally by the cluster members. In this scenario, we need each cluster member to communicate with the remote server as a separate client. In ClusterXL solutions, this can usually be achieved by creating explicit address translation rules that specify that connections to remote authentication servers are translated to the real member addresses, as defined in the cluster member object. An example of NAT rules to avoid this problem is shown in Figure 21.3.

Figure 21.3: NAT Rules That Ensure No NAT for Authenticating Servers

External VPN Partner Configuration

When participating in VPNs, the cluster appears as a single virtual gateway. External gateways should be configured appropriately, with references to the cluster address only, not member addresses.
